CPS Management Cluster and Its Performance

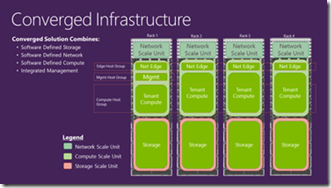

By now, we hope you’ve had a chance to read about what Cloud Platform System (CPS) is, how the fabric monitoring capability in CPS works, how we use CPS inside Microsoft, and how we're building and testing Microsoft workloads on this platform. In today’s post, we’d like to take you on a deep-dive tour of the CPS management cluster.

Design Tenets

When we started designing CPS, three of our key design principles were the solution’s scale, performance, and availability. Our scale goal was to support up to 8,000 VMs per stamp; our performance goal was to meet our customers’ requirements with cost-efficient hardware; and our availability goal was to keep both the management and tenant systems highly available, even in the face of common hardware failures. For the management cluster, we designed the right mix of hardware and software architecture to do just that. Let’s walk through each of these design points.

Physical Composition

First, let’s look at the physical composition of the management cluster. The management cluster is located in the first rack and is a six-node Hyper-V cluster. These six nodes have the same hardware configuration as all of the compute nodes in CPS. This is by design: it simplifies deployment and failed-node replacement, and it reduces the customer’s cost of ownership. We chose the Dell PowerEdge C6220ii model for the compute nodes, each of which has the following hardware configuration:

- Dual-socket Intel Ivy Bridge (E5-2650 v2 @ 2.6 GHz)

- 256 GB memory

- 2 x 10 GbE Mellanox NICs (LBFO Team, NVGRE offload)

- 2 x 10 GbE Chelsio (iWARP/RDMA)

- 1 local SSD @ 200 GB (boot/paging)

Availability Considerations in Hardware Architecture

In total, this six-node management cluster has a combined capacity of 1.5 TB of memory and 96 cores. What’s important to note is that N+2 availability is built into this design, which makes outages of the management infrastructure services very unlikely.

- With six nodes, 1.5 TB of memory, and 96 cores in total, the cluster has enough capacity to comfortably sustain the failure of up to two physical nodes. Even in the unlikely event that you lose two physical nodes in the management cluster, CPS can continue to operate at expected performance, without any reduced capability.

- The CPS hardware configuration can sustain the failure of two compute nodes without any performance degradation under the maximum load of 8,000 VMs. For storage, it can sustain the failure of any two disks in different enclosures; in addition, thanks to enclosure awareness, it can sustain the failure of all the disks in a single enclosure, or of the enclosure itself.

- With the redundant networking and storage design, the solution can also survive a failure of a switch, a network cable, or a single JBOD unit.
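The N+2 arithmetic above can be checked with a few lines of Python. This is a minimal sketch: the per-node figures come from the hardware list (two 8-core E5-2650 v2 sockets and 256 GB of memory per node), while the `Node` type, the function names, and any workload demand you pass in are hypothetical and not part of CPS.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    cores: int
    memory_gb: int


# Per-node figures from the hardware list: dual-socket E5-2650 v2
# (8 cores per socket) and 256 GB of memory.
MGMT_NODE = Node(cores=2 * 8, memory_gb=256)
NODE_COUNT = 6


def surviving_capacity(node: Node, count: int, failed: int) -> Node:
    """Aggregate physical cores and memory left after `failed` nodes go down."""
    if not 0 <= failed <= count:
        raise ValueError("failed must be between 0 and count")
    remaining = count - failed
    return Node(cores=node.cores * remaining, memory_gb=node.memory_gb * remaining)


def fits_after_failures(demand: Node, failed: int = 2) -> bool:
    """True if a hypothetical management workload fits on the surviving nodes.

    This is a simple aggregate comparison: it ignores per-VM placement,
    CPU oversubscription, and host reserve, so it is a necessary check only.
    """
    left = surviving_capacity(MGMT_NODE, NODE_COUNT, failed)
    return demand.cores <= left.cores and demand.memory_gb <= left.memory_gb


if __name__ == "__main__":
    print(surviving_capacity(MGMT_NODE, NODE_COUNT, failed=0))  # Node(cores=96, memory_gb=1536)
    print(surviving_capacity(MGMT_NODE, NODE_COUNT, failed=2))  # Node(cores=64, memory_gb=1024)
```

With two of the six nodes down, 64 cores and 1,024 GB of memory remain, so whatever the management VMs need must fit within that envelope for the N+2 claim to hold.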

Software Components

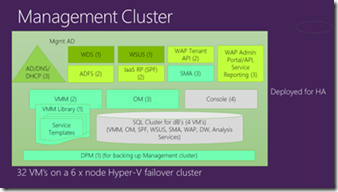

Next, let’s look at the software components inside the management cluster. CPS is built with Windows Server 2012 R2, System Center 2012 R2, and Windows Azure Pack technologies. At a high level, the diagram above shows what the software stack looks like inside the management cluster.


We deploy all of the components needed for fabric management (compute, storage, and networking resources), automation (runbook/workflow automation), data protection (backup and restore), user self-service (service admin and tenant portals), metering (usage collection and reporting), and more. This includes the System Center stack along with Windows Azure Pack. We also provide out-of-the-box integration with the Azure Operational Insights service, so CPS customers can take advantage of its log insights, capacity planning, and other capabilities. When customers order more than one CPS stamp, a disaster recovery service is also included in the solution at no additional cost, using the Azure Site Recovery service to manage the primary and recovery sites. Last but not least, CPS comes with a set of built-in, pre-configured, highly available infrastructure services (AD, DNS, DHCP, WSUS, WDS, etc.).

Availability Considerations in Software Architecture

There are two levels of protection for management service availability in CPS.

- VM-level protection:

  - All management components are deployed on highly available VMs, which protects them from hardware failure. The only exception is the core infrastructure servers hosting AD/DNS/DHCP, which achieve high availability at the application level by running multiple domain controllers and DHCP servers.

  - Any hardware or VM container-level failure prompts the Hyper-V cluster to bring the highly available resources online from shared storage on a different node and start the VMs there.

- App-level clustering / load balancing:

  - The VMM servers are deployed in a highly available (HA) VMM configuration, in which critical VMM services are also monitored by the cluster service.

  - There are three OM server VMs, on which the three management servers are configured in active-active mode, so losing one of the three servers doesn’t bring down the monitoring infrastructure.

  - The SPF, SMA, and WAP components (AD FS, Tenant API, Admin API) are each provisioned with two or more instances behind a load balancer, so they are resilient to the loss of an instance and can share the load placed on the system.

  - All databases of the management components run in guest-clustered SQL Server failover cluster instances.

  - AD/DHCP/DNS services are provisioned in three distinct VMs deployed on three different physical hosts. They are configured with app-level clustering, so these core infrastructure services can also survive the failure of up to two physical nodes.
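Why three VMs on three different hosts survive two host failures can be checked exhaustively. The sketch below is a hypothetical illustration, not how CPS or Hyper-V places VMs: it enumerates every combination of up to `max_failures` failed hosts and confirms that at least one VM of the role is still on a healthy host. Host and VM names are made up.

```python
from itertools import combinations


def survives_all_failures(placement: dict[str, str], hosts: list[str], max_failures: int) -> bool:
    """Return True if, for every set of up to `max_failures` failed hosts,
    at least one VM of the role is still on a healthy host."""
    for k in range(1, max_failures + 1):
        for failed in combinations(hosts, k):
            down = set(failed)
            # The role is lost only if every one of its VMs sits on a failed host.
            if all(host in down for host in placement.values()):
                return False
    return True


# Hypothetical host and VM names, for illustration only.
HOSTS = [f"mgmt-node-{i}" for i in range(1, 7)]
SPREAD = {"infra-vm-1": "mgmt-node-1", "infra-vm-2": "mgmt-node-2", "infra-vm-3": "mgmt-node-3"}
PACKED = {"infra-vm-1": "mgmt-node-1", "infra-vm-2": "mgmt-node-1", "infra-vm-3": "mgmt-node-2"}

if __name__ == "__main__":
    print(survives_all_failures(SPREAD, HOSTS, max_failures=2))  # True
    print(survives_all_failures(PACKED, HOSTS, max_failures=2))  # False
```

With one VM per host, any two failures leave a survivor; packing two VMs onto the same host breaks that guarantee, which is why placement on distinct physical hosts matters.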

Testing CPS Performance

Lastly, let’s take a look at the performance of the management cluster and its software components.

We measured CPS’ performance characteristics along three dimensions:

- Availability of management services

- Success rate of operations (with fault injections)

- Response time for successful operations
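To make the three measurement vectors concrete, here is a minimal sketch of how they could be computed from test logs. The `OperationResult` record, the health-probe list, and the use of the median are assumptions for illustration; the post does not describe the actual log format or which response-time statistic was reported.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class OperationResult:
    succeeded: bool
    response_time_s: float


def availability(probes: list[bool]) -> float:
    """Fraction of health probes for which a management service responded."""
    return sum(probes) / len(probes)


def success_rate(results: list[OperationResult]) -> float:
    """Fraction of operations that succeeded, including those run during injected faults."""
    return sum(r.succeeded for r in results) / len(results)


def median_response_time(results: list[OperationResult]) -> float:
    """Median response time, computed over successful operations only."""
    return median(r.response_time_s for r in results if r.succeeded)


if __name__ == "__main__":
    results = [
        OperationResult(True, 1.0),
        OperationResult(True, 3.0),
        OperationResult(False, 30.0),  # a failed operation is excluded from response time
        OperationResult(True, 2.0),
    ]
    print(success_rate(results))                # 0.75
    print(median_response_time(results))        # 2.0
    print(availability([True] * 99 + [False]))  # 0.99
```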

How do we test?

From a management service availability point of view, as described in the previous section, CPS is designed to recover from hardware failures and from planned or unplanned downtime.

“How do we test CPS?” you may ask. Here is a high-level flow describing our test approach in our labs and at our early beta customer sites:

How do we measure?

There are four steps that define what to test and how to measure:

- Step 1. We studied Windows Azure IaaS platform data to understand user patterns on the IaaS platform. We defined our test mix as 80% “Get” operations and 20% “Set” operations, and assumed that roughly 20% of the total user base is active at any given point in time.

- Step 2. To simulate customer environments, we conducted extensive research across our Technology Adoption Program (TAP) customers and our internal customers (the Nebula team is one of them) on exactly which VM operations they perform daily, weekly, and monthly. Based on that customer data, we built a model with the proportional “Get” and “Set” operations. We also condensed the tests so that we can simulate a week’s worth of customer operations in under a day (one day of test load = one week of customer operations load).

- Step 3. After stabilizing the system at the desired load, we introduce faults into the system (software and hardware faults in both the management cluster and the compute cluster) at a rate of one fault every two hours. This is many times higher than the fault rate we typically hear about from our customers.

- Step 4. We then run our test load against the CPS system and measure the job completion rate and success rate.

Note that our fault injection engine injects both planned faults (for example, graceful rolling patching and update activities) and unplanned faults (for example, powering down a node or killing a core service process). Thanks to CPS’ highly resilient hardware and software architecture, our test results have shown that the system performs equally well under both planned and unplanned faults.
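A fault schedule matching the rate in Step 3 might look like the following sketch. It is hypothetical: the action strings paraphrase the examples in the note above, and the random choice between planned and unplanned faults is an assumption, since the post does not say how the fault injection engine picks faults.

```python
import random
from dataclasses import dataclass

# Example actions paraphrased from the text; the engine's real fault catalog is not described.
PLANNED = ["graceful rolling patch of a node", "update activity on a node"]
UNPLANNED = ["power down a node", "kill a core service process"]


@dataclass(frozen=True)
class Fault:
    at_hour: int
    kind: str    # "planned" or "unplanned"
    action: str


def fault_schedule(duration_h: int, interval_h: int, rng: random.Random) -> list[Fault]:
    """One fault every `interval_h` hours, each drawn at random from the planned or unplanned pool."""
    faults = []
    for hour in range(interval_h, duration_h + 1, interval_h):
        kind = rng.choice(["planned", "unplanned"])
        pool = PLANNED if kind == "planned" else UNPLANNED
        faults.append(Fault(at_hour=hour, kind=kind, action=rng.choice(pool)))
    return faults


if __name__ == "__main__":
    schedule = fault_schedule(duration_h=24, interval_h=2, rng=random.Random(7))
    print(len(schedule))  # 12
    for fault in schedule[:3]:
        print(fault)
```

Over a 24-hour test day this yields 12 faults; because one test day represents a week of customer operations, that amounts to 12 faults per simulated week.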

CPS Supported Scale

Finally, the maximum scale that we’ve tested, and that CPS supports, is as follows:

- 2000 VMs with 1000 tenant users for a single-rack CPS system

- 8000 VMs with 1000 tenant users for a four-rack CPS system

Note: the VM size used for these figures is two vCPUs, 1.75 GB of memory, and a 50 GB disk.
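The scale figures translate into a nominal aggregate footprint with simple multiplication. This sketch assumes every VM uses the stated size and simply sums configured resources; it says nothing about how CPS maps them onto physical hardware (oversubscription, thin provisioning, and storage resiliency overhead are all ignored). The `Resources` type and `nominal_footprint` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    vcpus: int
    memory_gb: float
    disk_gb: int


# VM size stated for the supported-scale figures.
TEST_VM = Resources(vcpus=2, memory_gb=1.75, disk_gb=50)


def nominal_footprint(vm_count: int, size: Resources = TEST_VM) -> Resources:
    """Sum of configured VM resources, with no oversubscription or resiliency overhead."""
    return Resources(
        vcpus=vm_count * size.vcpus,
        memory_gb=vm_count * size.memory_gb,
        disk_gb=vm_count * size.disk_gb,
    )


if __name__ == "__main__":
    print(nominal_footprint(2000))  # Resources(vcpus=4000, memory_gb=3500.0, disk_gb=100000)
    print(nominal_footprint(8000))  # Resources(vcpus=16000, memory_gb=14000.0, disk_gb=400000)
```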

In summary, CPS is truly a solid bundle of “goodness”: we designed each component to work the way it should, drawing on the experience and best practices we learned from operating Azure. We’ve also gone to extensive lengths to ensure its availability, performance, and reliability. The result of all these efforts is a worry-free, instant-on cloud system that can immediately deliver a fully packaged private cloud solution to your datacenter.

Thanks for reading,

CPS Engineering Team

References

For people who are unfamiliar with System Center and the WAP software suite, here are the full product names (with links to documentation on Microsoft TechNet) for the acronyms in the article:

- VMM – System Center Virtual Machine Manager

- OM – System Center Operations Manager

- DPM – System Center Data Protection Manager

- IaaS RP SPF – System Center Service Provider Foundation as the Infrastructure-as-a-Service resource provider to Windows Azure Pack

- SMA – System Center Service Management Automation

- WAP – Windows Azure Pack

The management cluster also provides several essential services via Windows Server 2012 R2 roles:

- AD DS – Active Directory Domain Services

- AD FS – Active Directory Federation Services

- DHCP – Dynamic Host Configuration Protocol Server

- DNS – Domain Name System Server

- WSUS – Windows Server Update Services

- WDS – Windows Deployment Services

A four-VM SQL Server 2012 cluster serves as the database tier for the management components.