Author: John Hoang

Technical Reviewers: Dimitri Furman, Murshed Zaman, Sanjay Mishra

Overview

This article is one of several new blogs from the AzureCAT team discussing common customer implementations and proven architecture patterns using SQL DW. In this blog, I will discuss the patterns used by Independent Software Vendors (ISVs) on SQL DW. Although the focus is on ISV workloads, most of these characteristics also apply to common workloads using Azure SQL DW.

Since its inception, SQL DW has been very popular with ISVs. In addition to the usual advantages of a managed cloud service, such as quick startup, no infrastructure maintenance, deployment in multiple data centers worldwide, and automatic data protection, the service allows ISVs to scale compute resources on demand, store up to a petabyte of data, and pause and resume compute resources to save cost. As with any technology, to get the best performance and experience from the product, you need to read the user manual first to understand the core principles. You can start with our SQL Data Warehouse Documentation and the highly recommended article Best practices for Azure SQL Data Warehouse.

In this article, I will start with the main characteristics of successful common ISV patterns, then discuss some common considerations and workarounds, and finally walk you through three common patterns from our production customers.

Common Pattern Characteristics

Please refer to the article Azure SQL Data Warehouse Workload Patterns and Anti-Patterns for a great overview of common SQL DW workload patterns. ISV workloads share many characteristics with the common SQL DW workloads described in that article.

Below are common characteristics of successful SaaS implementations on SQL DW.

Allows for the massive scale of processing power, useful for “burst” scenario.

ISVs leverage the on-demand scaling feature to load and transform data from their end users' multiple data sources and from external data services during “spike” workloads, such as custom processing over a broad range of data, month-end processing, “Black Friday”, and holiday spikes.

Automatically scale up to a petabyte of storage.

With compute and storage separated in the architecture, the storage layer is no longer restricted by compute hardware. Storage capacity scales automatically and transparently, so new users and workloads can be added without a restriction tied to your SLO.

Can be paused to reduce compute costs when the instance is idle.

When an ISV is done with data loading and transformation processing, the idle instance can be paused to save cost.

Consolidation of multiple databases into a single instance.

Combining multiple data warehouse instances into a single environment simplifies management, provides a centralized data warehouse with a single source of truth, and makes it possible to query across all the data.

For multi-tenant applications, create a separate data mart for each tenant.

The benefits of single database tenancy include mitigating resource contention, securing data access, controlling the performance level of each tenant database individually, avoiding service disruption caused by scaling or pausing by shared tenants, and easily tracking user utilization for charge-back billing.

The source data can come from multiple sources.

These can be on-premises databases, Hadoop, flat files sent from end users, data sources hosted by other cloud providers, purchased data sets from the marketplace, and so on.

Data loading frequency

The data loading frequency can range from every few minutes to every few hours to daily. Even if the data source pipeline is real time, schedule data ingestion into SQL DW no more frequently than every 5 minutes. Allowing extra time between loads helps prevent the SQL DW backup process from causing load timeouts. For backups, SQL DW takes a snapshot of your database every 4 hours. Before a snapshot is taken, SQL DW pauses your active DML operations until the snapshot is completed. A typical snapshot takes anywhere from 30 seconds to a couple of minutes.
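
As a minimal sketch of the scheduling guidance above, a load scheduler can clamp whatever interval is requested to the 5-minute floor. The function and constant names here are hypothetical and are not part of any SQL DW or ADF API; only the 5-minute value comes from the text.

```python
from datetime import datetime, timedelta

# Floor recommended in the text: do not load more often than every 5 minutes.
MIN_LOAD_INTERVAL = timedelta(minutes=5)


def effective_interval(requested: timedelta) -> timedelta:
    """Return the requested interval, raised to the 5-minute floor if needed."""
    return max(requested, MIN_LOAD_INTERVAL)


def next_load_time(last_load_start: datetime, requested: timedelta) -> datetime:
    """Earliest time the next load should start (hypothetical helper)."""
    return last_load_start + effective_interval(requested)
```

A pipeline that asks for a 30-second cadence would therefore still be scheduled every 5 minutes, while hourly or daily cadences pass through unchanged.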

Query patterns

The queries on SQL DW follow typical data warehouse patterns: star joins with aggregation, sequential scans, and complex fact-to-fact joins. Common usage includes batch reporting, ad-hoc queries, and data mining queries. For singleton lookup queries, we recommend adding a b-tree index for optimal performance.

Dashboard queries

Power BI users with dashboard query performance requirements should use an SSAS tabular model that sources its data from SQL DW.

Concurrency limits

Understand the concurrency limits, and offload large numbers of users to data marts such as SSAS, SQL Database, or SQL Server on VM. The choice of data mart will depend on your performance, capacity, and architecture requirements.

Common considerations and workarounds

While it is important to understand the “common patterns” for SQL DW, it is just as important, if not more important, to understand some limitations of the service in its current state. In this section, I will discuss several important considerations and workarounds.

Multi-tenant database

With the capability to scale compute resources, the capacity to store up to a petabyte, and the MPP architecture, it is very tempting for ISVs to use SQL DW as a multi-tenant database. Please note that I am referring to a multi-tenant database, not a multi-tenant application. SQL DW implements a simplified workload management model. It has four pre-defined Dynamic Resource Classes and eight recently added Static Resource Classes. Each resource class determines the number of concurrency slots, the size of memory grants, and the query priority. The values of these parameters cannot be changed by users, and depend on the DWU used. All resources are shared within the database, and there is no option for granular control to allocate or limit resource use for a given tenant.

To illustrate, if a user from company A is running a “monster” query that consumes all the CPU, a second user from company B could notice that their typically ten-second query is still running minutes later due to resource contention. Resources such as CPU, memory, IO, transaction log, and TEMPDB are shared among all users in the same database. For optimal performance, we strongly recommend that ISVs create one database per tenant. This not only mitigates resource contention, but also gives tenants the flexibility to choose their own performance level via DWU scaling, to PAUSE/RESUME on their own schedule, and to easily identify per-user utilization for charge back.

Hundreds of thousands of concurrent queries

Azure SQL DW allows up to 1,024 concurrent connections. To provide predictable query performance, Azure SQL DW currently supports from 4 to a maximum of 128 concurrent queries, depending on the DWU. Please see Concurrency limits for detailed information. Any query submitted after the concurrency limit is reached is queued until a concurrency slot becomes available. The number of queued operations can be up to 896 (1,024 - 128). Any connection attempted after the 1,024 concurrent connection limit is reached fails with an error message. We recommend that our ISVs create separate data marts for each of their customers. A data mart can take the form of SQL Data Warehouse, SQL Database, SQL Server on VM (IaaS), an SSAS cube, or exported data stored in Azure Data Lake Store or Azure Blob Storage.
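
The arithmetic above can be written down as a small model. This is only an illustrative sketch, not how the service is implemented: the function names are hypothetical, and it assumes the same subtraction the text uses (connections minus running queries) holds at every DWU level.

```python
# Connection limit quoted in the text.
MAX_CONNECTIONS = 1024


def queue_capacity(max_concurrent_queries: int) -> int:
    """Connections left over for queued queries once every query slot is busy."""
    return MAX_CONNECTIONS - max_concurrent_queries


def admit(open_connections: int, running_queries: int,
          max_concurrent_queries: int) -> str:
    """Classify what happens to one more incoming request (simplified model)."""
    if open_connections >= MAX_CONNECTIONS:
        return "rejected"   # connection limit reached: the attempt fails
    if running_queries >= max_concurrent_queries:
        return "queued"     # waits until a concurrency slot frees up
    return "running"


print(queue_capacity(128))  # 896, matching the figure in the text
```

With tens of thousands of end users, most requests would land in the "queued" or "rejected" branches, which is the reason for offloading users to per-customer data marts.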

Scaling/Pausing impact

Currently, scaling is an offline operation. Any pending queries and DML operations are canceled, and any logged operation is rolled back. The scaling operation does not start until the transaction rollback has completed. In a multi-tenant design, or in a single-tenant design where multiple users have permission to scale or pause, an unexpected scaling operation can have a huge impact on running workloads and cause unexpected downtime. The recommendation is still to provide a separate database for each tenant. Even for single-tenant scenarios, design your security so that only a minimal number of users can execute scale/pause. In addition, check for and drain active transactions, and coordinate and schedule any scale/pause operation before executing it.

Transaction limit impact

To guard against long rollbacks, SQL DW implements a transaction size limit. Note that SQL DW has a total limit of 160 GB of transaction log per distribution. The transaction size limit applies to a single DML operation, and the limit varies depending on the DWU used. To illustrate, at DWU1000, the total transaction log size is 9,600 GB (160 GB * 60 distributions). However, the transaction size limit is only 7.5 GB per distribution, or 450 GB in total (7.5 GB * 60 distributions). This safety limit was put in place to prevent a long database outage when a user tries to pause or scale during a long-running transaction. Please refer to the article “Optimizing transactions for SQL Data Warehouse” for further information and for best practices on minimizing the risk of hitting the transaction limit and avoiding long rollbacks.
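
The DWU1000 numbers above can be reproduced with a few lines of arithmetic. The sketch below also adds a rough pre-check for a planned DML operation. It rests on assumptions that are not in the original text: the helper names are hypothetical, the volume written by the operation is treated as equal to the log volume it generates, and `skew` is an illustrative ratio between the busiest distribution and a perfectly even share.

```python
DISTRIBUTIONS = 60             # number of distributions, from the text
LOG_GB_PER_DISTRIBUTION = 160  # transaction log limit per distribution, from the text


def total_log_gb() -> int:
    """Total transaction log size across all distributions."""
    return LOG_GB_PER_DISTRIBUTION * DISTRIBUTIONS


def total_transaction_cap_gb(cap_gb_per_distribution: float) -> float:
    """Total transaction size limit for a given per-distribution cap."""
    return cap_gb_per_distribution * DISTRIBUTIONS


def fits_in_transaction(total_gb: float, cap_gb_per_distribution: float,
                        skew: float = 1.0) -> bool:
    """Rough check: does the busiest distribution stay under its cap?

    skew = 1.0 means the rows are spread perfectly evenly (an assumption).
    """
    largest_distribution_gb = total_gb / DISTRIBUTIONS * skew
    return largest_distribution_gb <= cap_gb_per_distribution


print(total_log_gb())                 # 9600
print(total_transaction_cap_gb(7.5))  # 450.0
```

Because the cap applies per distribution, a skewed table can hit the limit well before the 450 GB total is reached, which is one more reason to break large DML operations into smaller batches.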

Restricting data access limitation

Azure SQL DW supports permission grants at the schema and object level within the database. If your application requires more granular data access management, note that SQL DW does not support Row Level Security (RLS), Dynamic Data Masking, Always Encrypted, or Column Level Encryption. As a workaround for the lack of RLS, you can create multiple views and grant each group of users access only to the appropriate views. Beyond that, there is no practical workaround for the lack of Data Masking, Always Encrypted, and Column Level Encryption. We recommend that you create data marts in SQL Database, SQL Server on VM (IaaS), or SSAS to take advantage of their specific security features to meet your data access requirements.
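
One way to picture the view-based workaround is a small generator that renders one filtered view per tenant, plus a grant on that view. This is a sketch under assumptions: the schema, table, column, and principal names are hypothetical, the `v_<table>_<principal>` naming convention is invented for illustration, and the script only builds T-SQL strings without connecting to any database.

```python
import re

# Accept only simple identifiers so names can be embedded in the SQL text safely.
_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def _quote(name: str) -> str:
    """Bracket-quote an identifier after validating it."""
    if not _IDENT.match(name):
        raise ValueError(f"unsafe identifier: {name!r}")
    return f"[{name}]"


def tenant_view_statements(schema: str, table: str, tenant_column: str,
                           tenant_id: int, principal: str) -> list:
    """Render CREATE VIEW and GRANT statements for one tenant (hypothetical helper)."""
    view = f"{_quote(schema)}.{_quote('v_' + table + '_' + principal)}"
    base = f"{_quote(schema)}.{_quote(table)}"
    create = (f"CREATE VIEW {view} AS SELECT * FROM {base} "
              f"WHERE {_quote(tenant_column)} = {int(tenant_id)};")
    grant = f"GRANT SELECT ON OBJECT::{view} TO {_quote(principal)};"
    return [create, grant]


for statement in tenant_view_statements(
        "dbo", "FactSales", "TenantId", 42, "tenant42_readers"):
    print(statement)
```

Each statement would be run as its own batch, and the tenant principals should not be granted SELECT on the base table itself, otherwise the views restrict nothing.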

Cross database join limitation

Azure SQL DW currently does not support cross-database joins. As a workaround, use schemas instead of separate databases to group different database objects.

The Top Three Common Workload Patterns

In this section, I will discuss the top three common patterns implemented by our ISVs.

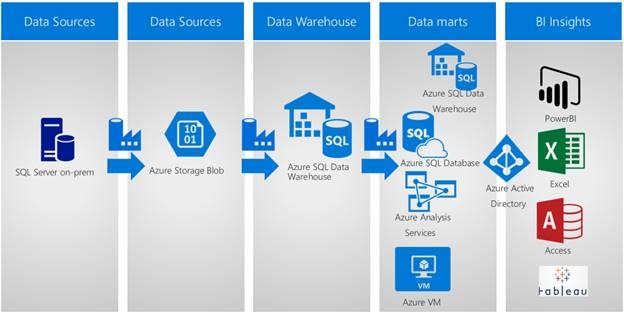

The Hybrid Pattern

One of the most popular and common patterns with SQL DW is the “Hybrid” scenario. This is where the data sources are on-prem RDBMS databases. The data is loaded into SQL DW incrementally and periodically. This can be daily, several times a day, and sometimes on demand. Azure Data Factory (ADF) with PolyBase enabled can be used to orchestrate data loading. Data is extracted from the source databases, copied to Azure Blob Storage, and finally loaded into SQL DW. For detailed information on data loading, please refer to the article Azure SQL Data Warehouse loading patterns and strategies. Any transformation is done within SQL DW. This is the ELT approach we recommend to our customers to leverage the MPP power and on-demand scaling capability for the transformation step.

For the end users of the application, each user gets their own data mart. This can be another SQL Data Warehouse, Azure SQL Database, Azure Analysis Services, or SQL Server on VM (IaaS), with the performance level of their choice. End users can choose whatever tool they want to consume the data. Some common tools are Power BI against SSAS tabular in-memory mode, Microsoft Excel, Microsoft Access, and Tableau. Security access is managed by Azure Active Directory, with the benefits of self-service password reset, multi-factor authentication, and federation with the customer's existing Active Directory. For an ISV, additional revenue can be generated with additional services such as canned reports, customized data generation, and data processing. ISVs can use tags to organize Azure resources when needed for billing or management.

Figure 1. The Hybrid pattern architecture

The Database Consolidation Pattern

The second most popular and common pattern for ISVs is using Azure SQL DW for database consolidation. In this example, the ISV has disparate data in SQL Server databases on-prem, Azure Table Storage, Azure SQL Database, Oracle on-prem, SQL Server in AWS, and Azure Redis Cache. They wanted to consolidate data from multiple systems into one data repository in the cloud, to have a consolidated view of the end-to-end data lifecycle. This allows them to perform advanced analysis against a single source database with a consistent taxonomy, and to generate reports leveraging the on-demand scaling feature. Using ADF as the orchestration tool, data is copied onto Azure Blob storage, then loaded into Azure SQL DW. The ISV opted to use PolyBase to export data to Azure Blob storage as another layer of data protection. This feature allows them to export the data at the object level to the various file formats that PolyBase currently supports. For batch reporting, SSRS reports are either scheduled or run on demand against Azure SQL DW. For ad-hoc interactive dashboard queries, Power BI is used against an Azure Analysis Services data mart. With this consolidated cloud solution, the ISV not only saved money over the on-prem alternatives, they also saved processing time, allowing their customers to spend more time analyzing the data to make better business decisions.

Figure 2. The Database consolidation pattern architecture

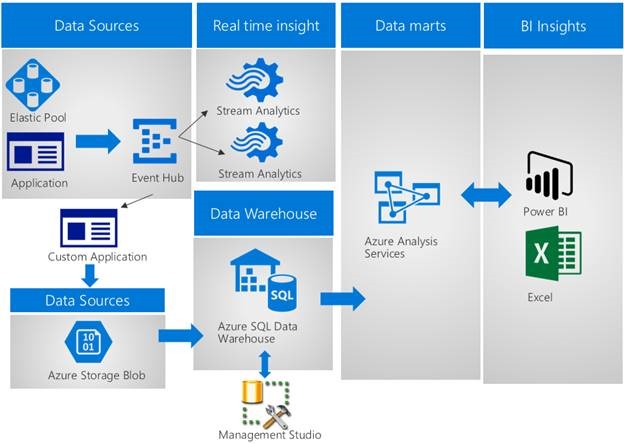

The IoT Pattern

We have a handful of customers with IoT workloads who have had an unpleasant experience using SQL DW. The data ingestion into SQL DW was so slow that extended lunches and many coffee breaks were not enough. Remember that in an MPP system, there is overhead for query parsing, orchestration, communication with other nodes, and processing against distributed databases. Therefore, treating an MPP system like an OLTP system will result in sub-optimal performance. The top pattern to avoid is any type of real-time ingestion into SQL DW. Techniques such as singleton inserts should be avoided, and that includes Azure Stream Analytics, which in the background performs nothing more than singleton inserts.

For this workload, the ISV has a SaaS application that generates logs from over 16,000 Azure SQL DB Elastic Pool databases. The log data is flushed into Azure Event Hub. Real-time analytics for application query statistics and fatal logs is done using Azure Stream Analytics. To serve data warehouse queries and BI users, data is written to Azure Blob storage and loaded into SQL DW using PolyBase.

An important performance consideration with IoT workloads is the number of files generated. The ISV originally had 621,000 files with a total data size of 80 GB for one day's worth of data. Although the total data size is very small, the overhead of traversing such a large number of files meant that it took an hour just to create the external table. The same overhead also affected data loading performance. Unable to leverage Event Hub Archive due to this overhead, the ISV built a custom application to reduce the number of files to 8,700 per day. Data is loaded every 5 minutes to meet the end user consumption SLA. The ISV is also not able to leverage ADF for the data loading orchestration. ADF is designed for batch processing, so the minimum data loading frequency is currently 15 minutes.

Finally, another important factor to consider is the extra time needed for post-load processing within SQL DW for optimal query performance. Take into consideration the time needed to check row group compression quality. You may need to perform either an ALTER INDEX REORGANIZE or an ALTER INDEX REBUILD, depending on the status and quality of your row groups. Furthermore, you will also need to create or update statistics to provide the histogram and cardinality information the cost-based optimizer needs to build an efficient DSQL plan. Customers who require detail-level information or batch reporting use SSMS with familiar T-SQL to query SQL DW. For interactive dashboard queries, end users use Power BI and Excel against an SSAS tabular model. This provides a better user experience for dashboard query performance, greater concurrency capacity, and a dimensional model with drag-and-drop capability, without requiring users to understand complex join relationships.
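
To put the file counts quoted for this workload in perspective, the sketch below computes the average file size before and after consolidation, and shows one simple greedy way to group small files into larger batches. The ISV's custom application is not described in this article, so `plan_batches` is purely a hypothetical illustration and not their implementation; the conversion also assumes 1 GB = 1,024 MB.

```python
def average_file_mb(total_gb: float, file_count: int) -> float:
    """Average file size in MB for a given total volume and file count."""
    return total_gb * 1024 / file_count


def plan_batches(file_sizes_mb, target_mb):
    """Greedily group consecutive files so each batch stays near target_mb.

    Returns a list of batches, each a list of indexes into file_sizes_mb.
    A single file larger than target_mb ends up in a batch of its own.
    """
    batches, current, current_size = [], [], 0.0
    for index, size in enumerate(file_sizes_mb):
        if current and current_size + size > target_mb:
            batches.append(current)
            current, current_size = [], 0.0
        current.append(index)
        current_size += size
    if current:
        batches.append(current)
    return batches


print(f"{average_file_mb(80, 621_000):.3f} MB per file before consolidation")
print(f"{average_file_mb(80, 8_700):.2f} MB per file after consolidation")
print(plan_batches([4, 4, 4, 4, 4], target_mb=10))
```

On the numbers in the text, the original files average well under 1 MB each, while the consolidated files average roughly 9 MB, about 70 times fewer files to traverse for the same 80 GB.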

Figure 3. The IoT pattern architecture