Businesses are continuously trying to be more productive while also better serving their customers and partners, and AI is one of the new technology areas they are exploring to help with this work. My colleague Alysa Taylor recently shared some of the work we are doing in this area with our upcoming Dynamics 365 + AI offerings, a new class of business applications that will deliver AI-powered insights out of the box. These solutions help customers make the transition from business intelligence (BI) to artificial intelligence (AI) so they can address increasingly complex scenarios and derive actionable insights. Many of these new capabilities, which will ship this October, are powered by breakthrough innovations from our world-class AI research & development teams, who contribute to our products (including Bing and Microsoft Search) and participate in the broader research community by publishing their results.

This time last year, I wrote about the Stanford Question Answering Dataset (SQuAD) test for machine reading comprehension (MRC). Since writing that post, Microsoft reached another major milestone, creating a system that could read a document and answer questions about it as well as a human on the SQuAD 1.1 test. Although such a test differs from real-world usage, we find that the research innovations make our products better for our customers. Today I’d like to share an update on the next wave of innovation in natural language understanding and machine reading comprehension. Microsoft’s AI research and business engineering teams have now taken the top positions in three important industry competitions hosted by Salesforce, the Allen Institute for AI, and Stanford University. Even though the top spots on the leaderboards change continuously, I would like to highlight some of our recent progress.

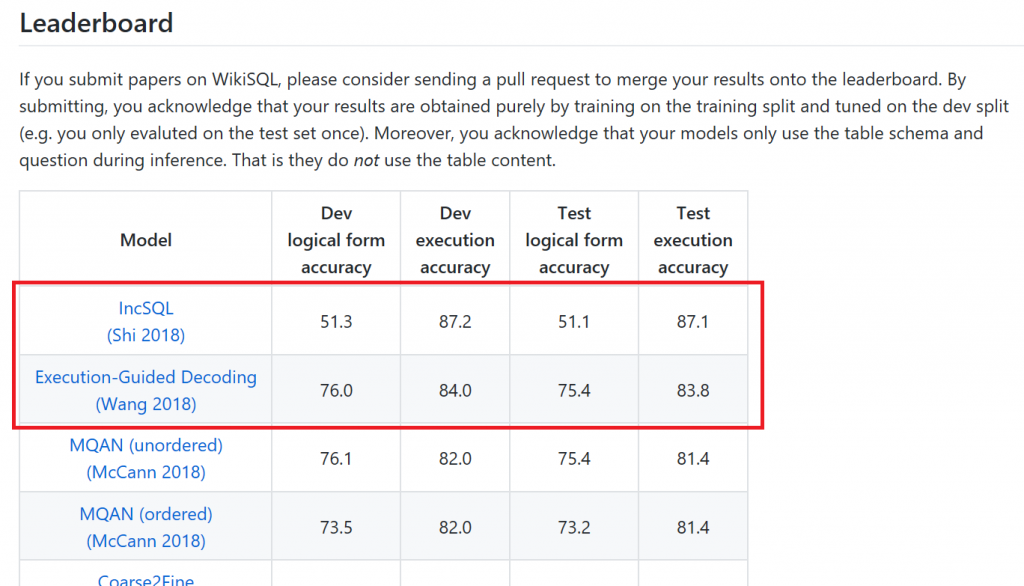

Microsoft tops Salesforce WikiSQL Challenge

Data stored in relational databases is the “fuel” that sales and marketing professionals tap to inform daily decisions. However, getting value from the data often requires a deep understanding of how the data is structured. An easier approach is to use a natural language interface to query the data. Salesforce published a large crowd-sourced dataset based on Wikipedia, called WikiSQL, for developing and testing such interfaces. Over the last year, many research teams have been developing techniques using this dataset, and Salesforce has maintained a leaderboard for this purpose. Earlier this month, Microsoft took the top position on Salesforce’s leaderboard with a new approach called IncSQL. The significant improvement in test execution accuracy (from 81.4% to 87.1%) is due to a fundamentally novel incremental parsing approach combined with the idea of execution-guided decoding, both detailed in the linked academic articles. This work is the result of collaboration between scientists in Microsoft Research and in the Business Application Group.
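To make the idea of execution-guided decoding a little more concrete, here is a minimal sketch of its simplest form: run each candidate query against the database and skip any that fail or return nothing. This is not the IncSQL implementation. The helper `pick_executable_query`, the `players` table, and the candidate list are hypothetical and exist only for illustration, and a real system would apply this check to partial queries during decoding rather than to a finished list.

```python
import sqlite3


def pick_executable_query(conn, candidates):
    """Return the first candidate (best-scored first) that runs and yields rows.

    Hypothetical helper: it filters complete queries after the fact, which is
    only a simplified stand-in for guiding the decoder step by step.
    """
    for sql in candidates:
        try:
            rows = conn.execute(sql).fetchall()
        except sqlite3.Error:
            continue  # the query failed to execute, so discard it
        if rows:
            return sql, rows  # executable and non-empty: accept it
    return None, []


# Toy in-memory table standing in for a WikiSQL-style table (made-up data).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE players (name TEXT, team TEXT, points INTEGER)")
conn.executemany(
    "INSERT INTO players VALUES (?, ?, ?)",
    [("Ann", "Reds", 31), ("Bo", "Blues", 27)],
)

# Candidates as a parser might rank them for "How many points did the Reds player score?"
candidates = [
    "SELECT score FROM players WHERE team = 'Reds'",   # unknown column: execution error
    "SELECT points FROM players WHERE team = 'Red'",   # runs, but returns no rows
    "SELECT points FROM players WHERE team = 'Reds'",  # runs and returns a result
]

best_sql, best_rows = pick_executable_query(conn, candidates)
print(best_sql, best_rows)
```

The design choice worth noting is that the database itself acts as a cheap validator: a query that cannot run, or that returns nothing, is unlikely to be what the user meant.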

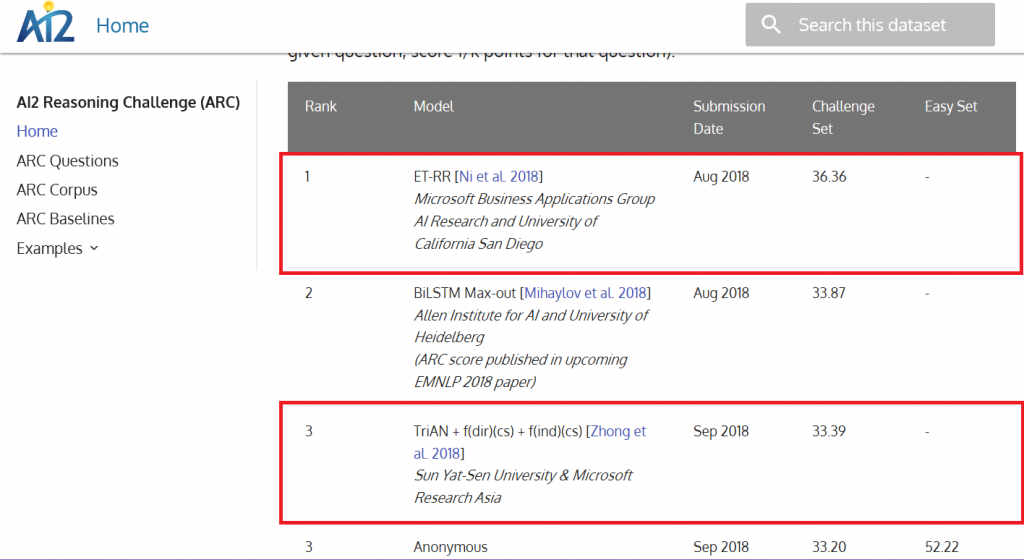

Microsoft tops Allen Institute for AI’s Reasoning Challenge (ARC)

The ARC question answering challenge provides a dataset of 7,787 grade-school-level, multiple-choice, open-domain questions designed to test approaches to question answering. Open-domain question answering is a more challenging setting for text understanding because the answer is not explicitly present: models must first retrieve related evidence from large corpora before selecting a choice. This is a more realistic setting for a general-purpose application in this space. The top approach, the essential-term-aware retriever-reader (ET-RR), was developed jointly by our Dynamics 365 + AI research team working with interns from the University of San Diego. The #3 position on the leaderboard is held by a separate research team composed of researchers from Sun Yat-Sen University and Microsoft Research Asia. Both results serve as a great reminder of the value of collaboration between academia and industry in solving real-world problems.
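The retrieve-then-select pattern described above can be sketched in a few lines. This toy version is not ET-RR: it uses plain word overlap for both retrieval and scoring, where a real system would use a search index and a neural reader. The functions `tokens`, `retrieve`, and `answer`, along with the three-sentence corpus, are assumptions made up for illustration.

```python
import re


def tokens(text):
    """Lowercase a string and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query, corpus, k=1):
    """Return the k corpus sentences sharing the most words with the query."""
    q = tokens(query)
    ranked = sorted(corpus, key=lambda s: len(q & tokens(s)), reverse=True)
    return ranked[:k]


def answer(question, choices, corpus):
    """Pick the choice whose retrieved evidence overlaps most with question + choice."""
    def support(choice):
        query = question + " " + choice
        evidence = retrieve(query, corpus)
        q = tokens(query)
        return sum(len(q & tokens(s)) for s in evidence)

    return max(choices, key=support)


# A tiny made-up corpus standing in for the large text collections used in ARC.
corpus = [
    "Plants use sunlight to make food through photosynthesis.",
    "The moon orbits the Earth about once a month.",
    "Rocks are formed from minerals over long periods.",
]
question = "What do plants need to make food?"
choices = ["sunlight", "moonlight", "rocks", "sand"]

best = answer(question, choices, corpus)
print(best)
```

Note that the question never comes with a passage containing the answer. Each choice is judged only by the evidence the retriever manages to find for it, which is why retrieval quality matters so much in this setting.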

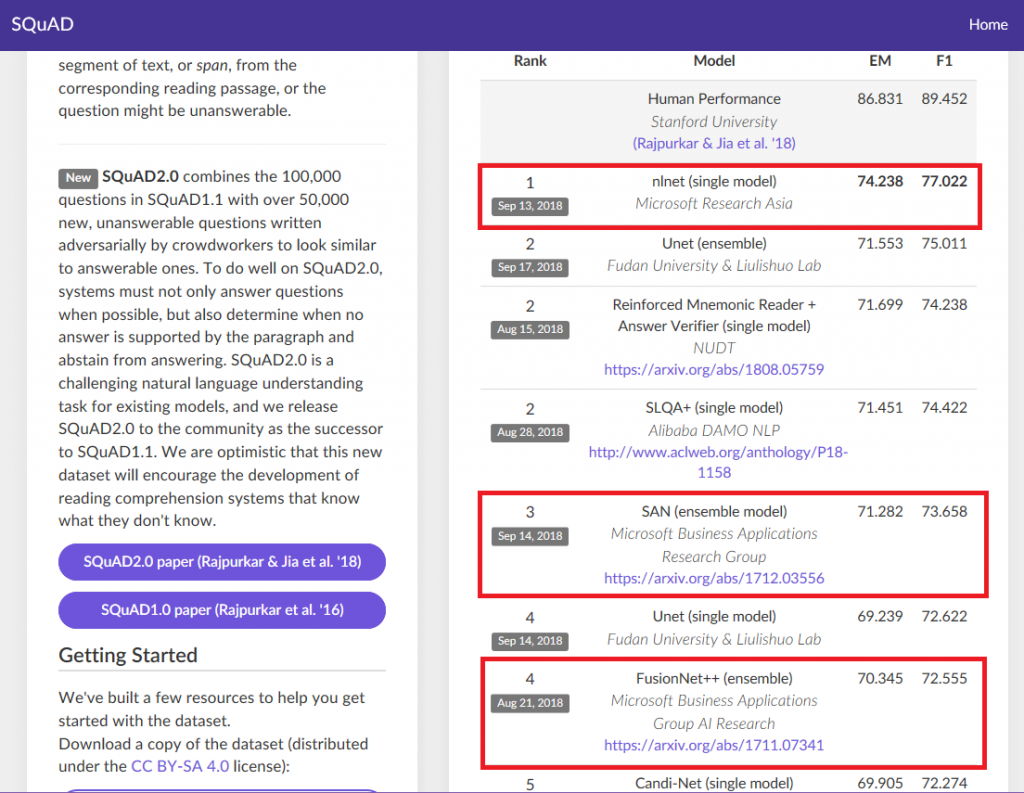

Microsoft tops new Stanford SQuAD 2.0 Reading Comprehension

In June 2018, SQuAD version 2.0 was released to “encourage the development of reading comprehension systems that know what they don't know.” Microsoft currently occupies the #1 position on SQuAD 2.0 and three of the top five rankings overall, while simultaneously maintaining the #1 position on SQuAD 1.1. What’s exciting is that several of these positions are held by the Microsoft business applications group responsible for Dynamics 365 + AI, demonstrating the benefits of embedding AI researchers in our engineering groups.

These results show the breadth of MRC challenges our teams are researching and the rapid pace of innovation and collaboration in the industry. Pairing researchers with engineers to tackle product challenges, while also participating in industry research challenges, is shaping up to be a beneficial way to advance AI research and bring AI-based solutions to customers more quickly.

Cheers,

Guggs