ConfigMgr 2012: OSD Machine Variables not available to task sequence – Post Mortem review

Note: This blog post discusses details of the database for the purposes of understanding and troubleshooting the described scenario. Editing the database directly is unsupported and should be done only when so advised by Microsoft support.

Note: Attempting to trace the following in a production environment can be difficult depending on the amount of activity taking place. An isolated and controlled lab is a far better location to try and do such tracing. In addition, when working to trace specific actions be sure to focus your data collection as much as possible. In other words, when collecting a SQL trace or performance data do so for as brief of time as possible so the amount of data available to review is manageable. With all tracing, remain aware of the time stamps when actions take place.

I ran across an interesting problem the other day that took me a bit to find. Though the issue was a bit obscure I thought it would be interesting to write up the problem here in the event others come across it and also to show how troubleshooting something like this can be done.

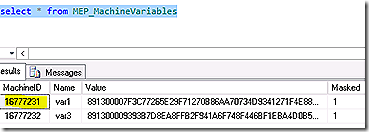

The problem scenario seemed simple enough – when executing a task sequence, variables that had been defined on the machine object were not being passed into the task sequence but variables defined on the collection were available. In this case – and jumping ahead just a bit, the machine variable when created are stored in the MEP_MachineVariables table. These entries were confirmed to be present.

This was the second time I had seen a problem like this. The first time was because a script was being used to write the variables to the machine object using the SDK - and the script was acting directly on a child primary. We adjusted the script to make the variable additions at the CAS and it worked as expected. This wasn’t the case here because, as stated, the variables were in the proper table.

To properly troubleshoot this issue we first had to decide where the problem was being seen – on the server side or client side. It seemed logical to start with the client side. Reviewing the SMSTS.log file during the imaging process we quickly spotted the problem as shown in the log snippet below.

Retrieving collection variable policy.

Downloading policy body {RCA00011}.

<Multiple lines of log processing omitted for brevity>

Parsing Policy Body.

Found 3 collection variables.

Retrieving machine variable policy.

Found 0 machine variables.

If you examine the SMSTS.log file during task sequence execution you will see one of the first actions is to identify all policy applicable to the system and then begin to parse the policy to ensure all needed contents – package files, drivers or variables – are available during execution. Once applicable policies are identified they are parsed. In the log snip above we see the section of log where we are checking to see if any collection or machine variables are available. The first check is for collection variables and, as you can see, immediately after we attempt to retrieve collection policy we start to process it – which means we were able to find policy for collection variables. Once complete we move on to check for any policy that would contain machine variables and, in this case, we move on immediately and indicate 0 machine variables were found. Strange, right? Especially since we know the variables exist in the database tables – but I’m getting ahead of myself.

One thing that should also be clear, or at least inferred, from the log entries is that collection and machine variables are made available to an imaging system through policy. So, something in the policy acquisition either is broken or there is no policy containing machine variables. To answer this question requires us to trace the process that requests policy to see if the policy is there at all. So how do we go about doing that? A couple of ways which I will describe. First though, one thing that should be understood at this point is all of the information we need will not be directly on the management point but in the database, which is where the management point looks it up. So, our tracing will ultimately lead us to the database for details.

To get a handle on what is being requested during the task sequence execution we can look at the management point logs to see which components are active during the request. Don’t forget to enable verbose and debug logging on the management point – a good practice when troubleshooting at that level as some logs or log contents won’t be available unless the more detailed logging is enabled. In addition. an excellent too to trace activity against the database is SQL Profiler so we will run that simultaneously. Remember, keep track of your time stamps during processing as it makes reviewing the logs and trace much easier.

If we review the time of the request for variables in the SMSTS.log and correlate that to the MP_ logs we will see that one of the active logs at that time is the MP_ClientID which will have a corresponding entry at that time when attempting to query the database for policy. At the same time we will see activity in the SQL Profiler trace showing what exactly was being executed against the database. Let’s tie it all together.

From the SMSTS.log we see the following line, note the time.

Retrieving machine variable policy.]LOG]!><time=" 00:20:08.316 +360"

Now, we know that the client utilizes the Management Point for all activity but if we look at the MP_ logs on the management point we don’t see anything that seems useful, even though there is activity in the MP_ClientID log at exactly the time of the SMSTS.log machine policy request. Based on this we have no choice but to turn to the SQL Profiler trace we captured.

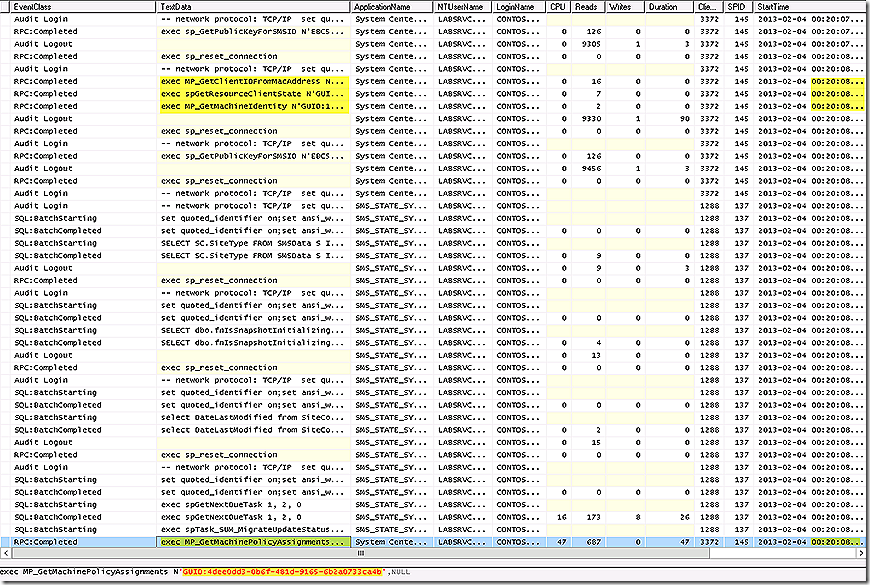

For SQL Profiler remember that all searches are case sensitive so be careful. If we find the time frame of our client request in our trace we see the only real activity at that time. There is some extra noise but the key entries are highlighted.

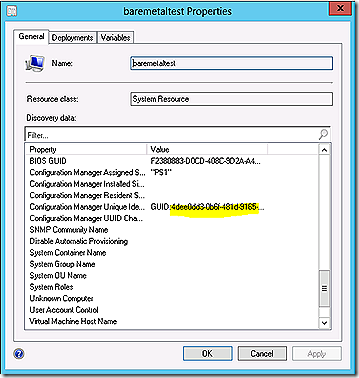

A few things to note here. First, the highlighted activity shows that during this time we are calling several SQL stored procedures. Note the first group of three procedures looks to be querying for client identity information. Hmmmm, that sounds familiar. So that MP_ClientID log that was also active during this time might just have something to do with the flow of activity but that log doesn’t show the detail of the data we are pulling from the database. If we look further down the trace we see a stored procedure called MP_GetMachinePolicyAssigments. That looks promising. And if we look at the details section we see that the stored procedure is acting against the GUID of the client in question. Remember, in order for OSD to work we must have a client that is KNOWN to the site server – whether that client is one that was imported prior to running the task sequence, as in this case, or one of the existing unknown entries. If we examine our test client properties we see the GUID’s indeed match up, as shown.

Interesting. So from all of this information it seems clear that when the imaging system is looking for machine variables (or collection variables) it is pulling that information from policy. So how do we see the policy. We really can’t as policy is an encrypted set of data but we can take it to the next step and find out what policy has been associated with the machine in the database.

Note: If you are reviewing the SQL tables in a hierarchy make sure you examine the tables at the primary site vs. the CAS. The CAS will not contain some of this information as it does not directly host any clients.

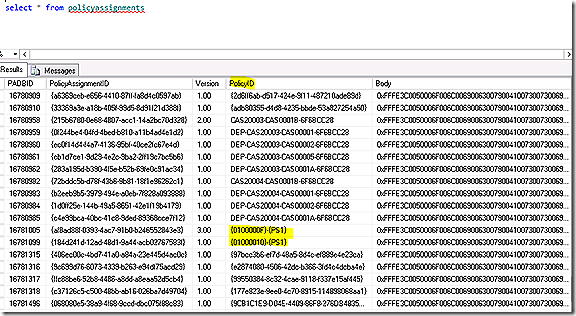

It turns out that several tables are hit when pulling the policy data. If we were to dissect the MP_GetMachinePolicyAssignments we would see that there are several policy tables queried but just looking at the stored procedure it is unclear which of the policies would contain the machine variables we are trying to find. One table that is clearly relevant from the stored procedure is policyassignment. IF we run a query against this table we get an interesting set of data, as follows.

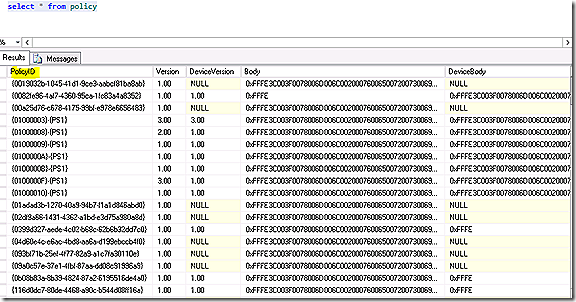

While interesting, this table doesn’t really tell us much….or does it? Notice first that there is a PolicyID column which points to the policy itself. Notice also that the policy ID format is different and we can presume this difference indicates different types of policy. We have a lot of data here and the queries used to get the data in policyassignments is not limited to our imaging system but we are just trying to understand how this all lines up – so we keep digging. Now that we have a policy ID value we can take a look at the policy table itself and see if there is anything interesting, as follows

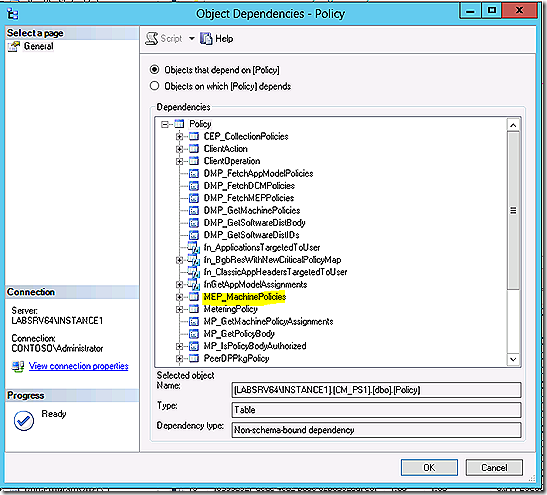

We see our PolicyID column again so we know this is a related table but nothing that seems helpful here – but there must be something we can use to keep digging. Wait, there is! If we right-click on the Policy table and select view dependencies we can display all of the items (not just tables) on which this table depends, or vice versa. So the digging continues. The resulting list is not small.

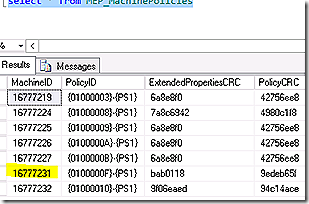

Reviewing the list of tables we see one that looks promising – MEP_MachinePolicies. Since machine policy is what we are looking for, and since we know the MEP tables contain the variable information, this is a great find. If we take a look at the contents of the MEP_MachinePolicies table we see some very interesting information.

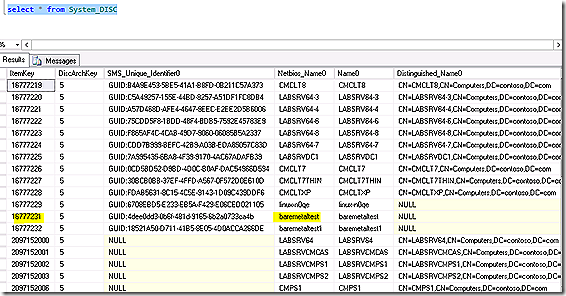

OK! Now we are getting somewhere. We still can’t see the contents of the policy as it’s encrypted but here we clearly see that a policy HAS been created for the machine in question. We can confirm this by looking up the MachineID, also known as the ItemKey, in the System_DISC table as shown.

Now, remember – this is my lab and everything here works. The problem environment is where we see problems. Using my lab though I was able to track the entire process that takes place when a task sequence pulls down policy – one of which is the machine variable policy. To confirm I was seeing what I thought I was seeing I tested by removing the machine variables from the test system a time or two and putting them back. What I noticed when I did this was that no policy was deleted but the policy assignment was tombstoned as reflect in the istombstoned column of the policyassignments table. A tombstoned policy assignment or a missing policy assignment will result in the client not receiving the policy. In the case of the problem environment there was no policy reflected at all in the MEP_PolicyAssignments table. OK – so we have now answered the first question. Which side of processing is causing the problem – server or client? In this case the client processing checks out as being OK – what is happening is exactly what we would expect to happen. There is no policy for the machine in the MEP_PolicyAssignments table and, accordingly, nothing is processed for this during imaging!

Now that we know this is a server side problem we need to begin troubleshooting that side. To trace this we need to add a new machine record or test with an existing one and add some machine variables to it.

Collection membership, or lack thereof, for the machine doesn’t matter since the variables will be written for the machine itself. There is also no need to trace anything during this process because what we are really interested in is the process of adding a variable to the record. I will demonstrate tracing the process in my working lab and the show the problem scenario to contrast.

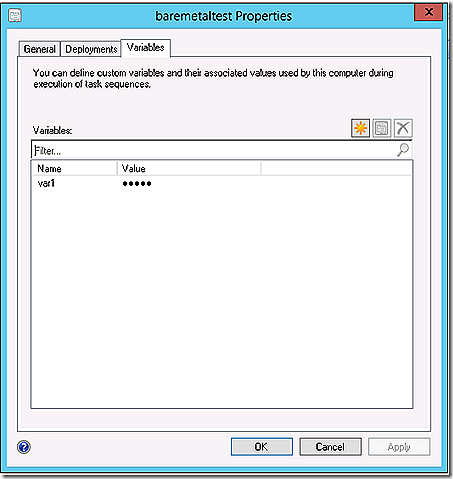

WIth the test machine identified, add at least one variable and select OK. Make sure your SQL profiler trace is running for a minute or so after clicking OK.

With the machine variable added now we must trace what happened as a result of that addition. Remember, we are trying to understand the process so we can understand what went wrong in the problem case.

In almost all cases when a change is made in the console it is implemented through the SMS Provider so that log is a good place to look first. In this case the log shows activity against the machine settings, client operations and more. The SMS Provider log is a good place to go to understand how the provider works but in this case is a bit hard to follow because there is so much activity. In fact, in a production environment with multiple consoles active the SMS Provider log will be so busy that it will be hard to capture anything here. Suffice it to say then that what we know at this stage is that the variables were added which caused them to be written to the database. Now we need to find out what processing happened after the initial database write. In order to know what to look at here either requires a fundamental understanding of how ConfigMgr components function or you can sift through ConfigMgr server logs, keeping in mind the time stamp, until stumbling on the component that is at play. Fortunately, we know the internals already! :) In most cases when an action is taken in the console – such as creating or modifying a software update, software package, software application or, in this case, machine variables, data will be written to the database. That data needs to be acted upon by additional ConfigMgr server components to become active. The component that watches key tables for changes, such as MEP_MachineExtendedProperties in this example, and causes additional processing to be initiated is the SMS Database Monitor thread. More on that shortly.

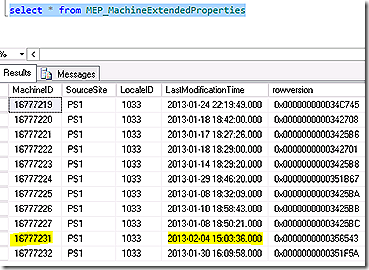

In this case what has happened is the machine variable data was written to the MEP_MachineVariable table and a notification of this change was written to the MEP_MachineExtendedProperties table.

Examining the MEP_MachineVariables table we see the MachineID for our test system. We can’t tell what information the value column holds but based on the name of the table we can easily surmise this is where our machine variable information is located.

Reviewing the MEP_MachineExtendedProperties table for our test system we can see that this is the only system that has a current time stamp for last modified time which confirms the addition that was just made was recorded here.

Knowing that the SMS Database Monitor thread (SMSDBMON) is responsible for watching key tables, including MEP_MachineExtendedProperties, we can take a look at that log to see what might have happened. We find the following snip.

RCV: UPDATE on MEP_MachineExtendedProperties for MEP_ADD_Upd [16777231 ][72057594038037538]

SND: Dropped C:\Program Files\Microsoft Configuration Manager\inboxes\policypv.box\16777231.MEP (non-zero) [72057594038037538]

RCV: UPDATE on MEP_MachineExtendedProperties for MEP_ADD_Upd [16777231 ][72057594038037539] )

SND: Dropped C:\Program Files\Microsoft Configuration Manager\inboxes\policypv.box\16777231.MEP (non-zero) [72057594038037539]

I’ve cleared the time stamp data for clarity but remember that the time stamp in the log is local time for the server while the time stamp in the database is UTC. From this snip we can see that SMS Database Monitor picked up that there was an insert on MEP_MachineExtendedProperties and in response dropped a notification file in the policypv.box directory. Note the name of the file is <MachineID>.MEP. Interesting, huh? And note the name of the inbox – policypv.box. The name of the inbox suggests what might happen next has something to do with policy, right? Indeed – and this is what we care about. From the beginning we were concerned because policy didn’t seem to be making it’s way to our test system. Policy provider (policypv) is responsible for generating all policy for clients to retrieve. Let’s look at a snip on a good system that shows the processing that happens when the MEP file is processed.

MEP-ProcessChangedObjects.

MEP-************Processing Machine Extended Properties for Machine (ID:16777231 - GUID:4dee0dd3-0b6f-481d-9165-6b2a0733ca4b)************

MEP-Successfully deleted/revoked Policy and deleted PolicyInfo and CRCs for Machine Extended Properties of Machine (ID:16777231 - GUID:4dee0dd3-0b6f-481d-9165-6b2a0733ca4b)

MEP-************Successfully processed Machine Extended Properties for Machine (ID:16777231 - GUID:4dee0dd3-0b6f-481d-9165-6b2a0733ca4b)************

MEP-ProcessObjectsInRetry.

Simple, easy and exactly what should happen. When Policy Provider sees the MEP file it begins working on the system, which we see is our test system by the Machine ID in the log test, and creates the appropriate policy. But this isn’t what happened on the problem machine. When the problem machine was traced to this point we saw a different set of log entries, shown below.

MEP-ProcessChangedObjects.

CMEPSource::GetMachineInfo:- Machine Variables are only supported for SMS Client and Machine(159) is not a SMS Client.

MEP-ProcessObjectsInRetry.

No changes found for TSReferencePolicy

Interesting. Quite a different response! And thus we arrive at the reason for our problem. For some reason the system we are adding machine variables to is not being seen as a ConfigMgr client and, accordingly, the policy changes are being dropped! I notice something else in the log snip and that is the value 159. What is that? Sure seems like that is a machine ID and in this case that machine ID is definitely not the machine ID of the system where we are adding the machine variables – so something seems odd.

First thing is to verify that 159 is, in fact, the machine ID in question. In addition, it would be helpful to see the queries that are taking place to determine whether or not the system is a client. SQL Profiler once again is our answer. Remember that we captured the activity taking place when we added the machine variables. Working through the trace then and focusing on the machine ID we are able to locate what looks to be the query – and the time stamps line up.

select t1.SMS_Unique_Identifier0, t1.Name0, t2.SMS_Assigned_Sites0 from System_DISC as t1 inner join System_SMS_Assign_ARR as t2 on t1.ItemKey=t2.ItemKey where t1.ItemKey=159

CONFIRMED! The value 159 is the ItemKey. So somehow this ItemKey is being used in place of the actual ItemKey of our machine where the variables were added. The question is, why? Now that we know the query being used to determine whether a system is a client or not we can run this query against the database ourselves. In this case, the query returned zero records which confirms why Policy Provider thinks the system isn’t a client – because the query returns no record! At this stage I was stumped. I really didn’t understand where the 159 value was coming from or why we were picking that value vs. the ItemKey of the actual system where we had added machine variables but I did recall seeing itemkeys in one other table we looked at along the way – MEP_MachinePolicies and related MEP tables. I went to the MEP_MachinePolicies table and there it was – a record with a machine ID of 159. Knowing that this entry was not valid – because it didn’t have a corresponding entry in System_DISC – and also knowing I was at a loss as to what was causing the issue, I figured there was no harm in cleaning up this orphaned entry so deleted the record. WHAM, as soon as I did that all of the machine variable policy began to flow through again – I didn’t even have to do anything! And now all of my systems were imaging correctly!

Whew, a long process to find the proverbial needle in a haystack. I can’t stress enough that I’ve documented this process in an effort to show how troubleshooting like this can be done. In no way should this blog entry be seen as sanction to edit the database directly. Any direct modifications to the database are unsupported and carry associated risk and should only be done when a good database backup is available AND you are working with a Microsoft support engineer who has sanctioned such changes! Having said that, hope this was an interesting journey for you – it certainly was fun for me!

Comments

Anonymous

February 06, 2014

Hey, Steve, Saw this issue today for the first time. Interesting to note that I saw the bad MachineID in the MEP_MachineExtendedProperties table. Once we removed this record, all their machine variables were applied almost immediately. Thanks for detailing the process for us!!Anonymous

May 22, 2014

Great blog!!! we came across this very issue on a case. Thanks and great work!!!Anonymous

August 26, 2014

Very Nice and deep dive of policy flow ....Thanks a ton.......Anonymous

October 13, 2014

Thanks, great post !!