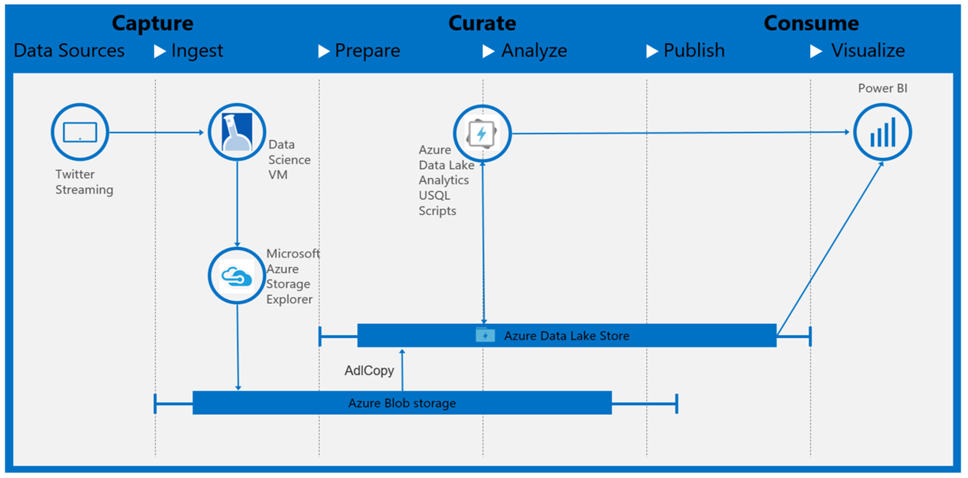

Scenarios for Big Data: Capture, Curate and Consume

Step 1. Ingest tweets with Python scripts on the Data Science Virtual Machine
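
As a minimal sketch of what such an ingestion script might do once tweets have been fetched, the snippet below writes them to CSV so the file can later be uploaded to Blob storage. It assumes each tweet is already a Python dict with `id`, `created_at`, `user`, and `text` keys; these field names, the `tweets_to_csv` helper, and the sample data are illustrative assumptions, since the actual Twitter client and response shape are not specified here.

```python
import csv
import io

# Assumed field names for illustration; not a fixed Twitter schema.
FIELDS = ["id", "created_at", "user", "text"]


def tweets_to_csv(tweets, out):
    """Write tweet dicts to the file-like object `out` as CSV; return the row count."""
    writer = csv.writer(out, quoting=csv.QUOTE_ALL, lineterminator="\n")
    count = 0
    for tweet in tweets:
        row = [tweet.get(name, "") for name in FIELDS]
        # Collapse newlines and repeated whitespace so each tweet stays on one line.
        row[-1] = " ".join(str(row[-1]).split())
        writer.writerow(row)
        count += 1
    return count


# Stand-in data; a real script would pull these from the Twitter API.
sample = [
    {"id": 1, "created_at": "2016-05-01T10:00:00Z", "user": "alice", "text": "Hello\nAzure"},
    {"id": 2, "created_at": "2016-05-01T10:05:00Z", "user": "bob", "text": "Big data, small tweets"},
]

buffer = io.StringIO()
written = tweets_to_csv(sample, buffer)
print(buffer.getvalue())
```

Writing to a file-like object keeps the helper easy to test; in practice `out` would be a file opened on the virtual machine's disk.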

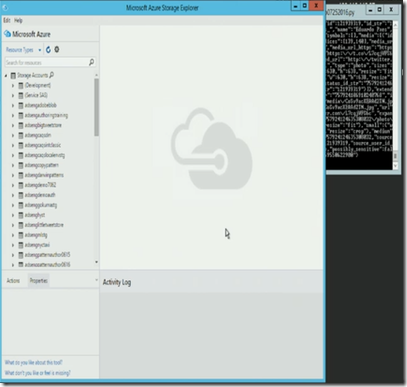

Step 2. Move the tweets into a blob in Azure Storage using Azure Storage Explorer

Step 3. Run Azure Data Lake Copy to move data from Blob storage to Azure Data Lake

The easiest way to create a pipeline that copies data to or from Azure Data Lake Store is to use the Copy data wizard. See "Tutorial: Create a pipeline using Copy Wizard" for a quick walkthrough on creating a pipeline with the wizard.

The following examples provide sample JSON definitions that you can use to create a pipeline by using the Azure portal, Visual Studio, or Azure PowerShell. They show how to copy data to and from Azure Data Lake Store and Azure Blob Storage. However, data can be copied directly from any of the supported sources to any of the supported sinks by using the Copy Activity in Azure Data Factory.

Sample: Copy data from Azure Blob to Azure Data Lake Store

The following sample shows:

- A linked service of type AzureStorage.

- A linked service of type AzureDataLakeStore.

- An input dataset of type AzureBlob.

- An output dataset of type AzureDataLakeStore.

- A pipeline with a Copy activity that uses BlobSource and AzureDataLakeStoreSink.

The sample copies time-series data from Azure Blob Storage to Azure Data Lake Store every hour. The JSON properties used in these samples are described in the sections following the samples.

Azure Storage linked service:

    {
        "name": "StorageLinkedService",
        "properties": {
            "type": "AzureStorage",
            "typeProperties": {
                "connectionString": "DefaultEndpointsProtocol=https;AccountName=<accountname>;AccountKey=<accountkey>"
            }
        }
    }
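
The sample above shows only the Azure Storage linked service. As a rough sketch of the last item in the list (the pipeline with a Copy activity), the snippet below builds a pipeline definition in Python and prints it as JSON. The types `Copy`, `BlobSource`, and `AzureDataLakeStoreSink` come from the sample description; the dataset names (`AzureBlobInput`, `AzureDataLakeStoreOutput`), the pipeline and activity names, and the start and end times are placeholders. The property layout is assumed to follow the Data Factory pipeline JSON format, so check it against the official documentation before deploying.

```python
import json


def build_copy_pipeline(name, input_dataset, output_dataset, start, end):
    """Return a pipeline definition (as a dict) with one hourly Copy activity."""
    return {
        "name": name,
        "properties": {
            "start": start,
            "end": end,
            "description": "Copy data from Azure Blob to Azure Data Lake Store",
            "activities": [
                {
                    "name": "AzureBlobToDataLake",  # placeholder activity name
                    "type": "Copy",
                    "inputs": [{"name": input_dataset}],
                    "outputs": [{"name": output_dataset}],
                    "typeProperties": {
                        "source": {"type": "BlobSource"},
                        "sink": {"type": "AzureDataLakeStoreSink"},
                    },
                    # Hourly schedule, matching "every hour" in the sample description.
                    "scheduler": {"frequency": "Hour", "interval": 1},
                }
            ],
        },
    }


pipeline = build_copy_pipeline(
    name="SamplePipeline",                      # placeholder
    input_dataset="AzureBlobInput",             # placeholder dataset name
    output_dataset="AzureDataLakeStoreOutput",  # placeholder dataset name
    start="2016-06-01T18:00:00",                # placeholder active period
    end="2016-06-01T19:00:00",
)
pipeline_json = json.dumps(pipeline, indent=4)
print(pipeline_json)
```

Generating the JSON from a small function keeps the dataset names in one place, which helps when the same pipeline is recreated for several containers or folders.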

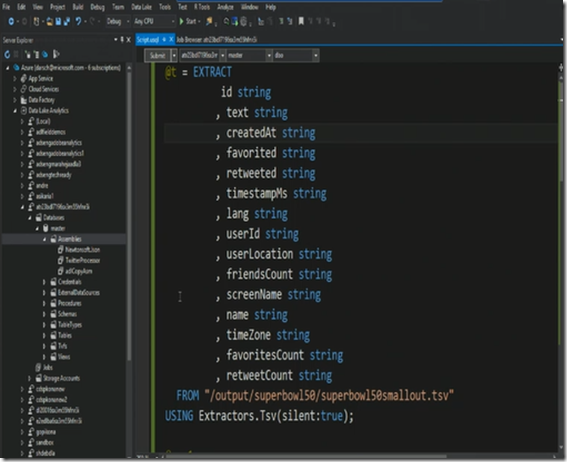

Step 4. Run a U-SQL script and create outputs in Azure Data Lake Analytics

You must create an Azure Data Lake Analytics account before creating a pipeline with a Data Lake Analytics U-SQL Activity. To learn about Azure Data Lake Analytics, see "Get started with Azure Data Lake Analytics".

Review the "Build your first pipeline" tutorial for detailed steps to create a data factory, linked services, datasets, and a pipeline. Use the JSON snippets with Data Factory Editor, Visual Studio, or Azure PowerShell to create the Data Factory entities. For details on running U-SQL, see https://azure.microsoft.com/en-us/documentation/articles/data-factory-usql-activity/
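
To make the shape of such an activity more concrete, here is a hedged Python sketch that assembles a U-SQL activity definition and prints it as JSON. The activity type `DataLakeAnalyticsU-SQL` and the `scriptPath`, `scriptLinkedService`, `degreeOfParallelism`, and `parameters` properties are assumed from the Data Factory U-SQL activity format described at the link above; every name passed in (script path, linked services, datasets, parameter values) is a hypothetical placeholder.

```python
import json


def build_usql_activity(name, script_path, script_linked_service,
                        analytics_linked_service, inputs, outputs,
                        parameters=None, degree_of_parallelism=3):
    """Return a U-SQL activity definition (as a dict) for use inside a pipeline."""
    return {
        "type": "DataLakeAnalyticsU-SQL",
        "name": name,
        # Linked service pointing at the Azure Data Lake Analytics account.
        "linkedServiceName": analytics_linked_service,
        "typeProperties": {
            # Location of the .usql script and the storage that holds it.
            "scriptPath": script_path,
            "scriptLinkedService": script_linked_service,
            "degreeOfParallelism": degree_of_parallelism,
            # Values handed to the U-SQL script as parameters.
            "parameters": dict(parameters or {}),
        },
        "inputs": [{"name": dataset} for dataset in inputs],
        "outputs": [{"name": dataset} for dataset in outputs],
    }


activity = build_usql_activity(
    name="AnalyzeTweets",                                   # placeholder
    script_path="scripts/analyze_tweets.usql",              # placeholder path
    script_linked_service="StorageLinkedService",
    analytics_linked_service="AzureDataLakeAnalyticsLinkedService",  # placeholder
    inputs=["TweetsInDataLake"],                            # placeholder dataset
    outputs=["TweetSummaryInDataLake"],                     # placeholder dataset
    parameters={"in": "/tweets/raw.csv", "out": "/tweets/summary.csv"},
)
activity_json = json.dumps(activity, indent=4)
print(activity_json)
```

The resulting object would go into the `activities` array of a pipeline definition alongside, or instead of, a Copy activity.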

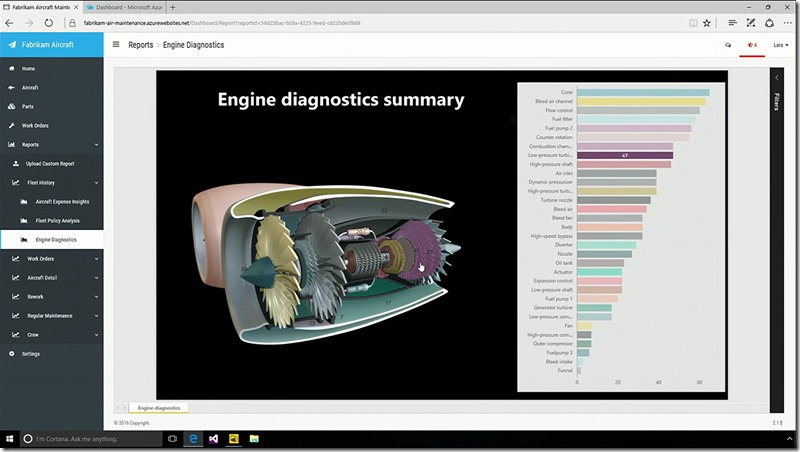

Step 5. Produce a Power BI dashboard on the data contained in Azure Data Lake