The Intern Perspective: Explorer Interns

Posted By J.T. Kimbell

Program Manager

Over the last two weeks you’ve had the chance to hear from our three Explorer interns and learn about them and their experiences. Now, you get to see what Meg, Colleen, and Andrew were working on all summer.

Intern Cove

On our first day of work, over a free lunch in the beautiful Advanta campus cafe, we were given the instruction to create a Windows Store app that runs on an embedded device and showcases the capabilities and features of Windows Embedded 8. It was made very clear to us from the start that the sky was the limit, and because we were given so few constraints, our Explorer pod really had the chance to reach our creative potential. However, in order to focus our creative flow, we decided to distribute a survey about technology to our friends and family. We targeted our peers because this younger demographic consists of the future homeowners and potential users of our device. We wanted to learn what these users expect, need, and desire from technology.

After analyzing our data, and much brainstorming and collaboration, we gathered the following insights:

- People would like to be more organized

- People want to control their environment.

- People want product transitions and adjustments to be smooth.

- People don’t want to move. LAZY.

- Comfort is key

- People don’t want personalization, they expect it.

- People want a Centralized feel

- Multi-tasking & Easily switching between tools

- People prefer a device that has multiple uses rather than an item that does one single thing that is essential

- Device should NOT be in the way

- Location of device can limit the usefulness

Paper Prototyping

After a session with a few members of the User Experience (UX) team, we created a few prototypes that represented what our app would look like and how the user would navigate through it. After making a few core decisions about how the user would interact with our device, we brainstormed potential goals for our product. However, given our time constraints, we really needed to narrow our scope in a way that would maximize the total end-to-end user experience.

After reaching out to a few stakeholders and a member of the marketing team, we gathered that one way to add allure to our application would be to highlight its ability to integrate with other pieces of the Microsoft ecosystem. This ecosystem is vast and broad, so we once again needed to narrow our scope and choose specific technologies that would support this goal and, furthermore, support the other goals of the application.

Team Cove at Explorer Fair

In addition to incorporating other technologies from the Microsoft ecosystem, we decided to prioritize the following additional goals: to create an application that allows for seamless integration between home and store, to allow the user to be more organized while maintaining the device's centralized feel, and to have multiple devices communicating with each other via the cloud. As mentioned above, we gathered from our research that users do not want this app or device to be “in the way,” so we began to think of ways to make the application much easier to use and let it “fade away” so that it was not in the user’s way. Voice control seemed to be the best way to accomplish this goal: it would not require users to physically place their hands on the device, and they could distance themselves from the device yet still take full advantage of its complete functionality. We definitely felt that voice navigation would allow us to create a seamless feel for the user and keep the usefulness of the device from depending on its location. After researching a few options, we decided that integrating the Kinect for Windows would not only satisfy this goal, but would also demonstrate to our team the possibilities of using embedded devices with the Kinect.

Using Kinect

Diagram Demonstrating the "Wall" Between the Windows 8 Application and Kinect

Kinect Speech Recognition works in an interesting way. Kinect constantly takes in input just like any other listening device, and it is up to the developer to dictate the set of words and phrases that Kinect can actually recognize. The developer creates something called a Grammar, defined in XML, which is basically a set of rules containing items, and each item is a word or phrase that Kinect can understand. The developer then tags certain rules or items in that Grammar with anything they want. That way, once something in the Grammar is recognized by Kinect, the tag is read by the C++ or C# code and some action can be taken from there.
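To make the grammar-and-tag idea concrete, here is a minimal sketch. It is written in Python rather than the C# or C++ the Kinect SDK is used from, and it does not touch the Kinect at all. The XML is a simplified, namespace-free stand-in for a real speech grammar, and the rule id, phrases, tag values, and the `load_phrase_tags` and `recognize` helpers are all hypothetical. Substring matching stands in for the recognizer.

```python
import xml.etree.ElementTree as ET

# Simplified, hypothetical grammar: each <item> holds a phrase and a <tag>.
GRAMMAR_XML = """
<grammar root="commands">
  <rule id="commands">
    <one-of>
      <item>go to the home page<tag>HOME</tag></item>
      <item>open my kitchen<tag>KITCHEN</tag></item>
      <item>show the grocery list<tag>GROCERY</tag></item>
    </one-of>
  </rule>
</grammar>
"""


def load_phrase_tags(grammar_xml):
    """Build a phrase -> tag lookup from the simplified grammar."""
    root = ET.fromstring(grammar_xml)
    phrase_tags = {}
    for item in root.iter("item"):
        tag = item.find("tag")
        if tag is None or not item.text:
            continue  # skip items with no phrase or no tag
        phrase_tags[item.text.strip().lower()] = tag.text.strip()
    return phrase_tags


def recognize(utterance, phrase_tags):
    """Return the tag of the first grammar phrase found in the utterance, or None."""
    spoken = utterance.lower()
    for phrase, tag in phrase_tags.items():
        if phrase in spoken:
            return tag
    return None
```

With this grammar, `recognize("Please go to the home page", load_phrase_tags(GRAMMAR_XML))` yields the tag `HOME`, which application code could then map to an action. Anything outside the grammar yields `None`.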

Starting out, we viewed the process as quite simple and straightforward. Initially, we believed that we would deploy our Windows 8 Application and the Kinect sensor would read those tags directly into our application code. Unfortunately, the process was not this easy. Imagine a large brick wall between a Windows 8 Style Application and the Kinect sensor so that no direct connection could be made. Eventually, we discovered a way to get past this wall: we would implement a Kinect WPF application alongside our Windows 8 Style application. In order to communicate between the two technologies, a custom protocol (“andrew://”) would be registered with a protocol handler on the Windows 8 Application side. The Kinect WPF application would read a tag and kick off a process with some sort of path that the Windows 8 Application would then parse and react to accordingly.

Diagram Demonstrating the End Solution

For example, if someone said something like “Please go to the home page”, the Kinect WPF application would start this process: “andrew://coveapp/navigateto/home”. The Windows 8 Application would then understand “navigateto” and “home” and would go to that relative path.
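The parsing step on the receiving side can be sketched as follows. This is an illustration in Python, not the team's C# handler. The scheme and host come from the example URI above, while `parse_command`, `handle`, and the `pages` lookup are hypothetical names, and the two-segment path shape is an assumption.

```python
from urllib.parse import urlsplit

# Scheme and host taken from the example URI in the post.
SCHEME = "andrew"
HOST = "coveapp"


def parse_command(uri):
    """Split a custom-protocol URI into (action, target), or return None if it is not ours."""
    parts = urlsplit(uri)
    if parts.scheme != SCHEME or parts.netloc != HOST:
        return None
    segments = [s for s in parts.path.split("/") if s]
    if len(segments) != 2:
        return None  # assumed shape: /<action>/<target>
    action, target = segments
    return action, target


def handle(uri, pages):
    """Hypothetical dispatcher: look up the target page for a 'navigateto' command."""
    command = parse_command(uri)
    if command is None:
        return None
    action, target = command
    if action == "navigateto":
        return pages.get(target)
    return None
```

For instance, `parse_command("andrew://coveapp/navigateto/home")` gives `("navigateto", "home")`, and a URI with a different scheme or host is ignored.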

At the end of the day, it is somewhat unfortunate that we had to report back that there is no straightforward way to have a Kinect communicate with a Windows 8 Application. However, it was a great learning experience and called for an interesting and unique solution to a problem that other developers may have in the future. Given more time, we would have liked to implement object and facial recognition with our device, so that a user could simply hold up an object to the Kinect sensor and our app would recognize the user and log him or her into the system.

Securely Logging In

Additionally, we wanted users to be able to log in to this application in order to take advantage of the things that could be personalized. We wanted to make the login experience similar to logging into Windows. I modeled the UI after the Windows 8 login page, but the backend was completely new and foreign. We knew that we did not want users’ passwords to be stored directly in the cloud. We wanted to hash the passwords but were wary of creating our own hashing function that could potentially be reversed by a hacker.

After researching multiple hashing algorithms, we ultimately chose the SHA256 hashing algorithm within the System.Security.Cryptography namespace, which turns any string into a hash that supposedly cannot be reversed. Upon new account creation, the private information is immediately hashed and uploaded to the cloud, eliminating the need for the plaintext password to ever be stored. We also researched the possibility of using salts. Essentially, a salt is extra data combined with a password before it is hashed; we looked at deriving it from other personal information about the user, such as zip code or preference settings. Ultimately, we decided not to move forward with salts because we did not have enough information to make an educated decision.
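As a rough illustration of the two options discussed here, the sketch below uses Python's `hashlib` rather than the .NET classes the team used. `hash_password` mirrors the unsalted SHA-256 approach described above. `hash_password_salted` and `verify` show the salted variant that was considered but not adopted. They use a random salt, which is an assumption of this sketch rather than the user-derived data mentioned in the post. All function names are hypothetical.

```python
import hashlib
import hmac
import os


def hash_password(password):
    """Unsalted SHA-256 of the password as a hex string."""
    return hashlib.sha256(password.encode("utf-8")).hexdigest()


def hash_password_salted(password, salt=None):
    """Salted variant: prepend random bytes to the password before hashing."""
    if salt is None:
        salt = os.urandom(16)  # assumption: 16 random bytes per account
    digest = hashlib.sha256(salt + password.encode("utf-8")).hexdigest()
    return salt, digest


def verify(password, salt, expected_digest):
    """Recompute the salted hash and compare it in constant time."""
    _, digest = hash_password_salted(password, salt)
    return hmac.compare_digest(digest, expected_digest)
```

Only the salt and the digest would need to be stored for each account. With a random salt, two users who pick the same password end up with different stored hashes, which the unsalted version cannot offer.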

Connecting to SQL in the Cloud

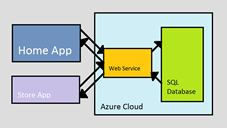

This diagram demonstrates the path connecting both our Home application and Store application to the Azure SQL Database

Going back to the above-mentioned goal of integrating other elements of the Microsoft ecosystem, and of allowing seamless integration among the tasks that users face daily, we decided to incorporate the Azure cloud. Going forward with Azure connectivity was a hard decision to make because of the associated cons and risks, the most serious being the impossibility of directly connecting a Windows 8 application to any database, much less a database in the cloud. We knew that we needed to create an intermediate layer to allow this connection to be made. After exploring a few different methods (REST, XML, jQuery, SOAP), we ultimately decided to design a SOAP service that was also published to the Azure cloud. The SOAP service was the best choice for our project because it allowed us to maintain the state of the objects we were initializing or reading data from, and most of our code base was centered on personalized classes.

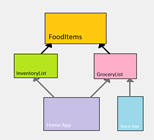

Diagram of our SQL Tables

Regarding our database table structure and class layout, in order to maximize our resources and not store any unnecessary data, we decided to create a master table, "FoodItems", that stored the essential information about the food items in both the inventory list and the grocery list. This also allowed us to have preconfigured data that the application would ship with, so that users would not need to "ramp up" on how to fully utilize their device. The tables "InventoryList" and "GroceryList" stored very minimal information and had a foreign key that corresponded to a "FoodItem" within the master table. As the diagrams above show, both the Home Application and the Store Application grabbed data from the grocery list, and the back end code then returned the needed information from the master table. The Home Application was also able to grab similar information from the Inventory List. This allowed the user to store a Grocery List from within their home and pull up this very same list when they logged into the Kiosk application.
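The master-table layout can be sketched with an in-memory SQLite database standing in for the Azure SQL Database. The table names come from the post. The column names, the sample rows, and the `grocery_list` helper are assumptions made for illustration.

```python
import sqlite3

# In-memory SQLite stands in for the Azure SQL Database; column names are assumed.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE FoodItems (
    Id INTEGER PRIMARY KEY,
    Name TEXT NOT NULL,
    Category TEXT NOT NULL
);
CREATE TABLE GroceryList (
    UserId INTEGER NOT NULL,
    FoodItemId INTEGER NOT NULL REFERENCES FoodItems(Id)
);
CREATE TABLE InventoryList (
    UserId INTEGER NOT NULL,
    FoodItemId INTEGER NOT NULL REFERENCES FoodItems(Id),
    Location TEXT NOT NULL
);
""")

# Preconfigured master data plus one user's lists (sample values).
conn.executemany(
    "INSERT INTO FoodItems (Id, Name, Category) VALUES (?, ?, ?)",
    [(1, "Milk", "Dairy"), (2, "Bread", "Bakery")],
)
conn.executemany(
    "INSERT INTO GroceryList (UserId, FoodItemId) VALUES (?, ?)",
    [(42, 1), (42, 2)],
)
conn.execute(
    "INSERT INTO InventoryList (UserId, FoodItemId, Location) VALUES (?, ?, ?)",
    (42, 1, "fridge"),
)


def grocery_list(db, user_id):
    """Join a user's grocery list against the master table to get item details."""
    rows = db.execute(
        "SELECT f.Name, f.Category FROM GroceryList g "
        "JOIN FoodItems f ON f.Id = g.FoodItemId "
        "WHERE g.UserId = ? ORDER BY f.Name",
        (user_id,),
    )
    return rows.fetchall()
```

Because the list tables hold only keys, the home and store applications can run the same query and see the same items, while item details live in one place.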

The Music That Never Played

Another interesting aspect of the project was the implementation of media playing capabilities in our application. Initially, this back end was coded in C++ to provide a new learning experience. However, due to constraining factors (the most obvious being that the rest of the application was coded in C#), we permanently switched to C# for the backend of this functionality. As a team we had high hopes for this: we created the idea of “Moods”, which would be customizable with personal settings such as lighting, temperature, music, etc. We decided that it made the most sense to start with the actual media playing aspect of Moods.

We quickly ran into several obstacles. MP3 files have something called an ID3 tag associated with them that holds all of the metadata such as Artist, Album, Year, etc. The real trouble is finding that tag in the file, then reading and displaying it. There are several versions of ID3 tags: some sit at the start of a file and some occupy the last 128 bytes, and not all MP3 files use the same version. To solve this problem we essentially had to search the files for the starting string “TAG”, which marks the beginning of the tag. After tackling this issue, we needed to implement an efficient way to save the various Moods that people created. The next challenge was actually playing these files in a Windows 8 Application. Unfortunately, it was at this point that this functionality had to be cut from our demo version.
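A minimal sketch of reading the trailing tag is shown below, in Python rather than the team's C#. It handles only the ID3v1 layout, a fixed 128-byte block at the end of the file that begins with “TAG”. Tags stored at the start of a file (ID3v2, which begins with “ID3”) are out of scope. The `parse_id3v1` and `_text` names are hypothetical.

```python
import struct

# ID3v1 layout: "TAG" + title(30) + artist(30) + album(30) + year(4) + comment(30) + genre(1)
ID3V1 = struct.Struct("3s30s30s30s4s30sB")


def _text(raw):
    """Decode a fixed-width field, dropping NUL and space padding."""
    return raw.split(b"\x00", 1)[0].decode("latin-1").strip()


def parse_id3v1(data):
    """Return ID3v1 metadata from the last 128 bytes of an MP3's bytes, or None if absent."""
    if len(data) < ID3V1.size:
        return None
    fields = ID3V1.unpack(data[-ID3V1.size:])
    marker, title, artist, album, year, comment, genre = fields
    if marker != b"TAG":
        return None  # no ID3v1 tag at the end of this file
    return {
        "title": _text(title),
        "artist": _text(artist),
        "album": _text(album),
        "year": _text(year),
        "comment": _text(comment),
        "genre": genre,
    }
```

To use it on a real file, read the file in binary mode and pass the bytes in, for example `parse_id3v1(open(path, "rb").read())`, where `path` is whatever MP3 you have on hand.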

Testing the Application

One of the few things we were able to start on in the test role was a test plan. The four main features that we wanted to focus on in test were Voice Navigation, Inventory, Grocery List, and the UI. The UI testing would have been very basic automation that checked that the UI was still functioning correctly after each addition or change we made. Voice Navigation would have been a little more challenging because we would have had to find ways to record our voices as a controlled variable for each test of whether voice was working. We would also have had to use different speech methods to make sure our application could handle unexpected sounds. Since the Grocery List and Inventory both pulled from Azure, we would have wanted to test the security to make sure no one else could access our databases. We would also have wanted to make sure our app could handle having no items as well as many items in both Inventory and Grocery List. Some of the stress testing would have been to see if we could someday have media playing in the background alongside whatever the user is currently doing. Overall, everything should continue to work even under average usage over a long period of time. This would be a good area to use automation.

A Walk Through the App

When you first launch the app you’ll come to the lock screen that shows you the date, time, and your local weather.

You can then use your voice to ask the app to go to the login page. From there you will see the homepage with “My Connections.” These are all of the different areas in the application where you can control your own experience.

In “My Kitchen”, you can access your kitchen inventory.

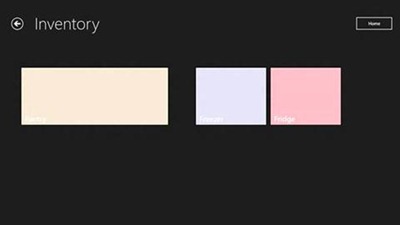

In inventory, there are three areas where you can look for items in your kitchen: the pantry, fridge, and freezer.

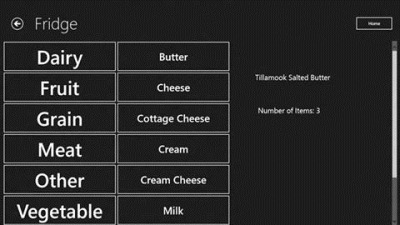

When you select one, you are taken to the corresponding page, where you can search first by category, then by type, and then see your individual items.

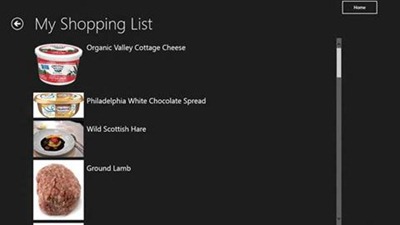

The other part of “My Kitchen” is the grocery list. You can look at the items that you want to buy at the store. When you go to the store and log in at the embedded device there, you’ll be able to find the same grocery list. The store device will also be where you can pick up different coupons for using the application.

A note from J.T. – We hope to be able to take parts of the application and code that the Explorer Interns wrote and share those as sample and showcase applications for how to use Windows 8 Applications on Windows Embedded. Stay tuned for more details on that.