Using UI Automation to enable web browsing with a switch device

This post describes how I used the Windows UI Automation (UIA) API in a Windows Forms app, in order to add support for web browsing with a switch device to one of my assistive technology apps.

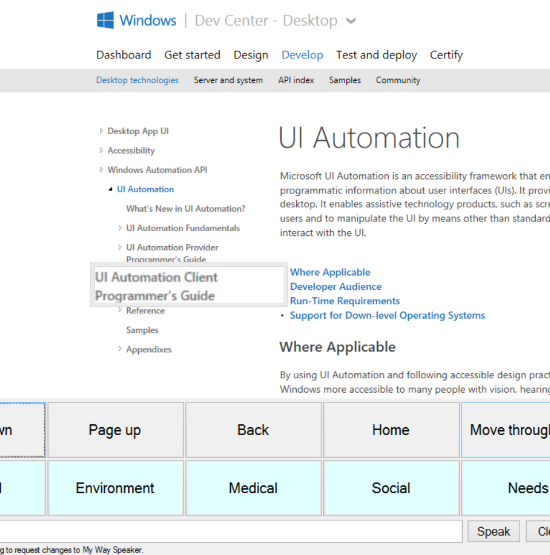

Figure 1: Scan highlighting of a hyperlink on a web page.

Background

A couple of months ago I was contacted by a Rehabilitation Engineer who was considering using my 8 Way Speaker app to help a couple of people with severe disabilities. One of these people has MND/ALS and the other has Cerebral Palsy. But that app can't leverage 3rd party text-to-speech (TTS) voices installed on their devices, and that was blocking them from using the app. So I began work on a desktop app that might be of interest to the Rehabilitation Engineer and the people he works with, and which could use the additional installed voices that they have available. The new app is called My Way Speaker.

I first added a grid of buttons showing words and phrases, such that those words and phrases could be spoken using the default voice set up on the device. The buttons could be invoked through touch, mouse or keyboard input. After I released the first version of My Way Speaker, the Rehabilitation Engineer told me he’d also tested the app with two assistive technology tools which allow alternative forms of input (including the free Camera Mouse head tracking app), and that both had worked well.

I then needed to prioritize how I incorporated the other features of my 8 Way Speaker app into my new app, such that I was focusing first on the features that were of most interest to the Rehabilitation Engineer. The engineer’s feedback has turned out to be invaluable. He’s helped me understand usage scenarios for some of my earlier app’s features that I’d not even considered. For example, when I built the “Presentation Mode” feature for my 8 Way Speaker app, I was considering public speaking. (There’s a stand-up comic in the UK who can’t physically speak, so he uses a mobile device to deliver his speech.) And while I was considering scenarios around presentations, lectures and routines, the engineer pointed out that the app has potential to be useful to users delivering a set of questions to ask their doctor or when visiting a business. Or as he pointed out, maybe the user could build up a set of passages to speak when accessing services over the phone.

So I got lots of helpful feedback on features of the 8 Way Speaker app relating to Presentation Mode, multiple boards, and support for very small touch movements at the screen, but I learnt that what I really needed to work on next was support for switch device input. This led to me adding a basic scanning feature, controlled with a single switch device. Again, I got some great feedback on ways that the switch device input could be made more efficient, and all being well I’ll get to implement those before too long.

But some of the feedback which struck me most related to the human side of all this. The engineer pointed out that when people with MND/ALS are introduced to switch devices, this might be far too late for there to be any significant benefit. There’s a learning curve when becoming familiar with a new way of working at the computer, and depending on how familiar the person is with the various technologies involved, this can be a very frustrating and ultimately fruitless exercise.

I saw this first hand when my mum had MND/ALS and she tried using a foot switch to control a device. For a number of reasons this didn’t work out, and so now as I work on a new switch-controlled app, I wonder if there’s anything I can do to increase the chances of the app being useful in practice.

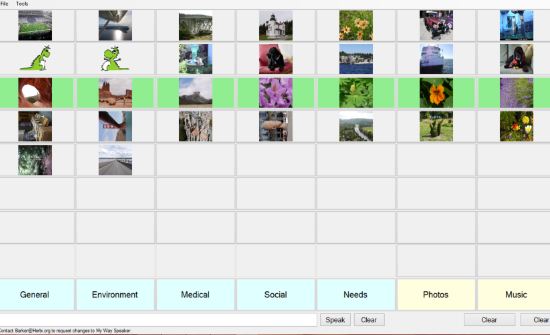

One thing I have wondered is whether making the app more personal to the user might, in some cases, encourage them to stick at learning how to use the hardware and software, where traditionally they would feel it’s all too much to deal with. Perhaps if the user could use a switch device to view photos of family and friends, and places of interest, that might make the technology more engaging. And as they become familiar with the technology, they then might also use the app to speak for them. Similarly, if they could use a switch device to have their favourite music played by the app, maybe that would also encourage them to become familiar with the technologies.

So I added features to the app for viewing photos and listening to music. The photo viewing feature was straightforward to add, simply by setting a picture file to be the BackgroundImage property on buttons or a form, and the music feature used the handy Windows Media Player (WMP) ActiveX Control. (Who knows, if people request it, maybe I’ll update the app to have the WMP Control show video too.) The user can use a switch device to move between these features and the speech features. The first version of the photos and music features requires someone to set up the photo and music files for use in the app, but depending on the feedback I get, maybe I can update the app to have photos viewed in social media sites on the web.

A short demo of a switch device being used to view photos and listen to music is at https://www.youtube.com/watch?v=DAvXKKD2Eyc.

Figure 2: Scan highlighting moving through a set of photos in the My Way Speaker app.

Now, having done all that, I couldn’t stop thinking about what people mostly do at a computer, and that’s browse the web. Could I add a feature that would allow a switch device to be used to browse the web from inside the My Way Speaker app? The user could then move between boards for speech and web browsing, and only have to become familiar with one form of input.

Adding support to have web pages shown in the app was easy, as all I had to do was add a WebBrowser control. But how could I build a fairly intuitive feature for browsing the web with a switch device? This is where UI Automation comes in.

Scanning through hyperlinks on a web page, and invoking them

There are probably a few ways to have a switch device control input at a web page. For example, a while back I built an app that used UIA to find invokable elements on a web page, and the app would show a number beside those elements. That number could then be input with an on-screen keyboard (OSK), to invoke the element. Screenshots at https://herbi.org/HerbiClicker/HerbiClicker.htm show the Windows OSK in scan mode being used to invoke the element.

But for my new My Way Speaker app, I thought it might be best to have a scan highlight move through the hyperlinks. The user would anticipate the highlight reaching a link, in the same way they anticipate reaching a button elsewhere in the app for speech. So the next question is, how do I get access to the hyperlinks shown on the page in order to highlight them and programmatically invoke them?

And as it happens, all I had to do was turn to one of my own MSDN UIA samples, “UI Automation Client API C# sample (hyperlink processor)”, https://code.msdn.microsoft.com/Windows-7-UI-Automation-0625f55e. This sample shows how to use UIA to get a list of hyperlinks from a browser, and programmatically invoke those hyperlinks. All I had to do was copy across a bunch of code from my sample, stick it in my new app, and hey presto – much of the work was done. I’ll not go into details here on what that sample code does, as the sample’s jam-packed full of comments and you can read the details there.

One important decision I made is that I didn’t pull across all the thread management code that the sample has. That sample runs on Windows 7 as well as later versions of Windows. In Windows 7, UIA had certain constraints around threading, which meant that in some situations, the UIA client calls needed to run on a background thread. I think that constraint mostly related to UIA events, which my new app doesn’t get involved with. So I decided not to copy across the threading code, and instead leave things as simple as possible in my new app. I’d like my app to work great on both Windows 7 and later versions of Windows, but so far I’ve only tested it on Windows 8.1. If it turns out that I do need to add threading code in order for it to work well on Windows 7, I’ll do that.

By copying across the sample code, I achieved three things…

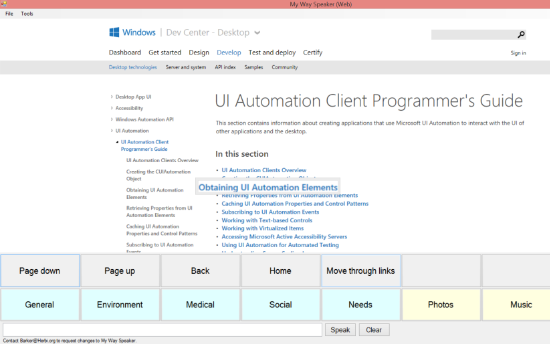

1. Find the hyperlinks shown on the page. A very useful aspect of the code I copied is that I end up with a list of hyperlinks that intersect with the view of the page. That is, I don’t end up highlighting hyperlinks that are off the top or bottom of the section of the page I’m viewing. (Unfortunately I do sometimes get hyperlinks that are in the view but which have no visuals. I suspect I can prevent those from being highlighted, but I’ve not got round to that yet.)

2. Highlight the links. I do this in the same way that the sample does, and use the Windows Magnification API to increase the size of the hyperlink visually on the screen. I set the magnification to x1.5, but I’ll make that configurable if I get feedback requesting that.

3. Invoke the hyperlink programmatically. This is done exactly as the sample does it.

Figure 3: Scan highlighting moving through all the hyperlinks shown on a web page.
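
As a rough illustration of steps 1 and 3 above, here is a minimal sketch of finding the hyperlinks that intersect the browser's view and invoking one of them. This is not the code from the MSDN sample. The class and method names are hypothetical, the `UIAutomationClient` namespace is an assumption that depends on how the interop assembly for UIAutomationCore.dll was generated, and the numeric constants are the documented UIA identifier values declared locally. The highlighting in step 2 is not shown.

```csharp
using System.Collections.Generic;
using UIAutomationClient; // Assumed namespace of the generated UIA interop assembly.

// Hypothetical helper, for illustration only.
static class HyperlinkScanSketch
{
    // Documented UIA identifier values, declared locally.
    const int UIA_InvokePatternId = 10000;
    const int UIA_BoundingRectanglePropertyId = 30001;
    const int UIA_ControlTypePropertyId = 30003;
    const int UIA_HyperlinkControlTypeId = 50005;

    // Returns the hyperlinks beneath the browser element whose bounding
    // rectangles overlap the browser's own bounding rectangle.
    public static List<IUIAutomationElement> FindHyperlinksInView(
        IUIAutomation automation, IUIAutomationElement elementBrowser)
    {
        // Cache what we need later, to avoid extra cross-proc calls.
        IUIAutomationCacheRequest cacheRequest = automation.CreateCacheRequest();
        cacheRequest.AddProperty(UIA_BoundingRectanglePropertyId);
        cacheRequest.AddPattern(UIA_InvokePatternId);

        // Only hyperlinks in the UIA Control view.
        IUIAutomationCondition condition = automation.CreateAndCondition(
            automation.ControlViewCondition,
            automation.CreatePropertyCondition(
                UIA_ControlTypePropertyId, UIA_HyperlinkControlTypeId));

        IUIAutomationElementArray found = elementBrowser.FindAllBuildCache(
            TreeScope.TreeScope_Descendants, condition, cacheRequest);

        tagRECT view = elementBrowser.CurrentBoundingRectangle;
        var linksInView = new List<IUIAutomationElement>();

        for (int i = 0; found != null && i < found.Length; i++)
        {
            IUIAutomationElement link = found.GetElement(i);
            tagRECT r = link.CachedBoundingRectangle;

            // Keep the link only if its rectangle overlaps the view.
            bool intersects = r.left < view.right && r.right > view.left &&
                              r.top < view.bottom && r.bottom > view.top;
            if (intersects)
            {
                linksInView.Add(link);
            }
        }

        return linksInView;
    }

    // Invokes a hyperlink through the Invoke pattern cached above.
    public static void InvokeLink(IUIAutomationElement link)
    {
        var pattern =
            link.GetCachedPattern(UIA_InvokePatternId) as IUIAutomationInvokePattern;
        if (pattern != null)
        {
            pattern.Invoke();
        }
    }
}
```

A scan timer could then step through the returned list, highlight the current link, and call `InvokeLink` when the switch is pressed.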

Scrolling up and down the web page with UI Automation

Now that I had support for finding, highlighting and invoking the hyperlinks, I integrated this with the existing scan features in the app. This means the same timer is used for scanning both the speech-related buttons and the hyperlinks on the web page. I also added a few buttons below the web browser, such that the scan of the hyperlinks could be triggered through switch input. It seemed that the only other critically important buttons that would be needed would be Home, Back, Page Up and Page Down. The Home button simply navigates the WebBrowser to a URL specified in the app’s options, and the Back button triggers a call to the WebBrowser’s GoBack() function.
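
For illustration, the Home and Back behaviour could be wired up roughly as follows. This is a minimal sketch, not the app's code: the form, the button layout and the `homeUrl` field are hypothetical stand-ins for the app's own options and layout.

```csharp
using System;
using System.Windows.Forms;

// Hypothetical form; names are illustrative and not taken from the app.
public class BrowserBoardSketch : Form
{
    private readonly WebBrowser webBrowser = new WebBrowser { Dock = DockStyle.Fill };
    private readonly string homeUrl; // Assumed to come from the app's options.

    public BrowserBoardSketch(string homeUrl)
    {
        this.homeUrl = homeUrl;

        var buttonHome = new Button { Text = "Home", Dock = DockStyle.Bottom };
        var buttonBack = new Button { Text = "Back", Dock = DockStyle.Bottom };
        buttonHome.Click += (sender, e) => GoHome();
        buttonBack.Click += (sender, e) => GoBackIfPossible();

        Controls.Add(webBrowser);
        Controls.Add(buttonBack);
        Controls.Add(buttonHome);
    }

    // Navigate to the configured home page.
    public void GoHome()
    {
        webBrowser.Navigate(homeUrl);
    }

    // Only go back when there is a previous page in the history.
    public void GoBackIfPossible()
    {
        if (webBrowser.CanGoBack)
        {
            webBrowser.GoBack();
        }
    }
}
```

Because the buttons are ordinary controls, a scan highlight that reaches one can call the same methods that the Click handlers do.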

However, support for Page Up and Page Down turned out to be more interesting than I’d expected. Maybe I missed it, but I didn’t see anything off the WebBrowser control relating to paging. When I looked up how to do this on the web, I found discussions around simulating paging-related keyboard input and sending that to the control. So I tried taking that approach, but it didn’t work well at all for me because keyboard focus wasn’t always where I wanted it to be, no matter how hard I tried to control it. But then it occurred to me that I should never be messing with simulated keyboard input if I can robustly achieve what I need through UI Automation.

I ran the Inspect SDK tool to verify that there was an element in the UIA tree beneath the WebBrowser control which declared itself to support the UIA Scroll pattern, and then I wrote the code below to programmatically access that element.

    // Get the UIA element representing the WebBrowser control window.
    IUIAutomationElement elementBrowser = _automation.ElementFromHandle(this.webBrowser.Handle);
    if (elementBrowser != null)
    {
        // Cache the reference to the Scroll pattern, to avoid
        // a cross-proc call later if we do scroll.
        IUIAutomationCacheRequest cacheRequest = _automation.CreateCacheRequest();
        cacheRequest.AddPattern(_patternIdScroll);

        // Only search for an element in the UIA Control view.
        IUIAutomationCondition conditionControlView = _automation.ControlViewCondition;

        // Also only search for an element that can scroll.
        bool conditionScrollableValue = true;
        IUIAutomationCondition conditionScrollable = _automation.CreatePropertyCondition(
            _patternIdValueIsScrollPatternAvailablePropertyId, conditionScrollableValue);

        // Combine the above two conditions.
        IUIAutomationCondition condition = _automation.CreateAndCondition(
            conditionControlView, conditionScrollable);

        // Now find the first scrollable element beneath the WebBrowser control window.
        _scrollableElement = elementBrowser.FindFirstBuildCache(
            TreeScope.TreeScope_Descendants, condition, cacheRequest);
    }

Note that I only call that code after the dust has settled following a page being loaded into the WebBrowser. I registered for DocumentCompleted events, which get raised when a page has been loaded, but I found that a bunch of these events get raised for the loading of a single page. So I wait a little time after the last of these events has arrived before running the code above to get the scrollable element.
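
One way to wait for the last DocumentCompleted event is to restart a timer each time the event arrives, and only act when the timer finally ticks. The sketch below shows that idea under stated assumptions: the class name, the `PageSettled` event and the interval parameter are all hypothetical, and the app itself may do this differently.

```csharp
using System;
using System.Windows.Forms;

// Hypothetical helper: raises PageSettled once no DocumentCompleted
// event has arrived for the given number of milliseconds.
public class SettledPageWatcher
{
    private readonly Timer settleTimer = new Timer();

    public event EventHandler PageSettled;

    public SettledPageWatcher(WebBrowser browser, int settleMilliseconds)
    {
        settleTimer.Interval = settleMilliseconds;
        settleTimer.Tick += OnSettleTimerTick;
        browser.DocumentCompleted += OnDocumentCompleted;
    }

    private void OnDocumentCompleted(object sender, WebBrowserDocumentCompletedEventArgs e)
    {
        // Restart the countdown every time another event arrives.
        settleTimer.Stop();
        settleTimer.Start();
    }

    private void OnSettleTimerTick(object sender, EventArgs e)
    {
        // No further events arrived within the interval, so stop the
        // timer and tell listeners the page has settled.
        settleTimer.Stop();

        EventHandler handler = PageSettled;
        if (handler != null)
        {
            handler(this, EventArgs.Empty);
        }
    }
}
```

A form could create a `SettledPageWatcher` for its WebBrowser and run the scrollable-element lookup in its `PageSettled` handler. The right interval is a judgment call, since too short a wait risks running before the page has finished loading.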

And then later when the user scans to and invokes the Page Up or Page Down buttons, I simply do the following:

    int iPatternId = _patternIdScroll;

    // Get the reference to the scroll pattern that was cached
    // earlier when we found the scrollable element.
    IUIAutomationScrollPattern pattern =
        (IUIAutomationScrollPattern)_scrollableElement.GetCachedPattern(iPatternId);
    if (pattern != null)
    {
        // Now scroll up or scroll down.
        pattern.Scroll(ScrollAmount.ScrollAmount_NoAmount,
                       (scrollDown ?
                           ScrollAmount.ScrollAmount_LargeIncrement :
                           ScrollAmount.ScrollAmount_LargeDecrement));
    }

And when I run the above code, I don’t care where keyboard focus is, and that’s the way I like it.

A short demo of a switch device being used to browse the web is at https://www.youtube.com/watch?v=PSqJqpxBXiY.

Conclusion

These new features in the My Way Speaker app could be improved in all sorts of ways. Today the app has many constraints (and bugs) which impact the app’s usability and efficiency. But I have to say that I’ve found this project to be incredibly exciting. I’m hoping that the new features are usable enough to demonstrate the potential for a switch device user to have a similar experience in an app when browsing the web, viewing photos, listening to music and speaking.

I’m very grateful to the Rehabilitation Engineer and the people with MND/ALS and CP that he works with for the feedback they’ve given me so far. As I get more feedback from them and others over time, I do hope I can continue to improve this app and achieve the goal of building a multi-purpose app that’s useful and efficient for the switch device user.

As you learn how people near you might benefit from an assistive technology tool that you could build for them, don’t forget to consider how UI Automation might play a part in that.

Thanks,

Guy