Azure DevOps: CICD for Azure Data Factory

Introduction

Azure Data Factory (ADF) is a simple ETL/ELT processing service that requires no coding or maintenance. Because ADF is not yet a very mature product, it is updated frequently, which makes CICD one of the big challenges for developers and DevOps engineers. In this article, we will see how to implement CICD for ADF (V2) easily from Azure DevOps.

Existing CICD Process - ARM Template

Microsoft already provides clear documentation on how to implement CICD in Azure DevOps, along with best practices, which you can find here. This process mainly relies on ARM templates, but the method has some difficulties:

- We need to export the data factory as an ARM template every time there are major changes.

- Even for a small change, the whole infrastructure needs to be deployed again through ARM.

Best Practices for ADF(v2) CICD with Azure DevOps

- Integrate the Azure Repo (Git) with your Development (or Test) environment and not with Production. Currently, you are able to use both Git and Data Factory mode, but this will be disabled after Jul 31st, 2019.

- Don't hardcode any environment-specific values in your linked services or datasets. Use parameters from the pipeline to provide them at runtime.

- Use the same IR (Integration Runtime) name throughout all your environments.

- Don't include triggers in your deployment pipeline. If your triggers have environment-specific variables or parameters, deploying them with everything else becomes difficult, so always keep a separate pipeline to deploy your triggers.
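The advice about hardcoded values can be checked mechanically before a release. The following is only a minimal sketch: the function name `find_hardcoded`, the marker string `dev-sql`, and the sample linked service are all hypothetical, chosen for illustration. It walks a JSON definition and reports every string value that contains an environment-specific marker.

```python
from typing import Any, List


def find_hardcoded(node: Any, markers: List[str], path: str = "$") -> List[str]:
    """Return the JSON paths of string values that contain any marker."""
    hits: List[str] = []
    if isinstance(node, dict):
        for key, value in node.items():
            hits.extend(find_hardcoded(value, markers, f"{path}.{key}"))
    elif isinstance(node, list):
        for index, value in enumerate(node):
            hits.extend(find_hardcoded(value, markers, f"{path}[{index}]"))
    elif isinstance(node, str):
        if any(marker in node for marker in markers):
            hits.append(path)
    return hits


# Hypothetical linked service with a development server name baked in.
linked_service = {
    "name": "SqlLinkedService",
    "properties": {
        "type": "AzureSqlDatabase",
        "typeProperties": {
            "connectionString": "Server=dev-sql.example.net;Database=sales"
        },
    },
}

print(find_hardcoded(linked_service, ["dev-sql"]))
```

A check like this could run in the build so that a definition carrying a development-only value is caught before it reaches a higher environment.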

New CICD Process - JSON Based Deployment

Another, easier way to do CICD for ADF is to deploy the JSON files directly. The Azure DevOps task for this was created by Jan Pieter Posthuma and can be found on GitHub.

The idea is that every resource we create in ADF is stored as a JSON file behind the scenes, and we can view that JSON code. We use these JSON files to deploy our ADF to a higher environment.

Let's see how we can do this.

Install the Extension

First of all, we need to install the extension from the Azure DevOps Marketplace using the link below:

https://marketplace.visualstudio.com/items?itemName=liprec.vsts-publish-adf

ADF + Git Repo

In the development environment (not in production), we need to set up the code repository with Azure Repos or GitHub.

Read the full documentation on how to integrate your ADF with a Git repo.

Once your ADF is integrated with Git, you should see folders such as dataset, integration runtime, linked service, pipeline, and trigger in your Git repo.

Continuous Integration

The CI step is very simple: copy each folder into the corresponding folder of the build artifact.
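In an Azure DevOps build this is normally done with a file-copy step, but the logic can be sketched in a few lines of Python. This is an illustration only: the function name `stage_adf_artifact` is hypothetical, and the folder names in `ADF_FOLDERS` are assumptions that should be adjusted to match what your repository actually contains.

```python
import shutil
from pathlib import Path
from typing import Dict

# Assumed folder names; check them against your own Git repo.
ADF_FOLDERS = ["linkedService", "dataset", "pipeline", "trigger", "integrationRuntime"]


def stage_adf_artifact(repo_root: Path, artifact_dir: Path) -> Dict[str, int]:
    """Copy each ADF folder found under repo_root into artifact_dir.

    Returns the number of JSON files in each staged folder.
    """
    staged: Dict[str, int] = {}
    for folder in ADF_FOLDERS:
        source = repo_root / folder
        if not source.is_dir():
            continue  # not every factory has every resource type
        target = artifact_dir / folder
        # dirs_exist_ok (Python 3.8+) lets the step be re-run safely.
        shutil.copytree(source, target, dirs_exist_ok=True)
        staged[folder] = len(list(target.glob("*.json")))
    return staged
```

Keeping one artifact folder per resource type means the release can later point each deployment input at its own path.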

Continuous Deployment

The deployment is also very easy; we follow the four steps below.

Clean-up - Delete all the existing linked services, datasets, and pipelines. The clean-up is needed because this task cannot handle incremental changes or deletions. If you delete a resource (dataset, pipeline, or linked service) from the repo after it has already been deployed, and then run the CICD without the clean-up, the deletion will not be reflected in your ADF.

Stop all the Triggers - If any triggers are active, the deployment will fail.

Deploy the respective JSON files.

Start all the Triggers - Post-deployment.
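The order of these steps can be written down as a small plan builder. This is a sketch under assumptions, not the extension's behaviour: the function name `build_release_plan` and the action labels are hypothetical, and the order within each phase follows the usual dependency chain (pipelines reference datasets, and datasets reference linked services).

```python
from typing import List, Tuple

# Dependency order for deployment: linked services first, pipelines last.
DEPLOY_ORDER = ["linkedService", "dataset", "pipeline"]


def build_release_plan(active_triggers: List[str]) -> List[Tuple[str, str]]:
    """Return the ordered (action, target) steps for one release."""
    plan: List[Tuple[str, str]] = []
    # Clean-up: delete dependents first so nothing references a removed item.
    for kind in reversed(DEPLOY_ORDER):
        plan.append(("delete", kind))
    # Active triggers would make the deployment fail, so stop them first.
    for trigger in active_triggers:
        plan.append(("stop_trigger", trigger))
    # Deploy in dependency order.
    for kind in DEPLOY_ORDER:
        plan.append(("deploy", kind))
    # Restart the triggers once everything is in place.
    for trigger in active_triggers:
        plan.append(("start_trigger", trigger))
    return plan


for step in build_release_plan(["DailyTrigger"]):
    print(step)
```

Each tuple corresponds to one task in the release; the trigger name `DailyTrigger` is only an example.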

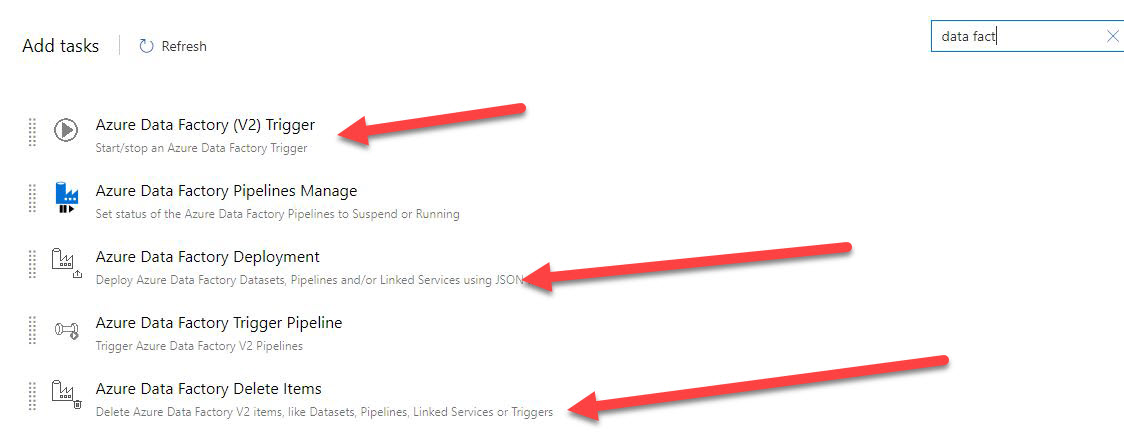

Tasks Required

Release Tasks:

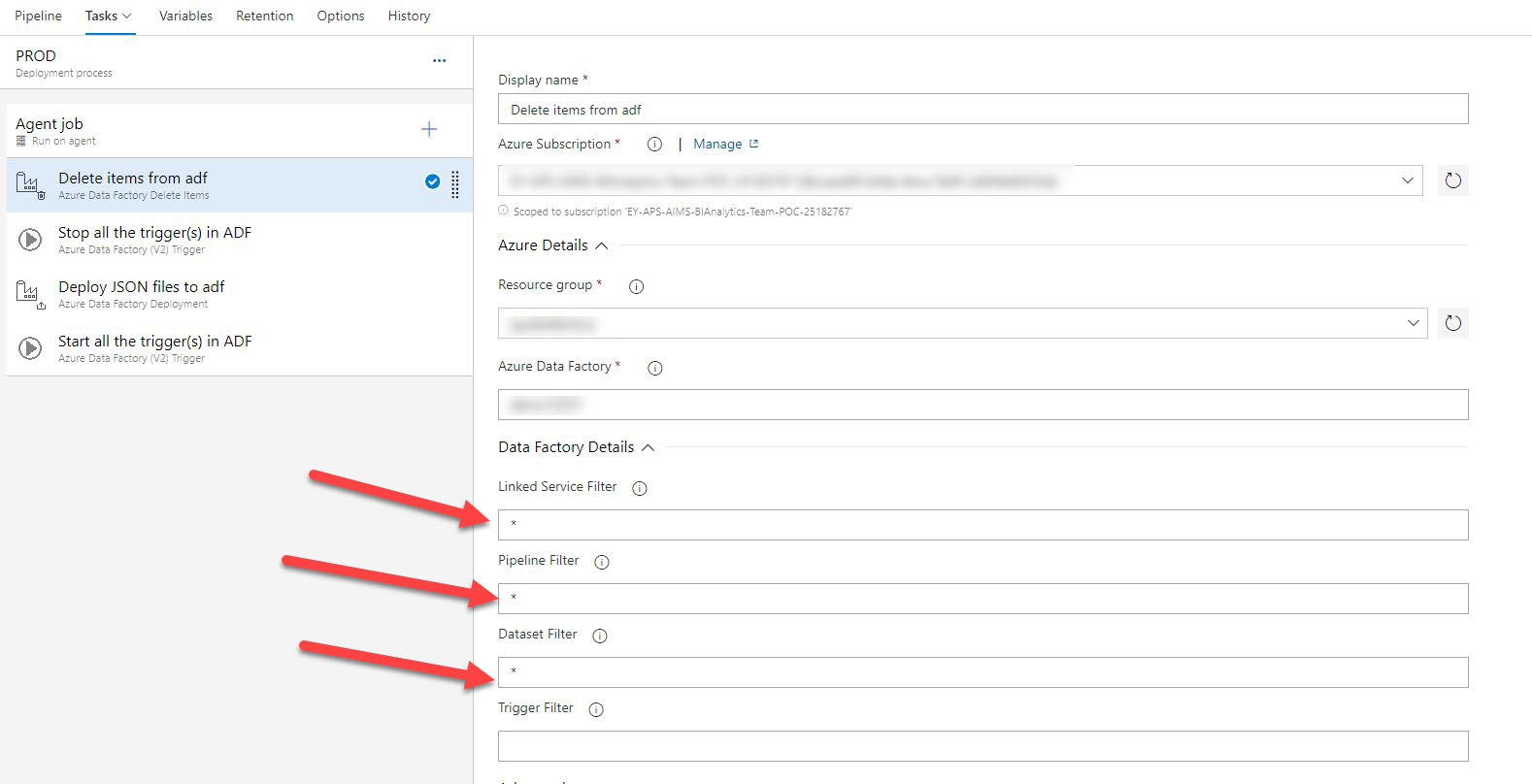

Add the Azure Data Factory Delete Items task and enter * in the Linked Service Filter, Pipeline Filter, and Data Set Filter fields, because we are going to clean up all the existing code in the ADF before our deployment.

Delete Items

Stopping Triggers

Deploy JSON files

Deploy all the JSON files from respective folders.

Starting all the triggers

Note

Here I've skipped the deployment of triggers because it depends purely on the use case. If your triggers are not going to change, or do not contain any environment-specific parameters, you can include them in your deployment. However, if they contain parameters that need to be passed dynamically, you need a separate pipeline for that.
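For the second case, a separate trigger pipeline could rewrite the parameters before deploying. The sketch below is an assumption-laden illustration: the function name `apply_trigger_parameters`, the sample trigger, and the parameter `storageAccount` are hypothetical, and the JSON shape (a `pipelines` list whose entries carry a `parameters` object) should be checked against your own trigger files.

```python
import copy
from typing import Any, Dict


def apply_trigger_parameters(
    trigger: Dict[str, Any], overrides: Dict[str, Any]
) -> Dict[str, Any]:
    """Return a copy of the trigger with pipeline parameters overridden."""
    result = copy.deepcopy(trigger)  # leave the definition from the repo untouched
    for pipeline in result.get("properties", {}).get("pipelines", []):
        params = pipeline.setdefault("parameters", {})
        for name, value in overrides.items():
            if name in params:  # only change parameters the trigger already passes
                params[name] = value
    return result


# Hypothetical trigger as it might be stored in the development repo.
dev_trigger = {
    "name": "DailyTrigger",
    "properties": {
        "type": "ScheduleTrigger",
        "pipelines": [
            {
                "pipelineReference": {
                    "referenceName": "CopySales",
                    "type": "PipelineReference",
                },
                "parameters": {"storageAccount": "devstorage"},
            }
        ],
    },
}

prod_trigger = apply_trigger_parameters(dev_trigger, {"storageAccount": "prodstorage"})
```

The override values would come from the variables of the target stage, so the same trigger file can serve every environment.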

Conclusion

As ADF is evolving every day, this is currently the best and most efficient way of performing CICD. In the future, this may be simplified further into a single task.