Image captions (version 4.0)

Image captions in Image Analysis 4.0 are available through the Caption and Dense Captions features.

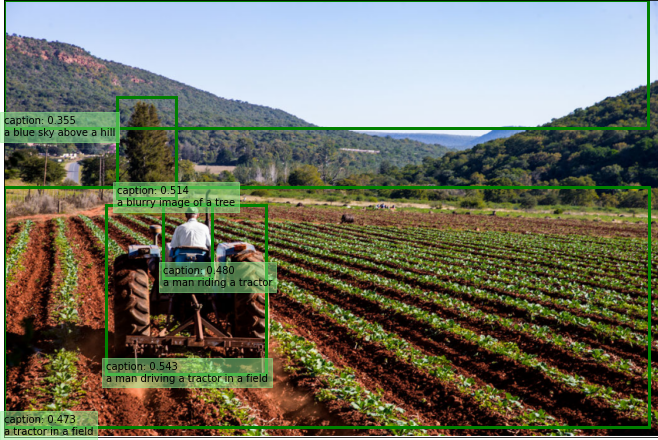

Caption generates a one-sentence description for all image contents. Dense Captions provides more detail by generating one-sentence descriptions of up to 10 regions of the image in addition to describing the whole image. Dense Captions also returns bounding box coordinates of the described image regions. Both these features use the latest groundbreaking Florence-based AI models.

At this time, image captioning is available in English only.

Important

Image captioning in Image Analysis 4.0 is only available in certain Azure data center regions: see Region availability. You must use a Vision resource located in one of these regions to get results from Caption and Dense Captions features.

If you have to use a Vision resource outside these regions to generate image captions, please use Image Analysis 3.2 which is available in all Azure AI Vision regions.

Try out the image captioning features quickly and easily in your browser using Vision Studio.

Gender-neutral captions

Captions contain gender terms ("man", "woman", "boy" and "girl") by default. You have the option to replace these terms with "person" in your results and receive gender-neutral captions. You can do so by setting the optional API request parameter, gender-neutral-caption to true in the request URL.

Caption and Dense Captions examples

The following JSON response illustrates what the Analysis 4.0 API returns when describing the example image based on its visual features.

"captions": [

{

"text": "a man pointing at a screen",

"confidence": 0.4891590476036072

}

]

Use the API

The image captioning feature is part of the Analyze Image API. Include Caption in the features query parameter. Then, when you get the full JSON response, parse the string for the contents of the "captionResult" section.

Next steps

- Learn the related concept of object detection.

- Quickstart: Image Analysis REST API or client libraries

- Call the Analyze Image API

Feedback

Coming soon: Throughout 2024 we will be phasing out GitHub Issues as the feedback mechanism for content and replacing it with a new feedback system. For more information see: https://aka.ms/ContentUserFeedback.

Submit and view feedback for