Application availability in AKS enabled by Azure Arc

Applies to: AKS on Azure Stack HCI 22H2, AKS on Windows Server

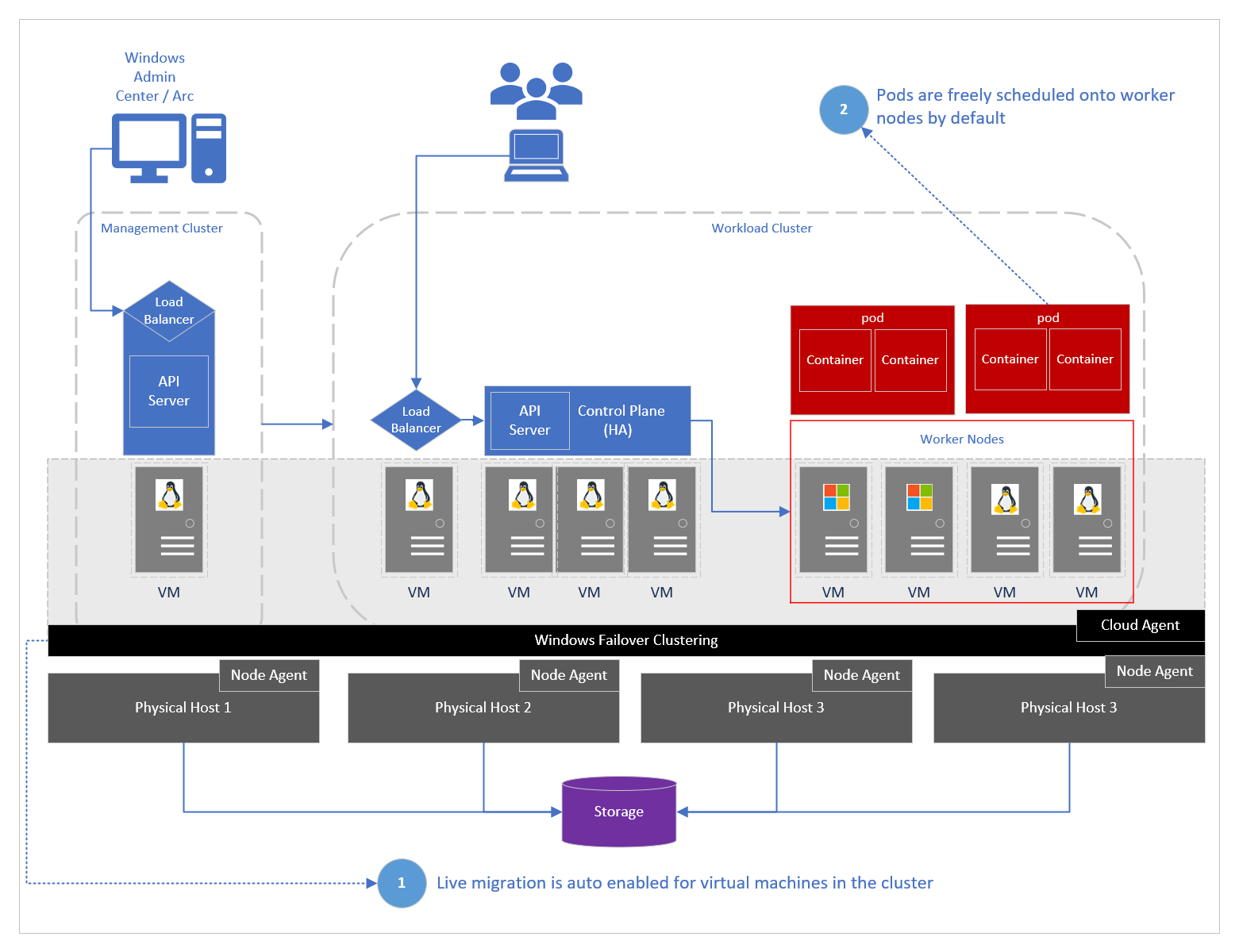

Azure Kubernetes Service (AKS) enabled by Azure Arc offers a fully supported container platform that can run cloud-native applications on the Kubernetes container orchestration platform. The architecture supports running virtualized Windows and Linux workloads.

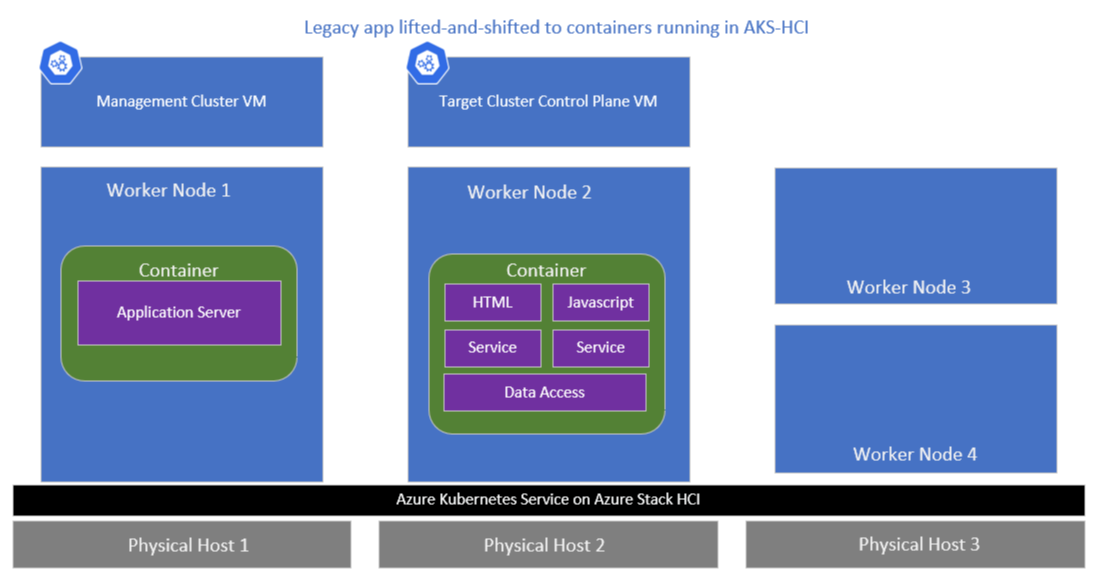

The AKS architecture is built with failover clustering and live migration that is automatically enabled for target (workload) clusters. During various disruption events, virtual machines that host customer workloads are freely moved around without perceived application downtime. This architecture means that a traditional enterprise customer, who's managing a legacy application as a singleton to AKS on Azure Stack HCI or Windows Server, gets similar (or better) uptime than what's currently experienced on a legacy VM application.

This article describes some fundamental concepts for users who want to run containerized applications on AKS Arc with live migration enabled in order to ensure applications are available during a disruption. Kubernetes terminology, such as voluntary disruption and involuntary disruption, is used to refer to downtime of an application running in a pod.

What is live migration?

Live migration is a Hyper-V feature that allows you to transparently move running virtual machines from one Hyper-V host to another without perceived downtime. The primary benefit of live migration is flexibility; running virtual machines is not tied to a single host machine. This allows users to perform actions such as draining a specific host of virtual machines before decommissioning or upgrading the host. When paired with Windows Failover Clustering, live migration enables the creation of highly available and fault tolerant systems.

The current architecture of AKS on Azure Stack HCI and Windows Server assumes that you enabled live migration in your Azure Stack HCI clustered environment. Therefore, all Kubernetes worker node VMs are created with live migration configured. These nodes can be moved around physical hosts in the event of a disruption to ensure the platform is highly available.

When you run a legacy application as a singleton on top of Kubernetes, this architecture meets your high availability needs. Kubernetes manages the scheduling of pods on available worker nodes while live migration manages the scheduling of worker node VMs on available physical hosts.

Application disruption scenarios

A comparative study of the recovery times for applications running in VMs on AKS on Azure Stack HCI and Windows Server clearly shows that there is minimal impact on the application when common disruption events occur. Three example disruption scenarios include:

- Applying an update that results in a reboot of the physical machine.

- Applying an update that involves recreating the worker node.

- Unplanned hardware failure of a physical machine.

Note

These scenarios assume that the application owner still uses Kubernetes affinity and anti-affinity settings to ensure proper scheduling of pods across worker nodes.

| Disruption event | Running applications in VMs on Azure Stack HCI | Running applications in VMs on AKS on Azure Stack HCI or Windows Server |

|---|---|---|

| Apply an update that results in a reboot of the physical machine | No impact | No impact |

| Apply an update that involves recreating the worker node (or rebooting the VM) | No impact | Varies |

| Unplanned hardware failure of a physical machine | 6-8 minutes | 6-8 minutes |

Apply an update that results in a reboot of the physical machine

During a physical host maintenance event, such as applying an update that results in the reboot of a host machine, no impact is expected for applications running in the cluster. The cluster administrator drains the host and ensures that all VMs are live migrated before applying the update.

Apply an update that involves recreating the worker node

This scenario involves bringing down a worker node VM to perform routine maintenance. To prepare for the update, the cluster administrator drains and isolates the node, so that all pods are evicted to an available worker node before applying updates. Once the update is completed, the worker node is rejoined and made available for scheduling.

Note

The availability of an application varies, as it includes the time it takes to download the base container image, especially for larger images stored in the public cloud. Therefore, it's recommended that you use small base container images, and for Windows containers, it's recommended that you use the nano server base image.

Unplanned hardware failure of a physical machine

In this scenario, an involuntary disruption event occurs to a physical machine hosting a legacy application container/pod in one of the worker node VMs. Failover clustering places the host in an isolated state, and then after a period of 6 to 8 minutes, starts the process of live migrating these VMs to surviving hosts. In this case, the application downtime is the equivalent of the time it takes to restart the host and worker node VMs.

Conclusion

AKS failover clustering technologies are designed to ensure that computing environments in both Azure Stack HCI and Windows Server are highly available and fault tolerant. However, the application owner still has to configure deployments to use Kubernetes features, such as Deployments, Affinity Mapping, RelicaSets, to ensure that the pods are resilient in disruption scenarios.