Simplified application autoscaling with Kubernetes Event-driven Autoscaling (KEDA) add-on

Kubernetes Event-driven Autoscaling (KEDA) is a single-purpose, lightweight component that aims to make application autoscaling simple. It is a CNCF graduated project.

It applies event-driven autoscaling to scale your application to meet demand in a sustainable and cost-efficient manner with scale-to-zero.

The KEDA add-on makes this even easier. It deploys a managed KEDA installation and gives you a rich catalog of Azure KEDA scalers for scaling your applications on your Azure Kubernetes Service (AKS) cluster.

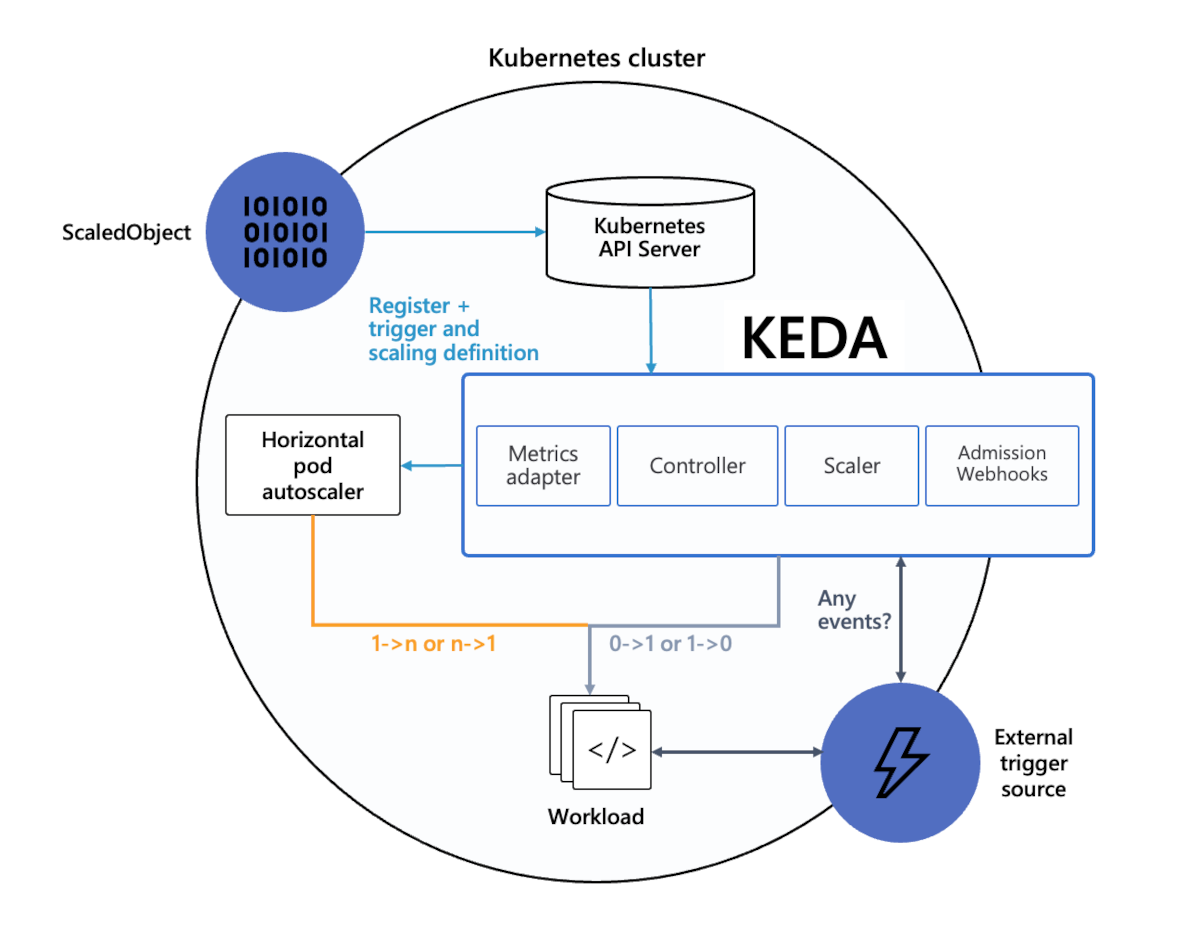

Architecture

KEDA provides two main components:

- KEDA operator: allows end users to scale workloads in and out from 0 to N instances. It supports Kubernetes Deployments, Jobs, StatefulSets, and any custom resource that defines a `/scale` subresource.

- Metrics server: exposes external metrics to the Horizontal Pod Autoscaler (HPA) in Kubernetes for autoscaling. Examples include the number of messages in a Kafka topic or the number of events in an Azure event hub. Due to upstream limitations, KEDA must be the only installed metrics adapter.
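The following Python sketch shows how the work is split between the two components. It is a simplified model, not KEDA's implementation. It assumes a single external metric with an average-value target, and it ignores the HPA tolerance, stabilization windows, and KEDA's cooldown period.

- The operator decides whether the workload is active at all (scaling between 0 and 1).
- Above that, the HPA reads the metric from KEDA's metrics server and computes `ceil(metric / target)` replicas.

The function and parameter names are hypothetical.

```python
import math


def desired_replicas(metric_value: float, target_per_replica: float,
                     activation_threshold: float = 0.0,
                     min_replicas: int = 0, max_replicas: int = 100) -> int:
    """Simplified KEDA + HPA replica decision for one external metric."""
    # Activation phase (KEDA operator): at or below the activation threshold
    # the workload counts as idle and drops to min_replicas, which may be 0.
    if metric_value <= activation_threshold:
        return min_replicas
    # Scaling phase (HPA reading KEDA's metrics server): with an average-value
    # target, each replica should handle at most target_per_replica units.
    replicas = math.ceil(metric_value / target_per_replica)
    # An active workload keeps at least one replica, within the configured bounds.
    return max(1, min_replicas, min(replicas, max_replicas))


for backlog in (0, 3, 42, 1000):
    print(backlog, desired_replicas(backlog, target_per_replica=5))
```

Take a target of 5 messages per replica. A backlog of 42 messages yields 9 replicas, a backlog of 1000 is capped at 100, and an empty queue lets the workload return to zero.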

Learn more about how KEDA works in the official KEDA documentation.

Installation

You can add KEDA to your Azure Kubernetes Service (AKS) cluster by enabling the KEDA add-on with an ARM template or the Azure CLI.
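In an ARM template, the add-on is turned on through the `workloadAutoScalerProfile.keda.enabled` property of the `Microsoft.ContainerService/managedClusters` resource. The Python sketch below merges that property into an existing cluster resource.

The `enable_keda` helper and the trimmed `cluster` dictionary are hypothetical. A real template also needs `apiVersion`, `location`, node pool profiles, and the rest of the cluster definition.

```python
import copy
import json


def enable_keda(cluster_resource: dict) -> dict:
    """Return a copy of a managedClusters resource with the KEDA add-on enabled."""
    updated = copy.deepcopy(cluster_resource)
    props = updated.setdefault("properties", {})
    # Keep any other autoscaler settings already present in the profile.
    profile = props.setdefault("workloadAutoScalerProfile", {})
    profile["keda"] = {"enabled": True}
    return updated


# Hypothetical, trimmed resource for illustration only.
cluster = {
    "type": "Microsoft.ContainerService/managedClusters",
    "name": "myAKSCluster",
    "properties": {"dnsPrefix": "myaks"},
}
print(json.dumps(enable_keda(cluster), indent=2))
```

The helper copies the resource before changing it, so the input is left untouched. Existing settings, such as `dnsPrefix` or other entries under `workloadAutoScalerProfile`, are preserved.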

The KEDA add-on provides a fully supported installation of KEDA that is integrated with AKS.

Capabilities and features

KEDA provides the following capabilities and features:

- Build sustainable and cost-efficient applications with scale-to-zero

- Scale application workloads to meet demand using a rich catalog of Azure KEDA scalers

- Autoscale applications with `ScaledObjects`, such as Deployments, StatefulSets, or any custom resource that defines a `/scale` subresource

- Autoscale job-like workloads with `ScaledJobs`

- Use production-grade security by decoupling autoscaling authentication from workloads

- Bring-your-own external scaler to use tailor-made autoscaling decisions

- Integrate with Microsoft Entra Workload ID for authentication
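The following Python sketch illustrates `ScaledObjects` and decoupled authentication. It builds two resources:

- A `ScaledObject` that scales a Deployment on Azure Service Bus queue length.
- A `TriggerAuthentication` that uses the `azure-workload` pod identity provider, so the workload itself holds no credentials.

The resource names, queue, and Service Bus namespace are hypothetical placeholders. Check the KEDA Azure Service Bus scaler documentation for the full set of trigger metadata.

```python
import json


def trigger_authentication(name: str) -> dict:
    # Credentials live in a separate resource, not in the workload: KEDA
    # authenticates with the workload identity configured for its operator.
    return {
        "apiVersion": "keda.sh/v1alpha1",
        "kind": "TriggerAuthentication",
        "metadata": {"name": name},
        "spec": {"podIdentity": {"provider": "azure-workload"}},
    }


def scaled_object(name: str, deployment: str, queue_name: str,
                  servicebus_namespace: str, auth_name: str,
                  messages_per_replica: int = 5, max_replicas: int = 10) -> dict:
    return {
        "apiVersion": "keda.sh/v1alpha1",
        "kind": "ScaledObject",
        "metadata": {"name": name},
        "spec": {
            "scaleTargetRef": {"name": deployment},  # targets a Deployment by default
            "minReplicaCount": 0,  # permit scale-to-zero when the queue is empty
            "maxReplicaCount": max_replicas,
            "triggers": [{
                "type": "azure-servicebus",
                "metadata": {
                    "queueName": queue_name,
                    "namespace": servicebus_namespace,
                    # Trigger metadata values are strings in KEDA manifests.
                    "messageCount": str(messages_per_replica),
                },
                "authenticationRef": {"name": auth_name},
            }],
        },
    }


# Hypothetical names; replace them with your own resources.
auth = trigger_authentication("servicebus-workload-auth")
scaler = scaled_object("orders-scaler", "orders-processor", "orders",
                       "my-servicebus-ns", auth["metadata"]["name"])
manifest = {"apiVersion": "v1", "kind": "List", "items": [auth, scaler]}
print(json.dumps(manifest, indent=2))
```

To use the output, redirect the printed JSON to a file and apply it with `kubectl apply -f`, which accepts JSON manifests as well as YAML.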

Note

If you plan to use workload identity, enable the workload identity add-on before enabling the KEDA add-on.
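The recommended order can be captured in a short Python sketch that assembles the Azure CLI calls in sequence. It only builds and prints the commands; it doesn't run them.

- If KEDA isn't enabled yet, it enables workload identity first and then the KEDA add-on.
- If KEDA is already enabled, it enables workload identity and then restarts the KEDA operator deployment, so that new operator pods receive the workload identity environment variables.

The resource group and cluster names are hypothetical, and `workload_identity_then_keda` is an illustrative helper.

```python
import shlex
from typing import List


def workload_identity_then_keda(resource_group: str, cluster: str,
                                keda_already_enabled: bool = False) -> List[List[str]]:
    """Return the CLI calls for enabling workload identity ahead of KEDA."""
    update = ["az", "aks", "update", "--resource-group", resource_group, "--name", cluster]
    # Step 1: enable workload identity (and the OIDC issuer it depends on).
    commands = [update + ["--enable-oidc-issuer", "--enable-workload-identity"]]
    if keda_already_enabled:
        # KEDA came first: restart its operator so new pods get the
        # workload identity environment variables injected.
        commands.append(["kubectl", "rollout", "restart", "deployment",
                         "keda-operator", "-n", "kube-system"])
    else:
        # Step 2: enable the KEDA add-on once workload identity is in place.
        commands.append(update + ["--enable-keda"])
    return commands


for cmd in workload_identity_then_keda("myResourceGroup", "myAKSCluster"):
    print(shlex.join(cmd))
```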

Add-on limitations

The KEDA AKS add-on has the following limitations:

- KEDA's HTTP add-on (preview), which scales HTTP workloads, isn't included in the AKS add-on but can be deployed separately.

- KEDA's external scaler for Azure Cosmos DB, which scales based on the Azure Cosmos DB change feed, isn't included in the AKS add-on but can be deployed separately.

- Only one external metrics server is allowed in a Kubernetes cluster, so the KEDA add-on should be the only external metrics server in the cluster.

- Multiple KEDA installations aren't supported.
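Only one backend can serve the external metrics API, so it can help to check what is registered before you enable the add-on. The Python sketch below reads the JSON output of `kubectl get apiservice -o json` and lists the services backing the `external.metrics.k8s.io` API group.

The sample data is hypothetical. Treating a service whose name contains `keda` as KEDA's metrics server is an assumption based on upstream naming.

```python
import json
from typing import List

EXTERNAL_METRICS_GROUP = "external.metrics.k8s.io"


def external_metrics_backends(apiservices_json: str) -> List[str]:
    """Return 'namespace/name' for each service serving the external metrics API."""
    backends = []
    for item in json.loads(apiservices_json).get("items", []):
        spec = item.get("spec", {})
        service = spec.get("service")  # null for APIs served locally by kube-apiserver
        if spec.get("group") == EXTERNAL_METRICS_GROUP and service:
            backends.append(f"{service['namespace']}/{service['name']}")
    return backends


def only_keda_serves_external_metrics(apiservices_json: str) -> bool:
    # Assumption: KEDA's metrics server has "keda" in its service name.
    backends = external_metrics_backends(apiservices_json)
    return bool(backends) and all("keda" in b.split("/", 1)[1] for b in backends)


# Hypothetical, trimmed output of `kubectl get apiservice -o json`.
sample = json.dumps({"items": [
    {"spec": {"group": "apps", "version": "v1", "service": None}},
    {"spec": {"group": "external.metrics.k8s.io", "version": "v1beta1",
              "service": {"namespace": "kube-system",
                          "name": "keda-operator-metrics-apiserver"}}},
]})
print(external_metrics_backends(sample), only_keda_serves_external_metrics(sample))
```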

For general KEDA questions, we recommend visiting the FAQ overview.

Note

If you're using Microsoft Entra Workload ID and you enable KEDA before Workload ID, you need to restart the KEDA operator pods so that the proper environment variables can be injected:

1. Restart the pods by running `kubectl rollout restart deployment keda-operator -n kube-system`.

2. Find the KEDA operator pods by running `kubectl get pod -n kube-system` and looking for pods whose names begin with `keda-operator`.

3. Verify that the environment variables were injected by running `kubectl describe pod <keda-operator-pod> -n kube-system`. Under `Environment`, you should see values for `AZURE_TENANT_ID`, `AZURE_FEDERATED_TOKEN_FILE`, and `AZURE_AUTHORITY_HOST`.
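The verification step can also be scripted. This Python sketch parses the output of `kubectl get pod -n kube-system -o json`. For each KEDA operator pod, it reports which of the three variables are missing from the container `env` lists.

The sketch relies on two assumptions:

- The workload identity webhook injects the variables into the pod spec.
- The pods follow upstream naming. Under that naming, pods named `keda-operator-metrics-apiserver-*` share the `keda-operator` prefix but aren't operator pods, so the sketch skips them.

The sample JSON is hypothetical.

```python
import json
from typing import Dict, List

REQUIRED_VARS = ("AZURE_TENANT_ID", "AZURE_FEDERATED_TOKEN_FILE", "AZURE_AUTHORITY_HOST")


def is_operator_pod(name: str) -> bool:
    # Assumption: upstream naming, where metrics API server pods share the prefix.
    return (name.startswith("keda-operator-")
            and not name.startswith("keda-operator-metrics-apiserver"))


def missing_identity_env(pods_json: str) -> Dict[str, List[str]]:
    """Map each KEDA operator pod to the required variables it lacks."""
    report = {}
    for pod in json.loads(pods_json).get("items", []):
        name = pod["metadata"]["name"]
        if not is_operator_pod(name):
            continue
        env_names = {
            var["name"]
            for container in pod["spec"].get("containers", [])
            for var in container.get("env") or []
        }
        report[name] = [v for v in REQUIRED_VARS if v not in env_names]
    return report


# Hypothetical, trimmed output of `kubectl get pod -n kube-system -o json`.
sample = json.dumps({"items": [
    {"metadata": {"name": "keda-operator-7d9f8c6b5-abcde"},
     "spec": {"containers": [{"env": [{"name": v, "value": "x"} for v in REQUIRED_VARS]}]}},
    {"metadata": {"name": "keda-operator-7d9f8c6b5-fghij"},
     "spec": {"containers": [{"env": [{"name": "AZURE_TENANT_ID", "value": "x"}]}]}},
    {"metadata": {"name": "keda-operator-metrics-apiserver-6c8b7-klmno"},
     "spec": {"containers": [{}]}},
]})
print(missing_identity_env(sample))
```

An empty list for every operator pod means the restart worked. Any pod that still lists missing variables was probably created before workload identity was enabled.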

Supported Kubernetes and KEDA versions

Your cluster's Kubernetes version determines which KEDA version is installed on your AKS cluster. To see which KEDA version maps to each AKS version, see the AKS managed add-ons column of the Kubernetes component version table.

For GA Kubernetes versions, AKS fully supports the corresponding KEDA minor version listed in the table. Kubernetes preview versions and the latest KEDA patch are only partially covered by customer support, on a best-effort basis, so they aren't meant for production use. For more information, see the following support articles:

