Azure Kubernetes Service (AKS) is a managed Kubernetes service that lets you quickly deploy and manage clusters. In this quickstart, you:

- Deploy an AKS cluster using Terraform.

- Run a sample multi-container application with a group of microservices and web front ends simulating a retail scenario.

Note

To get started with quickly provisioning an AKS cluster, this article includes steps to deploy a cluster with default settings for evaluation purposes only. Before deploying a production-ready cluster, we recommend that you familiarize yourself with our baseline reference architecture to consider how it aligns with your business requirements.

Before you begin

- This quickstart assumes a basic understanding of Kubernetes concepts. For more information, see Kubernetes core concepts for Azure Kubernetes Service (AKS).

- You need an Azure account with an active subscription. If you don't have one, create an account for free.

- Follow the instructions based on your command line interface.

- To learn more about creating a Windows Server node pool, see Create an AKS cluster that supports Windows Server containers.

Important

As of November 30, 2025, Azure Kubernetes Service (AKS) no longer supports or provides security updates for Azure Linux 2.0. The Azure Linux 2.0 node image is frozen at the 202512.06.0 release. Beginning March 31, 2026, node images will be removed, and you'll be unable to scale your node pools. Migrate to a supported Azure Linux version by upgrading your node pools to a supported Kubernetes version or migrating to osSku AzureLinux3. For more information, see [Retirement] Azure Linux 2.0 node pools on AKS.

- Install and configure Terraform.

- Download kubectl.

- Create a random value for the Azure resource group name using random_pet.

- Create an Azure resource group using azurerm_resource_group.

- Access the configuration of the AzureRM provider to get the Azure Object ID using azurerm_client_config.

- Create a Kubernetes cluster using azurerm_kubernetes_cluster.

- Create an AzAPI resource using azapi_resource.

- Create an AzAPI resource to generate an SSH key pair using azapi_resource_action.

Log in to your Azure account

First, log in to your Azure account and authenticate using one of the methods described in the following section.

Terraform only supports authenticating to Azure with the Azure CLI. Authenticating using Azure PowerShell isn't supported. Therefore, while you can use the Azure PowerShell module when doing your Terraform work, you first need to authenticate to Azure using the Azure CLI.
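The azurerm provider uses your Azure CLI session when no other credentials are configured. For that reason, it can help to confirm that a session exists before you run Terraform. The following minimal sketch isn't part of the official sample. The `export_subscription_id` function and the `AZ` variable, which lets you substitute a stub for the `az` binary, are hypothetical names. The function reads the current subscription ID with `az account show` and exports it as `ARM_SUBSCRIPTION_ID`, an environment variable the azurerm provider reads. This makes Terraform target the same subscription as your CLI session.

```shell
# Hypothetical helper: AZ defaults to the real Azure CLI but can point at a stub.
AZ=${AZ:-az}

# Reads the subscription ID of the active Azure CLI session and exports it as
# ARM_SUBSCRIPTION_ID so the azurerm provider targets the same subscription.
export_subscription_id() {
  # az account show fails if you haven't signed in with az login.
  if ! sub_id=$("$AZ" account show --query id --output tsv); then
    echo "No active Azure CLI session found. Run 'az login' first." >&2
    return 1
  fi
  export ARM_SUBSCRIPTION_ID="$sub_id"
}
```

For example, run `az login`, then `export_subscription_id && terraform init -upgrade`.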

Implement the Terraform code

Note

The sample code for this article is located in the Azure Terraform GitHub repo. You can view the log file containing the test results from current and previous versions of Terraform.

See more articles and sample code showing how to use Terraform to manage Azure resources

Create a directory you can use to test the sample Terraform code and make it your current directory.

Create a file named `providers.tf` and insert the following code:

```terraform
terraform {
  required_version = ">=1.0"

  required_providers {
    azapi = {
      source  = "azure/azapi"
      version = "~>1.5"
    }
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~>3.0"
    }
    random = {
      source  = "hashicorp/random"
      version = "~>3.0"
    }
    time = {
      source  = "hashicorp/time"
      version = "0.9.1"
    }
  }
}

provider "azurerm" {
  features {}
}
```

Create a file named `ssh.tf` and insert the following code:

```terraform
resource "random_pet" "ssh_key_name" {
  prefix    = "ssh"
  separator = ""
}

resource "azapi_resource_action" "ssh_public_key_gen" {
  type        = "Microsoft.Compute/sshPublicKeys@2022-11-01"
  resource_id = azapi_resource.ssh_public_key.id
  action      = "generateKeyPair"
  method      = "POST"

  response_export_values = ["publicKey", "privateKey"]
}

resource "azapi_resource" "ssh_public_key" {
  type      = "Microsoft.Compute/sshPublicKeys@2022-11-01"
  name      = random_pet.ssh_key_name.id
  location  = azurerm_resource_group.rg.location
  parent_id = azurerm_resource_group.rg.id
}

output "key_data" {
  # With azapi 1.x, output is a JSON string, so decode it before reading publicKey.
  value = jsondecode(azapi_resource_action.ssh_public_key_gen.output).publicKey
}
```

Create a file named `main.tf` and insert the following code:

```terraform
# Generate random resource group name
resource "random_pet" "rg_name" {
  prefix = var.resource_group_name_prefix
}

resource "azurerm_resource_group" "rg" {
  location = var.resource_group_location
  name     = random_pet.rg_name.id
}

resource "random_pet" "azurerm_kubernetes_cluster_name" {
  prefix = "cluster"
}

resource "random_pet" "azurerm_kubernetes_cluster_dns_prefix" {
  prefix = "dns"
}

resource "azurerm_kubernetes_cluster" "k8s" {
  location            = azurerm_resource_group.rg.location
  name                = random_pet.azurerm_kubernetes_cluster_name.id
  resource_group_name = azurerm_resource_group.rg.name
  dns_prefix          = random_pet.azurerm_kubernetes_cluster_dns_prefix.id

  identity {
    type = "SystemAssigned"
  }

  default_node_pool {
    name       = "agentpool"
    vm_size    = "Standard_D2_v2"
    node_count = var.node_count
  }

  linux_profile {
    admin_username = var.username

    ssh_key {
      # Public key generated by the azapi_resource_action in ssh.tf (JSON string in azapi 1.x).
      key_data = jsondecode(azapi_resource_action.ssh_public_key_gen.output).publicKey
    }
  }

  network_profile {
    network_plugin    = "kubenet"
    load_balancer_sku = "standard"
  }
}
```

Create a file named `variables.tf` and insert the following code:

```terraform
variable "resource_group_location" {
  type        = string
  default     = "eastus"
  description = "Location of the resource group."
}

variable "resource_group_name_prefix" {
  type        = string
  default     = "rg"
  description = "Prefix of the resource group name that's combined with a random ID so name is unique in your Azure subscription."
}

variable "node_count" {
  type        = number
  description = "The initial quantity of nodes for the node pool."
  default     = 3
}

variable "msi_id" {
  type        = string
  description = "The Managed Service Identity ID. Set this value if you're running this example using Managed Identity as the authentication method."
  default     = null
}

variable "username" {
  type        = string
  description = "The admin username for the new cluster."
  default     = "azureadmin"
}
```

Create a file named `outputs.tf` and insert the following code:

```terraform
output "resource_group_name" {
  value = azurerm_resource_group.rg.name
}

output "kubernetes_cluster_name" {
  value = azurerm_kubernetes_cluster.k8s.name
}

output "client_certificate" {
  value     = azurerm_kubernetes_cluster.k8s.kube_config[0].client_certificate
  sensitive = true
}

output "client_key" {
  value     = azurerm_kubernetes_cluster.k8s.kube_config[0].client_key
  sensitive = true
}

output "cluster_ca_certificate" {
  value     = azurerm_kubernetes_cluster.k8s.kube_config[0].cluster_ca_certificate
  sensitive = true
}

output "cluster_password" {
  value     = azurerm_kubernetes_cluster.k8s.kube_config[0].password
  sensitive = true
}

output "cluster_username" {
  value     = azurerm_kubernetes_cluster.k8s.kube_config[0].username
  sensitive = true
}

output "host" {
  value     = azurerm_kubernetes_cluster.k8s.kube_config[0].host
  sensitive = true
}

output "kube_config" {
  value     = azurerm_kubernetes_cluster.k8s.kube_config_raw
  sensitive = true
}
```

Initialize Terraform

Run `terraform init` to initialize the Terraform deployment. This command downloads the providers required to manage your Azure resources.

```shell
terraform init -upgrade
```

Key points:

- The `-upgrade` parameter upgrades the necessary provider plugins to the newest version that complies with the configuration's version constraints.
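The `~>` operator in `providers.tf` is Terraform's pessimistic constraint operator. `~>3.0` accepts any 3.x release at or above 3.0. `~>1.5` accepts 1.5 or later but not 2.0. `~>1.5.2` accepts 1.5.2 or later but not 1.6.0. The following sketch isn't part of Terraform. It approximates that rule for plain numeric versions and two- or three-part constraints, so you can reason about which provider versions `-upgrade` may select. The function name `satisfies_pessimistic` is hypothetical.

```shell
# Hypothetical helper: returns 0 if version $1 satisfies the constraint "~>$2".
# Handles two- or three-part constraints (such as 3.0 or 1.5.2) and plain
# numeric versions without pre-release suffixes.
satisfies_pessimistic() {
  IFS=. read -r v_major v_minor v_patch <<EOF
$1
EOF
  IFS=. read -r c_major c_minor c_patch <<EOF
$2
EOF
  # The major version must always match exactly.
  [ "$v_major" -eq "$c_major" ] || return 1
  if [ -n "$c_patch" ]; then
    # ~>X.Y.Z: the minor version must match and the patch may only go up.
    [ "${v_minor:-0}" -eq "$c_minor" ] && [ "${v_patch:-0}" -ge "$c_patch" ]
  else
    # ~>X.Y: the minor version may go up within the same major version.
    [ "${v_minor:-0}" -ge "${c_minor:-0}" ]
  fi
}
```

For example, `satisfies_pessimistic 3.90.0 3.0` succeeds, while `satisfies_pessimistic 4.0.0 3.0` fails.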

Create a Terraform execution plan

Run `terraform plan` to create an execution plan.

```shell
terraform plan -out main.tfplan
```

Key points:

- The `terraform plan` command creates an execution plan, but doesn't execute it. Instead, it determines what actions are necessary to create the configuration specified in your configuration files. This pattern allows you to verify whether the execution plan matches your expectations before making any changes to actual resources.
- The optional `-out` parameter allows you to specify an output file for the plan. Using the `-out` parameter ensures that the plan you reviewed is exactly what is applied.

Apply a Terraform execution plan

Run `terraform apply` to apply the execution plan to your cloud infrastructure.

```shell
terraform apply main.tfplan
```

Key points:

- The example `terraform apply` command assumes you previously ran `terraform plan -out main.tfplan`.
- If you specified a different filename for the `-out` parameter, use that same filename in the call to `terraform apply`.
- If you didn't use the `-out` parameter, call `terraform apply` without any parameters.
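The plan and apply steps can be combined into a small wrapper. It always applies the same saved plan file it just created and asks for confirmation in between. This is a minimal sketch under stated assumptions. `plan_and_apply` and the `TF` variable, which lets you substitute a stub for the `terraform` binary, are hypothetical. The confirmation prompt reads from standard input.

```shell
# Hypothetical helper: TF defaults to the real terraform binary but can point at a stub.
TF=${TF:-terraform}

# Creates a saved plan, asks for confirmation, then applies exactly that plan.
plan_and_apply() {
  plan_file=${1:-main.tfplan}
  "$TF" plan -out "$plan_file" || return 1
  # Prompt on stderr so stdout only carries Terraform's own output.
  printf 'Apply %s? [y/N] ' "$plan_file" >&2
  read -r answer || answer=
  if [ "$answer" != "y" ]; then
    echo "Skipped apply; $plan_file was not applied." >&2
    return 1
  fi
  # Applying the saved file means Terraform performs only the reviewed actions.
  "$TF" apply "$plan_file"
}
```

Running `plan_and_apply main.tfplan` reproduces the two commands in this section with a review pause between them.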

Verify the results

Get the Azure resource group name using the following command.

```shell
resource_group_name=$(terraform output -raw resource_group_name)
```

Display the name of your new Kubernetes cluster using the `az aks list` command.

```shell
az aks list \
  --resource-group "$resource_group_name" \
  --query "[].{\"K8s cluster name\":name}" \
  --output table
```

Get the Kubernetes configuration from the Terraform state and store it in a file that `kubectl` can read using the following command.

```shell
echo "$(terraform output kube_config)" > ./azurek8s
```

Verify the previous command didn't add `<< EOT` heredoc markers to the file using the following command.

```shell
cat ./azurek8s
```

Key points:

- If you see `<< EOT` at the beginning and `EOT` at the end, remove these characters from the file. Otherwise, you might receive the following error message: `error: error loading config file "./azurek8s": yaml: line 2: mapping values are not allowed in this context`
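There are two ways to avoid editing the file by hand. First, `terraform output -raw kube_config > ./azurek8s` writes the string output without the `<< EOT` markers (the `-raw` flag is available in Terraform 0.14 and later). Second, for a file that already contains the markers, the following sketch removes a first line that is `<<EOT` (optionally with a space) and a last line that is exactly `EOT`. `strip_heredoc_markers` is a hypothetical helper name, and the sketch assumes `sed` and `mktemp` are available.

```shell
# Hypothetical helper: removes a leading "<<EOT" / "<< EOT" line and a trailing
# "EOT" line from the given file, leaving every other line untouched.
strip_heredoc_markers() {
  file=$1
  # mktemp creates the file with owner-only permissions, which suits a
  # kubeconfig that holds cluster credentials.
  tmp=$(mktemp) || return 1
  # 1{...} applies only to the first line; ${...} applies only to the last line.
  if sed -e '1{/^<< *EOT$/d;}' -e '${/^EOT$/d;}' "$file" > "$tmp"; then
    mv "$tmp" "$file"
  else
    rm -f "$tmp"
    return 1
  fi
}
```

Run `strip_heredoc_markers ./azurek8s`, then `cat ./azurek8s` again to confirm that the file starts with `apiVersion`.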

Set an environment variable so `kubectl` can pick up the correct config using the following command.

```shell
export KUBECONFIG=./azurek8s
```

Verify the health of the cluster using the `kubectl get nodes` command.

```shell
kubectl get nodes
```

Key points:

- When you created the AKS cluster, monitoring was enabled to capture health metrics for both the cluster nodes and pods. These health metrics are available in the Azure portal. For more information on container health monitoring, see Monitor Azure Kubernetes Service health.

- Several key values were classified as outputs when you applied the Terraform execution plan. For example, the host address, the AKS cluster user name, and the AKS cluster password are outputs.
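You can script the `kubectl get nodes` health check instead of reading the table by eye. One way is to pipe its output through a filter. The following minimal sketch assumes the default table layout, where `STATUS` is the second column, and `all_nodes_ready` is a hypothetical name. It succeeds only when at least one node is listed and every node reports exactly `Ready`, so a cordoned node (`Ready,SchedulingDisabled`) also counts as not ready.

```shell
# Hypothetical helper: reads `kubectl get nodes` table output on stdin and
# exits 0 only if at least one node is listed and every STATUS is "Ready".
all_nodes_ready() {
  awk 'NR > 1 { n++; if ($2 != "Ready") bad = 1 }
       END { exit (n == 0 || bad) }'
}
```

For example: `kubectl get nodes | all_nodes_ready && echo "All nodes are Ready."`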

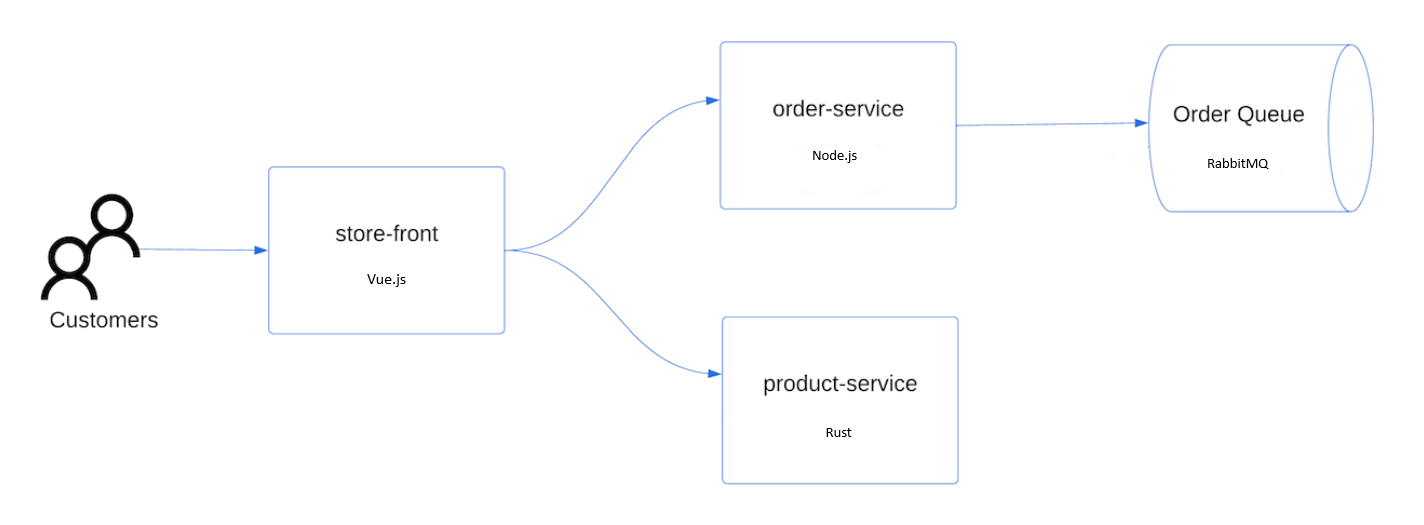

Deploy the application

To deploy the application, you use a manifest file to create all the objects required to run the AKS Store application. A Kubernetes manifest file defines a cluster's desired state, such as which container images to run. The manifest includes the following Kubernetes deployments and services:

- Store front: Web application for customers to view products and place orders.

- Product service: Shows product information.

- Order service: Places orders.

- RabbitMQ: Message queue for an order queue.

Note

We don't recommend running stateful containers, such as RabbitMQ, without persistent storage in production. These containers are used here for simplicity, but we recommend using managed services, such as Azure Cosmos DB or Azure Service Bus.

Create a file named `aks-store-quickstart.yaml` and copy in the following manifest:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rabbitmq
spec:
  replicas: 1
  selector:
    matchLabels:
      app: rabbitmq
  template:
    metadata:
      labels:
        app: rabbitmq
    spec:
      nodeSelector:
        "kubernetes.io/os": linux
      containers:
      - name: rabbitmq
        image: mcr.microsoft.com/mirror/docker/library/rabbitmq:3.10-management-alpine
        ports:
        - containerPort: 5672
          name: rabbitmq-amqp
        - containerPort: 15672
          name: rabbitmq-http
        env:
        - name: RABBITMQ_DEFAULT_USER
          value: "username"
        - name: RABBITMQ_DEFAULT_PASS
          value: "password"
        resources:
          requests:
            cpu: 10m
            memory: 128Mi
          limits:
            cpu: 250m
            memory: 256Mi
        volumeMounts:
        - name: rabbitmq-enabled-plugins
          mountPath: /etc/rabbitmq/enabled_plugins
          subPath: enabled_plugins
      volumes:
      - name: rabbitmq-enabled-plugins
        configMap:
          name: rabbitmq-enabled-plugins
          items:
          - key: rabbitmq_enabled_plugins
            path: enabled_plugins
---
apiVersion: v1
data:
  rabbitmq_enabled_plugins: |
    [rabbitmq_management,rabbitmq_prometheus,rabbitmq_amqp1_0].
kind: ConfigMap
metadata:
  name: rabbitmq-enabled-plugins
---
apiVersion: v1
kind: Service
metadata:
  name: rabbitmq
spec:
  selector:
    app: rabbitmq
  ports:
  - name: rabbitmq-amqp
    port: 5672
    targetPort: 5672
  - name: rabbitmq-http
    port: 15672
    targetPort: 15672
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: order-service
spec:
  replicas: 1
  selector:
    matchLabels:
      app: order-service
  template:
    metadata:
      labels:
        app: order-service
    spec:
      nodeSelector:
        "kubernetes.io/os": linux
      containers:
      - name: order-service
        image: ghcr.io/azure-samples/aks-store-demo/order-service:latest
        ports:
        - containerPort: 3000
        env:
        - name: ORDER_QUEUE_HOSTNAME
          value: "rabbitmq"
        - name: ORDER_QUEUE_PORT
          value: "5672"
        - name: ORDER_QUEUE_USERNAME
          value: "username"
        - name: ORDER_QUEUE_PASSWORD
          value: "password"
        - name: ORDER_QUEUE_NAME
          value: "orders"
        - name: FASTIFY_ADDRESS
          value: "0.0.0.0"
        resources:
          requests:
            cpu: 1m
            memory: 50Mi
          limits:
            cpu: 75m
            memory: 128Mi
      initContainers:
      - name: wait-for-rabbitmq
        image: busybox
        command: ['sh', '-c', 'until nc -zv rabbitmq 5672; do echo waiting for rabbitmq; sleep 2; done;']
        resources:
          requests:
            cpu: 1m
            memory: 50Mi
          limits:
            cpu: 75m
            memory: 128Mi
---
apiVersion: v1
kind: Service
metadata:
  name: order-service
spec:
  type: ClusterIP
  ports:
  - name: http
    port: 3000
    targetPort: 3000
  selector:
    app: order-service
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: product-service
spec:
  replicas: 1
  selector:
    matchLabels:
      app: product-service
  template:
    metadata:
      labels:
        app: product-service
    spec:
      nodeSelector:
        "kubernetes.io/os": linux
      containers:
      - name: product-service
        image: ghcr.io/azure-samples/aks-store-demo/product-service:latest
        ports:
        - containerPort: 3002
        resources:
          requests:
            cpu: 1m
            memory: 1Mi
          limits:
            cpu: 1m
            memory: 7Mi
---
apiVersion: v1
kind: Service
metadata:
  name: product-service
spec:
  type: ClusterIP
  ports:
  - name: http
    port: 3002
    targetPort: 3002
  selector:
    app: product-service
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: store-front
spec:
  replicas: 1
  selector:
    matchLabels:
      app: store-front
  template:
    metadata:
      labels:
        app: store-front
    spec:
      nodeSelector:
        "kubernetes.io/os": linux
      containers:
      - name: store-front
        image: ghcr.io/azure-samples/aks-store-demo/store-front:latest
        ports:
        - containerPort: 8080
          name: store-front
        env:
        - name: VUE_APP_ORDER_SERVICE_URL
          value: "http://order-service:3000/"
        - name: VUE_APP_PRODUCT_SERVICE_URL
          value: "http://product-service:3002/"
        resources:
          requests:
            cpu: 1m
            memory: 200Mi
          limits:
            cpu: 1000m
            memory: 512Mi
---
apiVersion: v1
kind: Service
metadata:
  name: store-front
spec:
  ports:
  - port: 80
    targetPort: 8080
  selector:
    app: store-front
  type: LoadBalancer
```

For a breakdown of YAML manifest files, see Deployments and YAML manifests.

If you create and save the YAML file locally, you can upload the manifest file to your default directory in Cloud Shell by selecting the Upload/Download files button and selecting the file from your local file system.

Deploy the application using the `kubectl apply` command and specify the name of your YAML manifest.

```shell
kubectl apply -f aks-store-quickstart.yaml
```

The following example output shows the deployments and services:

```output
deployment.apps/rabbitmq created
service/rabbitmq created
deployment.apps/order-service created
service/order-service created
deployment.apps/product-service created
service/product-service created
deployment.apps/store-front created
service/store-front created
```

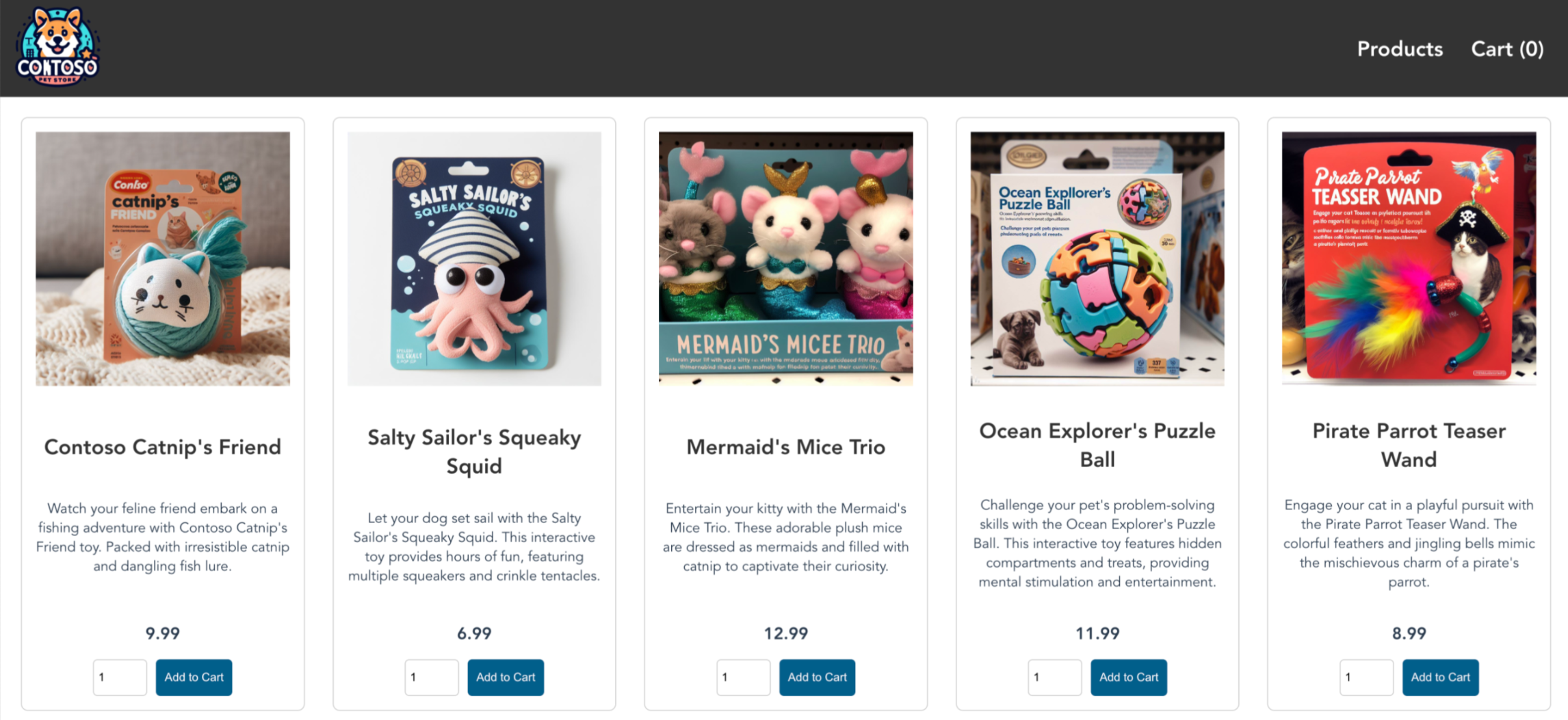

Test the application

When the application runs, a Kubernetes service exposes the application front end to the internet. This process can take a few minutes to complete.

Check the status of the deployed pods using the `kubectl get pods` command. Make sure all pods are `Running` before proceeding.

```shell
kubectl get pods
```

Check for a public IP address for the store-front application. Monitor progress using the `kubectl get service` command with the `--watch` argument.

```shell
kubectl get service store-front --watch
```

The EXTERNAL-IP output for the `store-front` service initially shows as pending:

```output
NAME          TYPE           CLUSTER-IP    EXTERNAL-IP   PORT(S)        AGE
store-front   LoadBalancer   10.0.100.10   <pending>     80:30025/TCP   4h4m
```

Once the EXTERNAL-IP address changes from pending to an actual public IP address, use `CTRL-C` to stop the `kubectl` watch process.

The following example output shows a valid public IP address assigned to the service:

```output
NAME          TYPE           CLUSTER-IP    EXTERNAL-IP    PORT(S)        AGE
store-front   LoadBalancer   10.0.100.10   20.62.159.19   80:30025/TCP   4h5m
```

Open a web browser to the external IP address of your service to see the Azure Store app in action.
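The pod check in the first step can also be scripted. This sketch assumes the default `kubectl get pods` table layout (`NAME READY STATUS RESTARTS AGE`), and `all_pods_running` is a hypothetical name. It succeeds only if every pod is `Running` and all of its containers are ready (for example, `1/1`). An order-service pod that's still waiting in its `wait-for-rabbitmq` init container (status `Init:0/1`) is therefore reported as not ready.

```shell
# Hypothetical helper: reads `kubectl get pods` table output on stdin and
# exits 0 only if at least one pod is listed and each pod is Running with
# all containers ready (READY column such as 1/1).
all_pods_running() {
  awk 'NR > 1 {
         n++
         split($2, ready, "/")
         if ($3 != "Running" || ready[1] != ready[2]) bad = 1
       }
       END { exit (n == 0 || bad) }'
}
```

For example, `until kubectl get pods | all_pods_running; do sleep 5; done` waits until every pod in the current namespace is running.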

Clean up resources

Delete AKS resources

When you no longer need the resources created via Terraform, do the following steps:

Run `terraform plan` and specify the `destroy` flag.

```shell
terraform plan -destroy -out main.destroy.tfplan
```

Key points:

- The `terraform plan` command creates an execution plan, but doesn't execute it. Instead, it determines what actions are necessary to create the configuration specified in your configuration files. This pattern allows you to verify whether the execution plan matches your expectations before making any changes to actual resources.
- The optional `-out` parameter allows you to specify an output file for the plan. Using the `-out` parameter ensures that the plan you reviewed is exactly what is applied.

Run `terraform apply` to apply the execution plan.

```shell
terraform apply main.destroy.tfplan
```

Delete service principal

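This step applies only if your configuration created a service principal and exposes its ID as an output named `sp`. The sample configuration in this article uses a system-assigned managed identity, and its `outputs.tf` file doesn't define an `sp` output.

Get the service principal ID using the following command.

```shell
sp=$(terraform output -raw sp)
```

Delete the service principal using the `az ad sp delete` command.

```shell
az ad sp delete --id "$sp"
```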

- Install the Azure Developer CLI (AZD)

- Install and configure Terraform.

- You can review the application code used in the Azure-Samples/aks-store-demo repo.

Clone the Azure Developer CLI template

The Azure Developer CLI allows you to quickly download samples from the Azure-Samples repository. In this quickstart, you download the aks-store-demo application. For more information on the general use cases, see the azd overview.

Clone the AKS store demo template from the Azure-Samples repository using the `azd init` command with the `--template` parameter.

```shell
azd init --template Azure-Samples/aks-store-demo
```

Enter an environment name for your project that uses only alphanumeric characters and hyphens, such as aks-terraform-1.

```output
Enter a new environment name: aks-terraform-1
```
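If you script this step, you can check a candidate environment name against the rule stated above (alphanumeric characters and hyphens only) before you pass it to azd. The helper name `is_valid_env_name` is hypothetical. azd might apply additional rules, such as length limits, that this sketch doesn't check.

```shell
# Hypothetical helper: returns 0 if $1 is non-empty and contains only
# ASCII letters, digits, and hyphens.
is_valid_env_name() {
  case $1 in
    '' | *[!A-Za-z0-9-]*) return 1 ;;
    *) return 0 ;;
  esac
}
```

For example, `is_valid_env_name aks-terraform-1` succeeds, while `is_valid_env_name aks_terraform` fails because of the underscore.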

Sign in to your Azure account

The azd template contains all the code needed to create the services, but you need to sign in to your Azure account in order to host the application on AKS.

Sign in to your account using the `azd auth login` command.

```shell
azd auth login
```

Copy the device code that appears in the output and press Enter to sign in.

```output
Start by copying the next code: XXXXXXXXX
Then press enter and continue to log in from your browser...
```

Important

If you're using an out-of-network virtual machine or GitHub Codespace, certain Azure security policies cause conflicts when used to sign in with `azd auth login`. If you run into an issue here, you can follow the azd auth workaround provided, which involves using a `curl` request to the localhost URL you were redirected to after running `azd auth login`.

Authenticate with your credentials on your organization's sign-in page.

Confirm that it's you trying to connect from the Azure CLI.

Verify the message "Device code authentication completed. Logged in to Azure." appears in your original terminal.

```output
Waiting for you to complete authentication in the browser...
Device code authentication completed. Logged in to Azure.
```

azd auth workaround

This workaround requires you to have the Azure CLI installed.

Open a terminal window and log in with the Azure CLI using the `az login` command with the `--scope` parameter set to `https://graph.microsoft.com/.default`.

```shell
az login --scope https://graph.microsoft.com/.default
```

You should be redirected to an authentication page in a new tab to create a browser access token, as shown in the following example:

```output
https://login.microsoftonline.com/organizations/oauth2/v2.0/authorize?clientid=<your_client_id>
```

Copy the localhost URL of the webpage you received after attempting to sign in with `azd auth login`.

In a new terminal window, use the following `curl` request to log in. Make sure you replace the `<localhost>` placeholder with the localhost URL you copied in the previous step.

```shell
curl <localhost>
```

A successful login outputs an HTML webpage, as shown in the following example:

```html
<!DOCTYPE html>
<html>
  <head>
    <meta charset="utf-8" />
    <meta http-equiv="refresh" content="60;url=https://docs.microsoft.com/cli/azure/">
    <title>Login successfully</title>
    <style>
      body {
        font-family: 'Segoe UI', Tahoma, Geneva, Verdana, sans-serif;
      }

      code {
        font-family: Consolas, 'Liberation Mono', Menlo, Courier, monospace;
        display: inline-block;
        background-color: rgb(242, 242, 242);
        padding: 12px 16px;
        margin: 8px 0px;
      }
    </style>
  </head>
  <body>
    <h3>You have logged into Microsoft Azure!</h3>
    <p>You can close this window, or we will redirect you to the <a href="https://docs.microsoft.com/cli/azure/">Azure CLI documentation</a> in 1 minute.</p>
    <h3>Announcements</h3>
    <p>[Windows only] Azure CLI is collecting feedback on using the <a href="https://learn.microsoft.com/windows/uwp/security/web-account-manager">Web Account Manager</a> (WAM) broker for the login experience.</p>
    <p>You may opt-in to use WAM by running the following commands:</p>
    <code>
      az config set core.allow_broker=true<br>
      az account clear<br>
      az login
    </code>
  </body>
</html>
```

Close the current terminal and open the original terminal. You should see a JSON list of your subscriptions.

Copy the `id` field of the subscription you want to use.

Set your subscription using the `az account set` command.

```shell
az account set --subscription <subscription_id>
```

Create and deploy resources for your cluster

To deploy the application, you use the azd up command to create all the objects required to run the AKS Store application.

- An `azure.yaml` file defines a cluster's desired state, such as which container images to fetch, and includes the following Kubernetes deployments and services:

- Store front: Web application for customers to view products and place orders.

- Product service: Shows product information.

- Order service: Places orders.

- RabbitMQ: Message queue for an order queue.

Note

We don't recommend running stateful containers, such as RabbitMQ, without persistent storage in production. These containers are used here for simplicity, but we recommend using managed services, such as Azure Cosmos DB or Azure Service Bus.

Deploy application resources

The azd template for this quickstart creates a new resource group with an AKS cluster and an Azure Key Vault. The key vault stores client secrets, and the application services run in the pets namespace.

Create all the application resources using the `azd up` command.

```shell
azd up
```

`azd up` runs all the hooks inside of the `azd-hooks` folder to preregister, provision, and deploy the application services.

Customize hooks to add custom code into the `azd` workflow stages. For more information, see the `azd` hooks reference.

Select an Azure subscription for your billing usage.

```output
? Select an Azure Subscription to use:  [Use arrows to move, type to filter]
> 1. My Azure Subscription (xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx)
```

Select a region to deploy your application to.

```output
Select an Azure location to use:  [Use arrows to move, type to filter]
  1.  (South America) Brazil Southeast (brazilsoutheast)
  2.  (US) Central US (centralus)
  3.  (US) East US (eastus)
> 43. (US) East US 2 (eastus2)
  4.  (US) East US STG (eastusstg)
  5.  (US) North Central US (northcentralus)
  6.  (US) South Central US (southcentralus)
```

`azd` automatically runs the preprovision and postprovision hooks to create the resources for your application. This process can take a few minutes to complete. Once complete, you should see an output similar to the following example:

```output
SUCCESS: Your workflow to provision and deploy to Azure completed in 9 minutes 40 seconds.
```

Generate Terraform plans

Within your Azure Developer CLI template, the /infra/terraform folder contains all the code used to generate the Terraform plan.

Terraform deploys and runs commands using `terraform apply` as part of azd's provisioning step. Once complete, you should see an output similar to the following example:

```output
Plan: 5 to add, 0 to change, 0 to destroy.
...
Saved the plan to: /workspaces/aks-store-demo/.azure/aks-terraform-azd/infra/terraform/main.tfplan
```
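The `Plan:` summary line is a quick way to confirm the scope of a change before it's applied. The following sketch extracts the add, change, and destroy counts from that line. It assumes the exact summary format shown above and uncolored output (for example, from `terraform plan -no-color`). Summaries in other formats, such as ones that also report imports, aren't matched, and a plan with no changes prints nothing. `plan_counts` is a hypothetical name.

```shell
# Hypothetical helper: reads Terraform plan output on stdin and prints
# "ADD CHANGE DESTROY" from a line such as
# "Plan: 5 to add, 0 to change, 0 to destroy."
plan_counts() {
  sed -n 's/^Plan: \([0-9][0-9]*\) to add, \([0-9][0-9]*\) to change, \([0-9][0-9]*\) to destroy\.$/\1 \2 \3/p'
}
```

For the summary shown above, `plan_counts` prints `5 0 0`.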

Test the application

When the application runs, a Kubernetes service exposes the application front end to the internet. This process can take a few minutes to complete.

Set your namespace as the demo namespace `pets` using the `kubectl config set-context` command.

```shell
kubectl config set-context --current --namespace=pets
```

Check the status of the deployed pods using the `kubectl get pods` command. Make sure all pods are `Running` before proceeding.

```shell
kubectl get pods
```

Check for a public IP address for the store-front application and monitor progress using the `kubectl get service` command with the `--watch` argument.

```shell
kubectl get service store-front --watch
```

The EXTERNAL-IP output for the `store-front` service initially shows as pending:

```output
NAME          TYPE           CLUSTER-IP    EXTERNAL-IP   PORT(S)        AGE
store-front   LoadBalancer   10.0.100.10   <pending>     80:30025/TCP   4h4m
```

Once the EXTERNAL-IP address changes from pending to an actual public IP address, use `CTRL-C` to stop the `kubectl` watch process.

The following sample output shows a valid public IP address assigned to the service:

```output
NAME          TYPE           CLUSTER-IP    EXTERNAL-IP    PORT(S)        AGE
store-front   LoadBalancer   10.0.100.10   20.62.159.19   80:30025/TCP   4h5m
```

Open a web browser to the external IP address of your service to see the Azure Store app in action.
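You can also poll for the address instead of watching the service interactively. This sketch asks `kubectl` for `.status.loadBalancer.ingress[0].ip` with a JSONPath query, which returns nothing while the service is pending. The following names and defaults are assumptions for illustration:

- The function name `wait_for_external_ip`.
- Its defaults: the store-front service in the pets namespace, with 60 attempts.
- The `POLL_SECONDS` interval.
- The `KUBECTL` override, which lets you substitute a stub.

```shell
# Hypothetical helper: KUBECTL defaults to the real kubectl but can point at a stub.
KUBECTL=${KUBECTL:-kubectl}

# Polls a LoadBalancer service until it has an external IP, then prints it.
# Arguments: service name, namespace, number of attempts (all optional).
wait_for_external_ip() {
  svc=${1:-store-front} ns=${2:-pets} tries=${3:-60}
  while [ "$tries" -gt 0 ]; do
    # The JSONPath result is empty while EXTERNAL-IP still shows <pending>.
    ip=$("$KUBECTL" get service "$svc" --namespace "$ns" \
      --output jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    tries=$((tries - 1))
    sleep "${POLL_SECONDS:-5}"
  done
  echo "Timed out waiting for an external IP on service $svc" >&2
  return 1
}
```

For example: `ip=$(wait_for_external_ip) && echo "Open http://$ip in your browser"`.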

Delete the cluster

Once you're finished with the quickstart, clean up unnecessary resources to avoid Azure charges.

Delete all the resources created in the quickstart using the `azd down` command.

```shell
azd down
```

Confirm your decision to remove all used resources from your subscription by typing `y` and pressing Enter.

```output
? Total resources to delete: 14, are you sure you want to continue? (y/N)
```

Allow purge to reuse the quickstart variables if applicable by typing `y` and pressing Enter.

```output
[Warning]: These resources have soft delete enabled allowing them to be recovered for a period or time after deletion. During this period, their names can't be reused. In the future, you can use the argument --purge to skip this confirmation.
```

Troubleshoot Terraform on Azure

Troubleshoot common problems when using Terraform on Azure.

Next steps

In this quickstart, you deployed a Kubernetes cluster and then deployed a simple multi-container application to it. This sample application is for demo purposes only and doesn't represent all the best practices for Kubernetes applications. For guidance on creating full solutions with AKS for production, see AKS solution guidance.

To learn more about AKS and walk through a complete code-to-deployment example, continue to the Kubernetes cluster tutorial.