Integrating AI into applications is rapidly becoming a must-have for developers who want to help their users be more creative, be more productive, and achieve their health goals. AI-powered features, such as intelligent chatbots, personalized recommendations, and contextual responses, add significant value to modern apps. Most AI-powered apps released since ChatGPT captured our imagination involve one user interacting with one AI assistant. As developers get more comfortable with the capabilities of AI, they're exploring AI-powered apps in a team context. They ask, "What value can AI add to a team of collaborators?"

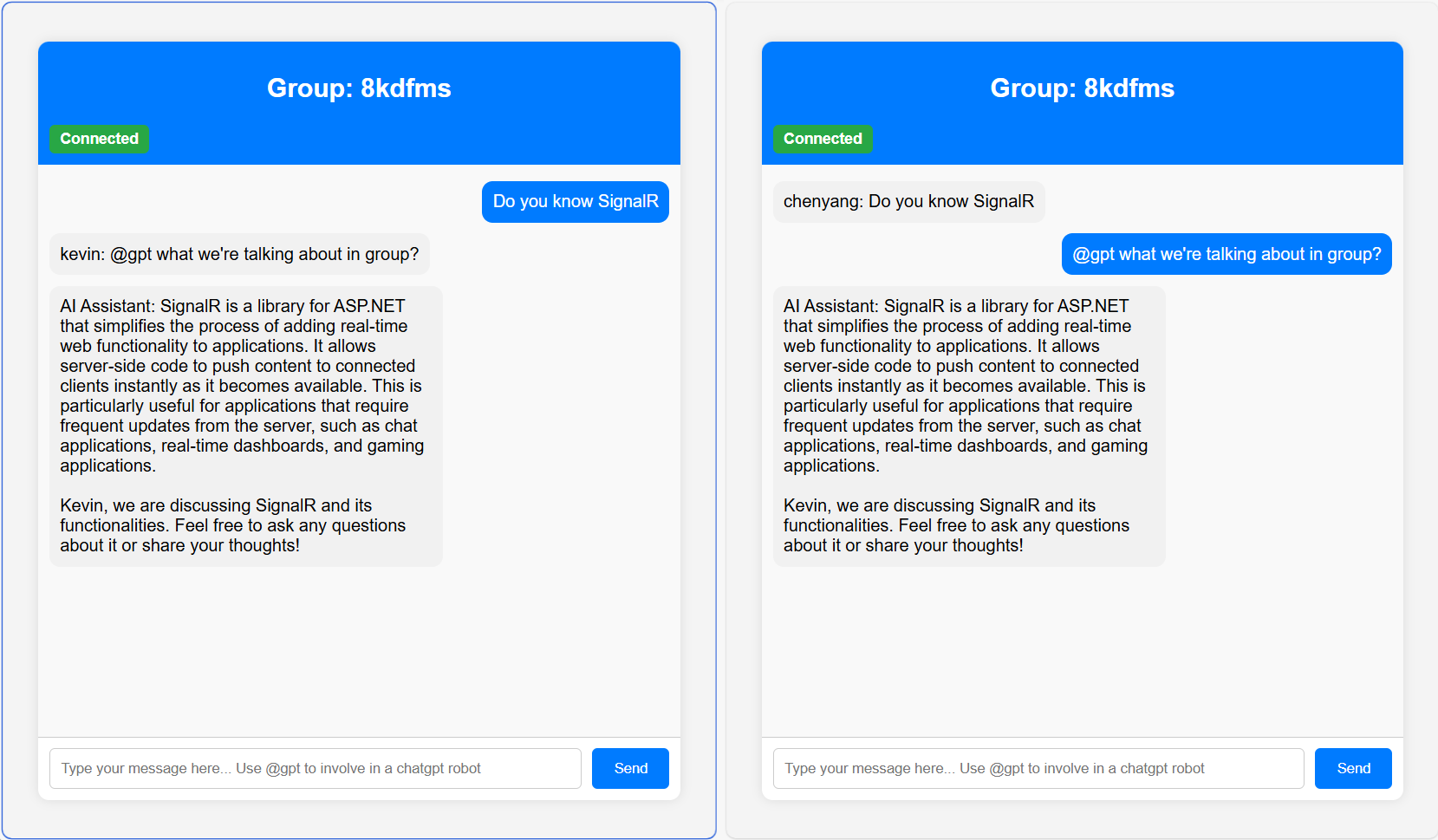

This tutorial guides you through building a real-time group chat application. The chat includes an AI assistant alongside the human collaborators. The assistant has access to the chat history, and any collaborator can invite it to help by starting a message with @gpt. The finished app looks like this.

We use OpenAI for generating intelligent, context-aware responses and SignalR for delivering the response to users in a group. You can find the complete code in this repo.

Dependencies

You can use either Azure OpenAI or OpenAI for this project. Make sure to update the endpoint and key in appsettings.json. OpenAIExtensions reads this configuration when the app starts, and the values are required to authenticate with and use either service.

To build this application, you need the following:

- ASP.NET Core: To create the web application and host the SignalR hub

- SignalR: For real-time communication between clients and the server

- Azure SignalR: For managing SignalR connections at scale

- OpenAI Client: To interact with OpenAI's API for generating AI responses

Implementation

In this section, we walk through the key parts of the code that integrate SignalR with OpenAI to create an AI-enhanced group chat experience.

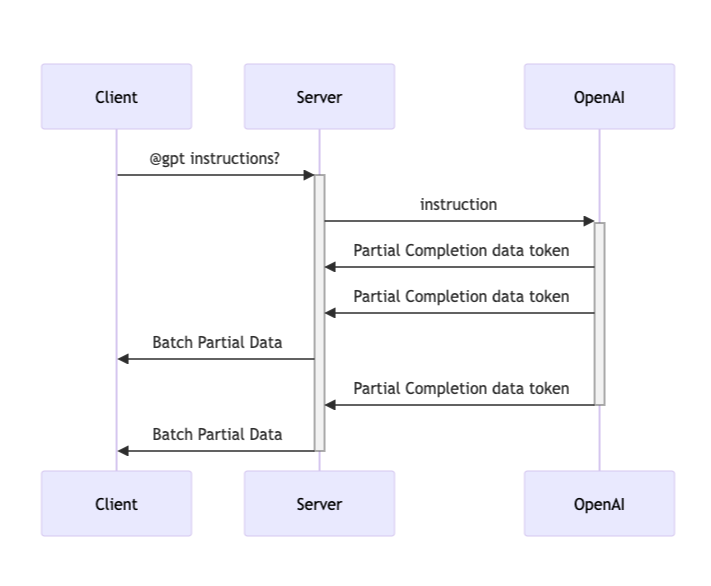

Data flow

SignalR Hub integration

The GroupChatHub class manages user connections, message broadcasting, and AI interactions. When a user sends a message starting with @gpt, the hub forwards it to OpenAI, which generates a response. The AI's response is streamed back to the group in real time.

    var chatClient = _openAI.GetChatClient(_options.Model);
    await foreach (var completion in chatClient.CompleteChatStreamingAsync(messagesIncludeHistory))
    {
        // ...
        // Buffering and sending the AI's response in chunks
        await Clients.Group(groupName).SendAsync("newMessageWithId", "ChatGPT", id, totalCompletion.ToString());
        // ...
    }
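The hub has to decide whether a message is addressed to the assistant before it calls OpenAI. The following is a minimal sketch of that check, not the sample's actual code. The `MentionParser` class and `TryGetPrompt` method are hypothetical names, and the case-insensitive match and the whitespace check after the prefix are assumptions; only the `@gpt` prefix comes from this tutorial.

```csharp
using System;

// Hypothetical helper: only the "@gpt" prefix comes from the tutorial.
public static class MentionParser
{
    private const string Prefix = "@gpt";

    // Returns true when the message starts with "@gpt" and
    // outputs the remaining text as the prompt for the assistant.
    public static bool TryGetPrompt(string message, out string prompt)
    {
        prompt = string.Empty;
        if (string.IsNullOrWhiteSpace(message))
        {
            return false;
        }

        var trimmed = message.TrimStart();

        // Assumption: the match ignores case, so "@GPT" also works.
        if (!trimmed.StartsWith(Prefix, StringComparison.OrdinalIgnoreCase))
        {
            return false;
        }

        // Assumption: the prefix must be followed by whitespace or the
        // end of the message, so "@gpts" is not treated as a mention.
        if (trimmed.Length > Prefix.Length && !char.IsWhiteSpace(trimmed[Prefix.Length]))
        {
            return false;
        }

        prompt = trimmed.Substring(Prefix.Length).Trim();
        return true;
    }
}
```

A hub method could call `MentionParser.TryGetPrompt(message, out var prompt)` and only start the streaming request when it returns true. Messages that don't match would be broadcast to the group as usual.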

Maintain context with history

Every request to OpenAI's Chat Completions API is stateless: OpenAI doesn't store past interactions. In a chat application, what a user or an assistant has already said is important for generating a contextually relevant response. We can achieve this by including the chat history in every request to the Chat Completions API.

The GroupHistoryStore class manages chat history for each group. It stores messages posted by both the users and AI assistants, ensuring that the conversation context is preserved across interactions. This context is crucial for generating coherent AI responses.

    // Store message generated by AI-assistant in memory
    public void UpdateGroupHistoryForAssistant(string groupName, string message)
    {
        var chatMessages = _store.GetOrAdd(groupName, _ => InitiateChatMessages());
        chatMessages.Add(new AssistantChatMessage(message));
    }

    // Store message generated by users in memory
    _history.GetOrAddGroupHistory(groupName, userName, message);
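To make the per-group history idea concrete, here is a self-contained sketch of an in-memory store. It is not the sample's GroupHistoryStore. The `ChatEntry` record stands in for the OpenAI SDK message types so the example runs without extra packages, and the class name, the system prompt text, and the `userName: message` format are all assumptions. Because `List<T>` isn't safe for concurrent writes, the sketch locks each group's list when several connections might post at once.

```csharp
using System.Collections.Concurrent;
using System.Collections.Generic;

// Hypothetical stand-in for the SDK's chat message types.
public sealed record ChatEntry(string Role, string Text);

// Hypothetical in-memory history store, keyed by group name.
public sealed class InMemoryGroupHistory
{
    private readonly ConcurrentDictionary<string, List<ChatEntry>> _store = new();

    // Assumption: user messages are stored with the sender's name
    // so the model can tell collaborators apart.
    public void AddUserMessage(string groupName, string userName, string message)
        => Append(groupName, new ChatEntry("user", $"{userName}: {message}"));

    public void AddAssistantMessage(string groupName, string message)
        => Append(groupName, new ChatEntry("assistant", message));

    // Returns a copy of the history to send with the next request,
    // so other callers can keep appending while it is enumerated.
    public IReadOnlyList<ChatEntry> Snapshot(string groupName)
    {
        var list = _store.GetOrAdd(groupName, _ => NewHistory());
        lock (list)
        {
            return list.ToArray();
        }
    }

    private void Append(string groupName, ChatEntry entry)
    {
        var list = _store.GetOrAdd(groupName, _ => NewHistory());
        lock (list)
        {
            list.Add(entry);
        }
    }

    // Assumption: every group starts with one system message.
    private static List<ChatEntry> NewHistory()
        => new() { new ChatEntry("system", "You are a helpful assistant in a group chat.") };
}
```

Since the API is stateless, each request would send the full snapshot for the group, which already includes the latest user message, and the assistant's reply would be appended once streaming completes.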

Stream AI responses

The CompleteChatStreamingAsync() method streams responses from OpenAI incrementally, which allows the application to send partial responses to the client as they're generated.

The code uses a StringBuilder to accumulate the AI's response. It tracks how much text has been added since the last send, and when that amount exceeds a threshold (for example, 20 characters), it sends the full accumulated response to the clients. Despite its name, lastSentTokenLength counts characters, not tokens. This approach ensures that users see the AI's response as it forms, mimicking a human-like typing effect.

    totalCompletion.Append(content);
    if (totalCompletion.Length - lastSentTokenLength > 20)
    {
        await Clients.Group(groupName).SendAsync("newMessageWithId", "ChatGPT", id, totalCompletion.ToString());
        lastSentTokenLength = totalCompletion.Length;
    }
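The buffering logic can be tried in isolation from SignalR and OpenAI. The sketch below is an illustration under stated assumptions, not the sample's implementation: `StreamingBuffer` and `RelayAsync` are hypothetical names, the `chunks` stream stands in for the text pieces produced by the streaming API, and the `sendAsync` callback stands in for the SignalR call. It also adds a final flush after the stream ends so that a tail shorter than the threshold still reaches the clients; the snippet above doesn't show how the sample handles that case.

```csharp
using System;
using System.Collections.Generic;
using System.Text;
using System.Threading.Tasks;

// Hypothetical helper that isolates the threshold-based buffering.
public static class StreamingBuffer
{
    // Accumulates streamed chunks and invokes sendAsync with the full
    // text so far whenever more than `threshold` new characters have
    // arrived since the last send. Returns the complete response.
    public static async Task<string> RelayAsync(
        IAsyncEnumerable<string> chunks,
        Func<string, Task> sendAsync,
        int threshold = 20)
    {
        var total = new StringBuilder();
        var lastSentLength = 0;

        await foreach (var chunk in chunks)
        {
            total.Append(chunk);
            if (total.Length - lastSentLength > threshold)
            {
                await sendAsync(total.ToString());
                lastSentLength = total.Length;
            }
        }

        // Assumption: flush whatever remains once the stream ends.
        if (total.Length > lastSentLength)
        {
            await sendAsync(total.ToString());
        }

        return total.ToString();
    }
}
```

In the hub, the callback could wrap the SendAsync call shown above. Each send carries the full text accumulated so far together with the same message id, which presumably lets the client replace the displayed message instead of appending fragments.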

Explore further

This project opens up exciting possibilities for further enhancement:

- Advanced AI features: Use other OpenAI capabilities like sentiment analysis, translation, or summarization.

- Incorporating multiple AI agents: You can introduce multiple AI agents with distinct roles or expertise areas within the same chat. For example, one agent might focus on text generation and the other provides image or audio generation. This interaction can create a richer and more dynamic user experience where different AI agents interact seamlessly with users and each other.

- Share chat history between server instances: Implement a database layer to persist chat history across sessions and server instances, allowing conversations to resume even after a disconnect. Beyond SQL or NoSQL databases, you can also explore using a caching service like Redis. It can significantly improve performance by storing frequently accessed data, such as chat history or AI responses, in memory. This reduces latency and offloads database operations, leading to faster response times, particularly in high-traffic scenarios.