Log ingestion is the process of collecting, transforming, and preparing log data from applications, servers, containers, and cloud services so you can store, analyze, and monitor it. Logs capture information such as errors, warnings, usage patterns, and system performance. Reliable log ingestion ensures that operational and security data is available in near real-time for troubleshooting and insights. This article explains how to send logs from Fluentd to Azure Data Explorer (Kusto), including installation, configuration, and validation steps.

Overview

What is Fluentd?

Fluentd is an open-source data collector you can use to unify log collection and routing across multiple systems. It supports more than 1,000 plugins and provides flexible options for filtering, buffering, and transforming data. You can use Fluentd in cloud‑native and enterprise environments for centralized log aggregation and forwarding.

What is Azure Data Explorer?

Azure Data Explorer (ADX) is a fast, fully managed analytics service optimized for real‑time analysis of large volumes of structured, semi‑structured, and unstructured data. ADX uses Kusto Query Language (KQL) and is widely used for telemetry, monitoring, diagnostics, and interactive data exploration.

Prerequisites

- Ruby installed on the node from which you want to collect and ingest logs. Ruby provides the gem package manager that you use to install Fluentd and its dependencies. See the Ruby installation instructions.

- Access to an Azure Data Explorer cluster and database.

- A Microsoft Entra ID (formerly Azure Active Directory) application with permissions to ingest data.
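You can confirm the Ruby prerequisite before you install anything. The following minimal Python sketch assumes Python 3.7 or later is available on the node. `check_tools` is a hypothetical helper and is not part of Fluentd or Ruby. The sketch looks up `ruby` and `gem` on the PATH. For each tool it reports the output of `--version`, or None when the tool isn't found.

```python
import shutil
import subprocess


def check_tools(tools=("ruby", "gem")):
    """Map each tool name to its --version output, or None if it isn't on PATH."""
    status = {}
    for tool in tools:
        path = shutil.which(tool)
        if path is None:
            status[tool] = None
            continue
        # Run "<tool> --version" and keep whatever it prints.
        result = subprocess.run(
            [path, "--version"], capture_output=True, text=True, check=False
        )
        status[tool] = result.stdout.strip() or result.stderr.strip()
    return status


if __name__ == "__main__":
    for tool, version in check_tools().items():
        print(f"{tool}: {version or 'NOT FOUND on PATH'}")
```

If either tool reports NOT FOUND, install Ruby before you continue with the gem commands below.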

How to get started with Fluentd and Azure Data Explorer

Install Fluentd by using RubyGems:

```shell
gem install fluentd
```

Install the Fluentd Kusto plugin:

```shell
gem install fluent-plugin-kusto
```

Configure Fluentd by creating a configuration file (for example, fluent.conf) with the following content. Replace the placeholders with your Azure and plugin values:

```
<match <tag-from-source-logs>>
  @type kusto
  endpoint https://<your-cluster>.<region>.kusto.windows.net
  database_name <your-database>
  table_name <your-table>
  logger_path <your-fluentd-log-file-path>

  # Authentication options
  auth_type <your-authentication-type>

  # AAD authentication
  tenant_id <your-tenant-id>
  client_id <your-client-id>
  client_secret <your-client-secret>

  # Managed identity authentication (optional)
  managed_identity_client_id <your-managed-identity-client-id>

  # Workload identity authentication (optional)
  workload_identity_tenant_id <your-workload-identity-tenant-id>
  workload_identity_client_id <your-workload-identity-client-id>

  # Non-buffered mode
  buffered false
  delayed false

  # Buffered mode
  # buffered true
  # delayed <true/false>

  <buffer>
    @type memory
    timekey 1m
    flush_interval 10s
  </buffer>
</match>
```
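If you generate fluent.conf for several environments, a small script can fill in the placeholders and refuse to produce output while any of them is still empty. The following is a minimal Python sketch, not part of the plugin. `TEMPLATE`, `render_config`, and the `FLUENTD_*` environment-variable naming convention are hypothetical. The template keeps only the non-buffered AAD options from the configuration above. Reading values from the environment keeps `client_secret` out of source control, but the rendered file still contains the secret, so protect it accordingly.

```python
import os
import re
import sys

# Hypothetical template: the non-buffered AAD subset of the fluent.conf above,
# using the same placeholder names as the article.
TEMPLATE = """\
<match <tag-from-source-logs>>
  @type kusto
  endpoint https://<your-cluster>.<region>.kusto.windows.net
  database_name <your-database>
  table_name <your-table>
  logger_path <your-fluentd-log-file-path>
  auth_type <your-authentication-type>
  tenant_id <your-tenant-id>
  client_id <your-client-id>
  client_secret <your-client-secret>
  buffered false
  delayed false
</match>
"""

# Matches the article's placeholders, but not Fluentd directives like <match ...>.
PLACEHOLDER = re.compile(r"<(your-[a-z-]+|region|tag-from-source-logs)>")


def render_config(values, template=TEMPLATE):
    """Fill every placeholder from `values`; raise KeyError if any has no value."""
    names = {m.group(1) for m in PLACEHOLDER.finditer(template)}
    missing = sorted(names - set(values))
    if missing:
        raise KeyError("no value supplied for: " + ", ".join(missing))
    return PLACEHOLDER.sub(lambda m: values[m.group(1)], template)


if __name__ == "__main__":
    # Hypothetical convention: <your-tenant-id> is read from FLUENTD_YOUR_TENANT_ID, etc.
    values = {}
    for name in {m.group(1) for m in PLACEHOLDER.finditer(TEMPLATE)}:
        var = "FLUENTD_" + name.upper().replace("-", "_")
        if var in os.environ:
            values[name] = os.environ[var]
    try:
        print(render_config(values), end="")
    except KeyError as err:
        print(f"cannot render fluent.conf: {err.args[0]}", file=sys.stderr)
```

Redirect the output to fluent.conf when it succeeds. For the value of `auth_type`, see the plugin documentation.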

For more configuration and authentication details, see the Fluentd Kusto plugin documentation.

Prepare Azure Data Explorer for ingestion:

Create an ADX cluster and database. See Create an Azure Data Explorer cluster and database.

Create a Microsoft Entra ID (formerly Azure Active Directory) application and grant it permission to ingest data into the ADX database. See Create a Microsoft Entra application registration in Azure Data Explorer.

Note

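Save the application (client) ID and the app key (client secret) for future use. You need them, along with your tenant ID, for the client_id, client_secret, and tenant_id settings in fluent.conf.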

Create a table for log ingestion. For example:

```kusto
.create table LogTable (tag:string, timestamp:datetime, record:dynamic)
```

Run Fluentd with the configuration file:

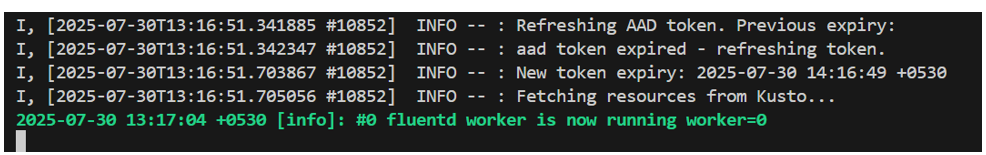

```shell
fluentd -c fluent.conf
```

Validate log ingestion by:

Checking the Fluentd log file to confirm that there are no errors and that ingestion requests are sent successfully.

Querying the ADX table to ensure logs are ingested correctly:

```kusto
LogTable
| take 10
```

Ingestion mapping: Use predefined ingestion mappings in Kusto to transform data from the default 3-column format into your desired schema. For more information, see Ingestion mappings support.
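Writing the JSON for a mapping by hand is error-prone. The following minimal Python sketch, which is not part of the plugin, builds a Kusto `.create table ... ingestion json mapping` command from a column-to-path dictionary. The target table `AppLogs`, the mapping name `FluentdMapping`, and the JSON paths (`$.timestamp`, `$.record.level`, `$.record.message`) are all hypothetical. They assume that the incoming payload carries the same `tag`, `timestamp`, and `record` fields as `LogTable`, so confirm the payload shape in the plugin documentation. This sketch doesn't cover how the plugin is configured to reference a mapping.

```python
import json


def build_json_mapping_command(table, mapping_name, columns):
    """Build a Kusto `.create table ... ingestion json mapping` command.

    `columns` maps each target column name to a JSONPath in the incoming record.
    """
    mapping = [
        {"column": column, "Properties": {"Path": path}}
        for column, path in columns.items()
    ]
    mapping_json = json.dumps(mapping)
    # The mapping is embedded as a single-quoted KQL string literal, so reject
    # any input that would terminate that literal early.
    if "'" in mapping_json:
        raise ValueError("column names and paths must not contain single quotes")
    return (
        f'.create table {table} ingestion json mapping "{mapping_name}" '
        f"'{mapping_json}'"
    )


if __name__ == "__main__":
    # Hypothetical target table, mapping name, and JSON paths.
    print(
        build_json_mapping_command(
            "AppLogs",
            "FluentdMapping",
            {
                "Timestamp": "$.timestamp",
                "Level": "$.record.level",
                "Message": "$.record.message",
            },
        )
    )
```

Run the printed command in your database after you create the target table with matching columns.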