Enable Azure Key Vault for Workflow Orchestration Manager

APPLIES TO:  Azure Data Factory

Tip

Try out Data Factory in Microsoft Fabric, an all-in-one analytics solution for enterprises. Microsoft Fabric covers everything from data movement to data science, real-time analytics, business intelligence, and reporting. Learn how to start a new trial for free!

Note

Workflow Orchestration Manager is powered by Apache Airflow.

Apache Airflow offers various back ends for securely storing sensitive information such as variables and connections. One of these options is Azure Key Vault. This article walks you through the process of configuring Key Vault as the secret back end for Apache Airflow within a Workflow Orchestration Manager environment.

Note

Workflow Orchestration Manager for Azure Data Factory relies on the open-source Apache Airflow application. For documentation and more tutorials for Airflow, see the Apache Airflow Documentation or Community webpages.

Prerequisites

- Azure subscription: If you don't have an Azure subscription, create a free Azure account before you begin.

- Azure Storage account: If you don't have a storage account, see Create an Azure Storage account for steps to create one. Ensure the storage account allows access only from selected networks.

- Azure Key Vault: You can follow this tutorial to create a new Key Vault instance if you don't have one.

- Service principal: Create a new service principal or use an existing one, and grant it permission to access your Key Vault instance. For example, you can assign the Key Vault Contributor role to the service principal name (SPN) on your Key Vault instance so that the SPN can manage it. You also need the service principal's client ID and client secret (API key), which you add as environment variables as described later in this article.

Permissions

Assign your SPN the following built-in roles on your Key Vault instance:

- Key Vault Contributor

- Key Vault Secrets User

Enable the Key Vault back end for a Workflow Orchestration Manager instance

To enable Key Vault as the secret back end for your Workflow Orchestration Manager instance:

Go to the Workflow Orchestration Manager instance's integration runtime environment.

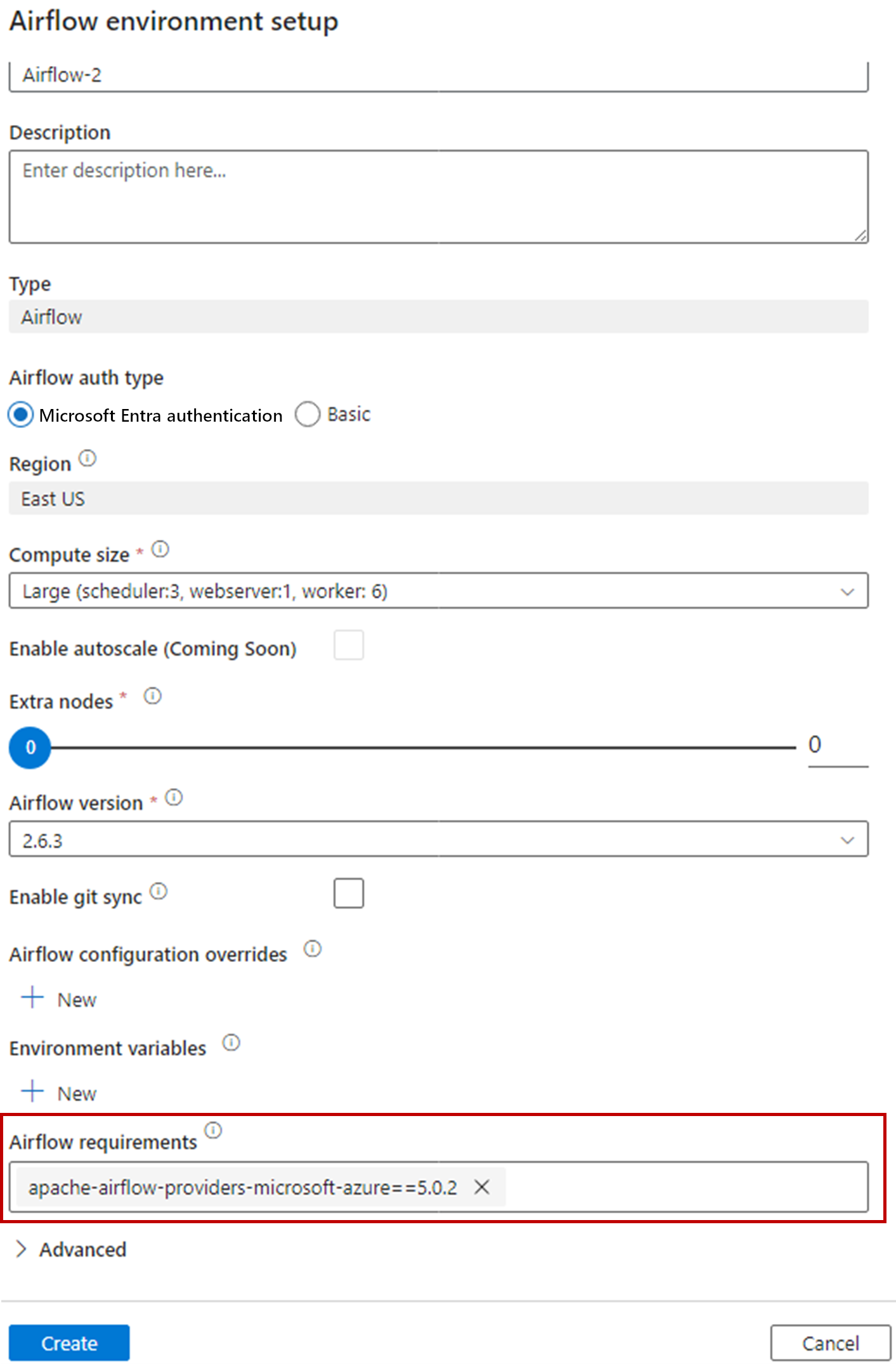

During the initial Airflow environment setup, add apache-airflow-providers-microsoft-azure to the Airflow requirements.

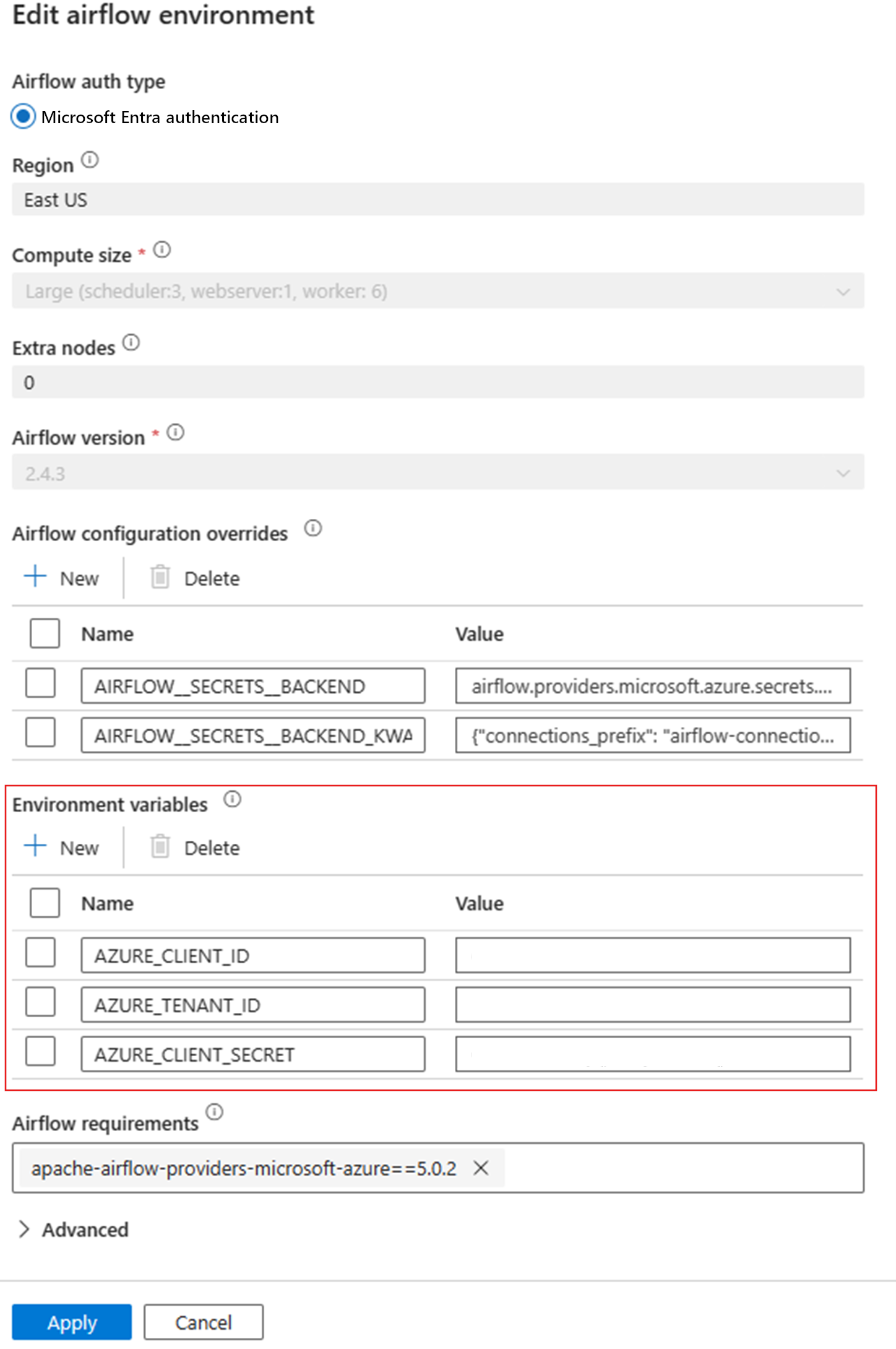

In the integration runtime properties, add the following Airflow configuration overrides:

- AIRFLOW__SECRETS__BACKEND: airflow.providers.microsoft.azure.secrets.key_vault.AzureKeyVaultBackend

- AIRFLOW__SECRETS__BACKEND_KWARGS: {"connections_prefix": "airflow-connections", "variables_prefix": "airflow-variables", "vault_url": "<your Key Vault URI>"}
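Airflow parses the AIRFLOW__SECRETS__BACKEND_KWARGS value as JSON, so the vault URL must be a quoted JSON string. The following Python sketch generates both override values with json.dumps so that the quoting is always valid. The vault URL https://my-vault.vault.azure.net/ and the helper build_backend_kwargs are hypothetical placeholders, not part of Workflow Orchestration Manager; paste the printed values into the configuration overrides yourself.

```python
import json

# Hypothetical placeholder: replace with the URI of your own Key Vault instance.
VAULT_URL = "https://my-vault.vault.azure.net/"


def build_backend_kwargs(vault_url: str,
                         connections_prefix: str = "airflow-connections",
                         variables_prefix: str = "airflow-variables") -> str:
    """Return the AIRFLOW__SECRETS__BACKEND_KWARGS value as a JSON string (hypothetical helper)."""
    kwargs = {
        "connections_prefix": connections_prefix,
        "variables_prefix": variables_prefix,
        "vault_url": vault_url,
    }
    # json.dumps quotes every string value, so the vault URL is valid JSON.
    return json.dumps(kwargs)


overrides = {
    "AIRFLOW__SECRETS__BACKEND": "airflow.providers.microsoft.azure.secrets.key_vault.AzureKeyVaultBackend",
    "AIRFLOW__SECRETS__BACKEND_KWARGS": build_backend_kwargs(VAULT_URL),
}

# Print each override as a key/value pair to copy into the integration runtime properties.
for key, value in overrides.items():
    print(f"{key} = {value}")
```

Generating the string instead of typing it by hand avoids the most common mistake here: leaving the vault URL unquoted, which makes the whole value invalid JSON.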

In the Airflow integration runtime properties, add the following environment variables:

- AZURE_CLIENT_ID = <Client ID of SPN>

- AZURE_TENANT_ID = <Tenant ID>

- AZURE_CLIENT_SECRET = <Client Secret of SPN>

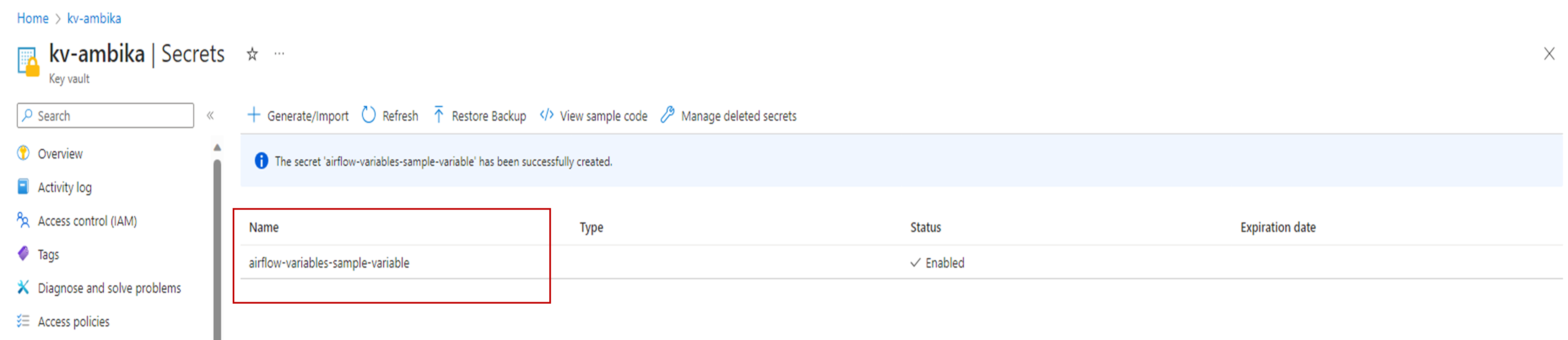
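A missing or empty environment variable typically surfaces only later, as an authentication error when a DAG first reads a secret. The following Python sketch checks for the three variables up front. It assumes the Key Vault back end authenticates through the Azure SDK's DefaultAzureCredential, whose environment-based credential reads AZURE_CLIENT_ID, AZURE_TENANT_ID, and AZURE_CLIENT_SECRET; the helper missing_sp_settings is hypothetical.

```python
import os
from typing import Mapping

# Assumption: these are the service principal variables read by the Azure SDK's
# environment-based credential, as configured in the steps above.
REQUIRED_VARS = ("AZURE_CLIENT_ID", "AZURE_TENANT_ID", "AZURE_CLIENT_SECRET")


def missing_sp_settings(env: Mapping[str, str]) -> list[str]:
    """Return the required variable names that are absent or empty in env (hypothetical helper)."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_sp_settings(os.environ)
    if missing:
        print("Missing service principal settings:", ", ".join(missing))
    else:
        print("All service principal settings are present.")
```

The helper takes any mapping rather than reading os.environ directly, so it can be exercised with a plain dictionary.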

Airflow then reads variables and connections from Key Vault. Store each one as a Key Vault secret whose name starts with the matching prefix defined in AIRFLOW__SECRETS__BACKEND_KWARGS, as shown previously. For example, a variable named sample-variable is stored as the secret airflow-variables-sample-variable. For more information, see Azure Key Vault as secret back end.
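The following Python sketch derives the Key Vault secret name for a variable or connection ID. It mirrors the naming rule described in the provider documentation for AzureKeyVaultBackend (prefix, then the separator "-", then the key, with underscores converted to dashes because Key Vault secret names allow only letters, digits, and dashes). The function key_vault_secret_name is a hypothetical helper, not the provider's API; verify the rule against the provider version you install.

```python
def key_vault_secret_name(prefix: str, key: str, sep: str = "-") -> str:
    """Build the Key Vault secret name for an Airflow variable or connection ID.

    Hypothetical helper: prefix + sep + key, with underscores replaced by sep,
    following the naming rule documented for the provider's AzureKeyVaultBackend.
    """
    # With an empty prefix, no separator is added in front of the key.
    path = f"{prefix}{sep}{key}" if prefix else key
    return path.replace("_", sep)


# Prefixes taken from the AIRFLOW__SECRETS__BACKEND_KWARGS value in this article.
VARIABLES_PREFIX = "airflow-variables"
CONNECTIONS_PREFIX = "airflow-connections"

print(key_vault_secret_name(VARIABLES_PREFIX, "sample-variable"))
# airflow-variables-sample-variable
print(key_vault_secret_name(CONNECTIONS_PREFIX, "my_postgres_conn"))
# airflow-connections-my-postgres-conn
```

In a DAG you keep using the Airflow-side name (for example, Variable.get("sample-variable")); the prefixed name is only what you create in Key Vault.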

Sample DAG using Key Vault as the back end

Create a new Python file, adf.py, with the following contents:

```python
import logging
from datetime import datetime, timedelta

from airflow import DAG
from airflow.models import Variable
from airflow.operators.python import PythonOperator


def retrieve_variable_from_akv():
    # With the Key Vault back end configured, Airflow checks Key Vault first,
    # so this reads the secret "airflow-variables-sample-variable".
    variable_value = Variable.get("sample-variable")
    logger = logging.getLogger(__name__)
    logger.info(variable_value)


with DAG(
    "tutorial",
    default_args={
        "depends_on_past": False,
        "email": ["airflow@example.com"],
        "email_on_failure": False,
        "email_on_retry": False,
        "retries": 1,
        "retry_delay": timedelta(minutes=5),
    },
    description="This DAG shows how to use Azure Key Vault to retrieve variables in Apache Airflow DAG",
    schedule_interval=timedelta(days=1),
    start_date=datetime(2021, 1, 1),
    catchup=False,
    tags=["example"],
) as dag:
    # Single task that reads the variable from Key Vault and logs its value.
    get_variable_task = PythonOperator(
        task_id="get_variable",
        python_callable=retrieve_variable_from_akv,
    )
```

Store the variables and connections that your DAGs use as secrets in Key Vault. For more information, see Store credentials in Azure Key Vault.
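A connection stored in Key Vault needs a secret value that Airflow can parse. One format Airflow accepts is the connection URI, conn_type://login:password@host:port/schema. The following Python sketch builds such a URI and percent-encodes the credentials so characters like "@" or "/" don't break it. The helper connection_uri and all connection values are hypothetical; store the result under a secret name that uses the airflow-connections prefix.

```python
from urllib.parse import quote


def connection_uri(conn_type: str, login: str, password: str,
                   host: str, port: int, schema: str = "") -> str:
    """Build an Airflow connection URI to use as a Key Vault secret value (hypothetical helper)."""
    # Percent-encode every reserved character in the credentials (safe='' also encodes '/').
    userinfo = f"{quote(login, safe='')}:{quote(password, safe='')}"
    uri = f"{conn_type}://{userinfo}@{host}:{port}"
    if schema:
        uri += f"/{quote(schema, safe='')}"
    return uri


# Hypothetical values for a PostgreSQL connection.
print(connection_uri("postgres", "airflow", "p@ss/word", "db.example.com", 5432, "analytics"))
# postgres://airflow:p%40ss%2Fword@db.example.com:5432/analytics
```

Encoding the credentials matters because an unescaped "@" in a password would otherwise be read as the end of the user-info part of the URI.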
