Dedupe rows and find nulls by using data flow snippets

APPLIES TO:  Azure Data Factory

Azure Data Factory  Azure Synapse Analytics

Azure Synapse Analytics

Tip

Try out Data Factory in Microsoft Fabric, an all-in-one analytics solution for enterprises. Microsoft Fabric covers everything from data movement to data science, real-time analytics, business intelligence, and reporting. Learn how to start a new trial for free!

By using code snippets in mapping data flows, you can easily perform common tasks such as data deduplication and null filtering. This article explains how to easily add those functions to your pipelines by using data flow script snippets.

Create a pipeline

Select New Pipeline.

Add a data flow activity.

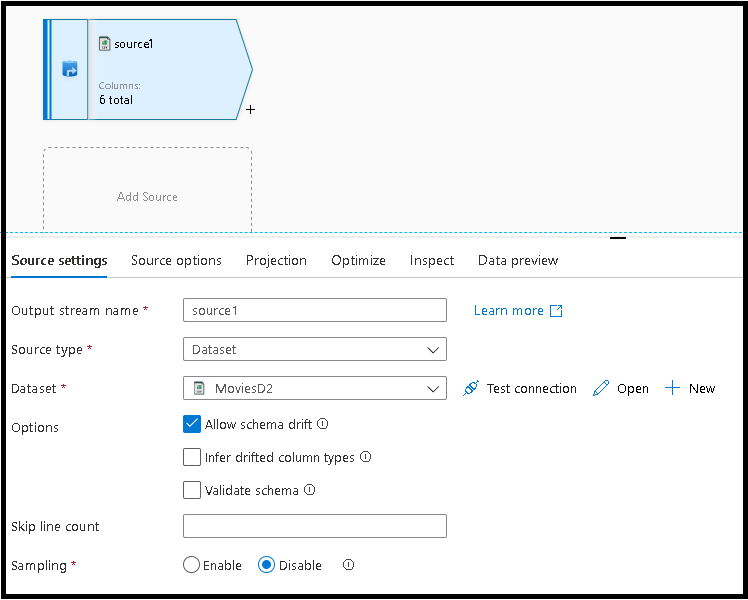

Select the Source settings tab, add a source transformation, and then connect it to one of your datasets.

The dedupe and null check snippets use generic patterns that take advantage of data flow schema drift. The snippets work with any schema from your dataset, or with datasets that have no pre-defined schema.

In the "Distinct row using all columns" section of Data flow script (DFS), copy the code snippet for DistinctRows.

Go to the Data Flow Script documentation page and copy the code snippet for Distinct Rows.

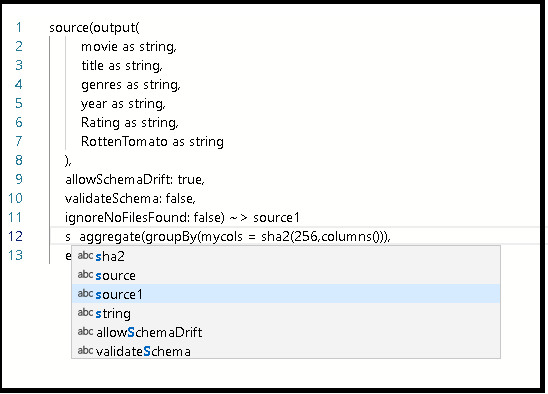

In your script, after the definition for

source1, hit Enter, and then paste the code snippet.Do either of the following:

Connect this pasted code snippet to the source transformation that you created earlier in the graph by typing source1 in front of the pasted code.

Alternatively, you can connect the new transformation in the designer by selecting the incoming stream from the new transformation node in the graph.

Now your data flow will remove duplicate rows from your source by using the aggregate transformation, which groups by all rows by using a general hash across all column values.

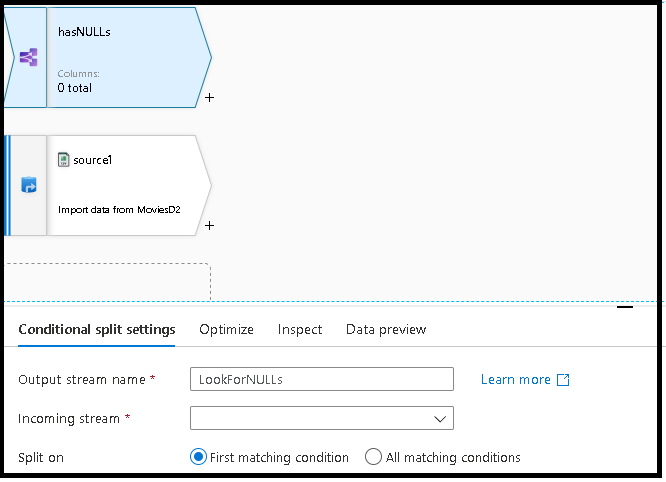

Add a code snippet for splitting your data into one stream that contains rows with nulls and another stream without nulls. To do so:

Go back to the Snippet library and this time copy the code for the NULL checks.

b. In your data flow designer, select Script again, and then paste this new transformation code at the bottom. This action connects the script to your previous transformation by placing the name of that transformation in front of the pasted snippet.

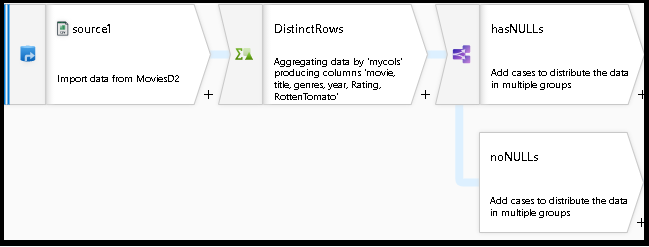

Your data flow graph should now look similar to this:

You have now created a working data flow with generic deduping and null checks by taking existing code snippets from the Data Flow Script library and adding them into your existing design.

Related content

- Build the rest of your data flow logic by using mapping data flows transformations.