Note

Access to this page requires authorization. You can try signing in or changing directories.

Access to this page requires authorization. You can try changing directories.

Important

This documentation has been retired and might not be updated. The products, services, or technologies mentioned in this content are no longer supported. See Databricks Light 2.4 Extended Support (EoS).

Databricks Light is the Databricks packaging of the open source Apache Spark runtime. It provides a runtime option for jobs that don't need the advanced performance, reliability, or autoscaling benefits provided by Databricks Runtime. In particular, Databricks Light does not support:

- Delta Lake

- Autopilot features such as autoscaling

- Highly concurrent, all-purpose clusters

- Notebooks, dashboards, and collaboration features

- Connectors to various data sources and BI tools

Databricks Light is a runtime environment for jobs (or “automated workloads”). When you run jobs on Databricks Light clusters, they are subject to lower jobs light compute pricing. You can select Databricks Light only when you create or schedule a JAR, Python, or spark-submit job and attach a cluster to that job; you cannot use Databricks Light to run notebook jobs or interactive workloads.

Databricks Light can be used in the same workspace with clusters running on other Databricks runtimes and pricing tiers. You don't need to request a separate workspace to get started.

What's in Databricks Light?

The release schedule of Databricks Light runtime follows the Apache Spark runtime release schedule. Any Databricks Light version is based on a specific version of Apache Spark. See the following release notes for more information:

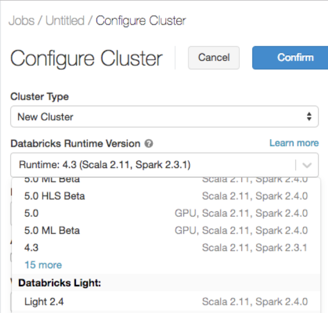

Create a cluster using Databricks Light

When you create a job cluster, select a Databricks Light version from the Databricks Runtime Version drop-down.

Important

Support for Databricks Light on pool-backed job clusters is in Public Preview.