Bloom filter indexes

Note

When using Photon-enabled compute and Databricks Runtime 12.2 or above, predictive I/O outperforms bloom filters for read performance. See What is predictive I/O?.

In Databricks Runtime 13.3 and above, Databricks recommends using clustering for Delta table layout. See Use liquid clustering for Delta tables.

Databricks only recommends using Bloom filters when using compute that does not support these features.

A Bloom filter index is a space-efficient data structure that enables data skipping on chosen columns, particularly for fields containing arbitrary text.

How Bloom filter indexes work

Azure Databricks Bloom filter indexes consist of a data skipping index for each data file. The Bloom filter index can be used to determine that a column value is definitively not in the file, or that it is probably in the file. Before reading a file Azure Databricks checks the index file, and the file is read only if the index indicates that the file might match a data filter.

Bloom filters support columns with the following input data types: byte, short, int, long, float, double, date, timestamp, and string. Nulls are not added to the Bloom filter, so any null related filter requires reading the data file. Azure Databricks supports the following data source filters: and, or, in, equals, and equalsnullsafe. Bloom filters are not supported on nested columns.

Configuration and reference

Use the following syntax to enable a Bloom filter:

CREATE BLOOMFILTER INDEX

ON TABLE table_name

FOR COLUMNS(column_name OPTIONS (fpp=0.1, numItems=5000))

For syntax details, see CREATE BLOOM FILTER INDEX and DROP BLOOM FILTER INDEX.

To disable Bloom filter operations, set the session level spark.databricks.io.skipping.bloomFilter.enabled configuration to false.

Display the list of Bloom filter indexes

To display the list of indexes, run:

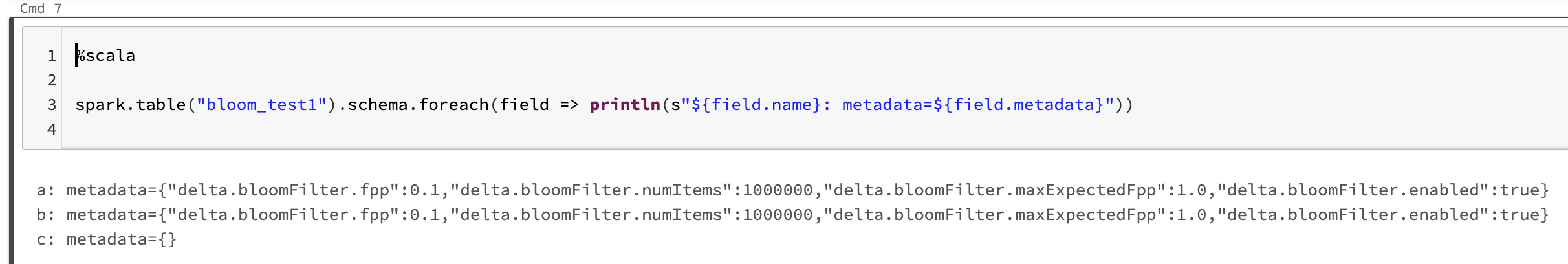

spark.table("<table-with-indexes>").schema.foreach(field => println(s"${field.name}: metadata=${field.metadata}"))

For example:

Feedback

Coming soon: Throughout 2024 we will be phasing out GitHub Issues as the feedback mechanism for content and replacing it with a new feedback system. For more information see: https://aka.ms/ContentUserFeedback.

Submit and view feedback for