Security guide

This guide provides an overview of security features and capabilities that an enterprise data team can use to harden their Azure Databricks environment according to their risk profile and governance policy.

This guide does not cover information about securing your data. For that information, see Data governance with Unity Catalog.

Authentication and access control

In Azure Databricks, a workspace is an Azure Databricks deployment in the cloud that functions as the unified environment that a specified set of users use for accessing all of their Azure Databricks assets. Your organization can choose to have multiple workspaces or just one, depending on your needs. An Azure Databricks account represents a single entity for purposes of billing, user management, and support. An account can include multiple workspaces and Unity Catalog metastores.

Account admins handle general account management, and workspace admins manage the settings and features of individual workspaces in the account. Both account and workspace admins manage Azure Databricks users, service principals, and groups, as well as authentication settings and access control.

Azure Databricks provides security features, such as single sign-on, to configure strong authentication. Admins can configure these settings to help prevent account takeovers, in which credentials belonging to a user are compromised using methods like phishing or brute force, giving an attacker access to all of the data accessible from the environment.

Access control lists determine who can view and perform operations on objects in Azure Databricks workspaces, such as notebooks and SQL warehouses.

To learn more about authentication and access control in Azure Databricks, see Authentication and access control.

Networking

Azure Databricks provides network protections that enable you to secure Azure Databricks workspaces and help prevent users from exfiltrating sensitive data. You can use IP access lists to restrict the network locations from which Azure Databricks users can connect. Using VNet injection (a customer-managed VNet), you can lock down outbound network access. To learn more, see Networking.
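To illustrate the idea behind an IP access list, the following sketch checks a client address against allowed and blocked CIDR ranges using Python's standard `ipaddress` module. It is a conceptual model only, not how Azure Databricks implements the feature. The function name `is_allowed`, the rule that block entries take precedence, and the example ranges (taken from address blocks reserved for documentation) are assumptions made for illustration.

```python
import ipaddress


def is_allowed(client_ip, allow_list, block_list=()):
    """Return True if client_ip falls in an allowed range and no blocked range.

    Conceptual sketch only: allow_list and block_list are iterables of
    CIDR strings such as "203.0.113.0/24". Block entries are assumed to
    take precedence over allow entries.
    """
    addr = ipaddress.ip_address(client_ip)

    def matches(ranges):
        # strict=False accepts ranges written with host bits set, e.g. "203.0.113.5/24"
        return any(addr in ipaddress.ip_network(r, strict=False) for r in ranges)

    if matches(block_list):
        return False
    return matches(allow_list)


# Hypothetical corporate egress range with one blocked address inside it
office_ranges = ["203.0.113.0/24"]
blocked = ["203.0.113.99/32"]
print(is_allowed("203.0.113.7", office_ranges, blocked))
print(is_allowed("198.51.100.1", office_ranges, blocked))
```

In this model, a connection from outside every allowed range is rejected even when the user's credentials are valid, which is the property that makes IP access lists useful against stolen credentials.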

Data security and encryption

Security-minded customers sometimes voice a concern that Databricks itself might be compromised, which could result in the compromise of their environment. Azure Databricks maintains a security program that manages the risk of such an incident. See the Security and Trust Center for an overview of the program. That said, no company can completely eliminate all risk, so Azure Databricks provides encryption features that give you additional control over your data. See Data security and encryption.

Secret management

Sometimes accessing data requires that you authenticate to external data sources. Databricks recommends that you use Databricks secrets to store your credentials instead of entering them directly into a notebook. For more information, see Secret management.
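As a minimal sketch of that recommendation, the following Python reads credentials from a secret scope rather than hard-coding them in a notebook. `dbutils.secrets.get` is the Databricks utility call for reading a secret. The helper `build_jdbc_options`, the scope name `jdbc`, and the key names are hypothetical and stand in for whatever you create in your own workspace.

```python
def build_jdbc_options(dbutils, url, scope="jdbc",
                       user_key="username", password_key="password"):
    """Return JDBC connection options with credentials read from a secret scope.

    `dbutils` is the utility object available in Databricks notebooks.
    The scope and key names are hypothetical placeholders.
    """
    return {
        "url": url,
        # Secrets are fetched at run time, so no credential appears in the notebook source
        "user": dbutils.secrets.get(scope=scope, key=user_key),
        "password": dbutils.secrets.get(scope=scope, key=password_key),
    }
```

In a notebook, you might pass the returned dictionary to a reader, for example `spark.read.format("jdbc").options(**build_jdbc_options(dbutils, url))`, with `dbtable` added as needed.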

Auditing, privacy, and compliance

Azure Databricks provides auditing features that enable admins to monitor user activity and detect security anomalies. For example, you can monitor for account takeovers by alerting on logins at unusual times or on simultaneous remote logins.
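The kind of alerting described above can be sketched in plain Python. The example assumes login events have already been extracted into dictionaries with hypothetical `user`, `time`, and `ip` fields. The actual audit log schema differs, and the working-hours bounds and 10-minute window are arbitrary illustrative thresholds, not recommended values.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def flag_suspicious_logins(events, work_start=7, work_end=20,
                           window=timedelta(minutes=10)):
    """Return (user, reason, time) tuples for logins that look anomalous.

    events: iterable of dicts with hypothetical keys "user", "time"
    (a datetime), and "ip". Thresholds are illustrative assumptions.
    """
    alerts = []
    by_user = defaultdict(list)
    for event in sorted(events, key=lambda e: e["time"]):
        # Flag logins outside the assumed working hours
        if not (work_start <= event["time"].hour < work_end):
            alerts.append((event["user"], "unusual_time", event["time"]))
        by_user[event["user"]].append(event)

    for user, logins in by_user.items():
        # Flag consecutive logins from different addresses within the window
        for prev, cur in zip(logins, logins[1:]):
            if cur["ip"] != prev["ip"] and cur["time"] - prev["time"] <= window:
                alerts.append((user, "simultaneous_remote", cur["time"]))
    return alerts


sample = [
    {"user": "alice", "time": datetime(2024, 1, 8, 9, 0), "ip": "203.0.113.7"},
    {"user": "alice", "time": datetime(2024, 1, 8, 9, 5), "ip": "198.51.100.20"},
    {"user": "bob", "time": datetime(2024, 1, 8, 3, 0), "ip": "203.0.113.8"},
]
for alert in flag_suspicious_logins(sample):
    print(alert)
```

A production rule would typically also account for time zones, VPN address changes, and service principals, which this sketch ignores.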

For more information, see Auditing, privacy, and compliance.

Security Analysis Tool

Important

The Security Analysis Tool (SAT) is a productivity tool in an Experimental state. It’s not meant to be used as a certification of your deployments. The SAT project is regularly updated to improve correctness of checks, add new checks, and fix bugs.

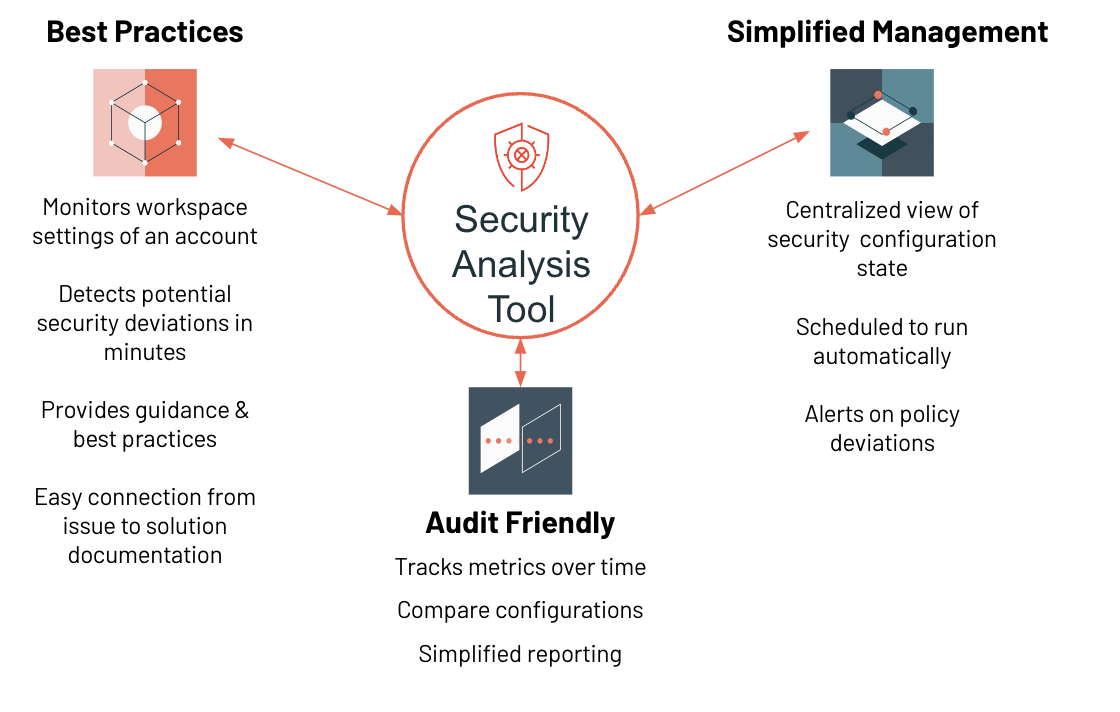

You can use the Security Analysis Tool (SAT) to analyze your Azure Databricks account and workspace security configurations. SAT provides recommendations that help you follow Databricks security best practices. SAT is typically run daily as an automated workflow. The details of these check results are persisted in Delta tables in your storage so that trends can be analyzed over time. These results are displayed in a centralized Azure Databricks dashboard.

For more information, see the Security Analysis Tool GitHub repo.

Learn more

Here are some resources to help you build a comprehensive security solution that meets your organization’s needs:

- The Databricks Security and Trust Center, which provides information about the ways in which security is built into every layer of the Databricks platform.

- Security Best Practices, which provides a checklist of security practices, considerations, and patterns that you can apply to your deployment, drawn from Databricks enterprise engagements.
