Azure DevOps Services | Azure DevOps Server 2022 - Azure DevOps Server 2019

A task performs an action in a pipeline and is a packaged script or procedure that's abstracted with a set of inputs. Tasks are the building blocks for defining automation in a pipeline.

When you run a job, all the tasks are run in sequence, one after the other. To run the same set of tasks in parallel on multiple agents, or to run some tasks without using an agent, see jobs.

By default, all tasks run in the same context, whether that's on the host or in a job container.

You can optionally use step targets to control the context for an individual task.

Learn more about how to specify properties for a task with the built-in tasks.

To learn more about the general attributes supported by tasks, see the YAML Reference for steps.task.

Custom tasks

Azure DevOps includes built-in tasks to enable fundamental build and deployment scenarios. You also can create your own custom task.

In addition, Visual Studio Marketplace offers many extensions. Each extension, when installed to your subscription or collection, extends the task catalog with one or more tasks. You can also write your own custom extensions to add tasks to Azure Pipelines.

In YAML pipelines, you refer to tasks by name. If a name matches both an in-box task and a custom task, the in-box task takes precedence. You can use the task GUID or a fully qualified name for the custom task to avoid this risk:

steps:

- task: myPublisherId.myExtensionId.myContributionId.myTaskName@1 #format example

- task: qetza.replacetokens.replacetokens-task.replacetokens@3 #working example

To find myPublisherId and myExtensionId, select Get on a task in the marketplace. The values after the itemName in your URL string are myPublisherId and myExtensionId. You can also find the fully qualified name by adding the task to a Release pipeline and selecting View YAML when editing the task.

Task versions

Tasks are versioned, and you must specify the major version of the task used in your pipeline. This can help to prevent issues when new versions of a task are released. Tasks are typically backwards compatible, but in some scenarios you may encounter unpredictable errors when a task is automatically updated.

When a new minor version is released (for example, 1.2 to 1.3), your pipeline automatically uses the new version. However, if a new major version is released (for example, 2.0), your pipeline continues to use the major version you specified until you edit the pipeline and manually change to the new major version. The log includes an alert that a new major version is available.

You can set which minor version gets used by specifying the full version number of a task after the @ sign (example: GoTool@0.3.1). You can only use task versions that exist for your organization.

In YAML, you specify the major version using @ in the task name.

For example, to pin to version 2 of the PublishTestResults task:

steps:

- task: PublishTestResults@2
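As a sketch of the full-version pinning described earlier in this section, the following step pins the Go tool installer to the exact version 0.3.1 that this article uses as its example. The Go version passed in inputs is an assumed value for illustration, and the pin works only if that task version exists in your organization.

```yaml
steps:
# Pin to an exact task version. New minor and patch releases of the task
# are not picked up automatically while this pin is in place.
- task: GoTool@0.3.1
  displayName: Install Go (task pinned to 0.3.1)
  inputs:
    version: '1.22'   # Go version to install; assumed value for illustration
```

Pinning the full version trades automatic fixes for predictability, so it fits best as a temporary measure while you investigate a regression in a newer minor version.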

Task control options

Each task offers you some Control Options.

Control options are available as keys on the task section.

- task: string # Required as first property. Name of the task to run.
  inputs: # Inputs for the task.
    string: string # Name/value pairs
  condition: string # Evaluate this condition expression to determine whether to run this task.
  continueOnError: boolean # Continue running even on failure?
  displayName: string # Human-readable name for the task.
  target: string | target # Environment in which to run this task.
  enabled: boolean # Run this task when the job runs?
  env: # Variables to map into the process's environment.
    string: string # Name/value pairs
  name: string # ID of the step.
  timeoutInMinutes: string # Time to wait for this task to complete before the server kills it.
  retryCountOnTaskFailure: string # Number of retries if the task fails.

Note

A given task or job can't unilaterally decide whether the job/stage continues. What it can do is offer a status of succeeded or failed, and downstream tasks/jobs each have a condition computation that lets them decide whether to run or not. The default condition is effectively "run if we're in a successful state".

Continue on error alters this in a subtle way. It effectively "tricks" all downstream steps/jobs into treating any result as "success" for the purposes of making that decision. Or to put it another way, it says "don't consider the failure of this task when you're making a decision about the condition of the containing structure".

The timeout period begins when the task starts running. It doesn't include the time the task is queued or is waiting for an agent.

Note

Pipelines may have a job level timeout specified in addition to a task level timeout. If the job level timeout interval elapses before your step completes, the running job is terminated, even if the step is configured with a longer timeout interval. For more information, see Timeouts.

In this YAML, PublishTestResults@2 runs even if the previous step fails because of the succeededOrFailed() condition.

steps:
- task: UsePythonVersion@0
  inputs:
    versionSpec: '3.x'
    architecture: 'x64'
- task: PublishTestResults@2
  inputs:
    testResultsFiles: "**/TEST-*.xml"
  condition: succeededOrFailed()
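The continueOnError and timeoutInMinutes control options described earlier can be set in the same way. The following is a minimal sketch; the npm scripts are hypothetical and stand in for whatever commands your project uses.

```yaml
steps:
# A lint step whose failure shouldn't stop the steps that follow it.
- script: npm run lint          # hypothetical project script
  displayName: Lint (non-blocking)
  continueOnError: true         # later steps still run if this step fails
  timeoutInMinutes: 5           # the step is stopped if it runs longer than 5 minutes

# Runs under the default condition, even when the lint step failed,
# because continueOnError keeps that failure out of the decision.
- script: npm test              # hypothetical project script
  displayName: Run tests
```

The task-level timeout here still sits inside any job-level timeout, so the shorter of the two limits is the one that takes effect first.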

Conditions

You can specify when a task runs by using one of the following conditions:

- Only when all previous direct and indirect dependencies with the same agent pool succeed. If you have different agent pools, those stages or jobs run concurrently. This condition is the default if no condition is set in the YAML.

- Even if a previous dependency fails, unless the run is canceled. Use succeededOrFailed() in the YAML for this condition.

- Even if a previous dependency fails, and even if the run is canceled. Use always() in the YAML for this condition.

- Only when a previous dependency fails. Use failed() in the YAML for this condition.

- Custom conditions, which are composed of expressions.
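The conditions listed above can be sketched on individual steps as follows. The build script name and the branch used in the custom condition are assumptions for illustration.

```yaml
steps:
- script: ./build.sh            # hypothetical build script
  displayName: Build

# Runs only if an earlier step failed.
- script: echo "Build failed, collecting diagnostics"
  displayName: Collect diagnostics
  condition: failed()

# Runs even if an earlier step failed or the run was canceled.
- script: echo "Cleaning up"
  displayName: Clean up
  condition: always()

# Custom condition: earlier steps succeeded and the run is for the main branch.
- script: echo "Publishing"
  displayName: Publish from main
  condition: and(succeeded(), eq(variables['Build.SourceBranch'], 'refs/heads/main'))
```

A step without a condition key behaves like the Build step here and runs only while the job is in a successful state.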

Step target

Tasks run in an execution context, which is either the agent host or a container.

An individual step might override its context by specifying a target.

The available options are the word host, which targets the agent host, and the name of any container defined in the pipeline.

For example:

resources:
  containers:
  - container: pycontainer
    image: python:3.11

steps:
- task: SampleTask@1
  target: host
- task: AnotherTask@1
  target: pycontainer

Here, the SampleTask runs on the host and AnotherTask runs in a container.
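The override is easiest to see when the job itself runs in a container. The following sketch assumes a Microsoft-hosted Ubuntu agent and a hypothetical job name; every step runs in pycontainer unless it sets its own target.

```yaml
resources:
  containers:
  - container: pycontainer
    image: python:3.11

jobs:
- job: build                      # hypothetical job name
  pool:
    vmImage: ubuntu-latest
  container: pycontainer          # default context for every step in this job
  steps:
  - script: python --version      # runs inside pycontainer
    displayName: Run in the job container
  - script: hostname
    target: host                  # overrides the job container for this step only
    displayName: Run on the agent host
```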

Number of retries if task failed

Use retryCountOnTaskFailure to specify the number of retries if the task fails. The default is zero retries. For more information on task properties, see steps.task in the YAML Schema.

- task: <name of task>
  retryCountOnTaskFailure: <max number of retries>
  ...

Note

- Requires agent version 2.194.0 or later. On Azure DevOps Server 2022, retries are not supported for agentless tasks. For more information, see Azure DevOps service update November 16, 2021 - Automatic retries for a task, and Azure DevOps service update June 14, 2025 - Retries for server tasks.

- The maximum number of retries allowed is 10.

- The wait time between each retry increases after each failed attempt, following an exponential backoff strategy. The 1st retry happens after 1 second, the 2nd retry after 4 seconds, and the 10th retry after 100 seconds.

- No assumption is made about the idempotency of the task. If the task has side effects (for instance, if it partially created an external resource), it might fail the second time it runs.

- There is no information about the retry count made available to the task.

- A warning is added to the task logs indicating that it has failed before it is retried.

- All of the attempts to retry a task are shown in the UI as part of the same task node.

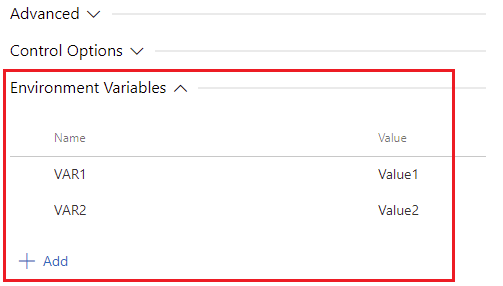
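As a concrete sketch of retryCountOnTaskFailure, the following step retries a download that can fail for transient network reasons. The URL is a placeholder, not a real download location.

```yaml
steps:
- task: CmdLine@2
  displayName: Download a tool archive
  retryCountOnTaskFailure: 3      # up to 3 retries after the first failed attempt
  inputs:
    # Placeholder URL; --fail makes curl exit with an error on HTTP failures,
    # which is what causes the task to fail and be retried.
    script: curl --fail --silent --show-error -O https://example.com/tool.tar.gz
```

A download is a reasonable candidate for retries because repeating it has no lasting side effects. Steps that create external resources need more care, for the idempotency reason noted above.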

Environment variables

Each task has an env property that is a list of string pairs that represent environment variables mapped into the task process.

- task: AzureCLI@2
  displayName: Azure CLI
  inputs: # Specific to each task
  env:
    ENV_VARIABLE_NAME: value
    ENV_VARIABLE_NAME2: value
  ...

The following example runs the script step, which is a shortcut for the Command line task, followed by the equivalent task syntax. This example assigns a value to the AZURE_DEVOPS_EXT_PAT environment variable, which is used to authenticate with the Azure DevOps CLI.

# Using the script shortcut syntax
- script: az pipelines variable-group list --output table
  env:
    AZURE_DEVOPS_EXT_PAT: $(System.AccessToken)
  displayName: 'List variable groups using the script step'

# Using the task syntax
- task: CmdLine@2
  inputs:
    script: az pipelines variable-group list --output table
  env:
    AZURE_DEVOPS_EXT_PAT: $(System.AccessToken)
  displayName: 'List variable groups using the command line task'

- task: Bash@3
  inputs:
    targetType: # specific to each task
  env:
    ENV_VARIABLE_NAME: value
    ENV_VARIABLE_NAME2: value
  ...

The following example runs the script step, which runs in Bash on Linux and macOS agents, followed by the equivalent syntax for the Bash@3 task. This example assigns a value to the ENV_VARIABLE_NAME environment variable and echoes the value.

# Using the script shortcut syntax
- script: echo "This is " $ENV_VARIABLE_NAME
  env:
    ENV_VARIABLE_NAME: "my value"
  displayName: 'echo environment variable'

# Using the task syntax
- task: Bash@3
  inputs:
    targetType: 'inline' # Run the script input instead of a script file
    script: echo "This is " $ENV_VARIABLE_NAME
  env:
    ENV_VARIABLE_NAME: "my value"
  displayName: 'echo environment variable'

Build tool installers (Azure Pipelines)

Tool installers enable your build pipeline to install and control your dependencies. Specifically, you can:

- Install a tool or runtime on the fly (even on Microsoft-hosted agents) just in time for your CI build.

- Validate your app or library against multiple versions of a dependency such as Node.js.

For example, you can set up your build pipeline to run and validate your app for multiple versions of Node.js.

Example: Test and validate your app on multiple versions of Node.js

Create an azure-pipelines.yml file in your project's base directory with the following contents.

pool:
  vmImage: ubuntu-latest

steps:
# Node install
- task: UseNode@1
  displayName: Node install
  inputs:
    version: '16.x' # The version we're installing
# Write the location of the installed Node.js to the log
- script: which node

Create a new build pipeline and run it. Observe how the build is run. The Node.js Tool Installer downloads the Node.js version if it isn't already on the agent. The Command Line script logs the location of the Node.js version on disk.
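To validate against several Node.js versions in one run, the same installer task can be combined with a matrix strategy. The following is a sketch under stated assumptions: the matrix entry names, the nodeVersion variable name, and the versions chosen are illustrative.

```yaml
pool:
  vmImage: ubuntu-latest

strategy:
  matrix:
    node_18:                      # illustrative entry name
      nodeVersion: '18.x'
    node_20:                      # illustrative entry name
      nodeVersion: '20.x'

steps:
# Each matrix entry becomes its own job with its own value of nodeVersion.
- task: UseNode@1
  displayName: Install Node.js $(nodeVersion)
  inputs:
    version: $(nodeVersion)
# Log the version that the installer put on the path
- script: node --version
  displayName: Show the installed Node.js version
```

Each matrix entry runs as a separate job, so the jobs can run in parallel when enough parallel jobs are available to your organization.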

Tool installer tasks

For a list of our tool installer tasks, see Tool installer tasks.

Disabling in-box and Marketplace tasks

On the organization settings page, you can disable Marketplace tasks, in-box tasks, or both.

Disabling Marketplace tasks can help increase security of your pipelines.

If you disable both in-box and Marketplace tasks, only tasks you install using tfx are available.

Help and support

- Explore troubleshooting tips.

- Get advice on Stack Overflow.

- Post your questions, search for answers, or suggest a feature in the Azure DevOps Developer Community.

- Get support for Azure DevOps.
