Learn how to configure Apache Ranger policies for Enterprise Security Package (ESP) Apache Kafka clusters. ESP clusters are connected to a domain, which allows users to authenticate with domain credentials. In this tutorial, you create two Ranger policies to restrict access to the sales* and marketingspend topics.

In this tutorial, you learn how to:

- Create domain users.

- Create Ranger policies.

- Create topics in a Kafka cluster.

- Test Ranger policies.

Prerequisite

An HDInsight Kafka cluster with Enterprise Security Package.

Connect to Apache Ranger Admin UI

From a browser, connect to the Ranger Admin user interface (UI) by using the URL https://ClusterName.azurehdinsight.net/Ranger/. Remember to change ClusterName to the name of your Kafka cluster. Ranger credentials aren't the same as Hadoop cluster credentials. To prevent browsers from using cached Hadoop credentials, use a new InPrivate browser window to connect to the Ranger Admin UI.

Sign in by using your Microsoft Entra admin credentials. The Microsoft Entra admin credentials aren't the same as HDInsight cluster credentials or Linux HDInsight node SSH credentials.

Create domain users

To learn how to create the sales_user and marketing_user domain users, see Create a HDInsight cluster with Enterprise Security Package. In a production scenario, domain users come from your Microsoft Entra ID tenant.

Create a Ranger policy

Create a Ranger policy for sales_user and marketing_user.

Open the Ranger Admin UI.

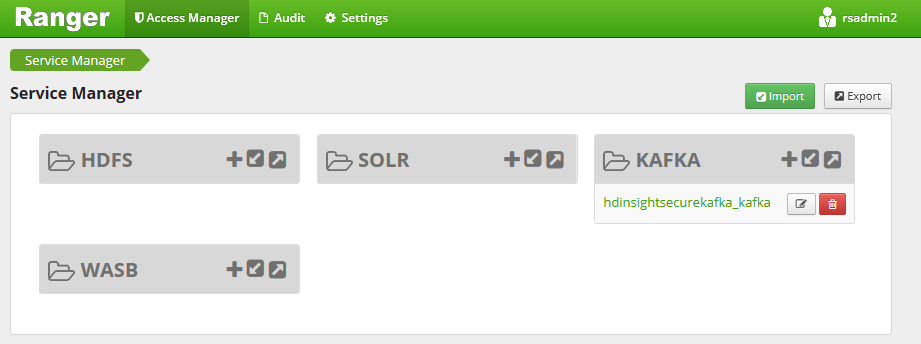

Under Kafka, select <ClusterName>_kafka. One preconfigured policy might be listed.

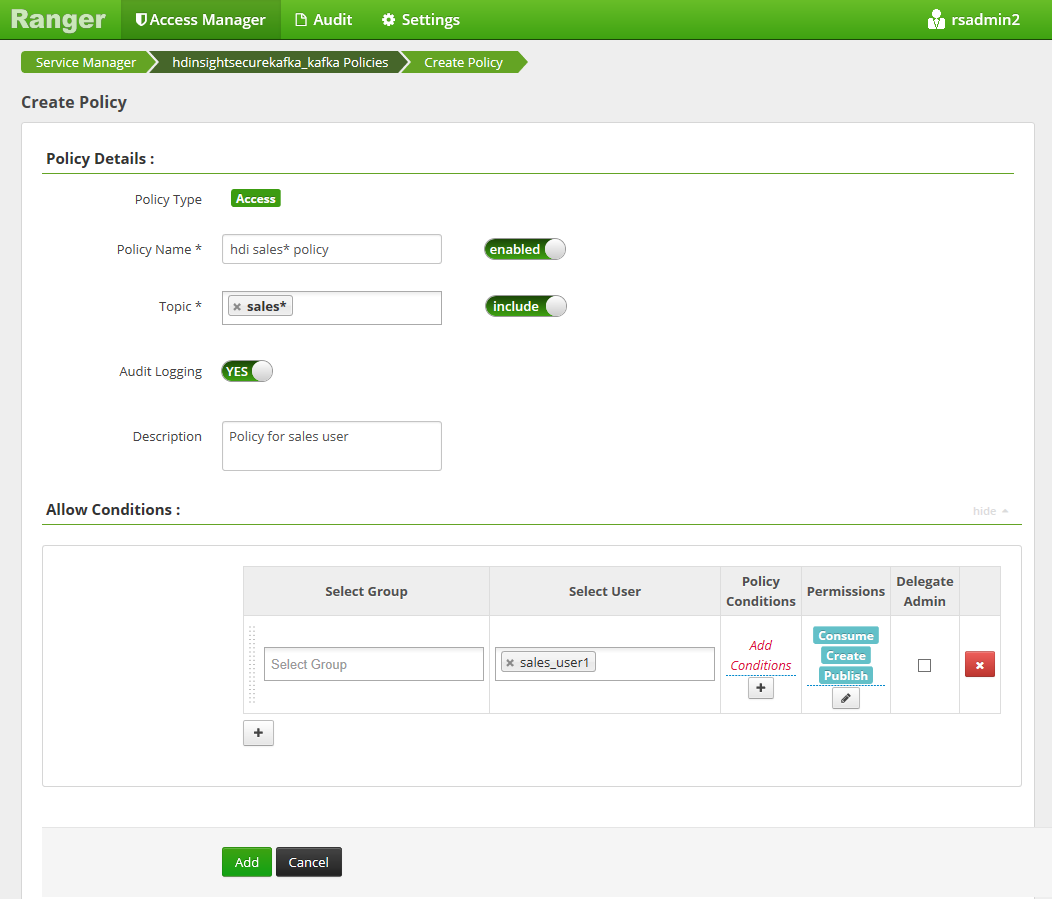

Select Add New Policy and enter the following values:

| Setting | Suggested value |
|---|---|
| Policy Name | HDInsight sales* policy |
| Topic | sales* |
| Select User | sales_user1 |
| Permissions | publish, consume, create |

The following wildcards can be included in the topic name:

- An asterisk (*) indicates zero or more occurrences of characters.

- A question mark (?) indicates a single character.

Wait a few moments for Ranger to sync with Microsoft Entra ID if a domain user isn't automatically populated for Select User.

Select Add to save the policy.

Select Add New Policy and then enter the following values:

| Setting | Suggested value |
|---|---|
| Policy Name | HDInsight marketing policy |
| Topic | marketingspend |
| Select User | marketing_user1 |
| Permissions | publish, consume, create |

Select Add to save the policy.
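The wildcard rules for topic names can be tried out locally before you save a policy. The following is a minimal sketch that uses the shell's own glob matching, which treats * and ? the same way for simple patterns like the ones in this tutorial. It isn't Ranger's matcher, and the helper name topic_matches is hypothetical.

```shell
#!/bin/sh
# topic_matches PATTERN TOPIC
# Returns 0 when TOPIC matches PATTERN, where * matches zero or more
# characters and ? matches exactly one character.
# Illustration only: this uses the shell's glob matcher, not Ranger's,
# so patterns containing [ ] or backslashes may behave differently.
topic_matches() {
    case "$2" in
        $1) return 0 ;;
        *)  return 1 ;;
    esac
}

# Check the tutorial's topic names against the sales* pattern.
for topic in sales salesevents marketingspend; do
    if topic_matches 'sales*' "$topic"; then
        echo "sales* matches $topic"
    else
        echo "sales* does not match $topic"
    fi
done
```

Because * also matches zero characters, a topic named exactly sales is covered by the sales* policy, whereas a pattern such as sales? would require exactly one extra character.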

Create topics in a Kafka cluster with ESP

To create two topics, salesevents and marketingspend:

Use the following command to open a Secure Shell (SSH) connection to the cluster:

    ssh DOMAINADMIN@CLUSTERNAME-ssh.azurehdinsight.net

Replace DOMAINADMIN with the admin user for your cluster configured during cluster creation. Replace CLUSTERNAME with the name of your cluster. If prompted, enter the password for the admin user account. For more information on using SSH with HDInsight, see Use SSH with HDInsight.

Use the following commands to save the cluster name to a variable and install a JSON parsing utility, jq. When prompted, enter the Kafka cluster name.

    sudo apt -y install jq
    read -p 'Enter your Kafka cluster name:' CLUSTERNAME

Use the following command to get the Kafka broker hosts. When prompted, enter the password for the cluster admin account.

    export KAFKABROKERS=$(curl -sS -u admin -G https://$CLUSTERNAME.azurehdinsight.net/api/v1/clusters/$CLUSTERNAME/services/KAFKA/components/KAFKA_BROKER | jq -r '["\(.host_components[].HostRoles.host_name):9092"] | join(",")' | cut -d',' -f1,2)

Before proceeding, you might need to set up your development environment if you haven't already done so. You need components such as the Java JDK, Apache Maven, and an SSH client with Secure Copy (SCP). For more information, see Setup instructions.

Download the Apache Kafka domain-joined producer consumer examples.

Follow steps 2 and 3 under Build and deploy the example in Tutorial: Use the Apache Kafka Producer and Consumer APIs.

Note

For this tutorial, use kafka-producer-consumer.jar under the DomainJoined-Producer-Consumer project. Don't use the one under the Producer-Consumer project, which is for non-domain-joined scenarios.

Run the following commands:

    java -jar -Djava.security.auth.login.config=/usr/hdp/current/kafka-broker/conf/kafka_client_jaas.conf kafka-producer-consumer.jar create salesevents $KAFKABROKERS
    java -jar -Djava.security.auth.login.config=/usr/hdp/current/kafka-broker/conf/kafka_client_jaas.conf kafka-producer-consumer.jar create marketingspend $KAFKABROKERS
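A malformed or empty KAFKABROKERS value is an easy mistake to make before running the create commands. The following sketch, written under the assumption that a plain host:port,host:port list is expected, checks the variable and then prints (or optionally runs) the two create commands. The helper names valid_broker_list and create_topics and the DRY_RUN switch are hypothetical; only the java command line is taken from this tutorial.

```shell
#!/bin/sh
# Hypothetical helpers; only the java command line comes from the tutorial.
JAAS_CONF=/usr/hdp/current/kafka-broker/conf/kafka_client_jaas.conf

# valid_broker_list LIST
# Returns 0 when LIST is a comma-separated list of host:port entries.
valid_broker_list() {
    case "$1" in
        ''|,*|*,|*,,*) return 1 ;;            # empty list or empty entry
    esac
    rest=$1
    while [ -n "$rest" ]; do
        entry=${rest%%,*}                      # first entry in the list
        case "$rest" in
            *,*) rest=${rest#*,} ;;            # drop it and continue
            *)   rest= ;;                      # that was the last entry
        esac
        case "$entry" in
            *:*) host=${entry%:*}; port=${entry##*:} ;;
            *)   return 1 ;;                   # no port given
        esac
        case "$host" in
            ''|*[!0-9A-Za-z.-]*) return 1 ;;   # also catches <placeholders>
        esac
        case "$port" in
            ''|*[!0-9]*) return 1 ;;           # port must be numeric
        esac
    done
    return 0
}

# create_topics LIST TOPIC...
# Prints one create command per topic; runs them only when DRY_RUN=0.
create_topics() {
    brokers=$1
    shift
    if ! valid_broker_list "$brokers"; then
        echo "Broker list looks malformed: '$brokers'" >&2
        return 1
    fi
    for topic in "$@"; do
        if [ "${DRY_RUN:-1}" = 1 ]; then
            echo "java -jar -Djava.security.auth.login.config=$JAAS_CONF kafka-producer-consumer.jar create $topic $brokers"
        else
            java -jar "-Djava.security.auth.login.config=$JAAS_CONF" \
                kafka-producer-consumer.jar create "$topic" "$brokers" || return 1
        fi
    done
}

# Only act when KAFKABROKERS has been exported as described above.
if [ -n "${KAFKABROKERS:-}" ]; then
    create_topics "$KAFKABROKERS" salesevents marketingspend
fi
```

By default the sketch only prints the commands so that you can review them. Setting DRY_RUN=0 runs them from the directory that contains kafka-producer-consumer.jar.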

Test the Ranger policies

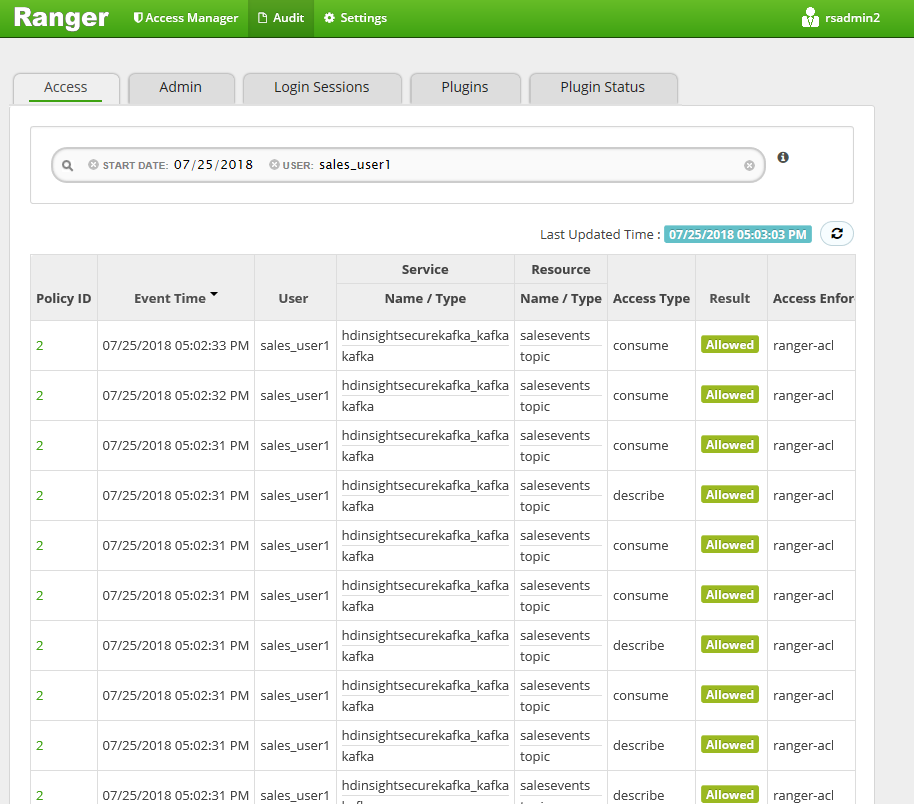

Based on the Ranger policies configured, sales_user1 can produce to and consume from the topic salesevents but not the topic marketingspend. Conversely, marketing_user1 can produce to and consume from the topic marketingspend but not the topic salesevents.

Open a new SSH connection to the cluster. Use the following command to sign in as sales_user1:

    ssh sales_user1@CLUSTERNAME-ssh.azurehdinsight.net

Use the broker names from the previous section to set the following environment variable:

    export KAFKABROKERS=<brokerlist>:9092

Example:

    export KAFKABROKERS=<brokername1>.contoso.com:9092,<brokername2>.contoso.com:9092

Follow step 3 under Build and deploy the example in Tutorial: Use the Apache Kafka Producer and Consumer APIs to ensure that kafka-producer-consumer.jar is also available to sales_user1.

Note

For this tutorial, use kafka-producer-consumer.jar under the DomainJoined-Producer-Consumer project. Don't use the one under the Producer-Consumer project, which is for non-domain-joined scenarios.

Verify that sales_user1 can produce to the topic salesevents by running the following command:

    java -jar -Djava.security.auth.login.config=/usr/hdp/current/kafka-broker/conf/kafka_client_jaas.conf kafka-producer-consumer.jar producer salesevents $KAFKABROKERS

Run the following command to consume from the topic salesevents:

    java -jar -Djava.security.auth.login.config=/usr/hdp/current/kafka-broker/conf/kafka_client_jaas.conf kafka-producer-consumer.jar consumer salesevents $KAFKABROKERS

Verify that you can read the messages.

Verify that sales_user1 can't produce to the topic marketingspend by running the following command in the same SSH window:

    java -jar -Djava.security.auth.login.config=/usr/hdp/current/kafka-broker/conf/kafka_client_jaas.conf kafka-producer-consumer.jar producer marketingspend $KAFKABROKERS

An authorization error occurs. This is expected, because the Ranger policies don't grant sales_user1 access to marketingspend, and the error can be ignored.

Verify that marketing_user1 can't consume from the topic salesevents.

Repeat the first three steps of this section (open an SSH connection, set KAFKABROKERS, and make kafka-producer-consumer.jar available), but this time as marketing_user1.

Run the following command to consume from the topic salesevents:

    java -jar -Djava.security.auth.login.config=/usr/hdp/current/kafka-broker/conf/kafka_client_jaas.conf kafka-producer-consumer.jar consumer salesevents $KAFKABROKERS

The previously produced messages can't be seen, because marketing_user1 isn't authorized to consume from salesevents.

View the audit access events from the Ranger UI.
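While you work through these checks, it can help to have the expected outcome for each user and topic written down. The following sketch encodes only the two policies created in this tutorial and prints the expected result for each combination. The function name expected_access is hypothetical, and the sketch doesn't query Ranger or account for any other policies, such as a preconfigured one.

```shell
#!/bin/sh
# expected_access USER TOPIC
# Prints "allow" or "deny" according to the two policies in this tutorial:
#   sales*          -> sales_user1
#   marketingspend  -> marketing_user1
# Illustration only: this mirrors the policy tables, it does not query Ranger.
expected_access() {
    case "$1:$2" in
        sales_user1:sales*)             echo allow ;;
        marketing_user1:marketingspend) echo allow ;;
        *)                              echo deny ;;
    esac
}

# Print the expected result for every user and topic used in the tests.
for user in sales_user1 marketing_user1; do
    for topic in salesevents marketingspend; do
        printf '%-16s %-15s %s\n' "$user" "$topic" "$(expected_access "$user" "$topic")"
    done
done
```

Compare each line with what you observe in the SSH sessions and in the Ranger audit view. A mismatch usually means a policy hasn't synced yet or names a different user.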

Produce and consume topics in ESP Kafka by using the console

Note

You can't use console commands to create topics. Instead, you must use the Java code demonstrated in the preceding section. For more information, see Create topics in a Kafka cluster with ESP.

To produce and consume topics in ESP Kafka by using the console:

Use kinit with the user's username. Enter the password when prompted.

    kinit sales_user1

Set environment variables:

    export KAFKA_OPTS="-Djava.security.auth.login.config=/usr/hdp/current/kafka-broker/conf/kafka_client_jaas.conf"
    export KAFKABROKERS=<brokerlist>:9092

Produce messages to the topic salesevents:

    /usr/hdp/current/kafka-broker/bin/kafka-console-producer.sh --topic salesevents --broker-list $KAFKABROKERS --producer-property security.protocol=SASL_PLAINTEXT

Consume messages from the topic salesevents:

    /usr/hdp/current/kafka-broker/bin/kafka-console-consumer.sh --topic salesevents --from-beginning --bootstrap-server $KAFKABROKERS --consumer-property security.protocol=SASL_PLAINTEXT
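The console tools rely on the Kerberos ticket obtained with kinit, so it can be useful to confirm that a valid ticket exists before you start them. The following is a minimal sketch, assuming the MIT Kerberos klist, whose -s option reports the cache state through the exit status only. The helper names has_valid_ticket and ensure_ticket are hypothetical.

```shell
#!/bin/sh
# has_valid_ticket
# Returns 0 when the Kerberos credentials cache holds an unexpired ticket.
# Assumes MIT Kerberos, where "klist -s" prints nothing and exits nonzero
# if the cache can't be read or has expired.
has_valid_ticket() {
    klist -s 2>/dev/null
}

# ensure_ticket PRINCIPAL
# Runs kinit for PRINCIPAL only when no valid ticket is cached.
ensure_ticket() {
    if has_valid_ticket; then
        echo "Valid Kerberos ticket found."
    else
        echo "No valid ticket; running kinit for $1." >&2
        kinit "$1"
    fi
}
```

For example, calling ensure_ticket sales_user1 before the producer or consumer command prompts for a password only when one is needed.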

Produce and consume topics for a long-running session in ESP Kafka

Kerberos tickets in the ticket cache expire after a limited lifetime. For a long-running session, use a keytab instead of renewing the ticket cache manually.

To use a keytab in a long-running session without kinit:

Create a new keytab for your domain user:

    ktutil
    addent -password -p <user@domain> -k 1 -e RC4-HMAC
    wkt /tmp/<user>.keytab
    q

Create /home/sshuser/kafka_client_jaas.conf. It should have the following lines:

    KafkaClient {
     com.sun.security.auth.module.Krb5LoginModule required
     useKeyTab=true
     storeKey=true
     keyTab="/tmp/<user>.keytab"
     useTicketCache=false
     serviceName="kafka"
     principal="<user@domain>";
    };

Replace java.security.auth.login.config with /home/sshuser/kafka_client_jaas.conf and produce or consume the topic by using the console or API:

    export KAFKABROKERS=<brokerlist>:9092

    # console tool
    export KAFKA_OPTS="-Djava.security.auth.login.config=/home/sshuser/kafka_client_jaas.conf"
    /usr/hdp/current/kafka-broker/bin/kafka-console-producer.sh --topic salesevents --broker-list $KAFKABROKERS --producer-property security.protocol=SASL_PLAINTEXT
    /usr/hdp/current/kafka-broker/bin/kafka-console-consumer.sh --topic salesevents --from-beginning --bootstrap-server $KAFKABROKERS --consumer-property security.protocol=SASL_PLAINTEXT

    # API
    java -jar -Djava.security.auth.login.config=/home/sshuser/kafka_client_jaas.conf kafka-producer-consumer.jar producer salesevents $KAFKABROKERS
    java -jar -Djava.security.auth.login.config=/home/sshuser/kafka_client_jaas.conf kafka-producer-consumer.jar consumer salesevents $KAFKABROKERS
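If you set up keytab logins for more than one user, generating the JAAS file from a small helper avoids copy-and-paste mistakes in the principal and keytab path. The following sketch writes the same KafkaClient section shown above. The function name write_jaas_conf and its argument order are hypothetical.

```shell
#!/bin/sh
# write_jaas_conf PRINCIPAL KEYTAB OUTFILE
# Writes a KafkaClient JAAS section that logs in from KEYTAB as PRINCIPAL.
# The settings are the ones listed in this tutorial; only the principal
# and the keytab path are filled in from the arguments.
write_jaas_conf() {
    cat > "$3" <<EOF
KafkaClient {
  com.sun.security.auth.module.Krb5LoginModule required
  useKeyTab=true
  storeKey=true
  keyTab="$2"
  useTicketCache=false
  serviceName="kafka"
  principal="$1";
};
EOF
}

# Example call, with the placeholders replaced by your own values:
#   write_jaas_conf '<user@domain>' '/tmp/<user>.keytab' /home/sshuser/kafka_client_jaas.conf
```

The output file is overwritten on each call, so use a separate file per user if several principals share the same machine.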

Clean up resources

If you aren't going to continue to use this application, delete the Kafka cluster that you created:

- Sign in to the Azure portal.

- In the Search box at the top, enter HDInsight.

- Under Services, select HDInsight clusters.

- In the list of HDInsight clusters that appears, select the ... next to the cluster that you created for this tutorial.

- Select Delete > Yes.

Troubleshooting

If kafka-producer-consumer.jar doesn't work in a domain-joined cluster, make sure that you're using kafka-producer-consumer.jar under the DomainJoined-Producer-Consumer project. Don't use the one under the Producer-Consumer project, which is for non-domain-joined scenarios.