Azure Monitor Logs is an Azure Monitor service that monitors your cloud and on-premises environments to help maintain their availability and performance.

Azure Monitor Logs collects data generated by resources in your cloud, resources in on-premises environments, and other monitoring tools. It uses this data to provide analysis across multiple sources. For HDInsight clusters, you control which logs are collected by enabling the selective logging feature through a script action in the Azure portal.

About selective logging

Selective logging is part of the overall monitoring system in Azure. After you connect your cluster to a Log Analytics workspace and enable selective logging, you can see logs and metrics such as HDInsight security logs, YARN Resource Manager logs, and system metrics. You can monitor workloads and see how they affect cluster stability.

Selective logging lets you enable or disable all the tables, or only selected tables, in the Log Analytics workspace. You can also adjust the source type (log type) for each table.

Note

If Log Analytics is reinstalled in a cluster, you'll have to disable all the tables and log types again. Reinstallation resets all the configuration files to their original state.

Considerations for script actions

- The monitoring system uses the Metadata Server Daemon (a monitoring agent) and Fluentd for collecting logs by using a unified logging layer.

- Selective logging uses a script action to disable or enable tables and their log types. It doesn't open any new ports or change any existing security settings.

- The script action runs in parallel on all specified nodes and changes the configuration files for disabling or enabling tables and their log types.

Prerequisites

- A Log Analytics workspace. You can think of this workspace as a unique Azure Monitor Logs environment with its own data repository, data sources, and solutions. For instructions, see Create a Log Analytics workspace.

- An Azure HDInsight cluster. Currently, you can use the selective logging feature with the following HDInsight cluster types:

  - Hadoop

  - HBase

  - Interactive Query

  - Spark

  For instructions on how to create an HDInsight cluster, see Get started with Azure HDInsight.

Enable or disable logs by using a script action for multiple tables and log types

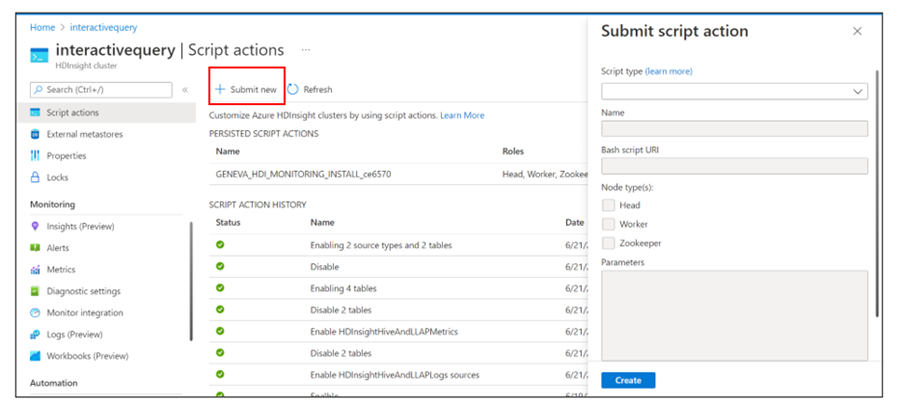

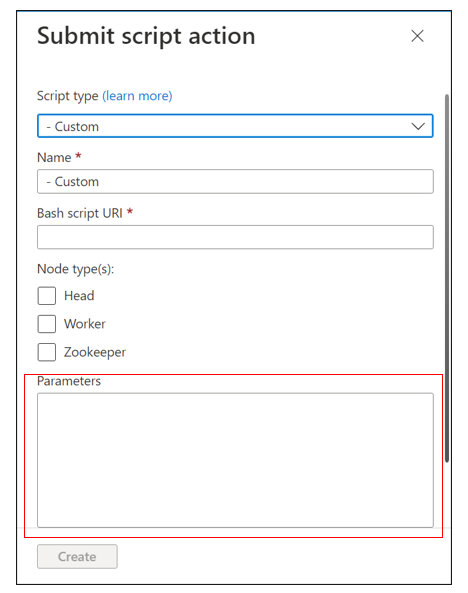

Go to Script actions in your cluster and select Submit new to start the process of creating a script action.

The Submit script action pane appears.

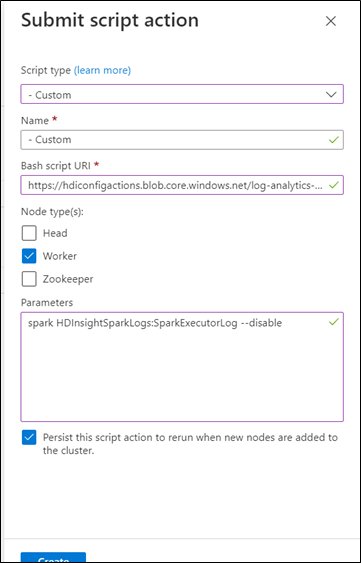

For the script type, select Custom.

Name the script. For example: Disable two tables and two sources.

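For Bash script URI, enter the link to selectiveLoggingScript.sh:

https://hdiconfigactions.blob.core.windows.net/log-analytics-patch/selectiveLoggingScriptsAma/selectiveLoggingScript.sh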

Select all the node types that apply to the cluster. The options are head node, worker node, and ZooKeeper node.

Define the parameters. For example:

- Spark: spark HDInsightSparkLogs:SparkExecutorLog --disable

- Interactive Query: interactivehive HDInsightHadoopAndYarnLogs:NodeManager --enable

- Hadoop: hadoop HDInsightHiveAndLLAPLogs:HiveServer2Log --disable

- HBase: hbase HDInsightHBaseLogs:HBaseRegionServerLog --enable

For more information, see the Parameter syntax section.

Select Create.

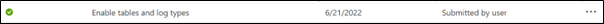

After a few minutes, a green check mark appears next to the script action in the script action history. The check mark means that the script ran successfully.

Your changes then appear in the Log Analytics workspace.
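You might want to automate the portal steps above. In principle, the same submission can go through the HDInsight REST API's Execute Script Actions operation on the cluster. This Python sketch only builds the request body and rests on several assumptions:

- The body uses `scriptActions` (each with `name`, `uri`, `parameters`, and `roles`) and `persistOnSuccess`.
- The node types map to the role names `headnode`, `workernode`, and `zookeepernode`.
- `script_action_request` is a hypothetical helper.

Verify these names against the current REST reference before relying on them.

```python
import json
from typing import Dict, Sequence

# Link to the selective logging script, as given on this page.
SCRIPT_URI = (
    "https://hdiconfigactions.blob.core.windows.net/log-analytics-patch/"
    "selectiveLoggingScriptsAma/selectiveLoggingScript.sh"
)

# ASSUMPTION: REST API role names for the head, worker, and ZooKeeper node types.
ALLOWED_ROLES = {"headnode", "workernode", "zookeepernode"}


def script_action_request(name: str, parameters: str, roles: Sequence[str],
                          persist: bool = True) -> Dict[str, object]:
    """Build a hypothetical Execute Script Actions request body."""
    unknown = set(roles) - ALLOWED_ROLES
    if not roles or unknown:
        raise ValueError(f"unsupported or missing node types: {sorted(unknown)}")
    action: Dict[str, object] = {
        "name": name,
        "uri": SCRIPT_URI,
        "parameters": parameters,
        "roles": list(roles),
    }
    # ASSUMPTION: field names follow the REST API's script action request schema.
    return {"scriptActions": [action], "persistOnSuccess": persist}


body = script_action_request(
    "Disable two tables and two sources",
    "spark HDInsightSparkLogs:SparkExecutorLog --disable",
    ["headnode", "workernode"],
)
print(json.dumps(body, indent=2))
```

Leaving `persist` at `True` is intended to correspond to selecting the persist checkbox in the portal, so that the script action reruns when new nodes are added.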

Troubleshooting

No changes appear in the Log Analytics workspace

If you submit your script action but there are no changes in the Log Analytics workspace:

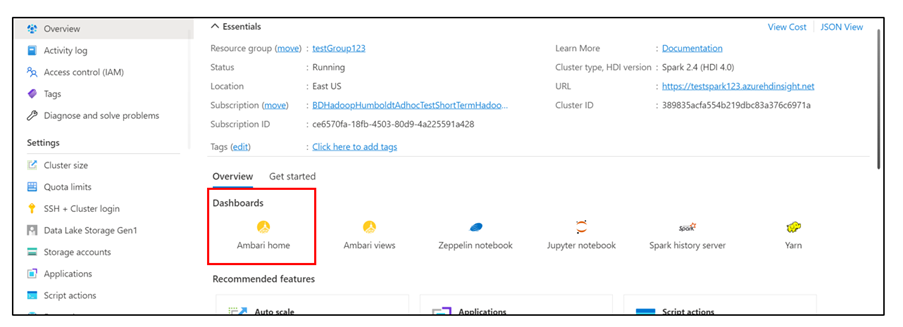

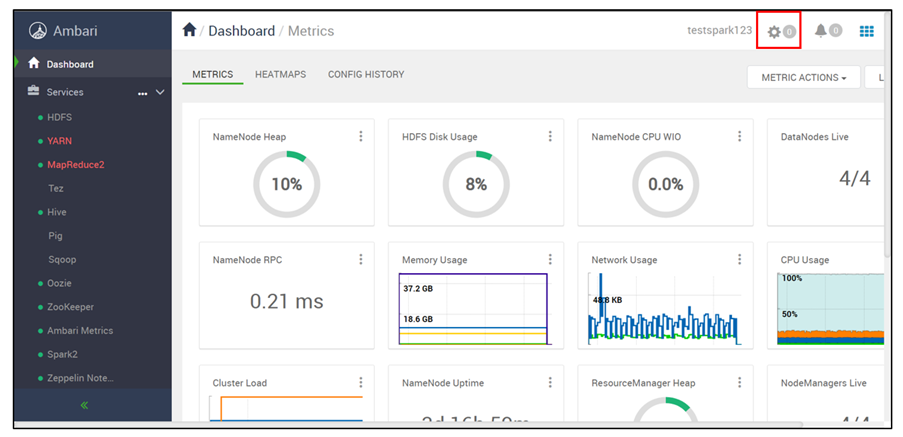

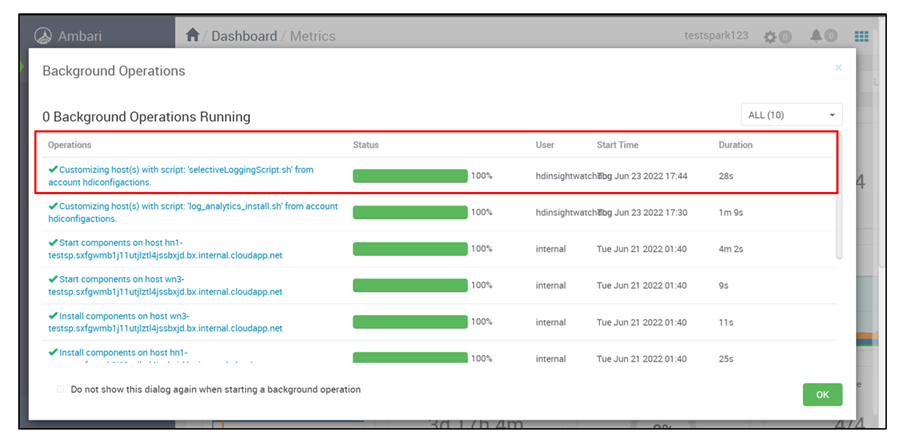

Under Dashboards, select Ambari home to check the debug information.

Select the Settings button.

Select your latest script run at the top of the list of background operations.

Verify the script run status in all the nodes individually.

Check that your parameters follow the rules in the Parameter syntax section.

Check that the Log Analytics workspace is connected to the cluster and that Log Analytics monitoring is turned on.

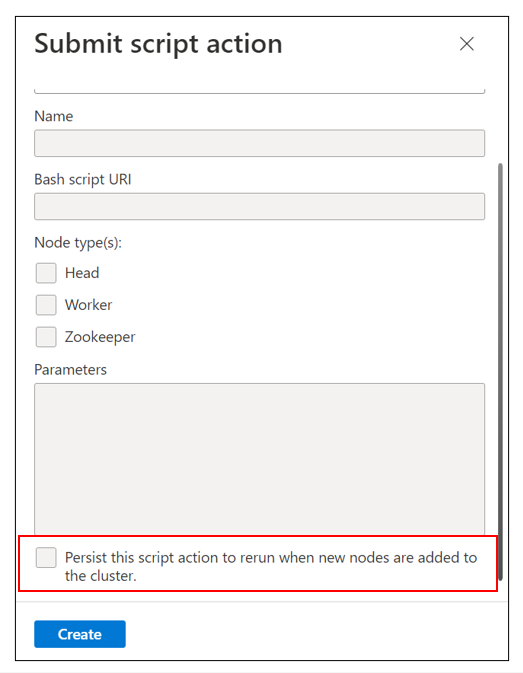

Check that you selected the "Persist this script action to rerun when new nodes are added to the cluster" checkbox for the script action that you ran.

See if a new node has been added to the cluster recently.

Note

For the script to run on nodes that are added to the cluster later, the script action must be persisted.

Make sure that you selected all the node types that you wanted for the script action.

The script action failed

If the script action shows a failure status in the script action history:

- Check that your parameters follow the rules in the Parameter syntax section.

- Check that the script link is correct. It should be:

https://hdiconfigactions.blob.core.windows.net/log-analytics-patch/selectiveLoggingScriptsAma/selectiveLoggingScript.sh
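A link copied from a page can pick up surrounding punctuation or whitespace, so a quick local check of the URI can help here. In this minimal Python sketch, `check_script_uri` is a hypothetical helper. It compares a submitted URI against the link above and reports stray whitespace or a trailing period.

```python
from typing import List

# The script link from the list above, without any trailing punctuation.
EXPECTED_URI = (
    "https://hdiconfigactions.blob.core.windows.net/log-analytics-patch/"
    "selectiveLoggingScriptsAma/selectiveLoggingScript.sh"
)


def check_script_uri(uri: str) -> List[str]:
    """Return a list of problems with a submitted script URI (empty if none)."""
    problems: List[str] = []
    cleaned = uri.strip()
    if cleaned != uri:
        problems.append("URI has leading or trailing whitespace")
    if cleaned.endswith("."):
        # For example, a period copied along with the end of a sentence.
        problems.append("URI ends with a period")
        cleaned = cleaned.rstrip(".")
    if cleaned != EXPECTED_URI:
        problems.append("URI does not match the selective logging script link")
    return problems


print(check_script_uri(EXPECTED_URI + "."))
```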

Table names

For a complete listing of table names for different log types (sources), see Azure Monitor Logs tables.

Parameter syntax

Parameters define the cluster type, table names, source names, and action.

A parameter contains three parts:

- Cluster type

- Tables and log types

- Action (either --disable or --enable)
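The following minimal Python sketch makes the three-part structure concrete with a hypothetical validator. `parse_parameter` is not part of selectiveLoggingScript.sh, and the script's own parsing might be more or less strict. The sketch assumes three things:

- The cluster type is the first space-separated token.
- The action is the last token.
- Everything in between names the tables and log types.

The set of known cluster types is limited to the ones used in the examples on this page.

```python
from dataclasses import dataclass

# Cluster types that appear in the examples on this page.
KNOWN_CLUSTER_TYPES = {"hadoop", "hbase", "interactivehive", "spark"}
VALID_ACTIONS = {"--enable", "--disable"}


@dataclass
class SelectiveLoggingParameter:
    cluster_type: str
    tables_and_log_types: str
    action: str


def parse_parameter(parameter: str) -> SelectiveLoggingParameter:
    """Split '<cluster type> <tables and log types> <action>' into its parts."""
    parts = parameter.split()
    if len(parts) < 3:
        raise ValueError("expected a cluster type, tables or log types, and an action")
    cluster_type, action = parts[0], parts[-1]
    if cluster_type not in KNOWN_CLUSTER_TYPES:
        raise ValueError(f"unknown cluster type: {cluster_type!r}")
    if action not in VALID_ACTIONS:
        raise ValueError(f"action must be --enable or --disable, got {action!r}")
    # Everything between the cluster type and the action names tables and log types.
    return SelectiveLoggingParameter(cluster_type, " ".join(parts[1:-1]), action)


print(parse_parameter("hbase HDInsightHBaseLogs:HBaseRegionServerLog --enable"))
```

A local check like this can catch a misspelled action or cluster type before you submit the script action.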

Syntax for multiple tables

When you specify multiple tables, separate them with commas. For example:

spark HDInsightSecurityLogs, HDInsightAmbariSystemMetrics --disable

hbase HDInsightSecurityLogs, HDInsightAmbariSystemMetrics --enable
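The comma rule fits in a small builder. This Python sketch uses a hypothetical helper, `tables_parameter`. It assumes a comma followed by a space between tables, which matches the two examples above.

```python
from typing import Sequence


def tables_parameter(cluster_type: str, tables: Sequence[str], enable: bool) -> str:
    """Build a parameter that enables or disables several whole tables."""
    if not tables:
        raise ValueError("at least one table is required")
    action = "--enable" if enable else "--disable"
    # Tables are separated by a comma followed by a space, as in the examples above.
    return f"{cluster_type} {', '.join(tables)} {action}"


print(tables_parameter("hbase", ["HDInsightSecurityLogs", "HDInsightAmbariSystemMetrics"], enable=True))
```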

Syntax for multiple source types or log types

When you specify multiple source types (log types) for a table, separate them with spaces.

To enable or disable a specific source, write the name of the table that contains the log type, followed by a colon and then the log type name:

TableName : LogTypeName

For example, assume that in a Spark cluster, HDInsightSecurityLogs is a table that has two log types: AmbariAuditLog and AuthLog. To disable both log types, the correct syntax would be:

spark HDInsightSecurityLogs: AmbariAuditLog AuthLog --disable
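Similarly, a hypothetical helper `log_types_parameter` can assemble the colon-and-space form for one table. This sketch assumes the spacing shown in the example above: a space after the colon and between log types.

```python
from typing import Sequence


def log_types_parameter(cluster_type: str, table: str,
                        log_types: Sequence[str], enable: bool) -> str:
    """Build a parameter that targets specific log types within one table."""
    if not log_types:
        raise ValueError("at least one log type is required")
    action = "--enable" if enable else "--disable"
    # Table name, a colon, then the log types separated by spaces.
    return f"{cluster_type} {table}: {' '.join(log_types)} {action}"


print(log_types_parameter("spark", "HDInsightSecurityLogs",
                          ["AmbariAuditLog", "AuthLog"], enable=False))
```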

Syntax for multiple tables and source types

Suppose you need to enable or disable two tables and two source types, such as the following in an Interactive Query cluster:

- The InteractiveHiveMetastoreLog log type in the HDInsightHiveAndLLAPLogs table

- The InteractiveHiveHSILog log type in the HDInsightHiveAndLLAPLogs table

- The HDInsightHiveAndLLAPMetrics table

- The HDInsightHiveTezAppStats table

Separate the tables with a comma. Denote sources by using a colon after the name of the table in which they reside.

The correct parameter syntax to enable them would be:

interactivehive HDInsightHiveAndLLAPLogs: InteractiveHiveMetastoreLog, HDInsightHiveAndLLAPMetrics, HDInsightHiveTezAppStats, HDInsightHiveAndLLAPLogs: InteractiveHiveHSILog --enable

To disable them instead, replace --enable with --disable.
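The combined case can be modeled as a list of entries, where each entry is either a whole table or a single source. In this Python sketch, `combined_parameter` and the `Entry` type are hypothetical. The output format is inferred from the example above:

- Each source repeats its table name, followed by a colon.
- All entries are separated by commas.

```python
from typing import List, Optional, Sequence, Tuple

# Each entry is (table, log_type); a log_type of None selects the whole table.
Entry = Tuple[str, Optional[str]]


def combined_parameter(cluster_type: str, entries: Sequence[Entry], enable: bool) -> str:
    """Build one parameter that mixes whole tables and individual sources."""
    if not entries:
        raise ValueError("at least one table or source is required")
    items: List[str] = []
    for table, log_type in entries:
        # A source is written as "Table: LogType"; a whole table is just its name.
        items.append(f"{table}: {log_type}" if log_type else table)
    action = "--enable" if enable else "--disable"
    # Entries, like tables, are separated by a comma followed by a space.
    return f"{cluster_type} {', '.join(items)} {action}"


param = combined_parameter(
    "interactivehive",
    [
        ("HDInsightHiveAndLLAPLogs", "InteractiveHiveMetastoreLog"),
        ("HDInsightHiveAndLLAPMetrics", None),
        ("HDInsightHiveTezAppStats", None),
        ("HDInsightHiveAndLLAPLogs", "InteractiveHiveHSILog"),
    ],
    enable=True,
)
print(param)
```

Passing `enable=False` produces the same entries with `--disable` as the action.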