Important

The QnA Maker service is being retired on the 31st of March, 2025. A newer version of the question and answering capability is now available as part of Azure Cognitive Service for Language. We recommend using the Question Answering service and integrating it with the healthcare agent service. You can find out how to do this here.

QnA Maker is a cloud-based API service that creates a conversational question-and-answer layer over your data. The QnA Maker service answers your users' natural language questions by matching them with the best possible answer. You can use it to extend the healthcare agent service experience by connecting it to your knowledge base (KB), or to easily add a set of chit-chat as a starting point for your healthcare agent service's personality.

In this tutorial, we'll follow the steps required to extend your healthcare agent service with a QnA Maker language model. The model recognizes when a user is asking a question covered by the semi-structured content in your KB and replies with the corresponding answer.

Create your Knowledge Base

You should first navigate to your QnA Maker portal and create a new knowledge base.

Add your content, such as Frequently Asked Questions (FAQ) URLs, product manuals, support documents, and custom questions and answers, to your knowledge base.

Select the ‘Publish’ button from the top menu to get the knowledge base endpoints. You'll then be able to use the resulting endpoint details (the endpoint host, knowledge base ID, and endpoint key) to extend your bot.

You can always find the deployment details on your service’s knowledge base settings page.
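To show how the three endpoint details fit together, here is a minimal Python sketch that builds (but does not send) a request to a published knowledge base. It assumes the request shape shown on the QnA Maker settings page after publishing: a POST to `/knowledgebases/<kb-id>/generateAnswer` on the endpoint host, with an `EndpointKey` authorization header. The function name and all placeholder values are hypothetical.

```python
import json
import urllib.request


def build_generate_answer_request(host, kb_id, endpoint_key, question):
    """Build a POST request for a published knowledge base without sending it.

    Assumed request shape: POST {host}/knowledgebases/{kb_id}/generateAnswer
    with an 'Authorization: EndpointKey <key>' header and a JSON body.
    """
    url = f"{host.rstrip('/')}/knowledgebases/{kb_id}/generateAnswer"
    body = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"EndpointKey {endpoint_key}",
            "Content-Type": "application/json",
        },
    )


# Placeholder values; replace them with the details from your settings page.
request = build_generate_answer_request(
    host="https://example-resource.azurewebsites.net/qnamaker",
    kb_id="00000000-0000-0000-0000-000000000000",
    endpoint_key="your-endpoint-key",
    question="What are your opening hours?",
)
print(request.full_url)
```

Passing the request to `urllib.request.urlopen` would send it. The healthcare agent service makes this call for you once the language model is configured, so the sketch is only useful for checking your credentials outside the portal.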

Create the healthcare agent service QnA Language model

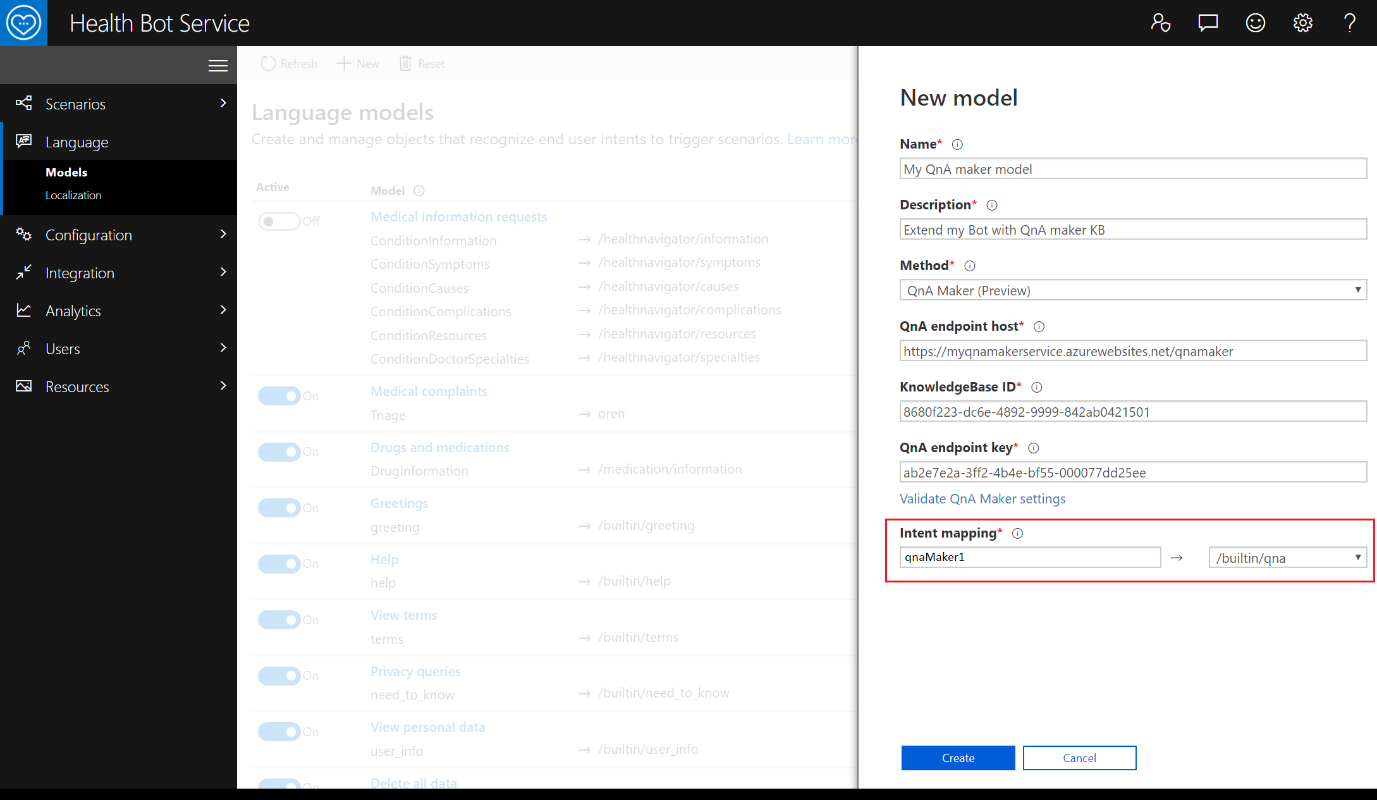

Sign in to the healthcare agent service Management portal and navigate to Language >> Models. Add a new model and select the QnA Maker recognition method.

Provide the required name and description fields. You can choose any values you like; they are only shown internally to help you recognize the model.

Provide the endpoint host, knowledge base ID, and QnA endpoint key that you obtained in step three. If the credentials are correct, you'll be able to validate the connection to the QnA endpoint successfully.

You should then set a unique intent name and define the triggered scenario. Use the built-in QnA scenario to reply with the top-scoring QnA answer.

For more advanced flows, you can map the intent to your custom scenario. Your custom scenario is triggered with the following input arguments:

- The raw QnA endpoint response.

- The unique intent name that can be used to identify the model that triggered your custom scenario.
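As an illustration of what a custom scenario might do with these two arguments, the following Python sketch picks the top-scoring answer from the raw response and tags the reply with the intent name. It is not the scenario editor's own API: the function name, the fallback text, and the assumed response shape (an `answers` list whose items carry `answer` and `score` fields) are all assumptions made for this example.

```python
from typing import Any, Dict

# Hypothetical fallback text used when the response contains no answers.
FALLBACK_REPLY = "Sorry, I couldn't find an answer to that question."


def handle_custom_scenario(qna_response: Dict[str, Any], intent_name: str) -> str:
    """Return a reply built from the two custom scenario input arguments.

    qna_response: the raw QnA endpoint response (assumed shape, see above).
    intent_name: the unique intent name of the model that triggered the scenario.
    """
    answers = qna_response.get("answers", [])
    if not answers:
        return FALLBACK_REPLY
    # Choose the answer with the highest confidence score.
    best = max(answers, key=lambda a: a.get("score", 0.0))
    return f"[{intent_name}] {best['answer']}"


sample_response = {
    "answers": [
        {"answer": "We open at 9 AM.", "score": 91.2},
        {"answer": "We close at 5 PM.", "score": 40.3},
    ]
}
print(handle_custom_scenario(sample_response, "clinic_faq"))
```

The intent name is handy when several QnA models share one custom scenario, because the scenario can branch on it to apply model-specific handling.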

You can now save the language model and test it. Select ‘Create’ and open the webchat in the management portal. Type one of the supported questions from your KB and see how the healthcare agent service now answers your users' natural language questions by matching them with the best possible answer from your knowledge base or chit-chat file.

Using multiple LUIS, RegEx and QnA models

When a user query is matched against a knowledge base, QnA Maker returns relevant answers, along with a confidence score. This score indicates the confidence that the answer is the right match for the given user query. The healthcare agent service filters out QnA responses with a score lower than 50 (or 0.5 on a scale of 0-1).
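The filtering rule can be sketched in a few lines of Python. This is an illustration of the threshold described above, not the service's actual implementation; the function name and the assumed response shape (an `answers` list with `score` values on a 0-100 scale) are assumptions.

```python
# Threshold from the text: answers scoring below 50 (on a 0-100 scale) are dropped.
MIN_SCORE = 50.0


def filter_confident_answers(qna_response, min_score=MIN_SCORE):
    """Keep only the answers whose confidence score meets the threshold."""
    answers = qna_response.get("answers", [])
    return [a for a in answers if a.get("score", 0.0) >= min_score]


sample_response = {
    "answers": [
        {"answer": "We open at 9 AM.", "score": 82.5},
        {"answer": "Parking is free.", "score": 49.9},
        {"answer": "We close at 5 PM.", "score": 50.0},
    ]
}
for kept in filter_confident_answers(sample_response):
    print(kept["score"], kept["answer"])
```

In this sample, the answer scoring 49.9 is dropped, while the one scoring exactly 50.0 is kept because only scores lower than the threshold are filtered.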

When a user query is matched against the configured models, the result indicates which language model most appropriately processed the utterance. The code in this bot routes the request to the corresponding scenario, as specified in the details here.