This article discusses how to query Avro data to efficiently route messages from Azure IoT Hub to Azure services. Message Routing allows you to filter data using rich queries based on message properties, message body, device twin tags, and device twin properties. To learn more about the querying capabilities in Message Routing, see the article about message routing query syntax.

The challenge is that when Azure IoT Hub routes messages to Azure Blob storage, it writes the content in Avro format by default, and each Avro record carries both the message body and the message properties. The Avro format isn't used for any other endpoints. Although the Avro format is great for data and message preservation, it's a challenge to use it to query data. In comparison, JSON or CSV format is easier for querying data. IoT Hub now supports writing data to Blob storage in JSON as well as Avro.

For more information, see Using Azure Storage as a routing endpoint.

To address non-relational big-data needs and formats and overcome this challenge, you can use many of the big-data patterns for both transforming and scaling data. One of these patterns, "pay per query," is provided by Azure Data Lake Analytics, which is the focus of this article. Although you can also run the query in Hadoop or other solutions, Data Lake Analytics is often better suited for this "pay per query" approach.

There is an "extractor" for Avro in U-SQL. For more information, see U-SQL Avro example.

Query and export Avro data to a CSV file

In this section, you query Avro data and export it to a CSV file in Azure Blob storage, although you could easily place the data in other repositories or data stores.

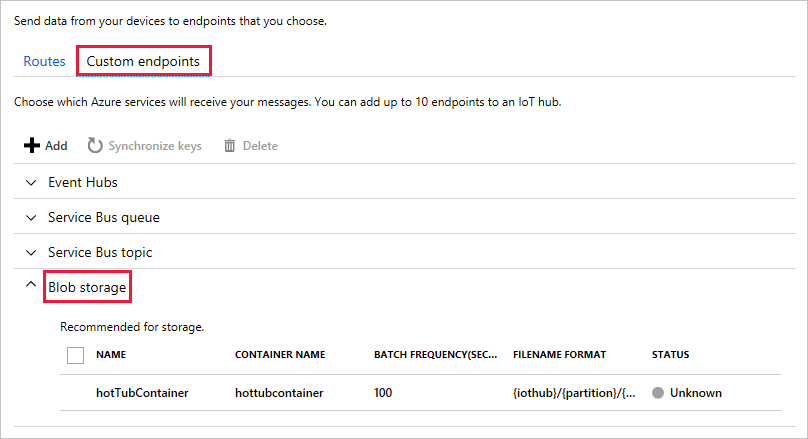

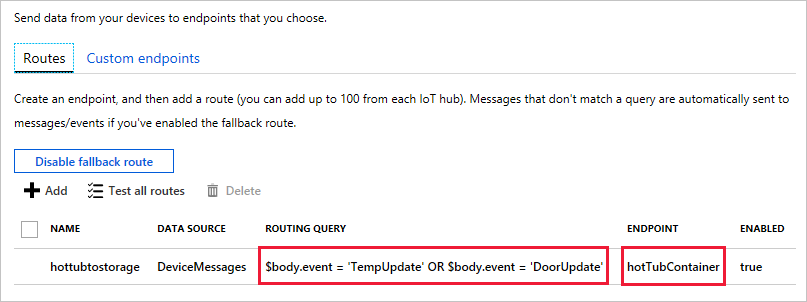

Set up Azure IoT Hub to route data to an Azure Blob storage endpoint by using a property in the message body to select messages.

For more information on setting up routes and custom endpoints, see Message Routing for an IoT hub.

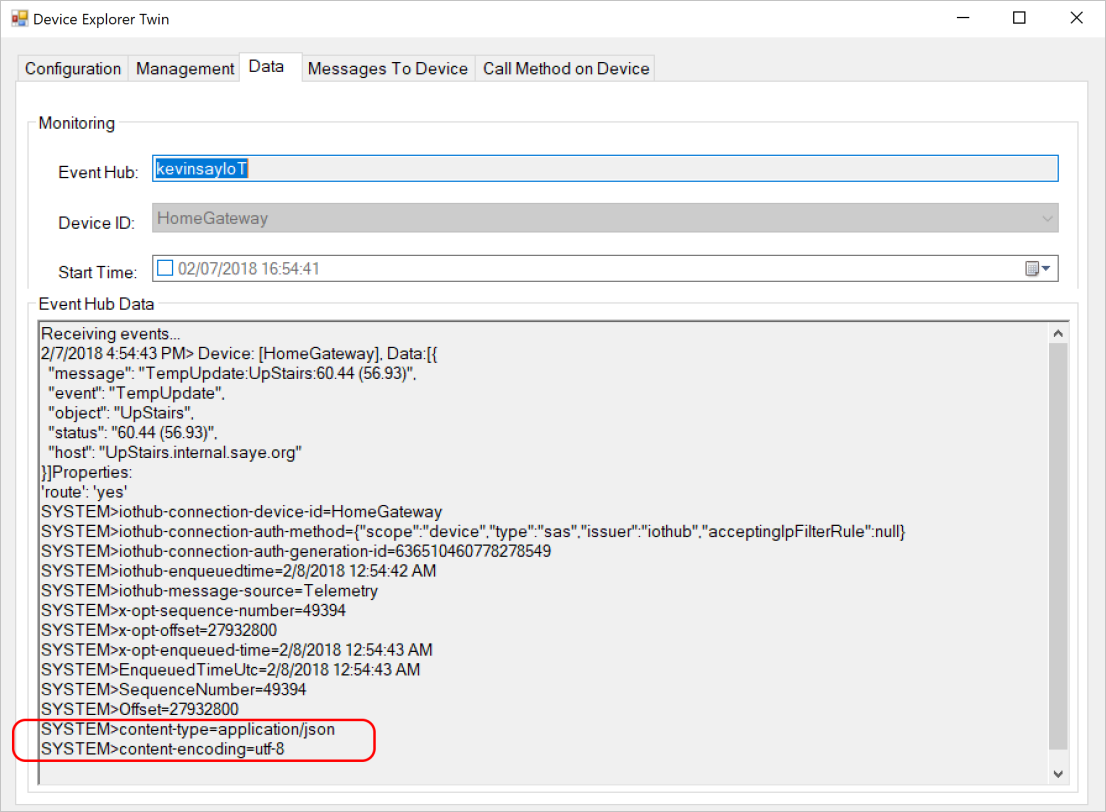

Ensure that your device has the encoding, content type, and needed data in either the properties or the message body, as referenced in the product documentation. When you view these attributes in Device Explorer, as shown here, you can verify that they're set correctly.
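As a rough illustration of what the device side needs to provide, the following Python sketch builds a JSON payload, encodes it as UTF-8, and records a matching content type and encoding. The `build_message` and `body_is_queryable` helpers, the dictionary layout, and the sample field values are hypothetical and only mirror the idea. A real device would set these attributes through its IoT Hub device SDK.

```python
import json


def build_message(payload: dict) -> dict:
    """Return a hypothetical in-memory stand-in for a device-to-cloud message."""
    body = json.dumps(payload).encode("utf-8")
    return {
        "body": body,
        # Assumed attribute names; a device SDK exposes its own equivalents.
        "content_type": "application/json",
        "content_encoding": "utf-8",
    }


def body_is_queryable(message: dict) -> bool:
    """Check that the body decodes and parses the way the message declares."""
    if message["content_type"] != "application/json":
        return False
    try:
        json.loads(message["body"].decode(message["content_encoding"]))
    except (UnicodeDecodeError, ValueError, LookupError):
        return False
    return True


message = build_message({"status": "ok", "host": "device-01"})
print(body_is_queryable(message))
```

If the declared encoding or content type doesn't match the bytes that are actually sent, a check like this fails, which is the same kind of mismatch that keeps a body-based route from selecting the message.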

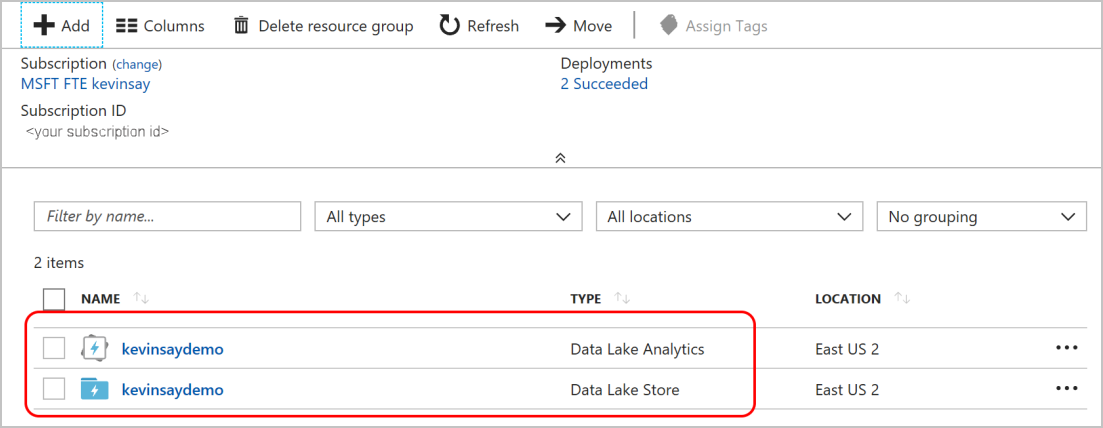

Set up an Azure Data Lake Store instance and a Data Lake Analytics instance. Azure IoT Hub doesn't route to a Data Lake Store instance, but a Data Lake Analytics instance requires one.

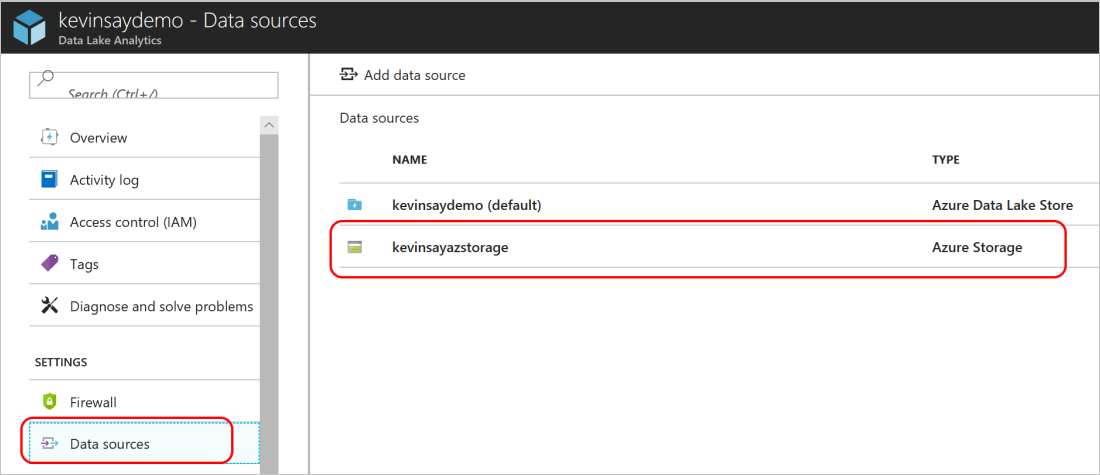

In Data Lake Analytics, configure Azure Blob storage as an additional store, the same Blob storage that Azure IoT Hub routes data to.

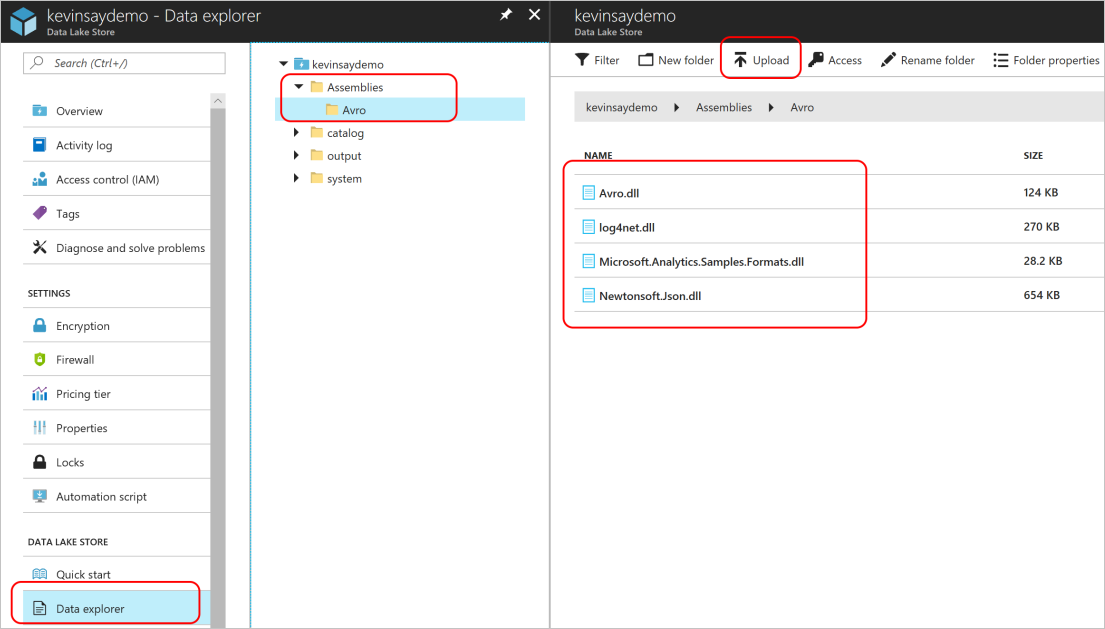

As discussed in the U-SQL Avro example, you need four DLL files. Upload these files to a location in your Data Lake Store instance.

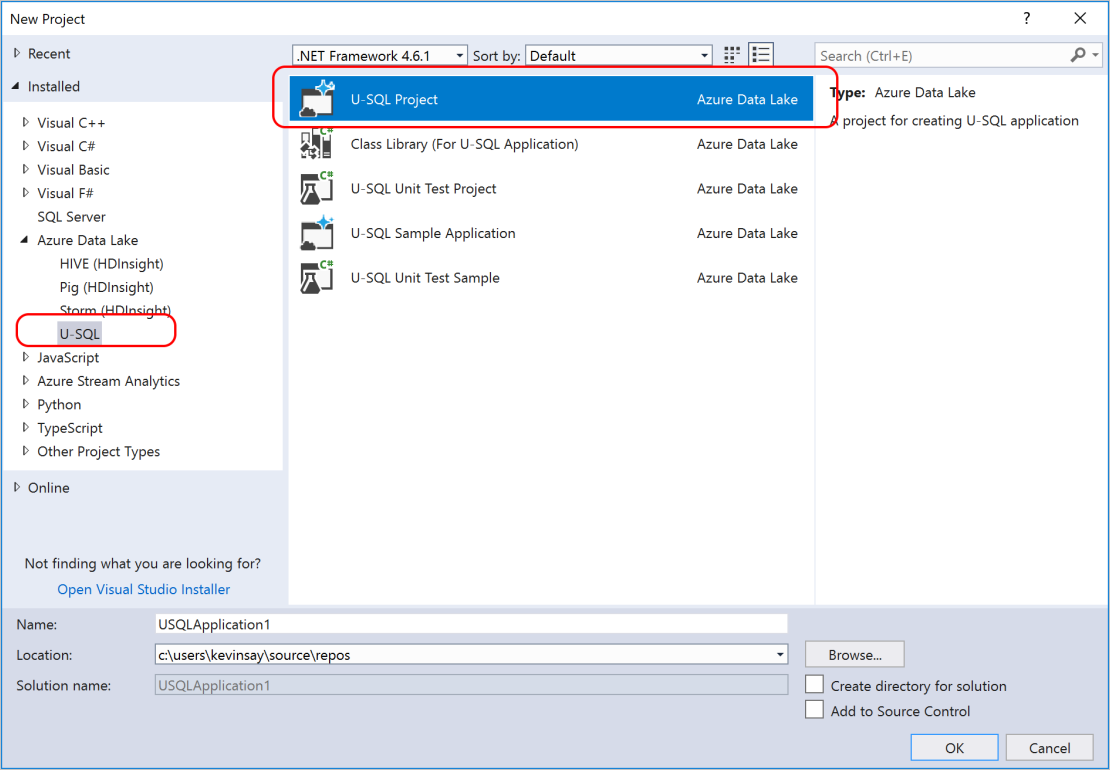

In Visual Studio, create a U-SQL project.

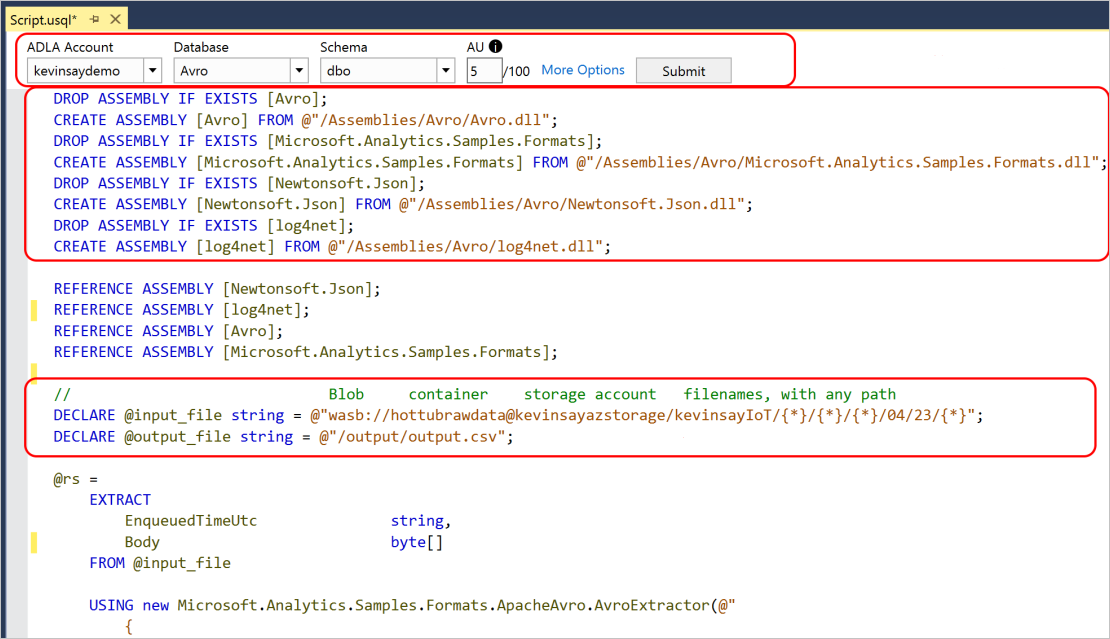

Paste the content of the following script into the newly created file. Modify the three highlighted sections: your Data Lake Analytics account, the associated DLL file paths, and the correct path for your storage account.

The actual U-SQL script for simple output to a CSV file:

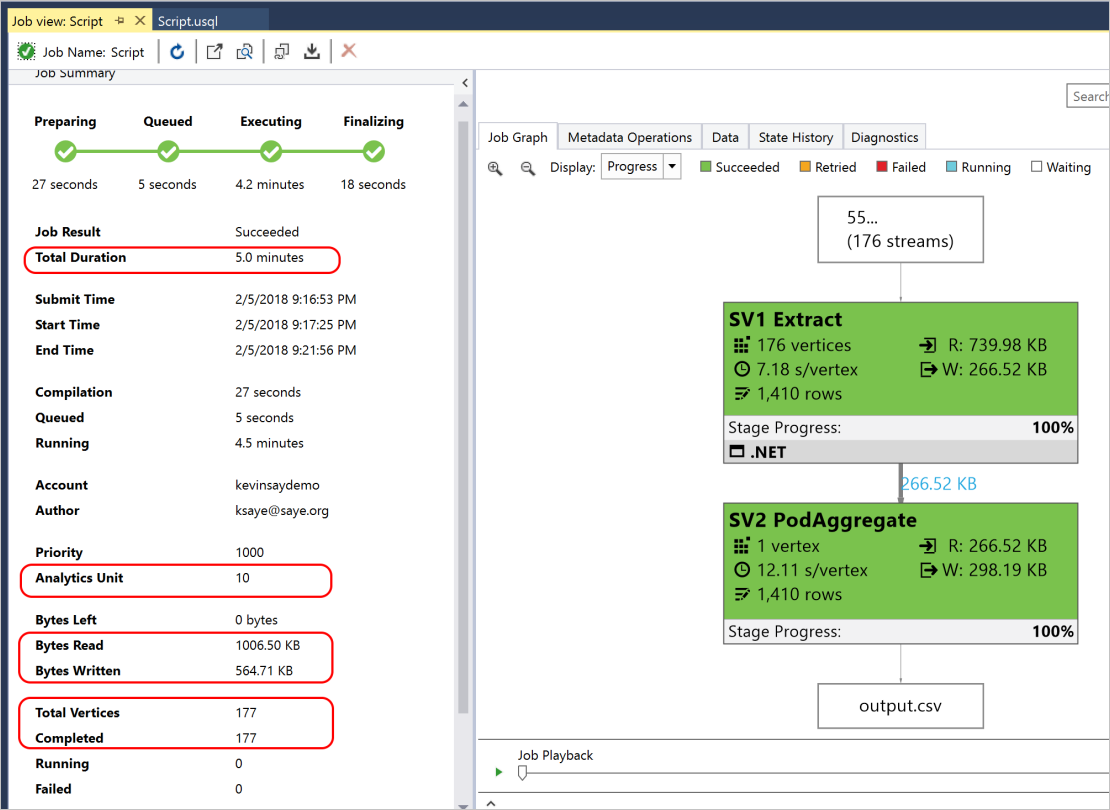

    DROP ASSEMBLY IF EXISTS [Avro];
    CREATE ASSEMBLY [Avro] FROM @"/Assemblies/Avro/Avro.dll";
    DROP ASSEMBLY IF EXISTS [Microsoft.Analytics.Samples.Formats];
    CREATE ASSEMBLY [Microsoft.Analytics.Samples.Formats] FROM @"/Assemblies/Avro/Microsoft.Analytics.Samples.Formats.dll";
    DROP ASSEMBLY IF EXISTS [Newtonsoft.Json];
    CREATE ASSEMBLY [Newtonsoft.Json] FROM @"/Assemblies/Avro/Newtonsoft.Json.dll";
    DROP ASSEMBLY IF EXISTS [log4net];
    CREATE ASSEMBLY [log4net] FROM @"/Assemblies/Avro/log4net.dll";

    REFERENCE ASSEMBLY [Newtonsoft.Json];
    REFERENCE ASSEMBLY [log4net];
    REFERENCE ASSEMBLY [Avro];
    REFERENCE ASSEMBLY [Microsoft.Analytics.Samples.Formats];

    // Blob container storage account filenames, with any path
    DECLARE @input_file string = @"wasb://hottubrawdata@kevinsayazstorage/kevinsayIoT/{*}/{*}/{*}/{*}/{*}/{*}";
    DECLARE @output_file string = @"/output/output.csv";

    @rs =
        EXTRACT
            EnqueuedTimeUtc string,
            Body byte[]
        FROM @input_file
        USING new Microsoft.Analytics.Samples.Formats.ApacheAvro.AvroExtractor(@"
        {
            ""type"":""record"",
            ""name"":""Message"",
            ""namespace"":""Microsoft.Azure.Devices"",
            ""fields"":
            [{
                ""name"":""EnqueuedTimeUtc"",
                ""type"":""string""
            },
            {
                ""name"":""Properties"",
                ""type"":
                {
                    ""type"":""map"",
                    ""values"":""string""
                }
            },
            {
                ""name"":""SystemProperties"",
                ""type"":
                {
                    ""type"":""map"",
                    ""values"":""string""
                }
            },
            {
                ""name"":""Body"",
                ""type"":[""null"",""bytes""]
            }]
        }"
        );

    @cnt =
        SELECT EnqueuedTimeUtc AS time,
               Encoding.UTF8.GetString(Body) AS jsonmessage
        FROM @rs;

    OUTPUT @cnt TO @output_file USING Outputters.Text();

It took Data Lake Analytics five minutes to run this script, which was limited to 10 analytic units and processed 177 files. The result is shown in the CSV-file output that's displayed in the following image.
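The core transformation in the script, decoding the `Body` bytes as UTF-8 and writing one row per message, can be sketched locally in Python. This is only an illustration under stated assumptions: the `records` list and the `to_csv` helper are hypothetical, and the sample values stand in for rows that an Avro reader would produce from the routed blobs. It is not a replacement for the U-SQL job.

```python
import csv
import io

# Hypothetical records shaped like the two columns the U-SQL script extracts.
# The schema declares Body as ["null", "bytes"], so a body may be missing.
records = [
    {"EnqueuedTimeUtc": "2018-01-01T00:00:00.0000000Z",
     "Body": b'{"status": "ok", "host": "device-01"}'},
    {"EnqueuedTimeUtc": "2018-01-01T00:01:00.0000000Z",
     "Body": None},
]


def to_csv(rows) -> str:
    """Write (time, jsonmessage) rows, decoding each body as UTF-8 text."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for row in rows:
        body = row["Body"]
        text = body.decode("utf-8") if body is not None else ""
        writer.writerow([row["EnqueuedTimeUtc"], text])
    return buffer.getvalue()


print(to_csv(records))
```

Because the JSON text contains commas and quotation marks, the CSV writer quotes the second column. At this stage the message is still a single opaque string per row.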

To parse the JSON, continue to the next step.

Most IoT messages are in JSON format. By adding the following lines, you can parse the message body as JSON, which lets you add WHERE clauses and output only the data you need.

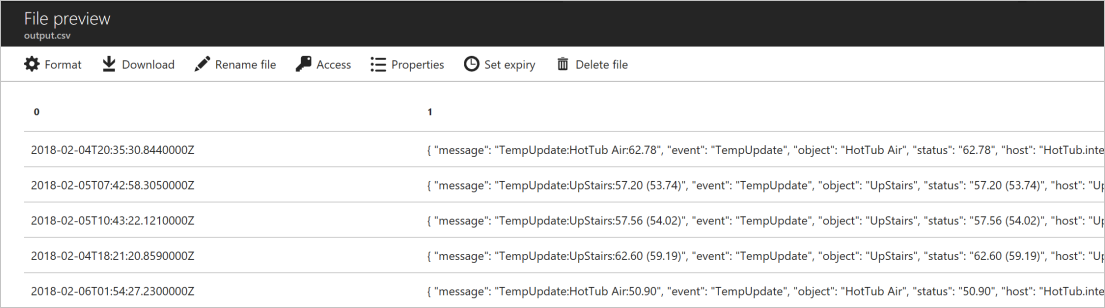

    @jsonify =
        SELECT Microsoft.Analytics.Samples.Formats.Json.JsonFunctions.JsonTuple(Encoding.UTF8.GetString(Body)) AS message
        FROM @rs;

    /*
    @cnt =
        SELECT EnqueuedTimeUtc AS time,
               Encoding.UTF8.GetString(Body) AS jsonmessage
        FROM @rs;

    OUTPUT @cnt TO @output_file USING Outputters.Text();
    */

    @cnt =
        SELECT message["message"] AS iotmessage,
               message["event"] AS msgevent,
               message["object"] AS msgobject,
               message["status"] AS msgstatus,
               message["host"] AS msghost
        FROM @jsonify;

    OUTPUT @cnt TO @output_file USING Outputters.Text();

The output displays a column for each item in the SELECT command.
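To make the effect of parsing more concrete, here is a minimal Python sketch of the same idea: parse each body as JSON, project the five fields that the script selects, and then filter on one of them. The `project` helper, the sample payloads, and the choice of filtering on `status` are assumptions made for illustration and do not come from the script itself.

```python
import json

# Field names taken from the SELECT list in the script.
FIELDS = ["message", "event", "object", "status", "host"]


def project(body: bytes) -> dict:
    """Parse a UTF-8 JSON body and keep only the selected fields."""
    parsed = json.loads(body.decode("utf-8"))
    # Missing fields become None rather than raising an error.
    return {name: parsed.get(name) for name in FIELDS}


# Hypothetical message bodies.
bodies = [
    b'{"message": "pump on", "event": "start", "object": "pump", "status": "ok", "host": "tub-01"}',
    b'{"message": "overheat", "event": "alarm", "object": "heater", "status": "error", "host": "tub-02"}',
]

rows = [project(body) for body in bodies]

# Similar in spirit to adding a WHERE clause on the parsed status field.
errors = [row for row in rows if row["status"] == "error"]
print(errors)
```

Once the fields are separate columns, filtering becomes a simple comparison instead of a search through a raw string.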

Next steps

In this tutorial, you learned how to query Avro data to efficiently route messages from Azure IoT Hub to Azure services.

To learn more about message routing in IoT Hub, see Use IoT Hub message routing.

To learn more about routing query syntax, see IoT Hub message routing query syntax.