This tutorial is part four of a series. It shows how to use ELK (Elasticsearch, Logstash, and Kibana) to monitor Service Fabric applications running in Azure.

In part four of the series, you learn how to:

- Set up an ELK server in Azure

- Configure Logstash to receive logs from Event Hubs

- Visualize platform and application logs in Kibana

In this tutorial series you learn how to:

- Build a Java Service Fabric Reliable Services application

- Deploy and debug the application on a local cluster

- Deploy application to an Azure cluster

- Set up monitoring and diagnostics for the application

- Set up CI/CD

Prerequisites

Before you begin this tutorial:

- If you don't have an Azure subscription, create a free account

- Set up your application to emit logs to the location specified in part two.

- Complete part three and have a running Service Fabric cluster configured to send logs to Event Hubs.

- Have the name of the Event Hubs policy that has the 'Listen' permission, and its associated primary key, from part three.

Download the Voting sample application

If you did not build the Voting sample application in part one of this tutorial series, you can download it. In a command window, run the following command to clone the sample app repository to your local machine.

git clone https://github.com/Azure-Samples/service-fabric-java-quickstart

Create an ELK server in Azure

You can use a preconfigured ELK environment for this tutorial. If you already have one, skip to the Set up ELK section. If you do not have one, the following steps create one in Azure.

Create an ELK Certified by Bitnami server in Azure. This tutorial does not require any particular specifications for the server.

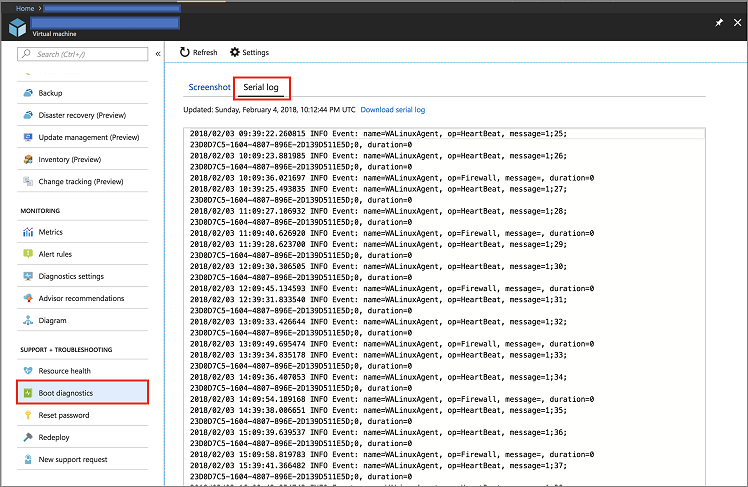

Go to your resource in the Azure portal and open the Boot Diagnostics tab under the Support + Troubleshooting section. Next, click on the Serial Log tab.

Search the logs for the password that is required to access the Kibana instance. It resembles the following snippet:

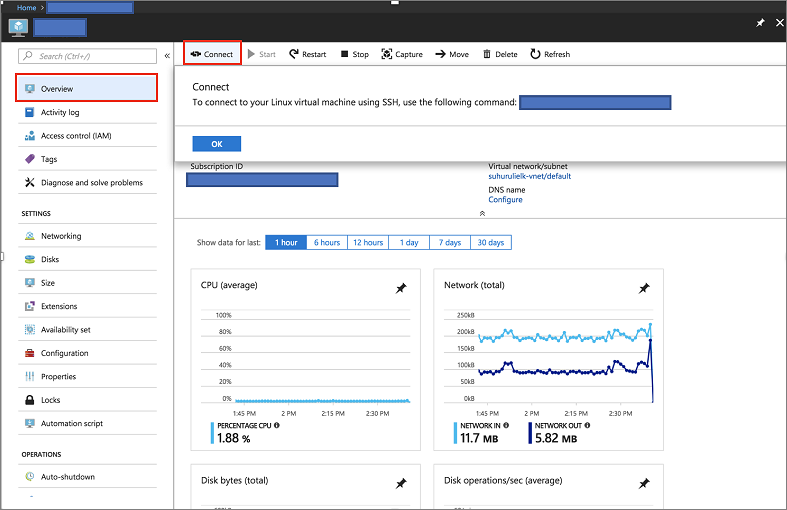

[ 25.932766] bitnami[1496]: #########################################################################
[ 25.948656] bitnami[1496]: # #
[ 25.962448] bitnami[1496]: # Setting Bitnami application password to '[PASSWORD]' #
[ 25.978137] bitnami[1496]: # (the default application username is 'user') #
[ 26.004770] bitnami[1496]: # #
[ 26.029413] bitnami[1496]: #########################################################################

Press the connect button on the Overview page of the server in Azure portal to get the login details.
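If you save a copy of the serial log locally, the password line can be extracted with standard tools instead of searching by eye. The following is a minimal sketch, assuming the log contains the "Setting Bitnami application password to" line shown in the snippet above. The function name `extract_bitnami_password` and the file name `serial.log` are hypothetical and are not part of the Bitnami image.

```shell
# Hypothetical helper: read a serial log on standard input and print the
# Bitnami application password from the first matching banner line.
extract_bitnami_password() {
  # Keep only the text between the single quotes on the password line.
  sed -n "s/.*Setting Bitnami application password to '\([^']*\)'.*/\1/p" | head -n 1
}

# Usage, assuming the serial log was saved as serial.log (hypothetical name):
#   extract_bitnami_password < serial.log
```

The helper prints nothing if the log has no password line, so an empty result usually means the wrong log was saved or the server has not finished booting.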

SSH into the server hosting the ELK image using the following command:

ssh [USERNAME]@[CONNECTION-IP-OF-SERVER]

Example: ssh testaccount@104.40.63.157

Set up ELK

The first step is to load the ELK environment

sudo /opt/bitnami/use_elk

If you are using an existing environment, you have to run the following command to stop the Logstash service:

sudo /opt/bitnami/ctlscript.sh stop logstash

Run the following command to install the Logstash plugin for Event Hubs.

logstash-plugin install logstash-input-azureeventhub

Create or modify your existing Logstash config file with the following contents. If you are creating the file, it has to be created at /opt/bitnami/logstash/conf/access-log.conf if using the ELK Bitnami image in Azure.

input {
  azureeventhub {
    key => "[RECEIVER-POLICY-KEY-FOR-EVENT-HUB]"
    username => "[RECEIVER-POLICY-NAME]"
    namespace => "[EVENTHUB-NAMESPACENAME]"
    eventhub => "[EVENTHUB-NAME]"
    partitions => 4
  }
}

output {
  elasticsearch {
    hosts => [ "127.0.0.1:9200" ]
  }
}

To verify the configuration, run the following command:

/opt/bitnami/logstash/bin/logstash -f /opt/bitnami/logstash/conf/ --config.test_and_exit

Start the Logstash service:

sudo /opt/bitnami/ctlscript.sh start logstash

Check your Elasticsearch to make sure you are receiving data:

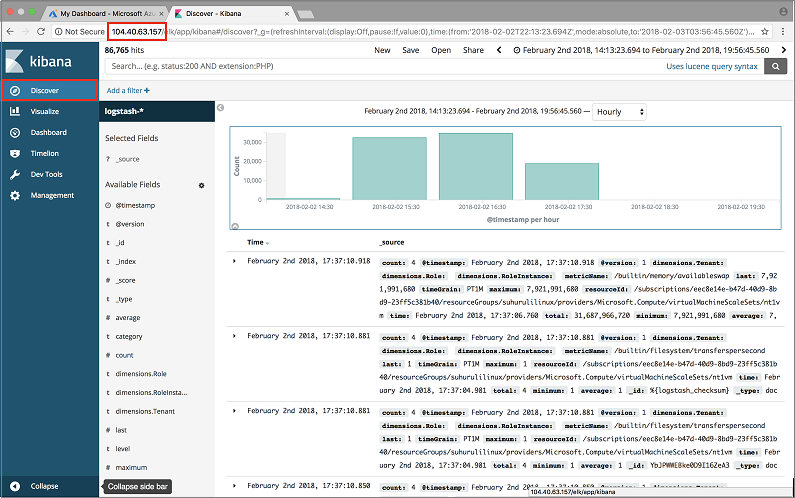

curl 'localhost:9200/_cat/indices?v'

Access your Kibana dashboard at http://SERVER-IP and enter the username and password for Kibana. If you used the ELK image in Azure, the default username is 'user' and the password is the one obtained from the Boot Diagnostics.
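If Kibana shows no data, it can help to confirm from the command line that the Elasticsearch check above actually reported documents. The following is a rough sketch, assuming Logstash writes to indices whose names begin with `logstash-` (the usual default for the elasticsearch output when no index is set in the config) and that the `_cat/indices?v` output includes the `index` and `docs.count` header columns. The function name `has_logstash_docs` is hypothetical.

```shell
# Hypothetical helper: read `_cat/indices?v` output on standard input and
# succeed only if at least one logstash-* index reports a non-zero docs.count.
has_logstash_docs() {
  awk '
    NR == 1 {
      # Locate the columns by header name rather than by fixed position.
      for (i = 1; i <= NF; i++) {
        if ($i == "index") idx = i
        if ($i == "docs.count") cnt = i
      }
      next
    }
    # Assumption: Logstash indices are named logstash-*.
    idx && cnt && $idx ~ /^logstash-/ && $cnt + 0 > 0 { found = 1 }
    END { exit(found ? 0 : 1) }
  '
}

# Usage on the ELK server:
#   curl -s 'localhost:9200/_cat/indices?v' | has_logstash_docs && echo "receiving data"
```

If your Logstash config sets a custom index name, adjust the `logstash-` pattern to match it.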

Next steps

In this tutorial, you learned how to:

- Get an ELK server up and running in Azure

- Configure the server to receive diagnostic information from your Service Fabric cluster

Advance to the next tutorial: