Tutorial: Build machine learning applications using Synapse Machine Learning

In this article, you learn how to use Synapse Machine Learning (SynapseML) to create machine learning applications. SynapseML expands the distributed machine learning capabilities of Apache Spark by adding many deep learning and data science tools, such as Azure AI services, OpenCV, LightGBM, and more. SynapseML allows you to build powerful and highly scalable predictive and analytical models from various Spark data sources. Synapse Spark provides built-in SynapseML libraries, including:

- Vowpal Wabbit - A machine learning library that enables text analytics, such as sentiment analysis of tweets.

- MMLSpark: Unifying Machine Learning Ecosystems at Massive Scales - Combines the features of Azure AI services with SparkML pipelines to build cognitive data modeling solutions, such as anomaly detection.

- LightGBM - A gradient boosting framework that uses tree-based learning algorithms. It is designed to be distributed and highly efficient.

- Conditional KNN - Scalable k-nearest neighbors (KNN) models with conditional queries.

- HTTP on Spark - Enables distributed microservice orchestration by integrating Spark with HTTP-based services.

This tutorial covers samples that use Azure AI services in SynapseML for:

- Text Analytics - get the sentiment (or mood) of a set of sentences.

- Computer Vision - get the tags (one-word descriptions) associated with a set of images.

- Bing Image Search - search the web for images related to a natural language query.

- Anomaly Detector - detect anomalies within time series data.

- Speech to Text - transcribe spoken audio files to text.

If you don't have an Azure subscription, create a free account before you begin.

Prerequisites

- Azure Synapse Analytics workspace with an Azure Data Lake Storage Gen2 storage account configured as the default storage. You need the Storage Blob Data Contributor role on the Data Lake Storage Gen2 file system that you work with.

- Spark pool in your Azure Synapse Analytics workspace. For details, see Create a Spark pool in Azure Synapse.

- Pre-configuration steps described in the tutorial Configure Azure AI services in Azure Synapse.

Get started

To get started, import SynapseML and configure service keys.

```python
import synapse.ml
from synapse.ml.cognitive import *
from notebookutils import mssparkutils

# An Azure AI services multi-service resource key for Text Analytics, Computer Vision, and Speech (or use separate keys that belong to each service)
ai_service_key = mssparkutils.credentials.getSecret("ADD_YOUR_KEY_VAULT_NAME", "ADD_YOUR_SERVICE_KEY", "ADD_YOUR_KEY_VAULT_LINKED_SERVICE_NAME")

# A Bing Search v7 subscription key
bingsearch_service_key = mssparkutils.credentials.getSecret("ADD_YOUR_KEY_VAULT_NAME", "ADD_YOUR_BING_SEARCH_KEY", "ADD_YOUR_KEY_VAULT_LINKED_SERVICE_NAME")

# An Anomaly Detector subscription key
anomalydetector_key = mssparkutils.credentials.getSecret("ADD_YOUR_KEY_VAULT_NAME", "ADD_YOUR_ANOMALY_KEY", "ADD_YOUR_KEY_VAULT_LINKED_SERVICE_NAME")
```
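`mssparkutils` is only available inside a Synapse notebook session. If you also want to run these samples somewhere else, such as a local test environment, one option is to fall back to environment variables. The following is a minimal sketch under that assumption; the `load_key` helper and the environment variable names are hypothetical and are not part of SynapseML or Synapse.

```python
import os


def load_key(secret_name, env_var, get_secret=None):
    """Return a service key (hypothetical helper, not part of SynapseML).

    Tries get_secret(secret_name) first, for example a wrapper around
    mssparkutils.credentials.getSecret inside Synapse, and falls back to the
    environment variable env_var if no secret store is available.
    """
    if get_secret is not None:
        try:
            value = get_secret(secret_name)
            if value:
                return value
        except Exception:
            # Secret store unreachable or secret missing: fall back to the environment.
            pass
    value = os.environ.get(env_var)
    if not value:
        raise KeyError(f"no key for {secret_name!r}; set the {env_var} environment variable")
    return value


# Example usage inside Synapse (the environment variable name is hypothetical):
# ai_service_key = load_key(
#     "ADD_YOUR_SERVICE_KEY", "AI_SERVICE_KEY",
#     lambda name: mssparkutils.credentials.getSecret(
#         "ADD_YOUR_KEY_VAULT_NAME", name, "ADD_YOUR_KEY_VAULT_LINKED_SERVICE_NAME"))
```

Keeping the secret lookup behind a callable means the same helper works with or without `mssparkutils`, and keys never have to be pasted into the notebook.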

Text analytics sample

The Text Analytics service provides several algorithms for extracting intelligent insights from text. For example, it can determine the sentiment of a given input text. For each document, the service returns a sentiment label (positive, neutral, negative, or mixed) together with confidence scores between 0.0 and 1.0. This sample uses three simple sentences and returns the sentiment label for each.

```python
from pyspark.sql.functions import col

# Create a DataFrame with a "text" column and a "language" column
df_sentences = spark.createDataFrame([
    ("I am so happy today, its sunny!", "en-US"),
    ("this is a dog", "en-US"),
    ("I am frustrated by this rush hour traffic!", "en-US")
], ["text", "language"])

# Run the Text Analytics service with options
sentiment = (TextSentiment()
    .setTextCol("text")
    .setLocation("eastasia")  # Set the location of your Azure AI services resource
    .setSubscriptionKey(ai_service_key)
    .setOutputCol("sentiment")
    .setErrorCol("error")
    .setLanguageCol("language"))

# Show the sentiment label of each sentence in a table
display(sentiment.transform(df_sentences).select("text", col("sentiment")[0].getItem("sentiment").alias("sentiment")))
```

Expected results

| text | sentiment |
|---|---|
| I am frustrated by this rush hour traffic! | negative |
| this is a dog | neutral |
| I am so happy today, its sunny! | positive |
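The expression `col("sentiment")[0].getItem("sentiment")` takes the first element of the array in the `sentiment` output column and reads its `sentiment` field. The plain-Python sketch below mirrors that indexing on a hand-written record and also picks the highest per-label confidence score. The record layout (a list holding a dict with `sentiment` and `confidenceScores` keys) is an assumption modeled on the Text Analytics v3 document result rather than the exact SynapseML schema, and the score values are illustrative only.

```python
def sentiment_label(result):
    """Mirror col("sentiment")[0].getItem("sentiment"): first element, "sentiment" field.

    Returns None when there is no result, for example when a row failed and
    only the error column is populated.
    """
    if not result:
        return None
    return result[0].get("sentiment")


def top_confidence(result):
    """Return (label, score) for the highest confidence score of the first document."""
    scores = result[0]["confidenceScores"]
    label = max(scores, key=scores.get)
    return label, scores[label]


# Illustrative record shaped like a v3 document sentiment result (values are made up).
example = [{
    "sentiment": "negative",
    "confidenceScores": {"positive": 0.01, "neutral": 0.04, "negative": 0.95},
}]

print(sentiment_label(example))  # negative
print(top_confidence(example))   # ('negative', 0.95)
```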

Computer vision sample

Computer Vision analyzes images to identify structure such as faces, objects, and natural-language descriptions. In this sample, we tag the image stored at the URL in the code below. Tags are one-word descriptions of things in the image, such as recognizable objects, people, scenery, and actions.

```python
# Create a DataFrame with the image URL
df_images = spark.createDataFrame([
    ("https://raw.githubusercontent.com/Azure-Samples/cognitive-services-sample-data-files/master/ComputerVision/Images/objects.jpg", )
], ["image", ])

# Run the Computer Vision service. AnalyzeImage extracts the requested visual features from each image.
analysis = (AnalyzeImage()
    .setLocation("eastasia")  # Set the location of your Azure AI services resource
    .setSubscriptionKey(ai_service_key)
    .setVisualFeatures(["Categories", "Color", "Description", "Faces", "Objects", "Tags"])
    .setOutputCol("analysis_results")
    .setImageUrlCol("image")
    .setErrorCol("error"))

# Show the tags that the service extracted from each image
display(analysis.transform(df_images).select("image", "analysis_results.description.tags"))
```

Expected results

| image | tags |
|---|---|
| https://raw.githubusercontent.com/Azure-Samples/cognitive-services-sample-data-files/master/ComputerVision/Images/objects.jpg | [skating, person, man, outdoor, riding, sport, skateboard, young, board, shirt, air, park, boy, side, jumping, ramp, trick, doing, flying] |
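Selecting `analysis_results.description.tags` walks nested struct fields by name. The sketch below reproduces that dotted-path lookup on a plain dictionary shaped like an Analyze Image result. The `get_path` helper and the abbreviated record are illustrative assumptions; the tag values are copied from the expected results and truncated.

```python
from functools import reduce


def get_path(record, dotted_path):
    """Follow a dotted path such as "description.tags" through nested dicts.

    Returns None if any segment is missing or an intermediate value is not a dict.
    """
    def step(node, key):
        if isinstance(node, dict):
            return node.get(key)
        return None

    return reduce(step, dotted_path.split("."), record)


# Hypothetical, abbreviated record shaped like an Analyze Image result.
analysis_results = {
    "description": {
        "tags": ["skating", "person", "man", "outdoor", "riding"],
    },
}

print(get_path(analysis_results, "description.tags"))
```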

Bing image search sample

Bing Image Search searches the web to retrieve images related to a user's natural language query. In this sample, we use a text query that looks for images with quotes. It returns a list of image URLs that contain photos related to our query.

```python
from pyspark.ml import PipelineModel

# Number of images Bing will return per request
imgsPerBatch = 2

# A list of offsets, used to page into the search results
offsets = [(i*imgsPerBatch,) for i in range(10)]

# Create a DataFrame of offsets; each row becomes one search request for a different page of results
bingParameters = spark.createDataFrame(offsets, ["offset"])

# Run the Bing Image Search service with our text query
bingSearch = (BingImageSearch()
    .setSubscriptionKey(bingsearch_service_key)
    .setOffsetCol("offset")
    .setQuery("Martin Luther King Jr. quotes")
    .setCount(imgsPerBatch)
    .setOutputCol("images"))

# Transformer that extracts and flattens the richly structured output of Bing Image Search into a simple URL column
getUrls = BingImageSearch.getUrlTransformer("images", "url")

pipeline_bingsearch = PipelineModel(stages=[bingSearch, getUrls])

# Show the results of your search: image URLs
res_bingsearch = pipeline_bingsearch.transform(bingParameters)
display(res_bingsearch.dropDuplicates())
```

Expected results

| image |
|---|
| http://everydaypowerblog.com/wp-content/uploads/2014/01/Martin-Luther-King-Jr.-Quotes-16.jpg |
| http://www.scrolldroll.com/wp-content/uploads/2017/06/6-25.png |
| http://abettertodaymedia.com/wp-content/uploads/2017/01/86783bd7a92960aedd058c91a1d10253.jpg |
| https://weneedfun.com/wp-content/uploads/2016/05/martin-luther-king-jr-quotes-11.jpg |
| http://www.sofreshandsogreen.com/wp-content/uploads/2012/01/martin-luther-king-jr-quote-sofreshandsogreendotcom.jpg |
| https://cdn.quotesgram.com/img/72/57/1104209728-martin_luther_king_jr_quotes_16.jpg |
| http://comicbookandbeyond.com/wp-content/uploads/2019/05/Martin-Luther-King-Jr.-Quotes.jpg |
| https://exposingthepain.files.wordpress.com/2015/01/martin-luther-king-jr-quotes-08.png |
| https://topmemes.me/wp-content/uploads/2020/01/Top-10-Martin-Luther-King-jr.-Quotes2-1024x538.jpg |
| http://img.picturequotes.com/2/581/580286/dr-martin-luther-king-jr-quote-1-picture-quote-1.jpg |
| http://parryz.com/wp-content/uploads/2017/06/Amazing-Martin-Luther-King-Jr-Quotes.jpg |
| http://everydaypowerblog.com/wp-content/uploads/2014/01/Martin-Luther-King-Jr.-Quotes1.jpg |
| https://lessonslearnedinlife.net/wp-content/uploads/2020/05/Martin-Luther-King-Jr.-Quotes-2020.jpg |
| https://quotesblog.net/wp-content/uploads/2015/10/Martin-Luther-King-Jr-Quotes-Wallpaper.jpg |
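Each row of `bingParameters` produces one request, and its `offset` tells the service how many results to skip, so with `imgsPerBatch = 2` the ten rows page through roughly the first 20 results. `getUrlTransformer` then flattens each response's list of images into one URL per row. The sketch below reproduces both steps in plain Python against an in-memory result list. `fake_search` is a hypothetical stand-in for the service, not a real API, and the response shape (a `value` list whose items carry `contentUrl`) is an assumption based on the Bing Image Search v7 response format.

```python
IMGS_PER_BATCH = 2
offsets = [i * IMGS_PER_BATCH for i in range(10)]  # same offsets as the Spark sample

# Hypothetical stand-in for the search service: 25 ranked results.
ALL_RESULTS = [f"https://example.com/img{n}.jpg" for n in range(25)]


def fake_search(offset, count):
    """Return a response dict shaped like {"value": [{"contentUrl": ...}, ...]}."""
    page = ALL_RESULTS[offset:offset + count]
    return {"value": [{"contentUrl": url} for url in page]}


def flatten_urls(responses):
    """Explode each response's "value" list into a flat list of URLs."""
    return [item["contentUrl"] for resp in responses for item in resp.get("value", [])]


responses = [fake_search(o, IMGS_PER_BATCH) for o in offsets]
urls = flatten_urls(responses)
unique_urls = list(dict.fromkeys(urls))  # drop duplicates, keep first-seen order

print(offsets)    # [0, 2, 4, 6, 8, 10, 12, 14, 16, 18]
print(len(urls))  # 20
```

Live search results can overlap between pages, which is one reason to deduplicate the flattened URLs, as the Spark sample does with `dropDuplicates()`.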

Anomaly detector sample

Anomaly Detector detects irregularities in time series data. In this sample, we use the service to detect anomalies across the entire time series.
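`setGranularity("monthly")` tells the service how far apart consecutive points are expected to be. Before calling the service, it can help to check locally that the series is sorted, has no missing months, and is long enough. The Anomaly Detector documentation describes a minimum of 12 points for detection over an entire series, and the helper below uses that as its default. `check_monthly_series` is a hypothetical sketch, not part of SynapseML, and it is stricter than the service may be about gaps.

```python
from datetime import datetime


def check_monthly_series(timestamps, min_points=12):
    """Return a list of problems found in a monthly series (empty list means OK).

    Assumes ISO-8601 UTC timestamps such as "1972-01-01T00:00:00Z".
    """
    problems = []
    if len(timestamps) < min_points:
        problems.append(f"only {len(timestamps)} points; need at least {min_points}")
    parsed = [datetime.strptime(ts, "%Y-%m-%dT%H:%M:%SZ") for ts in timestamps]
    for prev, cur in zip(parsed, parsed[1:]):
        # Whole months between consecutive points; gaps, duplicates, and
        # out-of-order points all produce a value other than 1.
        months = (cur.year - prev.year) * 12 + (cur.month - prev.month)
        if months != 1:
            problems.append(f"{prev:%Y-%m} -> {cur:%Y-%m} is {months} months apart")
    return problems


# The same timestamps as the sample: January 1972 through March 1973.
timestamps = [f"1972-{m:02d}-01T00:00:00Z" for m in range(1, 13)] + [
    f"1973-{m:02d}-01T00:00:00Z" for m in range(1, 4)
]
print(check_monthly_series(timestamps))  # []
```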

```python
from pyspark.sql.functions import lit

# Create a DataFrame with the timestamp/value points that Anomaly Detector requires
df_timeseriesdata = spark.createDataFrame([
    ("1972-01-01T00:00:00Z", 826.0),
    ("1972-02-01T00:00:00Z", 799.0),
    ("1972-03-01T00:00:00Z", 890.0),
    ("1972-04-01T00:00:00Z", 900.0),
    ("1972-05-01T00:00:00Z", 766.0),
    ("1972-06-01T00:00:00Z", 805.0),
    ("1972-07-01T00:00:00Z", 821.0),
    ("1972-08-01T00:00:00Z", 20000.0),  # anomaly
    ("1972-09-01T00:00:00Z", 883.0),
    ("1972-10-01T00:00:00Z", 898.0),
    ("1972-11-01T00:00:00Z", 957.0),
    ("1972-12-01T00:00:00Z", 924.0),
    ("1973-01-01T00:00:00Z", 881.0),
    ("1973-02-01T00:00:00Z", 837.0),
    ("1973-03-01T00:00:00Z", 9000.0)  # anomaly
], ["timestamp", "value"]).withColumn("group", lit("series1"))

# Run the Anomaly Detector service to look for irregular data
anomaly_detector = (SimpleDetectAnomalies()
    .setSubscriptionKey(anomalydetector_key)
    .setLocation("eastasia")  # Set the location of your Anomaly Detector resource
    .setTimestampCol("timestamp")
    .setValueCol("value")
    .setOutputCol("anomalies")
    .setGroupbyCol("group")  # Rows that share a group value are analyzed as one series
    .setGranularity("monthly"))

# Show the full results of the analysis, with anomalies marked as true
display(anomaly_detector.transform(df_timeseriesdata).select("timestamp", "value", "anomalies.isAnomaly"))
```

Expected results

| timestamp | value | isAnomaly |
|---|---|---|
| 1972-01-01T00:00:00Z | 826.0 | false |
| 1972-02-01T00:00:00Z | 799.0 | false |
| 1972-03-01T00:00:00Z | 890.0 | false |
| 1972-04-01T00:00:00Z | 900.0 | false |
| 1972-05-01T00:00:00Z | 766.0 | false |
| 1972-06-01T00:00:00Z | 805.0 | false |
| 1972-07-01T00:00:00Z | 821.0 | false |
| 1972-08-01T00:00:00Z | 20000.0 | true |
| 1972-09-01T00:00:00Z | 883.0 | false |
| 1972-10-01T00:00:00Z | 898.0 | false |
| 1972-11-01T00:00:00Z | 957.0 | false |
| 1972-12-01T00:00:00Z | 924.0 | false |
| 1973-01-01T00:00:00Z | 881.0 | false |
| 1973-02-01T00:00:00Z | 837.0 | false |
| 1973-03-01T00:00:00Z | 9000.0 | true |
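To build intuition for why the two flagged points stand out, the sketch below applies a simple, different technique locally: a robust z-score based on the median absolute deviation (MAD). This is not the algorithm Anomaly Detector uses; it only shows that 20000.0 and 9000.0 lie far from the rest of this series. The 0.6745 constant and the 3.5 threshold follow the common modified z-score convention and are assumptions of this sketch.

```python
from statistics import median


def mad_outliers(values, threshold=3.5):
    """Flag values whose modified z-score exceeds threshold.

    modified z = 0.6745 * (x - median) / MAD, where MAD = median(|x - median|).
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # Most values equal the median; avoid dividing by zero and flag any value that differs.
        return [v != med for v in values]
    return [abs(0.6745 * (v - med) / mad) > threshold for v in values]


# The same points as the Spark sample.
series = [
    ("1972-01-01T00:00:00Z", 826.0), ("1972-02-01T00:00:00Z", 799.0),
    ("1972-03-01T00:00:00Z", 890.0), ("1972-04-01T00:00:00Z", 900.0),
    ("1972-05-01T00:00:00Z", 766.0), ("1972-06-01T00:00:00Z", 805.0),
    ("1972-07-01T00:00:00Z", 821.0), ("1972-08-01T00:00:00Z", 20000.0),
    ("1972-09-01T00:00:00Z", 883.0), ("1972-10-01T00:00:00Z", 898.0),
    ("1972-11-01T00:00:00Z", 957.0), ("1972-12-01T00:00:00Z", 924.0),
    ("1973-01-01T00:00:00Z", 881.0), ("1973-02-01T00:00:00Z", 837.0),
    ("1973-03-01T00:00:00Z", 9000.0),
]

flags = mad_outliers([v for _, v in series])
anomalies = [ts for (ts, _), flag in zip(series, flags) if flag]
print(anomalies)  # ['1972-08-01T00:00:00Z', '1973-03-01T00:00:00Z']
```

For this series the median is 883.0 and the MAD is 57.0, so an ordinary month such as 766.0 scores about 1.4, while 9000.0 scores about 96.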

Speech-to-text sample

The Speech-to-text service converts streams or files of spoken audio to text. In this sample, we transcribe one audio file to text.
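If you transcribe your own WAV files, it can help to confirm their format before sending them. The sketch below uses Python's standard `wave` module to read a WAV header and compare it with 16 kHz, 16-bit, mono PCM, which is assumed here as the target format because it is the Speech SDK's default input format. The `check_wav` and `make_silent_wav` helpers are hypothetical and not part of SynapseML; the sketch does not download the sample URL and works on any file-like object.

```python
import io
import wave


def check_wav(fileobj, rate=16000, sample_width_bytes=2, channels=1):
    """Return a list of mismatches between a WAV header and the expected format."""
    with wave.open(fileobj, "rb") as wav:
        actual = {
            "sample rate": (wav.getframerate(), rate),
            "sample width (bytes)": (wav.getsampwidth(), sample_width_bytes),
            "channels": (wav.getnchannels(), channels),
        }
    return [f"{name}: got {got}, expected {want}"
            for name, (got, want) in actual.items() if got != want]


def make_silent_wav(rate, channels, seconds=0.1):
    """Build an in-memory 16-bit PCM WAV of silence, for trying out check_wav."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(channels)
        wav.setsampwidth(2)
        wav.setframerate(rate)
        wav.writeframes(b"\x00\x00" * channels * int(rate * seconds))
    buf.seek(0)
    return buf


print(check_wav(make_silent_wav(16000, 1)))  # []
print(check_wav(make_silent_wav(44100, 2)))
```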

```python
# Create a DataFrame with the audio URL in a column named "url"
df = spark.createDataFrame([
    ("https://mmlspark.blob.core.windows.net/datasets/Speech/audio2.wav",)
], ["url"])

# Run the Speech-to-text service to transcribe the audio into text
speech_to_text = (SpeechToTextSDK()
    .setSubscriptionKey(ai_service_key)
    .setLocation("eastasia")  # Set the location of the Azure AI services resource that issued ai_service_key
    .setOutputCol("text")
    .setAudioDataCol("url")
    .setLanguage("en-US")
    .setProfanity("Masked"))

# Show the transcription results
display(speech_to_text.transform(df).select("url", "text.DisplayText"))
```

Expected results

| url | DisplayText |
|---|---|
| https://mmlspark.blob.core.windows.net/datasets/Speech/audio2.wav | Custom Speech provides tools that allow you to visually inspect the recognition quality of a model by comparing audio data with the corresponding recognition result from the custom speech portal. You can playback uploaded audio and determine if the provided recognition result is correct. This tool allows you to quickly inspect quality of Microsoft's baseline speech to text model or a trained custom model without having to transcribe any audio data. |

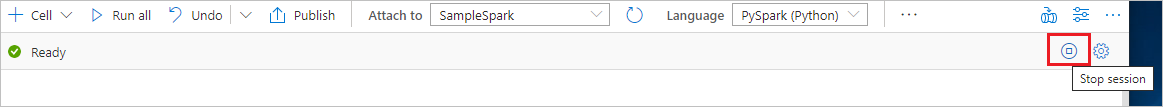

Clean up resources

To ensure the Spark instance is shut down, end any connected sessions (notebooks). The pool shuts down when the idle time specified in the Apache Spark pool is reached. You can also select Stop session from the status bar at the upper right of the notebook.