Within Microsoft 365, OneDrive is built on top of the SharePoint file platform, so this article uses "SharePoint" to refer to both products. This article also applies to any other products that store data in SharePoint, such as cloud attachments, files shared in Teams, Teams meeting recordings and transcripts, Loop components, and Whiteboards.

There are two primary assets that make up the core content storage of SharePoint:

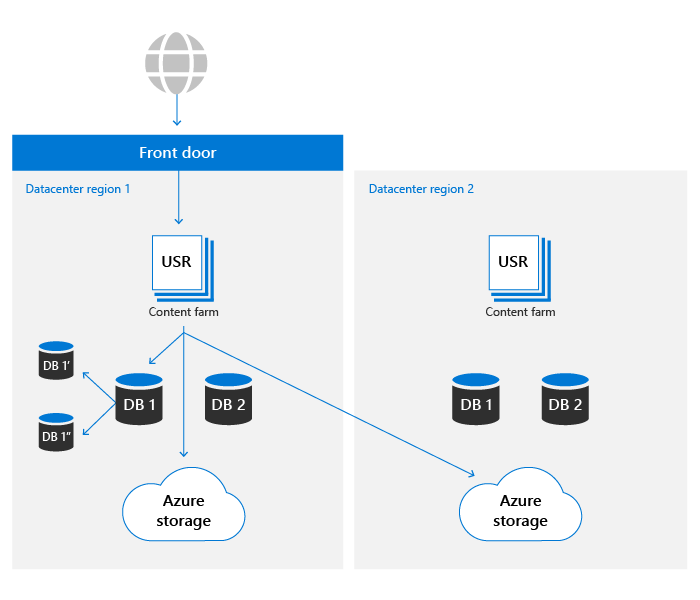

- Blob storage: User content that is uploaded into SharePoint is stored in Azure Storage. SharePoint has built a custom resiliency plan on top of Azure Storage to ensure near real-time duplication of user content and a truly active/active system.

- Metadata: Metadata about each file is stored in Azure SQL Database. SharePoint relies on the business continuity features that Azure SQL provides, which are covered later in this article.

The complete set of controls that ensure data resiliency is explained in the following sections.

Blob storage resilience

SharePoint has a custom-built solution for storage of customer data in Azure Storage. Every file is simultaneously written into both a primary and a secondary datacenter region. If the write to either Azure region fails, the file save fails. After the contents are written into Azure Storage, checksums are stored separately with the metadata and are used during all future reads to verify that the committed write is identical to the original file. The same technique is used in all workflows to prevent the propagation of any corruption that might occur. Within each region, Azure Locally Redundant Storage (LRS) provides a high level of reliability.
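The dual-write and checksum behavior described above can be modeled with a short sketch. It is a conceptual illustration only, not SharePoint's implementation: `RegionStore`, `save_file`, and `read_file` are hypothetical names, SHA-256 is an assumed checksum algorithm (the article doesn't name one), the two writes run sequentially rather than simultaneously, and the rollback of a partial write is an assumption added to keep the example consistent.

```python
import hashlib


class RegionUnavailable(Exception):
    """Raised by the hypothetical region store when a write cannot complete."""


class RegionStore:
    """In-memory stand-in for a blob container in one Azure region."""

    def __init__(self, name, healthy=True):
        self.name = name
        self.healthy = healthy
        self.blobs = {}

    def put(self, blob_id, data):
        if not self.healthy:
            raise RegionUnavailable(self.name)
        self.blobs[blob_id] = data

    def get(self, blob_id):
        return self.blobs[blob_id]

    def delete(self, blob_id):
        self.blobs.pop(blob_id, None)


def save_file(blob_id, data, primary, secondary, metadata):
    """Write to both regions; the save succeeds only if both writes succeed."""
    written = []
    try:
        for store in (primary, secondary):
            store.put(blob_id, data)
            written.append(store)
    except RegionUnavailable:
        # Assumption: undo the partial write so no half-saved file remains.
        for store in written:
            store.delete(blob_id)
        raise
    # The checksum is kept with the metadata, separately from the blob itself.
    metadata[blob_id] = hashlib.sha256(data).hexdigest()


def read_file(blob_id, store, metadata):
    """Return the blob only if it still matches the checksum taken at save time."""
    data = store.get(blob_id)
    if hashlib.sha256(data).hexdigest() != metadata[blob_id]:
        raise ValueError(f"checksum mismatch for {blob_id} in {store.name}")
    return data
```

In this model, a save against one unhealthy region raises `RegionUnavailable` and leaves no blob or checksum behind, and a read of a blob whose bytes changed after the save raises `ValueError` instead of returning corrupted content.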

SharePoint uses Append-Only storage, meaning that Microsoft can only add new blobs and can never change old ones until they're permanently deleted. This process ensures that files can't be changed or corrupted after an initial save, protecting against attackers that try to corrupt old versions. Since version integrity protection is built into SharePoint's architecture, previous versions of the file contents can be retrieved, depending on individual administrator settings.
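As a minimal sketch of the append-only idea, the following model stores every save as a new immutable blob and keeps earlier versions readable. `AppendOnlyStore`, `VersionedFile`, and the blob naming scheme are hypothetical and chosen only for illustration; they don't reflect SharePoint's internal design.

```python
class AppendOnlyStore:
    """Blob store that accepts new blobs but never overwrites existing ones."""

    def __init__(self):
        self._blobs = {}

    def add(self, blob_id, data):
        if blob_id in self._blobs:
            raise PermissionError("blobs are immutable; write a new blob instead")
        self._blobs[blob_id] = bytes(data)

    def get(self, blob_id):
        return self._blobs[blob_id]


class VersionedFile:
    """Each save appends a new blob, so older versions stay intact."""

    def __init__(self, store, name):
        self.store = store
        self.name = name
        self.versions = []  # blob IDs, oldest first

    def save(self, data):
        blob_id = f"{self.name}/v{len(self.versions) + 1}"  # hypothetical naming
        self.store.add(blob_id, data)
        self.versions.append(blob_id)
        return blob_id

    def read(self, version=None):
        """Read the latest version, or a 1-based earlier version."""
        blob_id = self.versions[-1] if version is None else self.versions[version - 1]
        return self.store.get(blob_id)
```

An attempt to call `add` with the ID of an existing blob fails, which is the property that keeps an attacker from silently rewriting an old version.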

SharePoint environments in either datacenter can access storage containers in both Azure regions. For performance reasons, the storage container in the local datacenter is always preferred. However, if a read request doesn't return results within a desired threshold, the same content is requested from the remote datacenter to ensure that data is always available.
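The read path above resembles a timeout-based fallback. The sketch below shows one way to express it, assuming hypothetical `local_read` and `remote_read` callables and an arbitrary 50 ms threshold; the actual threshold and mechanism SharePoint uses are not stated in this article.

```python
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout


def read_with_fallback(blob_id, local_read, remote_read, threshold_s=0.05):
    """Prefer the local container; fall back to the remote one if it is too slow.

    Returns a (data, source) tuple where source is "local" or "remote".
    """
    pool = ThreadPoolExecutor(max_workers=2)
    try:
        local = pool.submit(local_read, blob_id)
        try:
            return local.result(timeout=threshold_s), "local"
        except FutureTimeout:
            # The local read exceeded the threshold: request the same
            # content from the remote region instead.
            remote = pool.submit(remote_read, blob_id)
            return remote.result(), "remote"
    finally:
        # Don't block the caller on a slow local read that is still running.
        pool.shutdown(wait=False)
```

This version simply returns the remote result after the threshold passes. A variant could return whichever of the two reads finishes first; the article doesn't say which behavior applies.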

Trial or unpaid accounts (e.g., Unlicensed OneDrive for Business) may be stored in a single Azure region only.

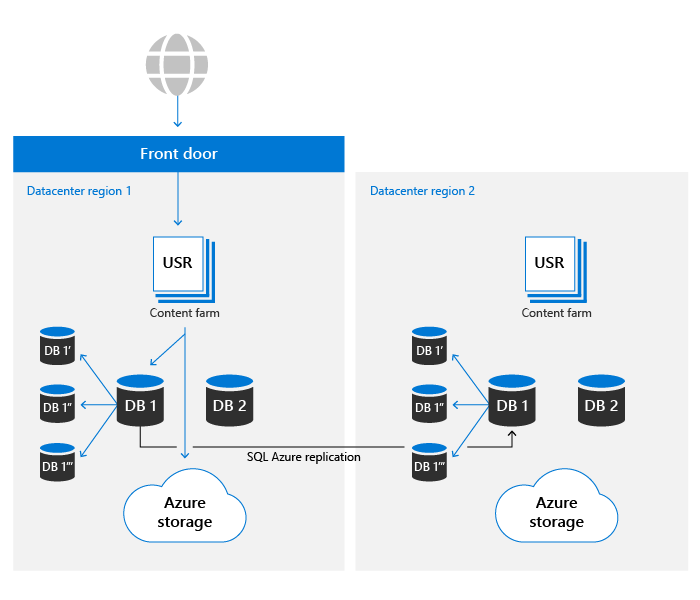

Metadata resilience

SharePoint metadata is also critical to accessing user content as it stores the location of and access keys to the content stored in Azure Storage. These databases are stored in Azure SQL, which has an extensive business continuity plan.

SharePoint uses the replication model provided by Azure SQL and has built proprietary automation to determine whether a failover is required and to initiate the operation if necessary. As such, it falls into the 'Manual database failover' category from an Azure SQL perspective. The latest metrics for Azure SQL database recoverability are available here.

SharePoint uses Azure SQL's backup system to enable Point in Time Restores (PITR) for up to 14 days.

Automated failover

SharePoint uses a custom-built automated failover to minimize the impact on customer experience when a location-specific event occurs. When monitoring-driven automation detects a single-component or multi-component failure beyond certain thresholds, it automatically redirects all user activity out of the problematic environment and over to a warm secondary. A failover results in metadata and compute storage being served entirely out of the new datacenter. Because blob storage always runs entirely active/active, no change to it is required for a failover. The compute tier prefers the nearest blob container but can use both local and remote blob storage locations at any time to ensure availability.
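The threshold-driven decision can be pictured with a very small sketch. Everything here is assumed for illustration: the function name, the idea of per-component probe failure rates, the environment labels, and the 0.5 threshold are hypothetical, since the article doesn't publish the signals or thresholds that the real automation uses.

```python
def pick_serving_environment(probe_failure_rates, threshold=0.5,
                             primary="primary", secondary="warm-secondary"):
    """Decide which environment should serve users.

    probe_failure_rates maps a component name to the fraction of failed
    health probes (0.0 to 1.0). If any component exceeds the threshold,
    all traffic is redirected to the secondary environment.
    """
    failing = sorted(c for c, rate in probe_failure_rates.items() if rate > threshold)
    if failing:
        return secondary, failing
    return primary, failing
```

For example, `pick_serving_environment({"sql": 0.9, "web": 0.2})` selects the secondary and reports `["sql"]` as the failing component, while healthy rates keep traffic on the primary.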

SharePoint uses the Azure Front Door service to provide routing internal to the Microsoft network. This configuration allows failover redirection independent of DNS and reduces the effect of local-machine caching. Most failover operations are transparent to end users. If there's a failover, customers don't need to make any changes in order to maintain access to the service.

Versioning and Files Restore

For newly created document libraries, SharePoint defaults to 500 versions of every file and can be configured to retain more versions if desired. The UI doesn't allow a value lower than 100 versions to be set, but it's possible to configure the system to store fewer versions by using public APIs. For reliability, a value lower than 100 isn't recommended because user activity can then cause inadvertent data loss.

For more information about versioning, see Versioning in SharePoint.

Files Restore is the ability to go 'back in time' on any document library in SharePoint to any second within the last 30 days. This process can be used to recover from ransomware, mass deletions, corruption, or any other event. Because this feature relies on file versions, reducing the default number of versions can reduce the effectiveness of a restore.

The Files Restore feature is documented for both OneDrive and SharePoint.
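To see why the version limit matters for Files Restore, consider a simplified model in which restoring a file means picking the newest version saved at or before the chosen restore point. The function and data layout below are hypothetical; only the 30-day window comes from this article.

```python
from bisect import bisect_right
from datetime import datetime, timedelta, timezone

RESTORE_WINDOW = timedelta(days=30)  # from the article


def version_at(history, restore_point, now):
    """Return the content of the newest version saved at or before restore_point.

    history is a list of (saved_at, content) tuples sorted by saved_at.
    Returns None when no retained version is old enough.
    """
    if not (now - RESTORE_WINDOW <= restore_point <= now):
        raise ValueError("restore point is outside the 30-day window")
    saved_times = [saved_at for saved_at, _ in history]
    index = bisect_right(saved_times, restore_point)
    return history[index - 1][1] if index else None


now = datetime(2024, 6, 30, tzinfo=timezone.utc)
history = [
    (now - timedelta(days=10), "v1"),
    (now - timedelta(days=5), "v2"),
    (now - timedelta(days=1), "v3 (encrypted by ransomware)"),
]

# With full history, restoring to two days ago returns the last good version.
print(version_at(history, now - timedelta(days=2), now))       # v2
# If a low version limit kept only the newest version, nothing can be restored.
print(version_at(history[-1:], now - timedelta(days=2), now))  # None
```

The second call shows the failure mode described above: when older versions have been trimmed, there is no version left that predates the restore point.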

Deletion, backup, and Point in Time Restore

User content deleted from SharePoint goes through the following deletion flow.

Deleted items are retained in recycle bins for a certain period of time. For SharePoint, the retention time is 93 days. It begins when you delete the item from its original location. When you delete the item from the site recycle bin, it goes into the site collection recycle bin. It stays there for the remainder of the 93 days, and then is permanently deleted. More information about how to use the recycle bin is available at these links:

This process is the default deletion flow and doesn't take into account retention policies or labels. For more information, see Learn about retention for SharePoint and OneDrive.

After the 93-day recycle pipeline is complete, deletion takes place independently for metadata and for blob storage. Metadata is removed immediately from the database, which makes the content unreadable unless the metadata is restored from backup. SharePoint maintains 14 days' worth of metadata backups. These backups are taken locally in near real time and then pushed to redundant Azure Storage containers on a schedule that, according to documentation at the time of this publication, runs every 5 to 10 minutes.

Customers also have the option to use Microsoft 365 Backup for data recovery. Microsoft 365 Backup offers a longer protection time and provides fast recovery from common business continuity and disaster recovery (BCDR) scenarios, such as ransomware or accidental or malicious overwriting or deletion of content by an employee. Additional BCDR scenario protections are also built directly into the service, offering an enhanced level of data protection.

When blob storage content is deleted, SharePoint uses the soft delete feature for Azure Blob Storage to protect against accidental or malicious deletion. With this feature, there are a total of 14 days in which to restore content before it's permanently deleted. Also, because blobs are immutable, Microsoft can always restore the state of a file for a 14-day period.
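The default timeline can be worked out with simple date arithmetic. The sketch below assumes that the 14-day metadata backup and blob soft-delete windows both start when the 93-day recycle pipeline ends, and it ignores retention policies, retention labels, and Microsoft 365 Backup. The function and key names are hypothetical.

```python
from datetime import date, timedelta

RECYCLE_DAYS = 93      # recycle bin retention, from the article
BACKUP_DAYS = 14       # metadata backups and blob soft delete, from the article


def deletion_timeline(deleted_on):
    """Return the key dates of the default deletion flow for one item."""
    purged_from_recycle_bin = deleted_on + timedelta(days=RECYCLE_DAYS)
    return {
        # Until this date, the item can be restored from a recycle bin.
        "recycle_bin_until": purged_from_recycle_bin,
        # Assumption: both 14-day windows start when the recycle pipeline ends.
        "backup_window_until": purged_from_recycle_bin + timedelta(days=BACKUP_DAYS),
    }


timeline = deletion_timeline(date(2024, 1, 1))
print(timeline["recycle_bin_until"])    # 2024-04-03
print(timeline["backup_window_until"])  # 2024-04-17
```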

Note

While Microsoft applications will send content to the recycle bin for the standard process, SharePoint does provide APIs that allow for skipping the recycle bin and forcing an immediate delete. Review your applications to ensure this is only done when necessary for compliance reasons.

Integrity Checks

SharePoint uses various methods to ensure the integrity of blobs and metadata at all stages of the data lifecycle:

- File hash stored in metadata: A hash of the entire file is stored with the file metadata to ensure that document-level data integrity is maintained during all operations.

- Blob hash stored in metadata: Each blob-item stores a hash of the encrypted content to protect against corruption in underlying Azure storage.

- Data integrity job: Every 14 days, each site is scanned for integrity by listing items in the database and matching those up with listed blobs in Azure storage. The job reports any blob-references missing storage-blobs and can retrieve those blobs through the Azure storage soft-delete feature if needed.
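The data integrity job in the last bullet amounts to a reconciliation between two listings. The following sketch models it with plain dictionaries; `integrity_scan` and its arguments are hypothetical stand-ins for the database listing, the live blob listing, and the soft-deleted blobs, and the real job's behavior may differ.

```python
def integrity_scan(db_refs, live_blobs, soft_deleted):
    """Compare blob references in the database with blobs present in storage.

    db_refs:      iterable of blob IDs referenced by the metadata database
    live_blobs:   dict of blob ID -> content currently in storage
    soft_deleted: dict of blob ID -> content still inside the soft-delete window

    Missing blobs found in soft_deleted are restored into live_blobs.
    """
    missing = set(db_refs) - set(live_blobs)
    recovered = {blob_id for blob_id in missing if blob_id in soft_deleted}
    for blob_id in recovered:
        live_blobs[blob_id] = soft_deleted.pop(blob_id)
    return {
        "missing": sorted(missing),
        "recovered": sorted(recovered),
        "unrecoverable": sorted(missing - recovered),
    }
```

A reference whose blob is neither live nor soft-deleted ends up in `unrecoverable`, which is the case the report exists to surface.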