Note

Access to this page requires authorization. You can try signing in or changing directories.

Access to this page requires authorization. You can try changing directories.

Note

Apache Airflow job is powered by Apache Airflow.

In today's data-driven world, maintaining up-to-date and accurate data models is crucial for informed business decisions. As data evolves, it's essential to refresh these models regularly to ensure that reports and dashboards reflect the most current information. Manual refreshes can be time-consuming and prone to errors, which is where Apache Airflow's orchestration, scheduling, and monitoring capabilities come into play. By leveraging Airflow, organizations can automate the refresh process of Power BI semantic models, ensuring timely and accurate data updates with minimal manual intervention.

This article talks about the integration of Apache Airflow with Power BI to automate semantic model refreshes using Data Workflows. It provides a step-by-step guide to setting up the environment, configuring connections, and creating workflows to seamlessly update Power BI semantic models.

Prerequisites

To get started, you must complete the following prerequisites:

Your tenant-level admin must enable "Service principals can call Fabric public APIs":

- Go to the Admin Portal of Microsoft Fabric and navigate to Tenant Settings.

- Under Developer Settings, expand the "Service principals can call Fabric public APIs" section.

- Toggle the "Enabled" button and choose either "The entire organization" or "Specific security groups."

- Select Apply.

Create the Service Principal. You need to add your service principal as the Contributor in your Power BI workspace.

Add Apache Airflow requirement

Navigate to "Settings" and select "Environment Configuration".

Under "Apache Airflow Requirements", include "airflow-powerbi-plugin".

Select "Apply," to save the changes.

Create an Apache Airflow connection to connect with Power BI workspace

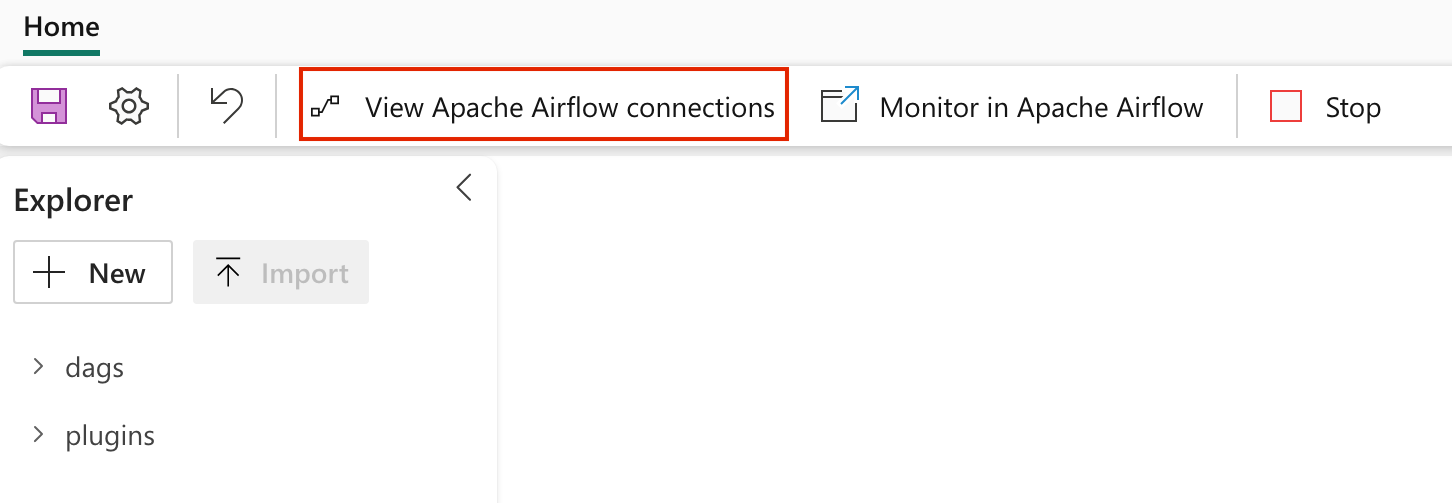

Select on the "View Airflow connections" to see a list of all the connections are configured.

Add the new connection. You may use

Genericconnection type. Store the following fields:- Connection ID: The Connection ID.

- Connection Type:Generic

- Login:The Client ID of your service principal.

- Password:The Client secret of your service principal.

- Extra:{"tenantId": The Tenant ID of your service principal.}

Select Save.

Create Apache Airflow DAG

Start by selecting the "New DAG File" card. Then, assign a name to the file and select the "Create".

Once created, you're presented with a boilerplate DAG code. Edit the file to include the sample DAG. This DAG triggers the Power BI semantic model refresh synchronously. Update the

dataset_idandworkspace_idargument with the Power BI semantic model ID and workspace ID respectively.

from datetime import datetime

# The DAG object

from airflow import DAG

from airflow.operators.bash import BashOperator

from airflow_powerbi_plugin.operators.powerbi import PowerBIDatasetRefreshOperator

with DAG(

dag_id='refresh_dataset_powerbi',

schedule_interval=None,

start_date=datetime(2023, 8, 7),

catchup=False,

concurrency=20,

) as dag:

# [START howto_operator_powerbi_refresh_dataset]

dataset_refresh = PowerBIDatasetRefreshOperator(

powerbi_conn_id= "powerbi_default",

task_id="sync_dataset_refresh",

dataset_id="<dataset_id>",

group_id="<workspace_id>",

)

# [END howto_operator_powerbi_refresh_dataset]

dataset_refresh

Create a plugin file for Power BI (Optional)

If you wish to enable an external monitoring link to Power BI from the Airflow UI, follow these steps:

Create a new file under the 'plugins' folder.

Paste the contents provided below into the file.

from airflow.plugins_manager import AirflowPlugin

from airflow_powerbi_plugin.hooks.powerbi import PowerBIHook

from airflow_powerbi_plugin.operators.powerbi import PowerBILink

# Defining the plugin class

class AirflowExtraLinkPlugin(AirflowPlugin):

"""

PowerBI plugin.

"""

name = "powerbi_plugin"

operator_extra_links = [

PowerBILink(),

]

hooks= [

PowerBIHook,

]

- Upon completion, you will observe the DAG loaded with the external monitoring link to the Power BI semantic model refresh.

Monitor the data workflow DAG and run it from Apache Airflow UI

After you click on save, files are automatically loaded into the Apache Airflow UI. To monitor them, select on the "Monitor in Apache Airflow" button.