Data pipelines storage event triggers in Data Factory (Preview)

Introduction

A common use case for invoking Data Factory data pipelines is to trigger a pipeline on file events such as file arrival and file deletion. Customers moving from Azure Data Factory (ADF) to Microsoft Fabric commonly use ADLS/Blob storage events. New Fabric users who aren't familiar with ADF are more likely to use file events from OneLake. Triggers in Fabric Data Factory are built on Fabric platform capabilities, including eventstreams and Reflex triggers. You can create a Reflex trigger for your pipeline from the Trigger button on the Fabric Data Factory pipeline design canvas, or directly from the Fabric Activator experience.

Note

Storage event triggers are currently in public preview.

How to set storage event triggers on a pipeline

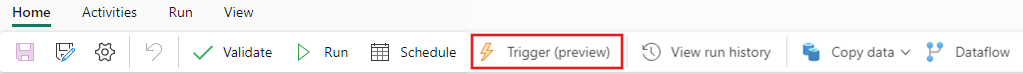

Select the Trigger (preview) button on the Home ribbon at the top of the pipeline canvas editor window.

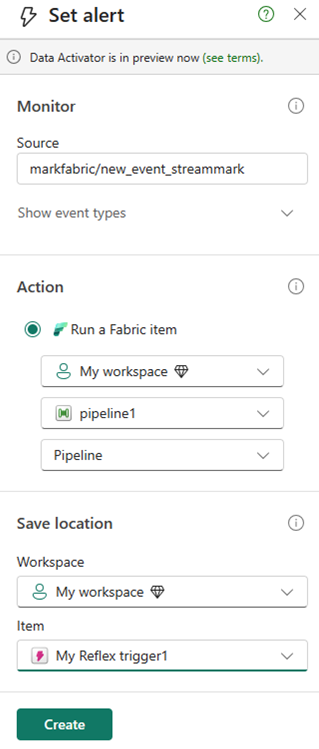

The Set alert panel opens where you can select Azure Blob storage source events.

Select Source, and then select Select events to choose the storage events that the trigger listens for.

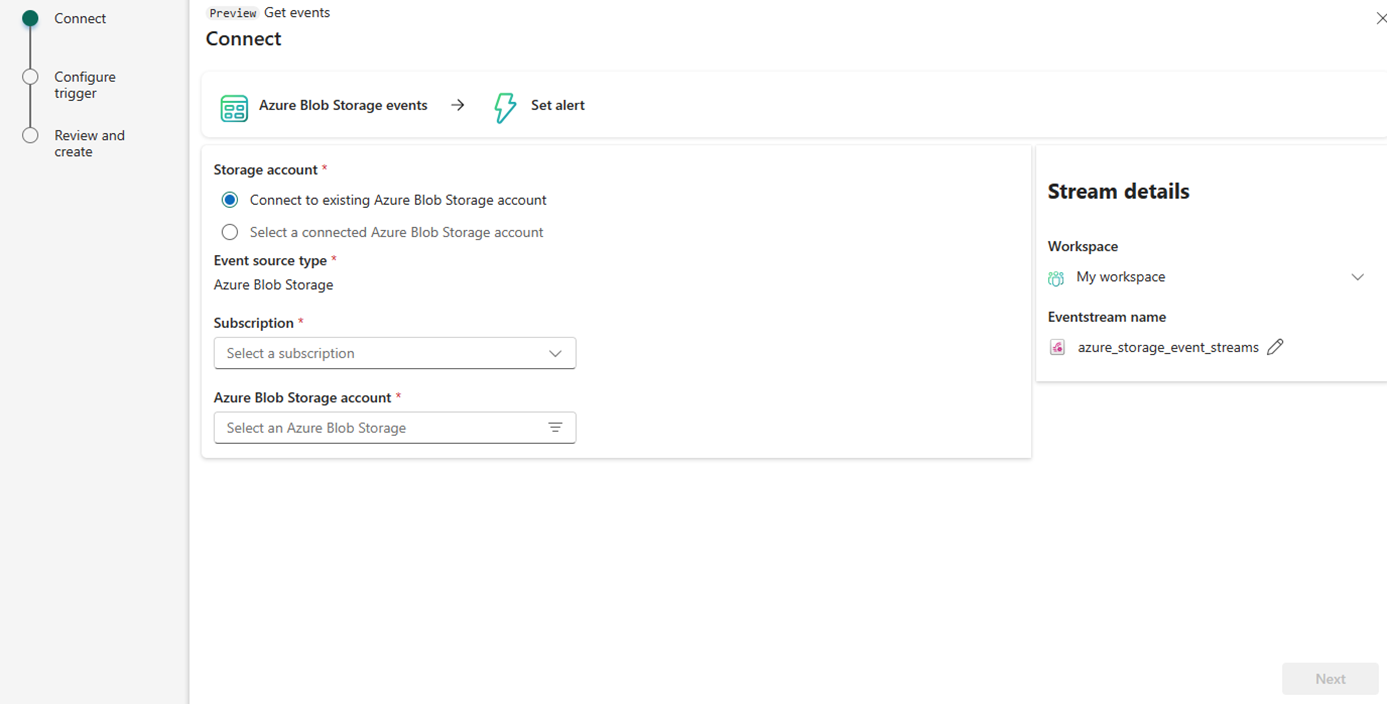

Choose your Azure subscription and Blob Storage account.

A new eventstream object is created in your Fabric workspace, so be sure to select the correct workspace under Stream details.

Select Next.

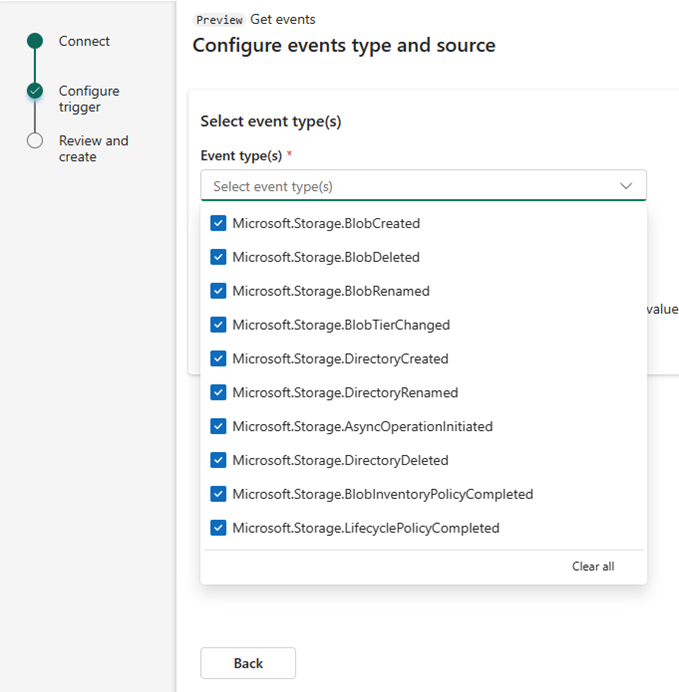

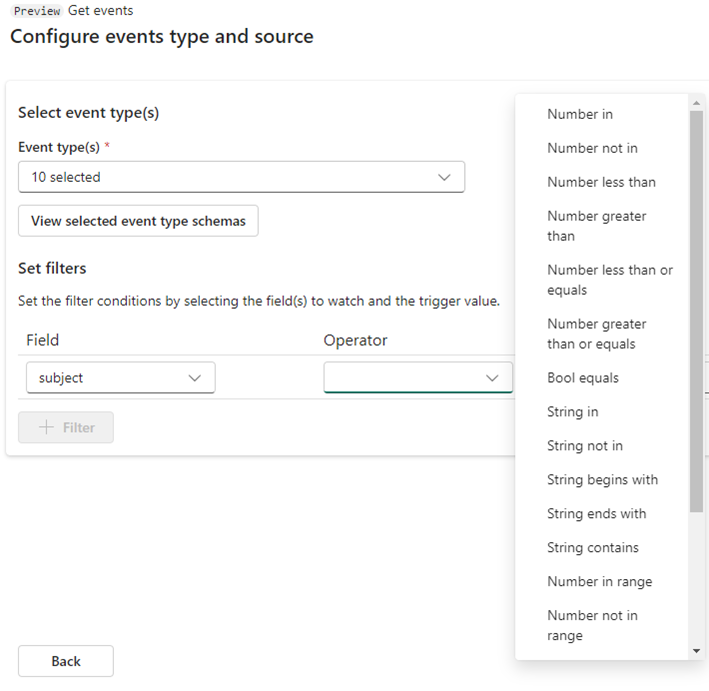

Choose the event types. Many more event types are available besides file created and file deleted.

You can use the Subject field to filter events to matching files and folders by specifying the folder name, file name, file type, and container.
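You configure these filters in the Fabric UI, not in code. As a rough mental model only, the following Python sketch shows how a selected event type combined with a Subject begins-with/ends-with check could decide whether an event reaches the trigger. The `matches_filter` function, its prefix/suffix semantics, and the `landing` folder are hypothetical illustrations, not the Fabric implementation; the container name and blob path shape mirror the example Subject value used in this article.

```python
from typing import Iterable


def matches_filter(
    event: dict,
    event_types: Iterable[str],
    subject_begins_with: str = "",
    subject_ends_with: str = "",
) -> bool:
    """Hypothetical filter: True if the event's type is one of the selected
    types and its Subject starts and ends with the given strings."""
    if event.get("type") not in set(event_types):
        return False
    subject = event.get("subject", "")
    return subject.startswith(subject_begins_with) and subject.endswith(subject_ends_with)


# Listen only for new .csv files in a hypothetical "landing" folder
# of the container "my-file-system".
selected_types = ["Microsoft.Storage.BlobCreated"]
prefix = "/blobServices/default/containers/my-file-system/blobs/landing/"

event = {
    "type": "Microsoft.Storage.BlobCreated",
    "subject": "/blobServices/default/containers/my-file-system/blobs/landing/sales.csv",
}
print(matches_filter(event, selected_types, prefix, ".csv"))  # True
```

A deleted file in the same folder, or a new .csv file in a different folder, would return False under these assumed rules, because either the event type or the Subject prefix wouldn't match.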

An event has the following top-level data:

| Property | Type | Description | Example |
|---|---|---|---|
| source | string | Full resource path to the event source. This field isn't writeable. Event Grid provides this value. | /subscriptions/{subscription-id}/resourceGroups/Storage/providers/Microsoft.Storage/storageAccounts/my-storage-account |
| subject | string | Publisher-defined path to the event subject. | /blobServices/default/containers/my-file-system/blobs/new-file.txt |
| type | string | One of the registered event types for this event source. | Microsoft.Storage.BlobCreated |
| time | string | The time the event is generated based on the provider's UTC time. | 2017-06-26T18:41:00.9584103Z |
| id | string | Unique identifier for the event. | 00000000-0000-0000-0000-000000000000 |
| data | object | Blob storage event data. | {{Data object}} |
| specversion | string | CloudEvents schema specification version. | 1.0 |
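To make the schema concrete, the following Python sketch assembles the example values from the table into a single event, serializes it as JSON, and checks that every top-level property is present. The `data` payload is left as an empty placeholder because its contents aren't described here, and `REQUIRED` is a hypothetical name; treat this as an illustrative shape, not a captured event.

```python
import json

# Example values from the table; "data" is an empty placeholder object.
raw = json.dumps({
    "source": "/subscriptions/{subscription-id}/resourceGroups/Storage/providers/Microsoft.Storage/storageAccounts/my-storage-account",
    "subject": "/blobServices/default/containers/my-file-system/blobs/new-file.txt",
    "type": "Microsoft.Storage.BlobCreated",
    "time": "2017-06-26T18:41:00.9584103Z",
    "id": "00000000-0000-0000-0000-000000000000",
    "data": {},
    "specversion": "1.0",
})

# Top-level properties listed in the table.
REQUIRED = ("source", "subject", "type", "time", "id", "data", "specversion")

event = json.loads(raw)
missing = [name for name in REQUIRED if name not in event]
print(missing)        # []
print(event["type"])  # Microsoft.Storage.BlobCreated
```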

Note

The file name and folder name are part of the Subject field.

Back on the trigger configuration panel, choose the workspace in which to store the trigger items, the pipeline name, and the pipeline action. Then enter a name for the trigger under Item name; the trigger is saved as a Reflex item with that name.

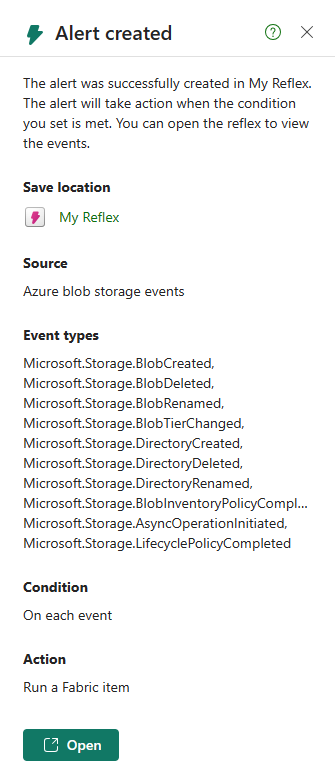

Select Create to create the trigger. The trigger is now active on your pipeline and reacts to the storage events that you defined for it.

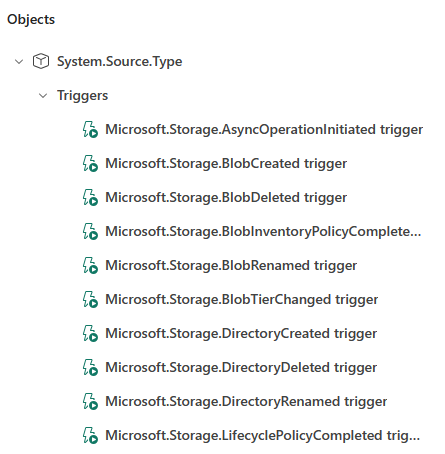

To view the trigger, navigate to your workspace list view and find the Reflex object by name from your Fabric browser.

The type of the object is Reflex.

Select the trigger to open the Reflex object for viewing and editing.

Setting expression values with the trigger file name and folder name

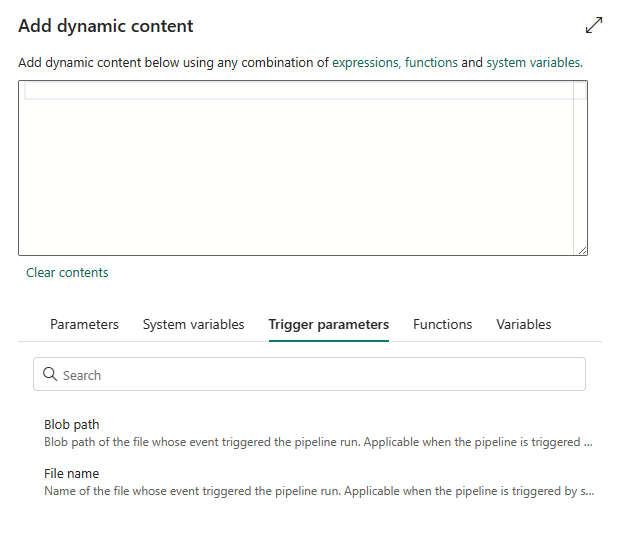

Inside your pipeline, you can use the file name and folder path from the storage event through built-in trigger parameters. Data Factory sets these parameters when the storage event activates the trigger. Select the Trigger parameters tab in the expression builder inside your pipeline; Data Factory automatically parses the file name and folder names for you, so you can add them dynamically to your pipeline expressions.

These built-in parameters are set from the Subject and Topic fields of the arriving file event and are automatically created for you to use in your pipeline logic.
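Data Factory does this parsing for you. The following Python sketch only approximates how a file name and folder could be derived from a Blob storage Subject, assuming the path shape shown in the event schema (/blobServices/default/containers/<container>/blobs/<path>). The `parse_subject` function and `BlobLocation` type are hypothetical, and the exact folder path format that Data Factory exposes might differ, for example in whether it includes the container name.

```python
from typing import NamedTuple


class BlobLocation(NamedTuple):
    container: str
    folder: str     # path inside the container, no trailing slash; "" at the root
    file_name: str


def parse_subject(subject: str) -> BlobLocation:
    """Split a Blob storage event Subject into container, folder, and file name."""
    # Expected parts: ['', 'blobServices', 'default', 'containers', <container>, 'blobs', <blob path>]
    parts = subject.split("/", 6)
    if len(parts) != 7 or parts[3] != "containers" or parts[5] != "blobs":
        raise ValueError(f"unexpected Subject format: {subject!r}")
    container, blob_path = parts[4], parts[6]
    # Everything before the last "/" is the folder; the rest is the file name.
    folder, _, file_name = blob_path.rpartition("/")
    return BlobLocation(container, folder, file_name)


print(parse_subject("/blobServices/default/containers/my-file-system/blobs/new-file.txt"))
# BlobLocation(container='my-file-system', folder='', file_name='new-file.txt')
print(parse_subject("/blobServices/default/containers/my-file-system/blobs/landing/2024/sales.csv"))
# BlobLocation(container='my-file-system', folder='landing/2024', file_name='sales.csv')
```

Splitting with a maximum of six separators keeps nested folder names intact in the last element, so only the final "/" separates the folder from the file name.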

@pipeline()?.TriggerEvent?.FileName

You might notice the ? after the pipeline() object reference. The pipeline expression language uses this syntax to handle NULL values. You need it when you test a pipeline that uses the trigger parameters, because the file and folder name parameters aren't set during a manual test run and return NULL. When a file event triggers the pipeline, those fields contain the file name and folder name.
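As an analogy only, the following Python sketch mimics the null-safe lookup that @pipeline()?.TriggerEvent?.FileName performs: each step returns None as soon as the previous level is missing. The `safe_get` helper and the two run dictionaries are hypothetical stand-ins for a manual test run and a storage-triggered run; this isn't how Data Factory evaluates expressions internally.

```python
from typing import Any


def safe_get(obj: Any, *keys: str) -> Any:
    """Follow dictionary keys one at a time, returning None as soon as a
    level is missing, similar to chaining ?. in a pipeline expression."""
    for key in keys:
        if obj is None:
            return None
        obj = obj.get(key)
    return obj


# Hypothetical run contexts: a manual test run has no TriggerEvent.
manual_run = {"RunId": "manual-123"}
triggered_run = {
    "RunId": "evt-456",
    "TriggerEvent": {"FileName": "new-file.txt"},
}

print(safe_get(manual_run, "TriggerEvent", "FileName"))     # None
print(safe_get(triggered_run, "TriggerEvent", "FileName"))  # new-file.txt
```

Without the None checks, the plain lookup manual_run["TriggerEvent"]["FileName"] raises a KeyError, which is loosely analogous to why the trigger parameter expression needs the ? syntax during manual testing.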