Note

Access to this page requires authorization. You can try signing in or changing directories.

Access to this page requires authorization. You can try changing directories.

The Spark Job Definition activity in Data Factory for Microsoft Fabric allows you to create connections to your Spark Job Definitions and run them from a data pipeline.

Prerequisites

To get started, you must complete the following prerequisites:

- A tenant account with an active subscription. Create an account for free.

- A workspace is created.

Add a Spark Job Definition activity to a pipeline with UI

Create a new data pipeline in your workspace.

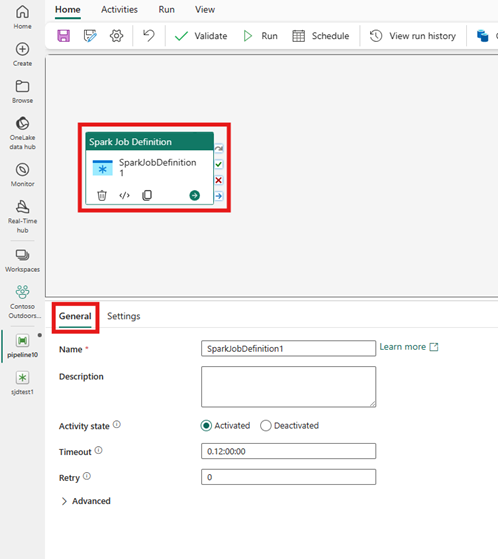

Search for Spark Job Definition from the home screen card and select it or select the activity from the Activities bar to add it to the pipeline canvas.

Select the new Spark Job Definition activity on the pipeline editor canvas if it isn't already selected.

Refer to the General settings guidance to configure the options found in the General settings tab.

Spark Job Definition activity settings

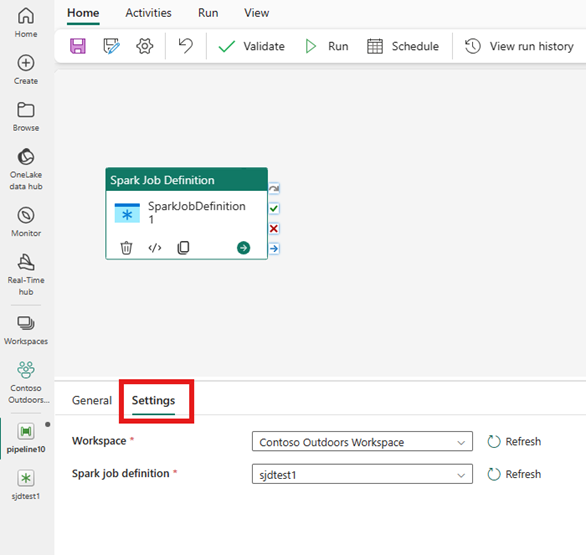

Select the Settings tab in the activity properties pane, then select the Fabric Workspace that contains the Spark Job Definition you would like to run.

In the Settings tab, you can configure your connection, workspace, and Spark job definition. If no Spark iob definition exists yet, you can create a new Spark job definition from your pipeline editor by selecting the +New button next to Spark job definition.

After you set a name and select create, you will be taken to your Spark job definition to set your configurations.

Within the Settings tab, you can configure more settings under Advanced settings.

You can also parameterize these setting fields to orchestrate your Spark job definition item. The values passed will override your Spark job definition original configurations.

Known limitations

Current limitations in the Spark Job Definition activity for Fabric Data Factory are listed here. This section is subject to change.

- Although we support monitoring the activity via the output tab, you aren't able to monitor the Spark Job Definition at a more granular level yet. For example, links to the monitoring page, status, duration, and previous Spark Job Definition runs aren't available directly in the Data Factory. However, you can see more granular details in the Spark Job Definition monitoring page.

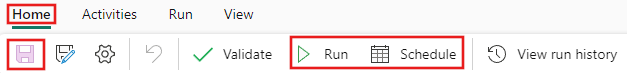

Save and run or schedule the pipeline

After you configure any other activities required for your pipeline, switch to the Home tab at the top of the pipeline editor, and select the save button to save your pipeline. Select Run to run it directly, or Schedule to schedule it. You can also view the run history here or configure other settings.