Ingest, filter, and transform real-time events and send them to a Microsoft Fabric lakehouse

This tutorial shows you how to use the Microsoft Fabric event streams feature to ingest, filter, and transform real-time events and send them in Delta Lake format from your Azure event hub to a lakehouse. You also learn how to build a Power BI report to visualize business insights in your events data.

In this tutorial, you learn how to:

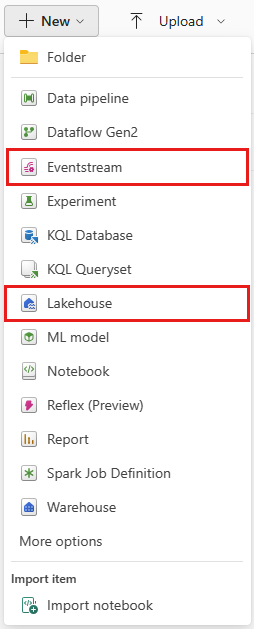

- Create Eventstream and Lakehouse items in Microsoft Fabric

- Add an Azure Event Hubs source to the eventstream

- Create an event hub cloud connection

- Add a Lakehouse destination to the eventstream

- Define real-time event processing logic with the event processor

- Verify the data in the lakehouse

- Build a Power BI report with the event data ingested in the lakehouse

Prerequisites

Before you start, you must have:

- Access to a premium workspace with Contributor or higher permissions where your Eventstream and Lakehouse items are located.

- An Azure event hub that contains event data, and permission to access its policy keys. The event hub must be publicly accessible and must not be behind a firewall or secured in a virtual network. To create an event hub, see Quickstart: Create an event hub using Azure portal.
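If your event hub doesn't contain event data yet, a short script can populate it. The following Python code is a minimal sketch, not part of the tutorial's required steps. The field names (sensor, temperature, humidity, WindSpeed, zone1_power) and the value ranges are hypothetical and only loosely modeled on the columns used later in this article. The send_events function assumes that the azure-eventhub package is installed and that you have a connection string for a policy with Send permission.

```python
import json
import random
from datetime import datetime, timezone


def make_event(sensor_id: str) -> dict:
    """Build one sample reading. Every field name here is a hypothetical example."""
    return {
        "sensor": sensor_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "temperature": round(random.uniform(5.0, 35.0), 2),
        "humidity": round(random.uniform(20.0, 90.0), 2),
        "WindSpeed": round(random.uniform(0.0, 10.0), 2),
        "zone1_power": round(random.uniform(20000.0, 40000.0), 1),
    }


def send_events(connection_string: str, eventhub_name: str, events: list) -> None:
    """Send the events as one batch of JSON messages.

    Requires `pip install azure-eventhub` and a policy with Send permission.
    """
    # Imported lazily so that make_event works without the SDK installed.
    from azure.eventhub import EventData, EventHubProducerClient

    producer = EventHubProducerClient.from_connection_string(
        connection_string, eventhub_name=eventhub_name
    )
    with producer:
        batch = producer.create_batch()
        for event in events:
            batch.add(EventData(json.dumps(event)))
        producer.send_batch(batch)


# Generate a few sample events locally and inspect the first one.
sample = [make_event(f"sensor-{i}") for i in range(3)]
print(json.dumps(sample[0], indent=2))
```

To send the sample, call send_events with your own connection string and event hub name. Because the events are serialized as JSON, you would select JSON as the data format when you add the source later in this tutorial.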

Create a lakehouse and an eventstream

You can create an eventstream and a lakehouse from the Workspace page or the Create hub page. Follow these steps to create a lakehouse, and then again to create an eventstream:

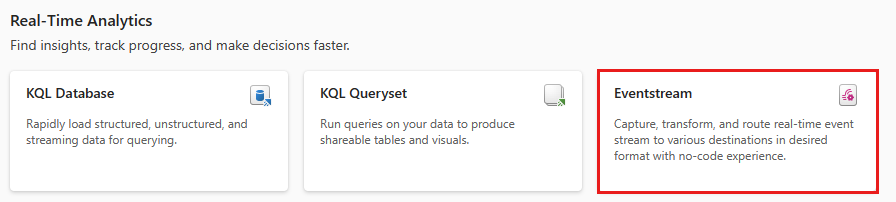

Change your Fabric experience to Real-Time Intelligence and select Lakehouse or Eventstream to create these items in your workspace or the Create hub. (For best results, create the Lakehouse item first and the Eventstream item second.)

Enter the name for the new eventstream or lakehouse and select Create. For the examples in this article, we use citypwr-es for the eventstream and citypwrdata for the lakehouse.

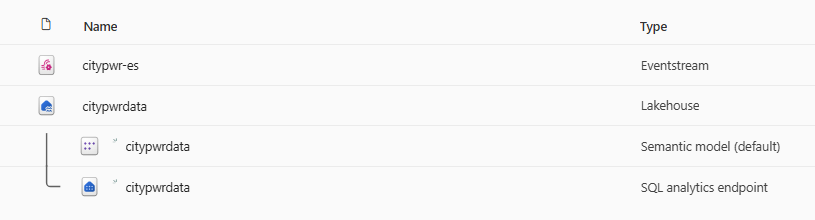

After you create both your new eventstream and lakehouse successfully, these items appear in your workspace:

- citypwr-es: an Eventstream item

- citypwrdata: a Lakehouse item, a semantic model (default) item, and a SQL analytics endpoint item

Add an Azure Event Hubs source to the eventstream

After you create the lakehouse and eventstream, follow these steps to add an Azure event hub as your eventstream source.

Select New source on the ribbon or "+" in the main editor canvas and then select Azure Event Hubs.

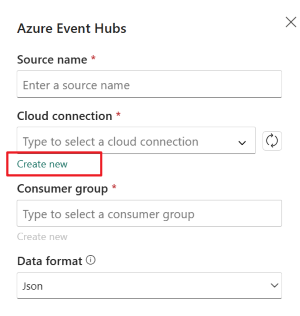

Enter a source name for the new source and select Create new connection to your Azure event hub.

Fill in the information about your Azure event hub on the New connection page.

Event Hub namespace: Enter the name of your Azure event hub namespace.

Event Hub: Enter the name of your Azure event hub in the Azure portal.

Connection name: Enter a name for the cloud connection.

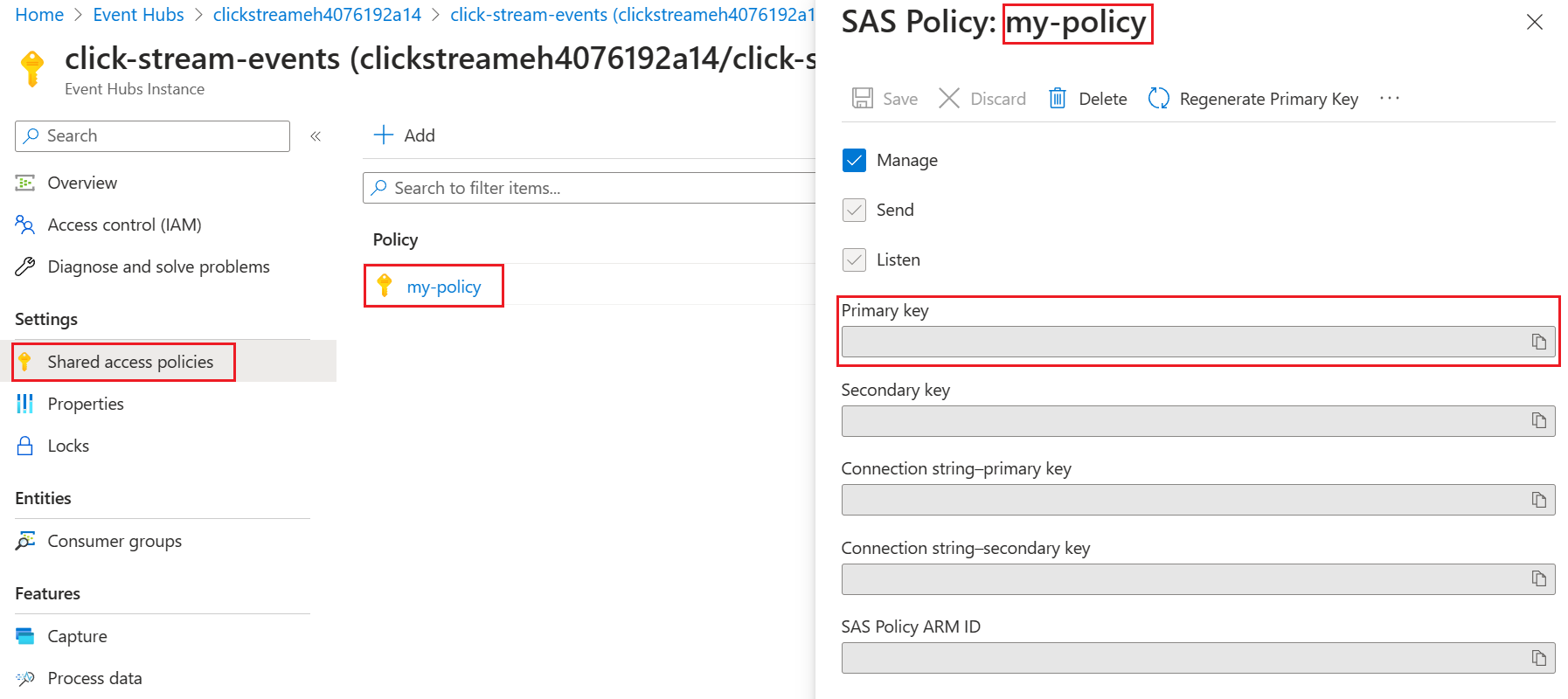

Shared access key name and Shared access key: Go to your Azure event hub and create a policy with Manage or Listen permission under Shared access policies. Then use the policy name and primary key as the Shared access key name and Shared access key.

Select a Consumer group to read the event data from your Azure event hub, and then select Add.

Select a Data format of the incoming real-time events that you want to get from your Azure event hub.

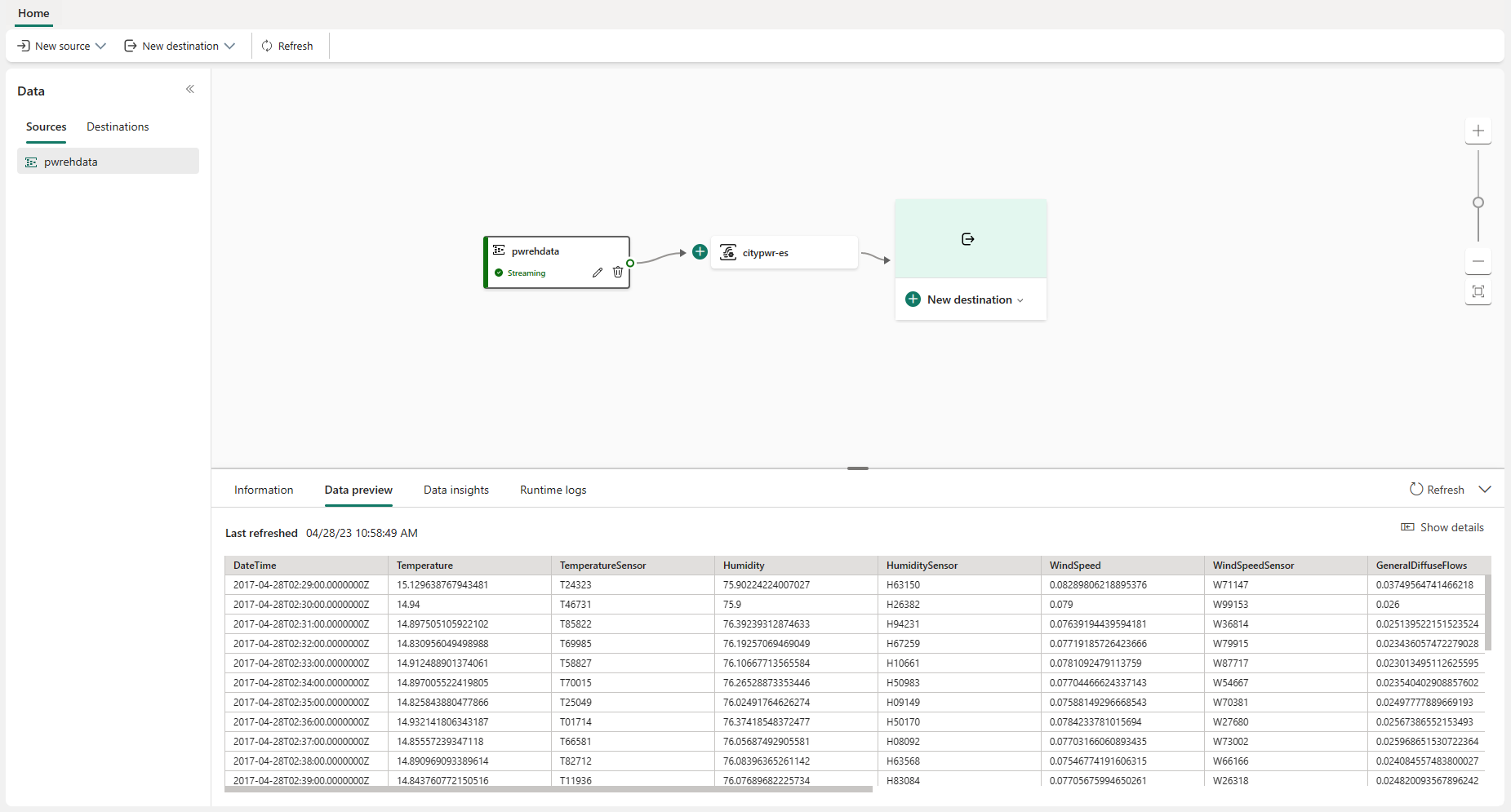

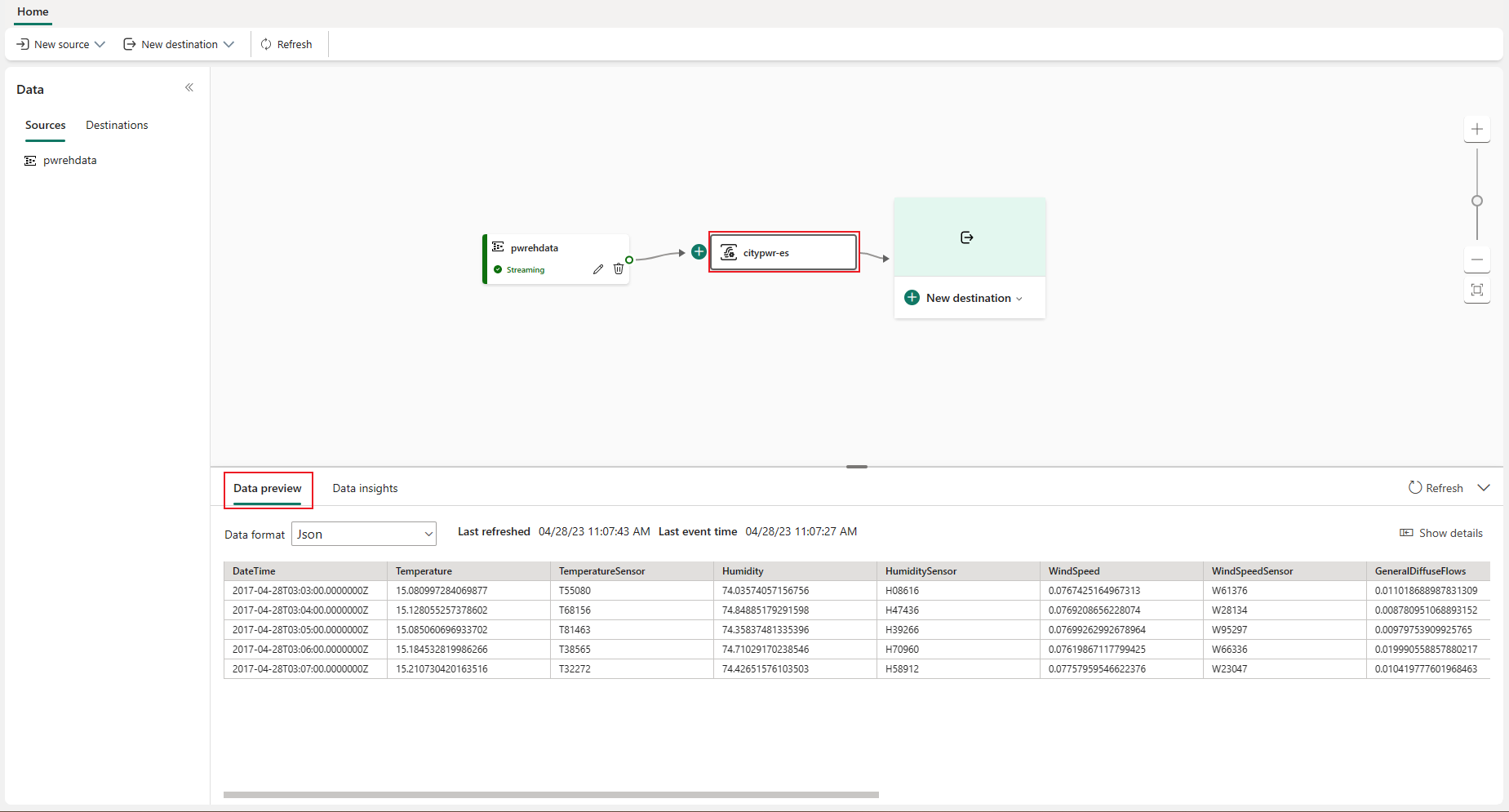

The new source node appears in the canvas after the event hub source is created successfully. Select the event hub node in the canvas, and then select the Data preview tab in the bottom pane to view the data inside the event hub.

Select the eventstream node in the canvas, and then select the Data preview tab in the bottom pane to view the data inside the eventstream.

To find data insight details, select the Data insights tab for the event hub source node and the eventstream node.
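The values entered on the New connection page earlier in this section correspond to the parts of a standard Event Hubs connection string. If you copied a connection string from the Azure portal, the following Python sketch splits it into those fields. The function name and the sample values (contoso-ns, fabric-listen, citypwr-hub, and the key) are hypothetical. EntityPath is present only when the string comes from a policy defined on the event hub itself rather than on the namespace.

```python
def parse_event_hub_connection_string(connection_string: str) -> dict:
    """Split a connection string into the fields the New connection page asks for."""
    parts = {}
    for segment in connection_string.strip().split(";"):
        if not segment:
            continue
        # Split on the first "=" only, because Base64 keys can end with "=".
        key, _, value = segment.partition("=")
        parts[key.strip()] = value.strip()

    # Endpoint looks like sb://<namespace>.servicebus.windows.net/
    host = parts.get("Endpoint", "").split("://", 1)[-1].rstrip("/")
    return {
        "Event Hub namespace": host.split(".", 1)[0],
        "Event Hub": parts.get("EntityPath", ""),
        "Shared access key name": parts.get("SharedAccessKeyName", ""),
        "Shared access key": parts.get("SharedAccessKey", ""),
    }


# Hypothetical example; the key below is not a real credential.
example = (
    "Endpoint=sb://contoso-ns.servicebus.windows.net/;"
    "SharedAccessKeyName=fabric-listen;"
    "SharedAccessKey=abc123+fake/key==;"
    "EntityPath=citypwr-hub"
)
fields = parse_event_hub_connection_string(example)
for name, value in fields.items():
    print(f"{name}: {value}")
```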

Add a lakehouse destination to the eventstream

After your eventstream ingests your event hub events, you can add a lakehouse destination to receive the events from your eventstream. Follow these steps to add a lakehouse destination.

Select New destination on the ribbon or "+" in the main editor canvas, and then select Lakehouse.

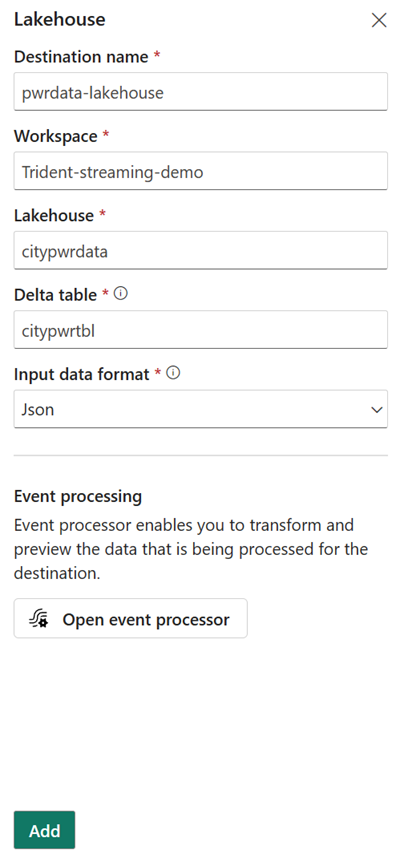

Enter a name for the eventstream destination and fill in the information about your lakehouse.

- Lakehouse: Select an existing lakehouse item from the workspace you specified. We selected the newly created lakehouse citypwrdata in our example.

- Delta table: Select an existing delta table or create a new one to receive data. For this example, we selected the new delta table citypwrtbl.

- Input data format: Select the format for your data.

If you don't want to process your events while ingesting them to your lakehouse, select Add to complete the configuration of your lakehouse destination. If you would like to process your events, skip to the next section.

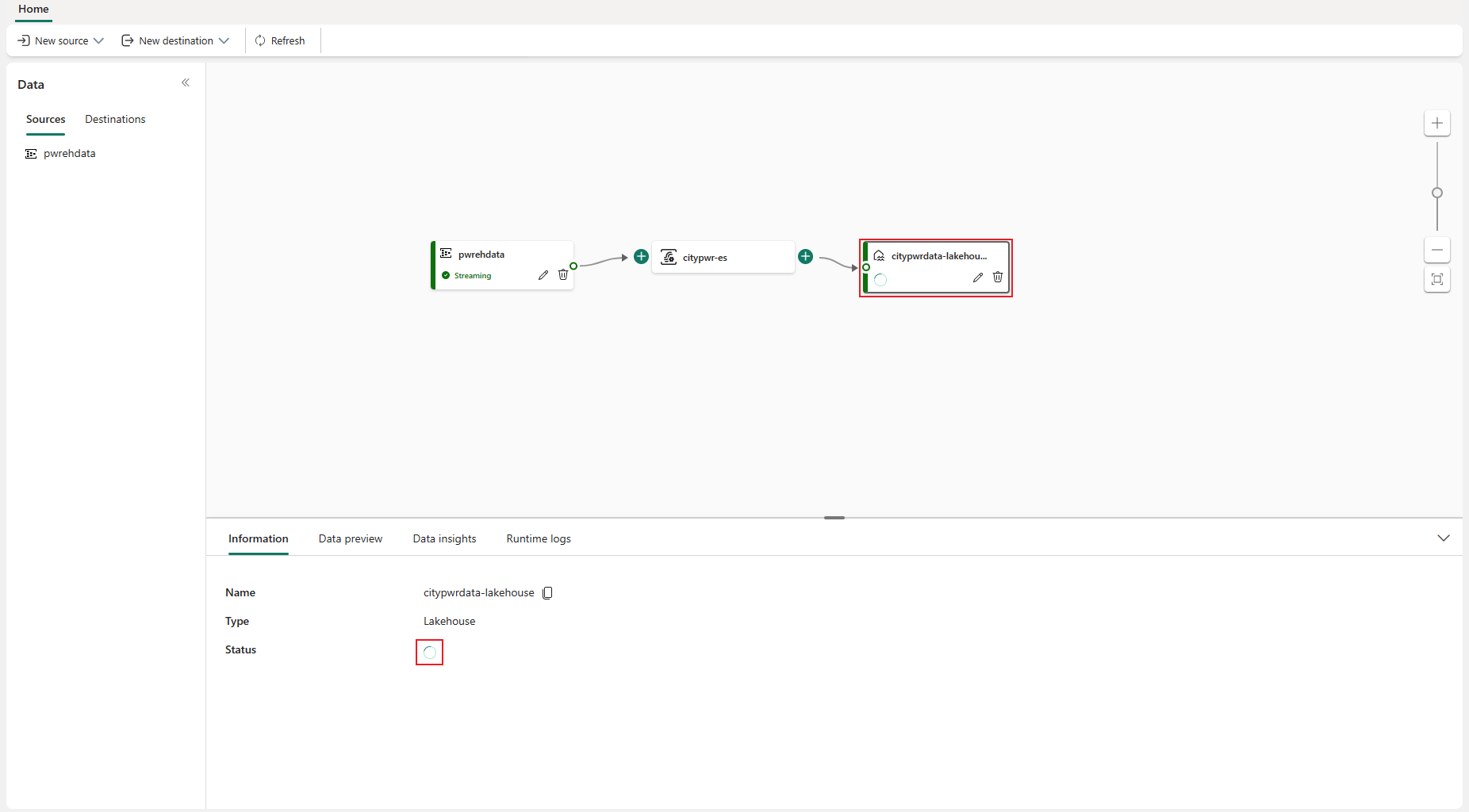

A Lakehouse destination node appears on the canvas with a spinning status indicator. The system takes a few minutes to change the status to Ingesting.

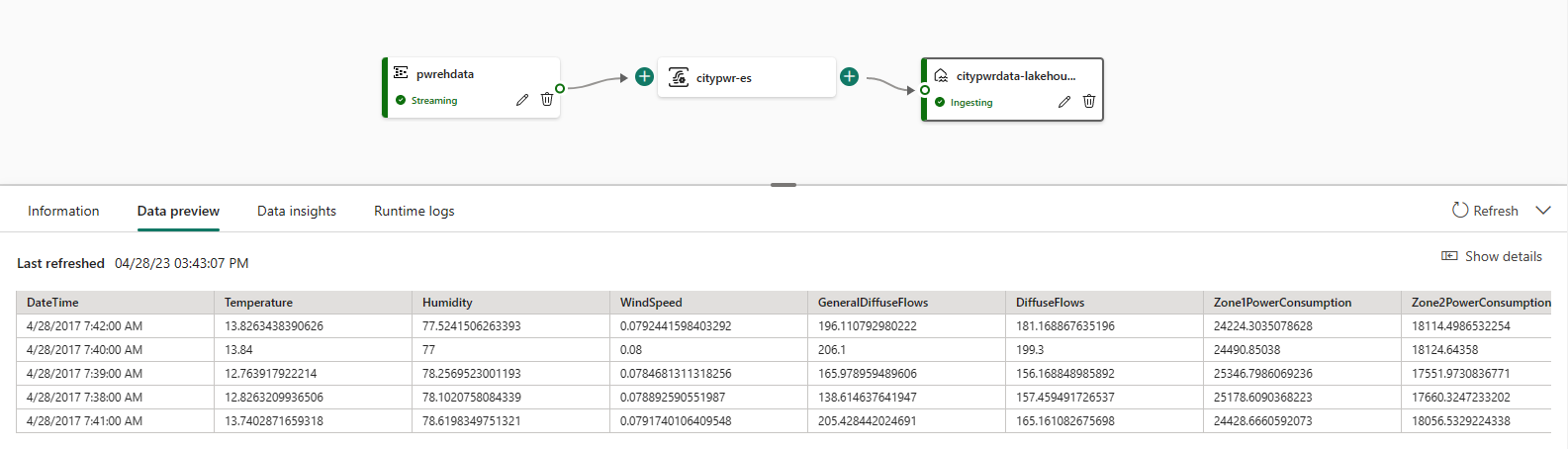

After the destination status changes to Ingesting, select the Data preview tab in the bottom pane to verify your event data is correctly ingested into the lakehouse.

Define real-time events processing logic with event processor

This section continues from the Lakehouse destination configuration that you started in the previous section. Here, you define event processing logic by using the event processor editor. In our example, we don't want to store the sensor ID data in the lakehouse, so we use the event processor to remove that column.

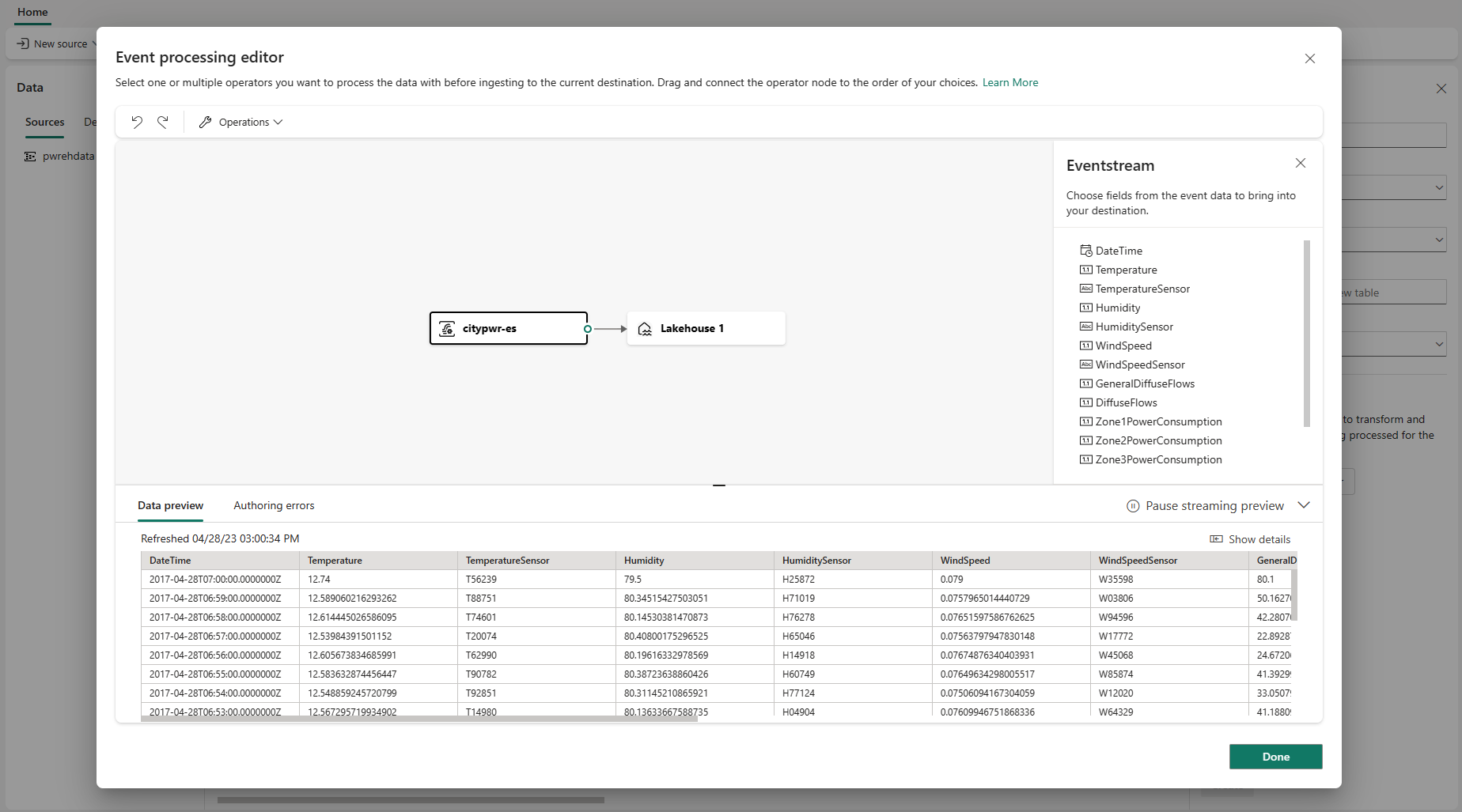

From the completed Lakehouse destination screen (don't select Add yet), select Open event processor. The Event processing editor screen opens.

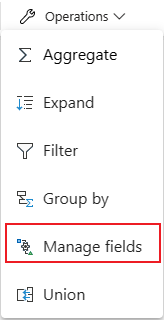

To add the event processing logic, select the Operations menu in the ribbon, and then select Manage fields.

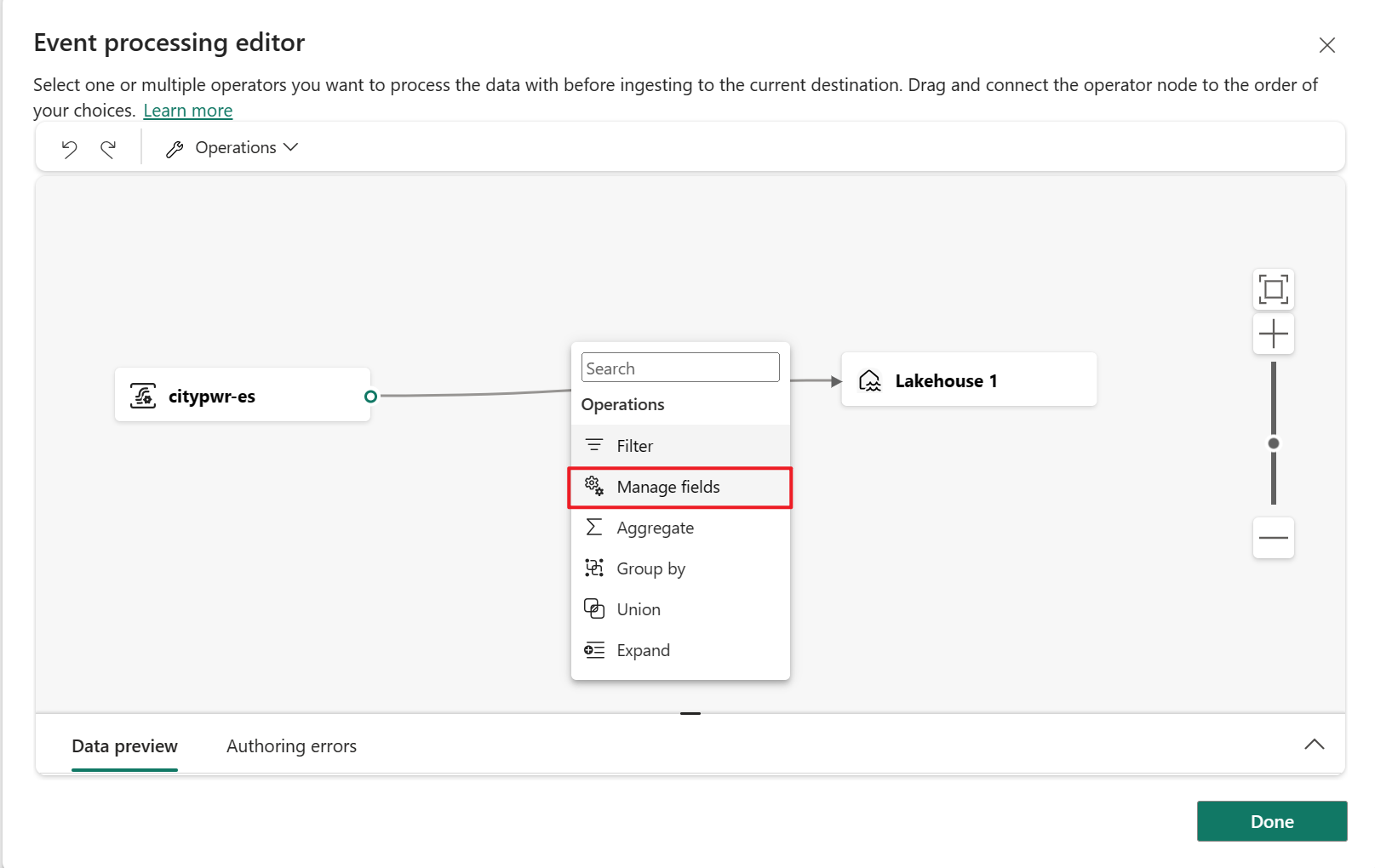

Hover over the connection line and then select the "+" button. A drop-down menu appears on the connection line, and you can insert the Manage fields operator between the two nodes that the line connects.

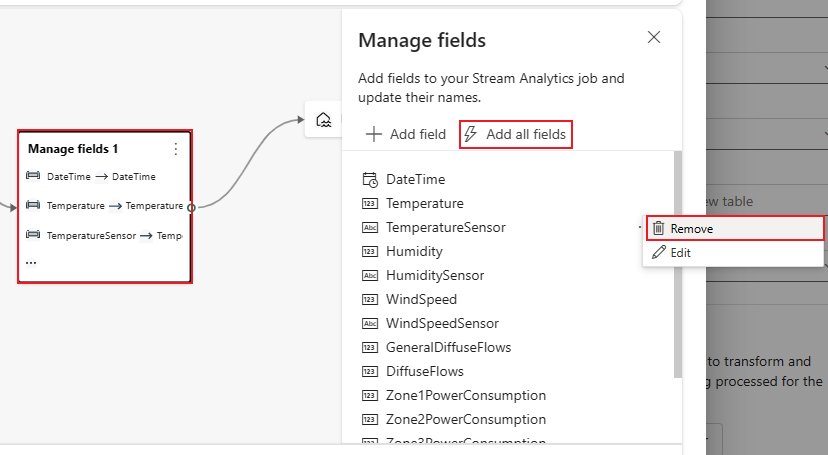

Select the Manage fields operator node. In the Manage fields configuration panel, select Add all fields. Then hover your mouse over the sensor column, select ... and Remove to remove the column.

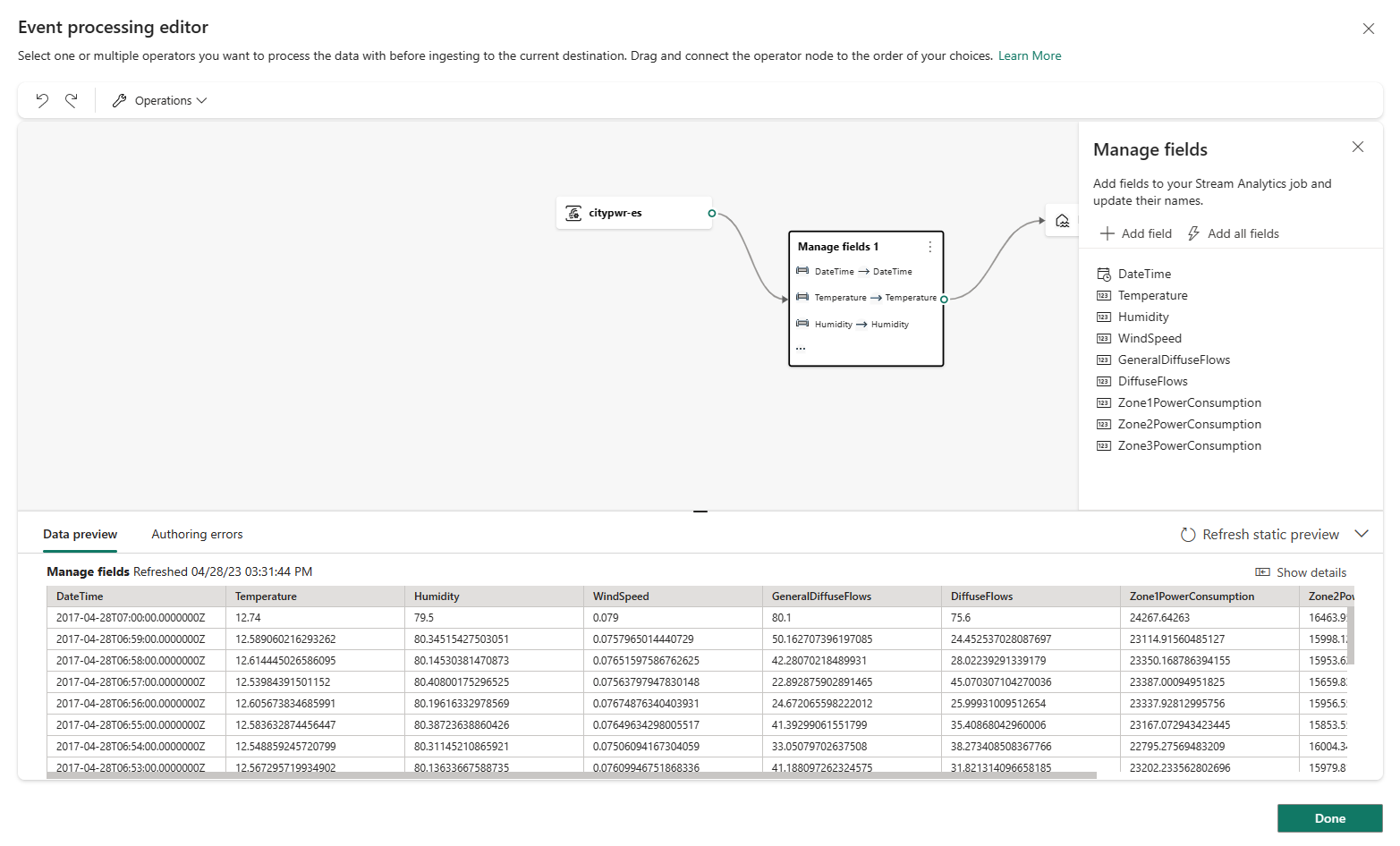

After you have configured the Manage fields operator, preview the data that this operator produces by clicking Refresh static preview.

Select Done to save the event processing logic and return to the Lakehouse destination configuration screen.

Select Add to complete the configuration of your lakehouse destination.

A Lakehouse destination node appears on the canvas with a spinning status indicator. The system takes a few minutes to change the status to Ingesting.

After the destination status changes to Ingesting, select the Data preview tab in the bottom pane to verify your event data is correctly ingested into the lakehouse.

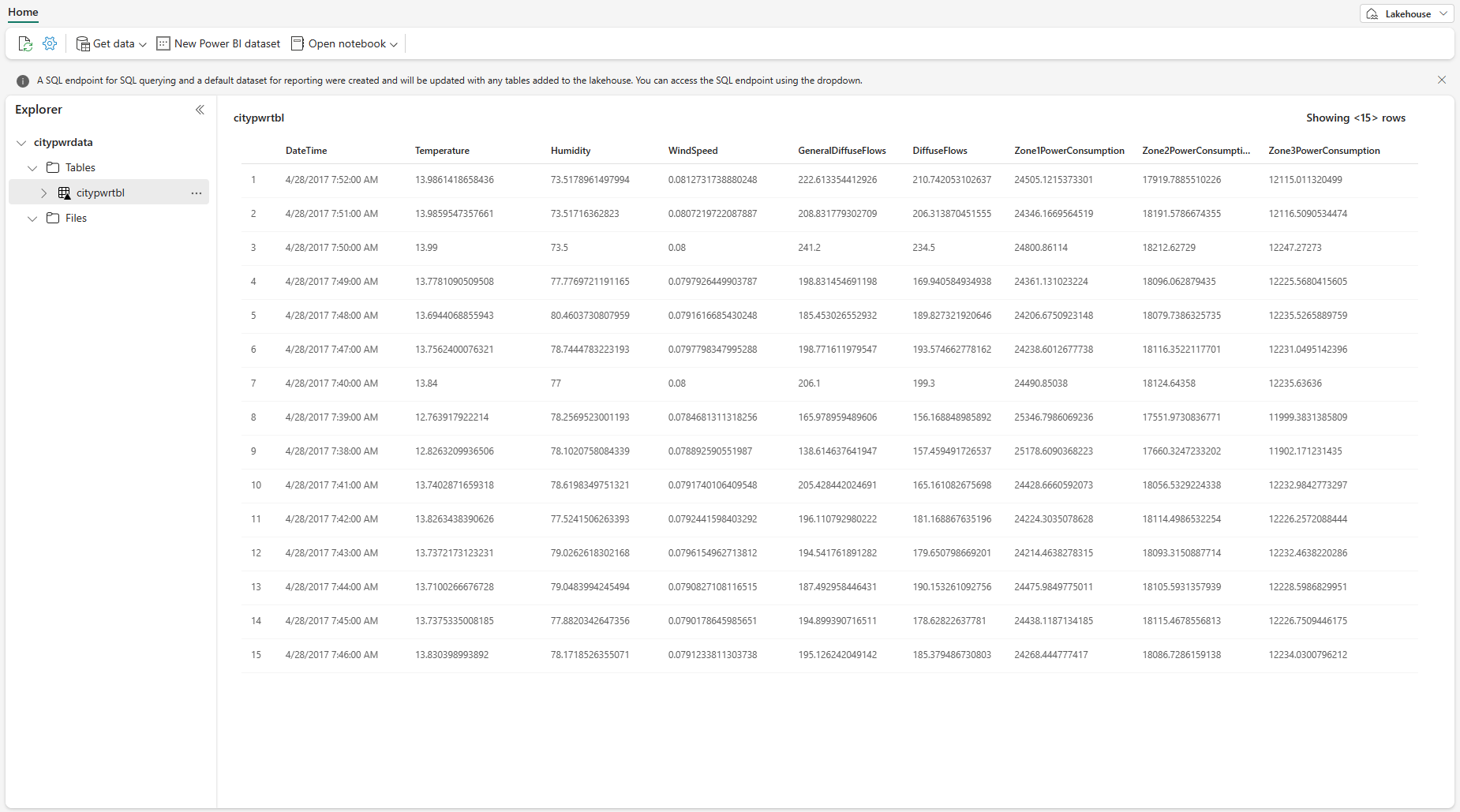
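To make the effect of the Manage fields step in this section concrete, the following Python sketch applies the same idea to a single event: keep every field except the ones marked for removal. This only illustrates the transformation and is not how the event processor is implemented. The field names other than sensor, and all the values, are hypothetical.

```python
def manage_fields(event: dict, remove: set) -> dict:
    """Return a copy of the event without the removed fields, keeping the rest in order."""
    return {name: value for name, value in event.items() if name not in remove}


# Hypothetical incoming event, before the Manage fields operator.
incoming = {
    "sensor": "sensor-17",
    "temperature": 21.4,
    "humidity": 63.0,
    "WindSpeed": 4.9,
    "zone1_power": 31250.5,
}

# Equivalent of "Add all fields", then "Remove" on the sensor column.
stored = manage_fields(incoming, remove={"sensor"})
print(stored)
```

The original event is left unchanged, and only the copy that continues to the destination loses the sensor field.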

Verify data in the lakehouse

To verify the event data in your new lakehouse, open the citypwrdata lakehouse from your workspace, then select the citypwrtbl table to view its data.

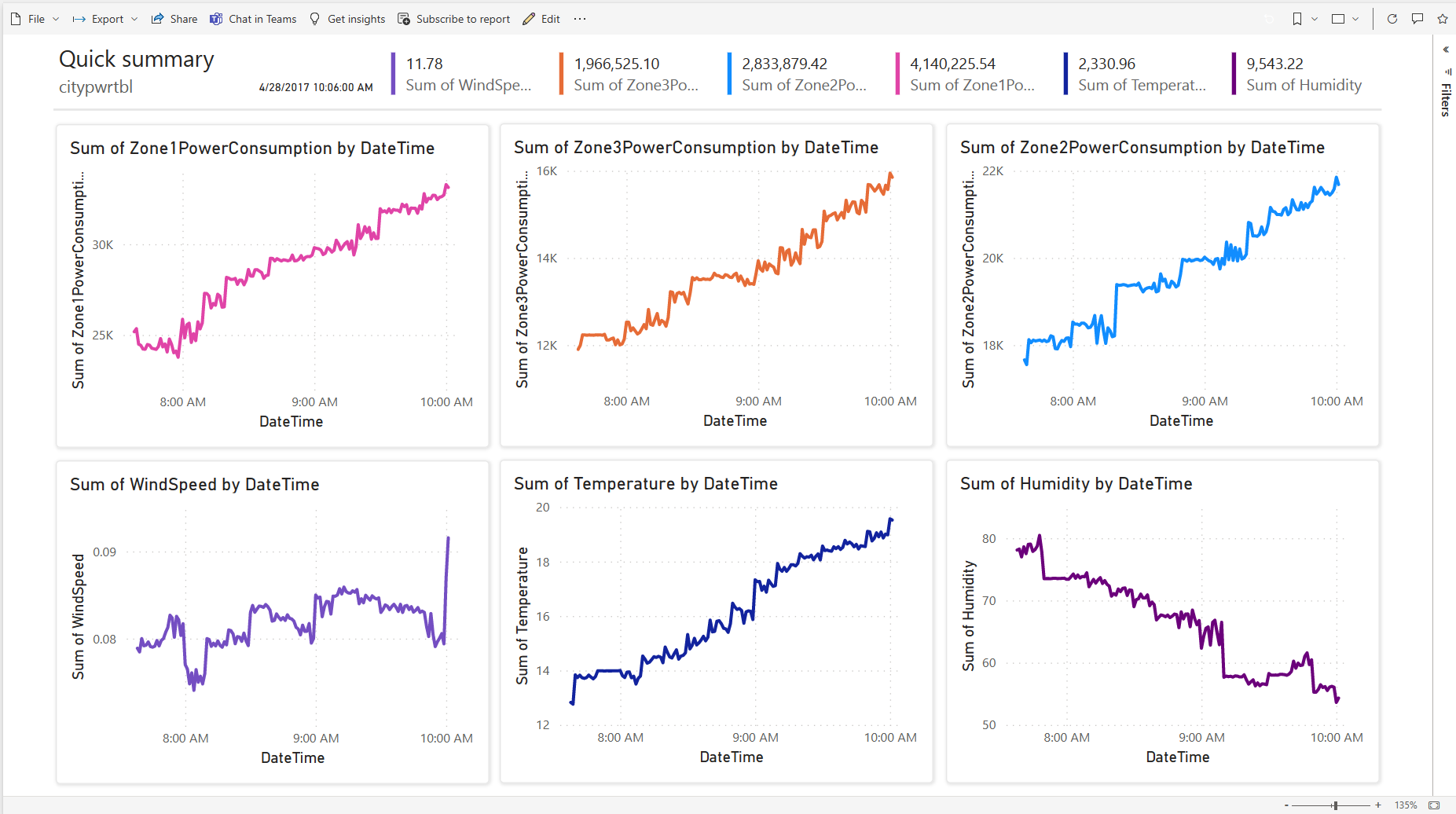

Build a Power BI report with the ingested events data

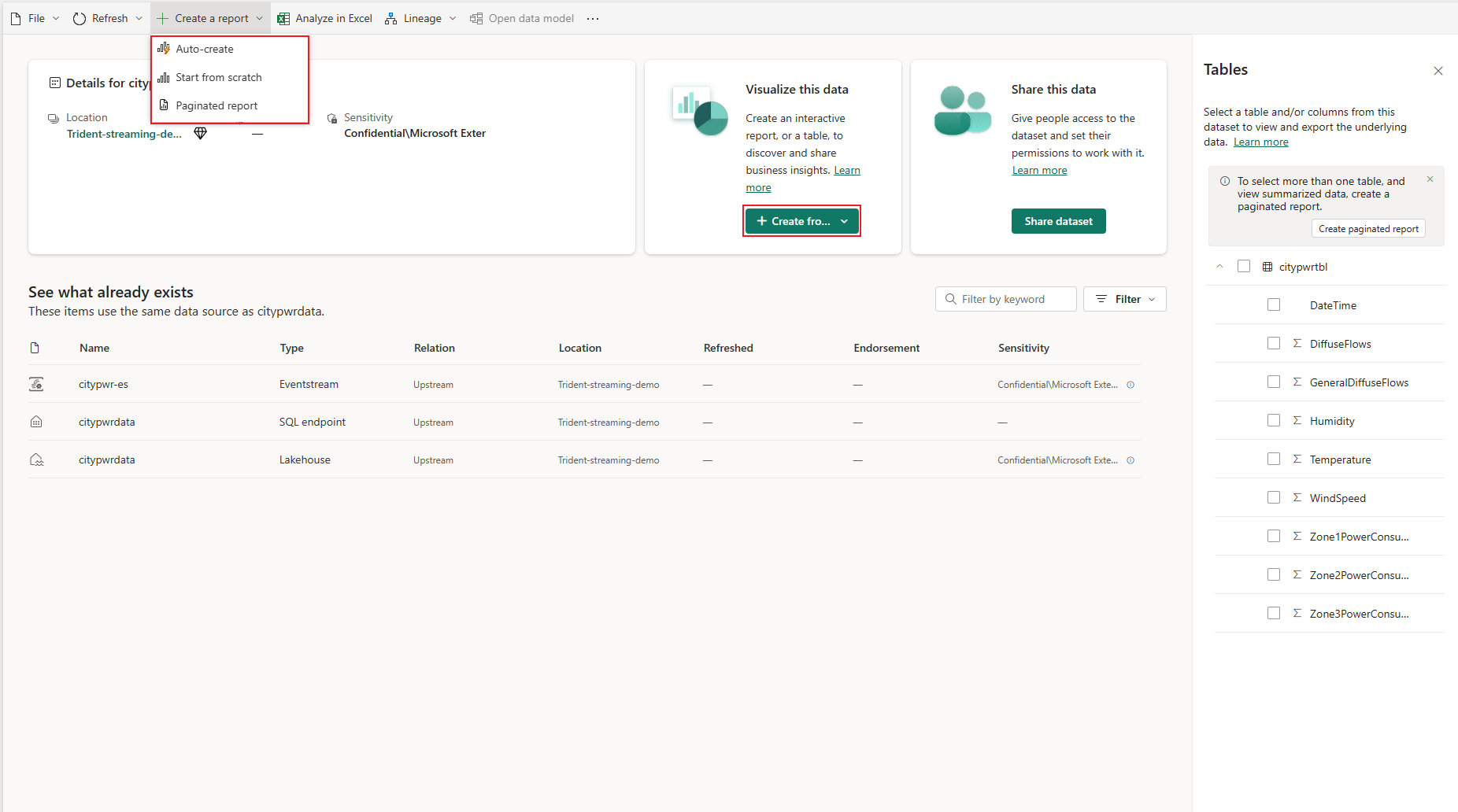

Go to your workspace and select the citypwrdata semantic model, which the system automatically added when you created the new citypwrdata lakehouse.

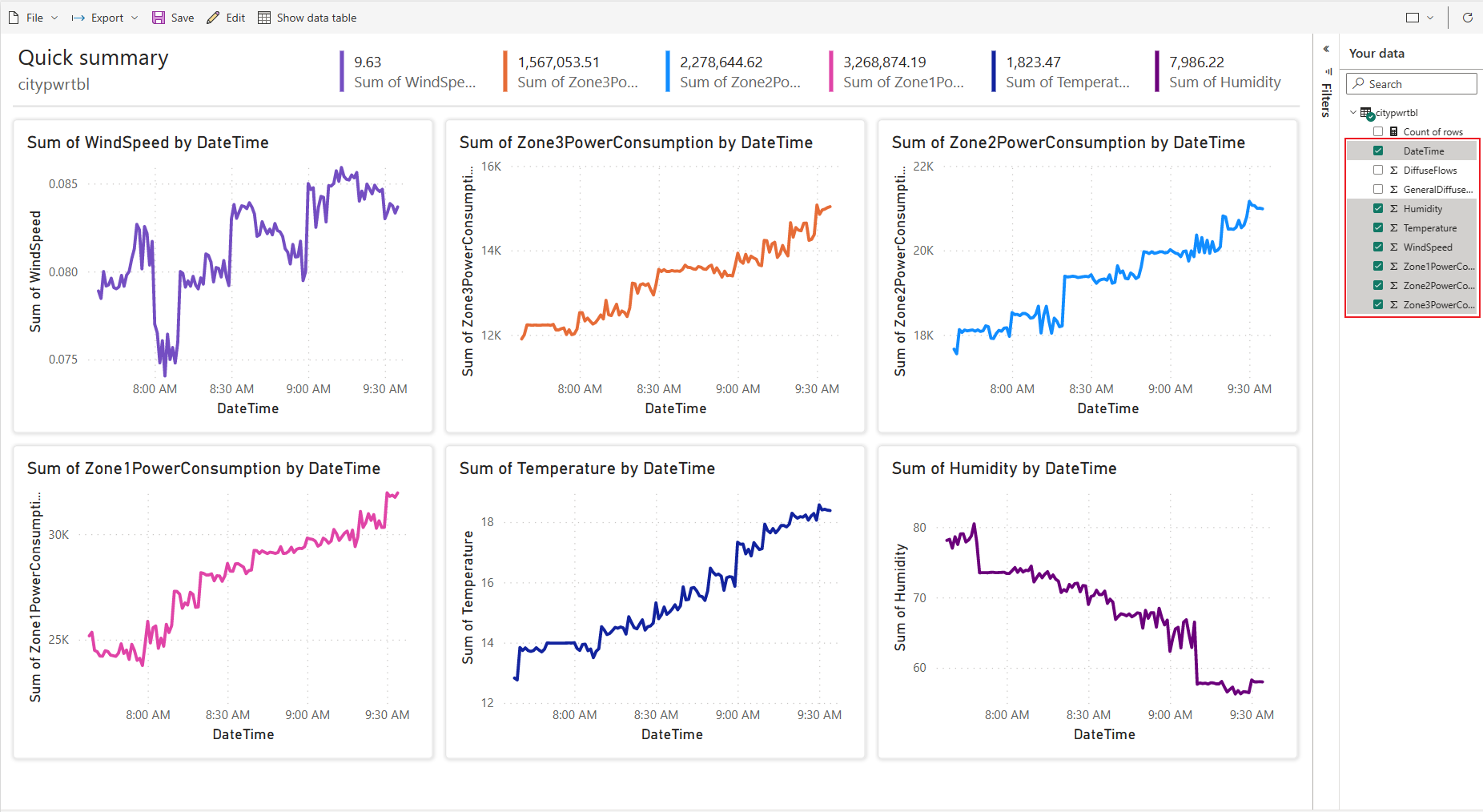

From the Create a report menu in the ribbon, select Auto-create or Start from scratch.

Adjust the report by selecting the temperature, humidity, WindSpeed, and the power consumption of the three zones so that you can monitor this data.

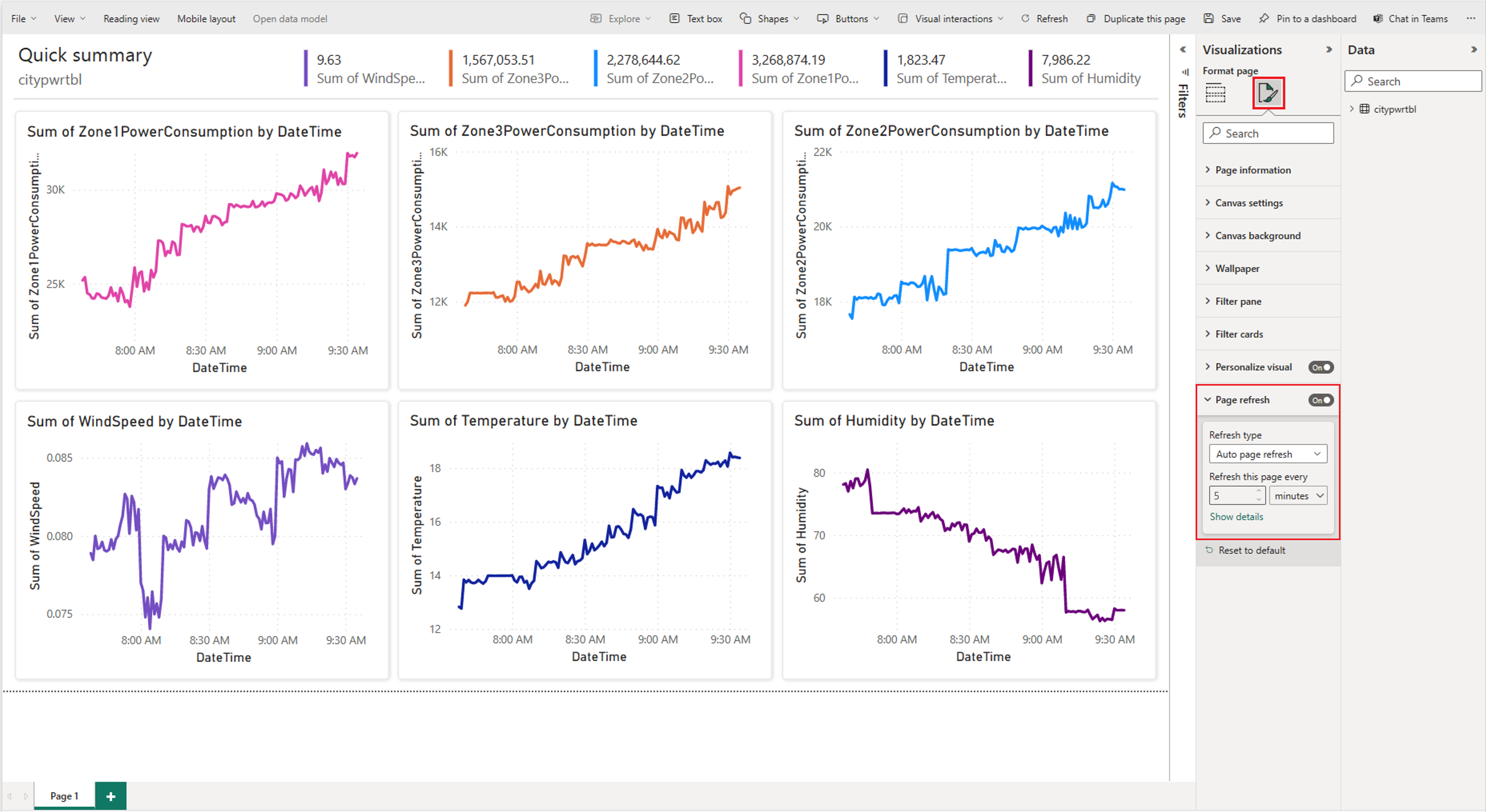

If you want to automatically refresh the data, select the Edit button in the autogenerated report. Then, under Visualizations, go to Format page and select Page refresh to set the refresh interval.

Note

- Admin interval controls the minimum refresh interval.

- The current interval for writing events data to the lakehouse is two minutes.
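The two points in the note interact when you choose a refresh interval. The following Python sketch works through the arithmetic. It assumes that a requested interval below the admin minimum is raised to that minimum, and it uses the two-minute write interval stated in the note. The function names and the sample values are hypothetical.

```python
WRITE_INTERVAL_SECONDS = 120  # events are written to the lakehouse every two minutes


def effective_refresh_seconds(requested_seconds: int, admin_minimum_seconds: int) -> int:
    """Assumption: the report never refreshes faster than the admin minimum allows."""
    return max(requested_seconds, admin_minimum_seconds)


def refreshes_per_write(refresh_seconds: int,
                        write_interval_seconds: int = WRITE_INTERVAL_SECONDS) -> float:
    """How many page refreshes happen for each write of new data to the lakehouse."""
    return write_interval_seconds / refresh_seconds


# Hypothetical settings: you request 30 seconds, and the admin minimum is 60 seconds.
interval = effective_refresh_seconds(requested_seconds=30, admin_minimum_seconds=60)
ratio = refreshes_per_write(interval)
print(f"Effective refresh interval: {interval} seconds")
print(f"Page refreshes per lakehouse write: {ratio}")
```

Under these assumptions, a ratio above 1 means that some refreshes can't show new data, so a refresh interval shorter than two minutes adds little.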

When you're done adjusting the report, select the Save button and enter a name to save it.

Related content

In this tutorial, you learned how to ingest, filter, and transform real-time events and send them from your Azure event hub to a lakehouse in Delta Lake format. You also learned how to use the lakehouse to build Power BI reports to visualize the business insights in your events data. If you're interested in discovering more advanced features for working with the Fabric event streams feature, you may find the following resources helpful.