Note

Access to this page requires authorization. You can try signing in or changing directories.

Access to this page requires authorization. You can try changing directories.

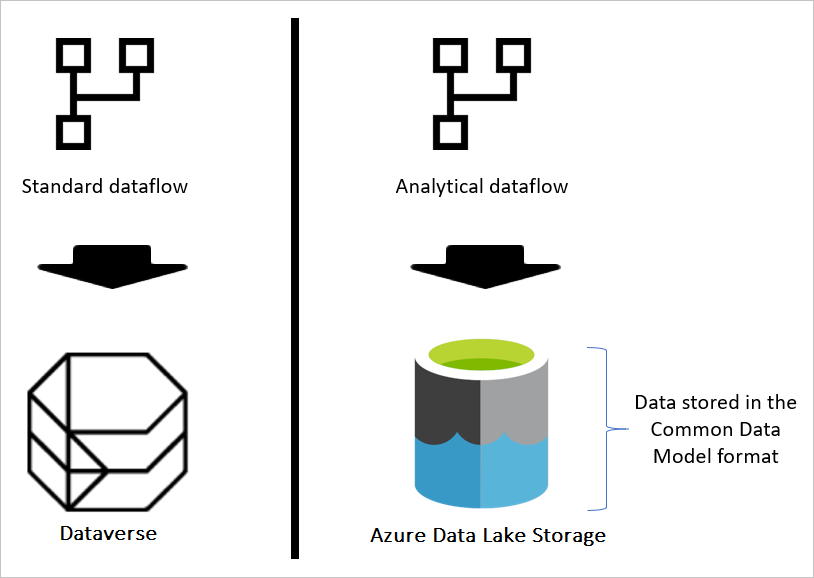

Analytical dataflows store both data and metadata in Azure Data Lake Storage. Dataflows use a standard structure to store and describe data created in the lake, which is called Common Data Model folders. In this article, you learn more about the storage standard that dataflows use behind the scenes.

Storage needs a structure for an analytical dataflow

If the dataflow is standard, then the data is stored in Dataverse. Dataverse is like a database system; it has the concept of tables, views, and so on. Dataverse is a structured data storage option used by standard dataflows.

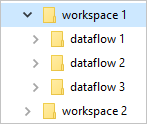

However, when the dataflow is analytical, the data is stored in Azure Data Lake Storage. A dataflow’s data and metadata is stored in a Common Data Model folder. Since a storage account might have multiple dataflows stored in it, a hierarchy of folders and subfolders is introduced to help organize the data. Depending on the product the dataflow was created in, the folders and subfolders might represent workspaces (or environments), and then the dataflow’s Common Data Model folder. Inside the Common Data Model folder, both schema and data of the dataflow tables are stored. This structure follows the standards defined for Common Data Model.

What is the Common Data Model storage structure?

Common Data Model is a metadata structure defined to bring conformity and consistency for using data across multiple platforms. Common Data Model isn't data storage, it's the way that data is stored and defined.

Common Data Model folders define how a table's schema and its data should be stored. In Azure Data Lake Storage, data is organized in folders. Folders can represent a workspace or environment. Under those folders, subfolders for each dataflow are created.

What's in a dataflow folder?

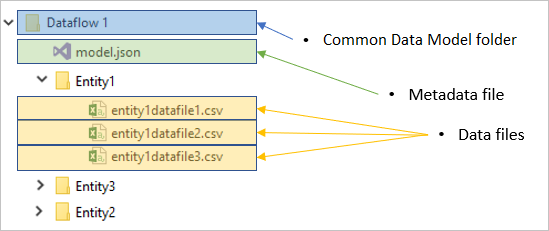

Each dataflow folder contains a subfolder for each table and a metadata file named model.json.

The metadata file: model.json

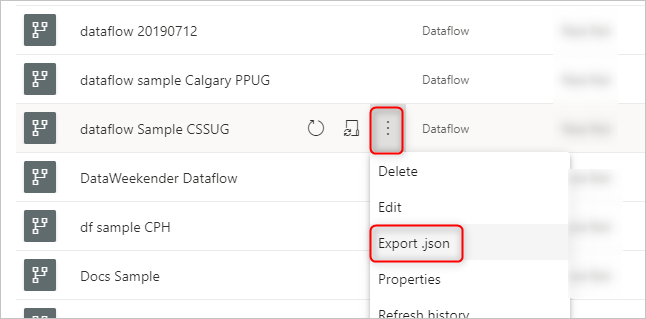

The model.json file is the metadata definition of the dataflow. This file is the one file that contains all the dataflow metadata. It includes a list of tables, the columns, and their data types in each table, the relationship between tables, and so on. You can export this file from a dataflow easily, even if you don't have access to the Common Data Model folder structure.

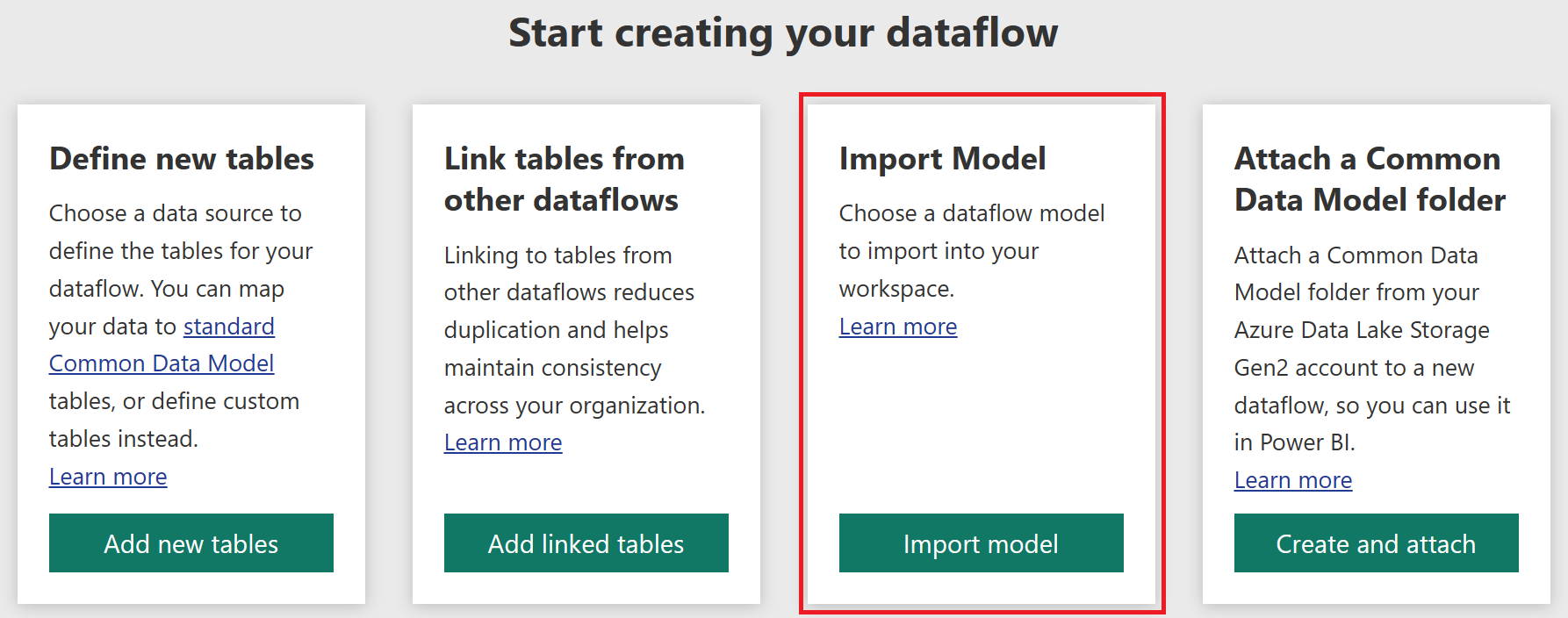

You can use this JSON file to migrate (or import) your dataflow into another workspace or environment.

To learn exactly what the model.json metadata file includes, go to The metadata file (model.json) for Common Data Model.

Data files

In addition to the metadata file, the dataflow folder includes other subfolders. A dataflow stores the data for each table in a subfolder with the table's name. Data for a table might be split into multiple data partitions, stored in CSV format.

How to see or access Common Data Model folders

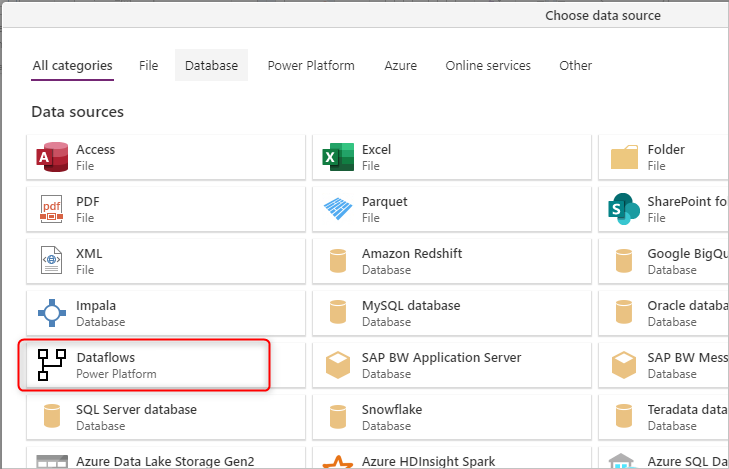

If you're using dataflows that use storage provided by the product they were created in, you don't have access to those folders directly. In such cases, getting data from the dataflows requires using the Microsoft Power Platform dataflow connector available in the Get data experience in the Power BI service, Power Apps, and Dynamics 35 Customer Insights products, or in Power BI Desktop.

To learn how dataflows and the internal Data Lake Storage integration work, go to Dataflows and Azure Data Lake integration (Preview).

If your organization enabled dataflows to take advantage of its Data Lake Storage account and was selected as a load target for dataflows, you can still get data from the dataflow by using the Power Platform dataflow connector as mentioned previously. But you can also access the dataflow's Common Data Model folder directly through the lake, even outside of Power Platform tools and services. Access to the lake is possible through the Azure portal, Microsoft Azure Storage Explorer, or any other service or experience that supports Azure Data Lake Storage. For more information, go to Connect Azure Data Lake Storage Gen2 for dataflow storage.