Introduction to Caching with Windows Server AppFabric (Beta 1)

Writers: Nithya Sampathkumar, Muralidhar Krishnaprasad, Anil Nori

Microsoft Corporation

Applies To:

- Windows Server AppFabric

- .NET Framework

- ASP.NET

- Distributed Cache

- High Availability

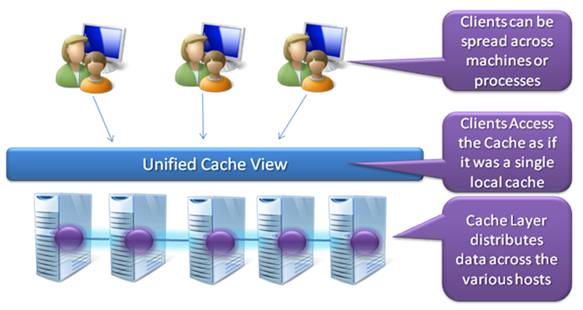

Summary: Windows Server AppFabric provides a distributed in-memory application cache platform for developing scalable, available, and high-performance applications. AppFabric fuses memory across multiple computers to give a single unified cache view to applications. Applications can store any serializable CLR object without worrying about where the object gets stored. Scalability can be achieved by simply adding more computers on demand. The cache also allows for copies of data to be stored across the cluster, thus protecting data against failures. It runs as a service accessed over the network. In addition, Windows Server AppFabric provides seamless integration with ASP.NET that enables ASP.NET session objects to be stored in the distributed cache without having to write to databases. This increases both the performance and scalability of ASP.NET applications.

Introduction

Most business, consumer, and entertainment media is now conceived and delivered in digital form. Dramatic advances in storage technology allow us to capture and store this data relatively inexpensively. Managing this data (organizing and accessing it), however, is becoming more of a challenge. The advances in processors, memory, storage, and connectivity have paved the way for next-generation applications that are data-driven, whose data can reside anywhere (on the server, on desktops, on mobile devices, or embedded in applications) and that require access from anywhere (local, remote, over the network, from mobile devices, in connected and disconnected mode). This naturally leads to distributed, multi-tiered, and composite application architectures.

Distributed applications require support for a large number of users, high performance and throughput, and fast response times. Service orientation emphasizes a low cost of service, which means that scalability and performance must also be achieved at low cost. Another important requirement is support for the distribution and heterogeneity of data and applications. Applications compose data and business logic (for example, mashups) from sources that can be local, federated, or cloud-based. Composite applications require aggregated data to be shaped in the form that is most suitable for the application. Data and logic sharing is also an important requirement in composite applications.

In this paper, we describe a new caching technology available in Windows Server AppFabric.

Windows Server AppFabric Caching Capabilities

Windows Server AppFabric includes a distributed in-memory cache that gives .NET applications high-speed, scalable, and highly available access to application data.

Figure 1: Windows Server AppFabric Caching Capabilities

Key Features

- Caches any serializable CLR object and provides access through simple cache APIs.

- Supports enterprise scale: tens to hundreds of computers.

- Configurable to run as a service accessed over the network.

- Supports dynamic scaling-out by adding new nodes.

- Backup copy provides high availability.

- Automatic load balancing.

- Integration with administration and monitoring tools such as PowerShell, Event Tracing for Windows, System Center, etc.

- Provides seamless integration with ASP.NET, so that session data can be cached without having to write it to source databases. It can also be used to cache application data across the entire Web farm.

- Follows the cache-aside architecture (also known as Explicit Caching) for V1. That is, your application must decide explicitly which objects to put in or remove from the cache, and the cache does not synchronize with any source database automatically.
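The cache-aside pattern named in the last feature can be sketched as follows. This is a minimal, self-contained model and not AppFabric code: the `Dictionary` stands in for the distributed cache, and `ProductLookup` and the `loadFromDatabase` delegate are hypothetical names introduced only for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical cache-aside helper; the Dictionary stands in for the distributed cache.
public class ProductLookup
{
    private readonly Dictionary<string, string> cache = new Dictionary<string, string>();
    private readonly Func<string, string> loadFromDatabase;

    public int DatabaseReads { get; private set; }

    public ProductLookup(Func<string, string> loadFromDatabase)
    {
        this.loadFromDatabase = loadFromDatabase;
    }

    // Read path: check the cache first and fall back to the source on a miss.
    public string Get(string key)
    {
        string value;
        if (cache.TryGetValue(key, out value))
        {
            return value;
        }
        value = loadFromDatabase(key);   // the application, not the cache, reads the source
        DatabaseReads++;
        cache[key] = value;              // explicit put
        return value;
    }

    // Write path: the application must invalidate the cached copy itself.
    public void Invalidate(string key)
    {
        cache.Remove(key);
    }
}

public static class Program
{
    public static void Main()
    {
        var lookup = new ProductLookup(key => "description of " + key);
        lookup.Get("toy-101");                    // miss: reads the source
        lookup.Get("toy-101");                    // hit: served from the cache
        Console.WriteLine(lookup.DatabaseReads);  // prints 1
        lookup.Invalidate("toy-101");
        lookup.Get("toy-101");                    // miss again after invalidation
        Console.WriteLine(lookup.DatabaseReads);  // prints 2
    }
}
```

The point of the sketch is that both the fallback read and the invalidation live in application code; nothing in the cache itself knows about the source database.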

Types of Data

In this section, we show how application data can be broadly classified into three categories, and define their access patterns and what they mean for caching requirements.

Understanding the different types of data and their semantics clarifies the caching needs that come with each type.

Reference Data

Reference data is a version of the authoritative data. It is either a direct copy (version) of the original data or aggregated and transformed from multiple data sources. Reference data is practically immutable—changing the reference data (or the corresponding authoritative data) creates a new version of the reference data. Reference data is an ideal candidate for caching; because the reference data does not change, it can be shared across multiple applications (and users), thereby increasing the scale and performance.

Consider a product catalog application that aggregates product information across multiple data source applications. The most common operation on the catalog data is read (or browse); a typical catalog browse operation iterates over a large amount of product data, filters it, personalizes it, and then presents the selected data to the users. Key-based and query-based access are the common forms of operation. Caching is a critical requirement for catalog access: without it, each operation against the aggregate catalog must be decomposed into operations on the underlying sources, which are then invoked, and their responses collected and aggregated into a cohesive result.

Accessing the large sets of source data for every catalog operation can be prohibitively expensive, and can significantly impact the response time and throughput of the application. Caching the source product data closer to the catalog application can significantly improve the performance and the scalability of the application.

Reference data is refreshed periodically, usually at configured intervals, from its sources, or refreshed when the authoritative data sources change. Access to reference data, although shared, is mostly read. Local updates are often performed for tagging (to better organize the data). To support a large scale, reference data can be replicated in multiple caches on different computers in a cluster. As mentioned above, reference data is easy to cache, which provides high scalability.

Activity Data

Activity data is generated by the currently executing activity as part of a business transaction. The data originates as part of the business transaction and, at the close of the business transaction, it is retired to the data source as historical (or log) information.

Consider the shopping cart data in an online buying application. There is one exclusive shopping cart for each online buying session. During the buying session, the shopping cart is cached and updated with selected products. The shopping cart is visible and accessible only to the buying transaction. Upon checkout, once the payment is applied, the shopping cart is retired from the cache to a source application for further processing. Once the business transaction is processed by the source application, the shopping cart information is logged for auditing and historical purposes.

While the buying session is active, the shopping cart is accessed for both read and write, but it is not shared. This exclusive nature of the activity data makes it suitable for distributed caching. To support large scalability of the buying application, the shopping carts can be distributed across the cluster of caches. Because the shopping carts are not shared, the set of shopping carts can be partitioned across the distributed cache. By dynamically configuring the distributed cache, the degree of scale can be controlled.

Resource Data

Both reference (shared read) and activity (exclusive write) data are ideal for caching, but not all application data falls into these two categories. There is data that is shared, concurrently read and written, and accessed by a large number of transactions. Consider an inventory management application. The inventory of an item includes the description of the item and the current quantity. The quantity information is authoritative, volatile, and concurrently accessed by a large number of users for read/write. Such data is known as resource data; the business logic (for example, the order application logic) runs close to the resource data (such as the quantity data). Resource data is usually collected in data stores. For better performance, organizations often cache it in the application tier with technologies like AppFabric.

Although caching the quantity data in memory on a single computer can provide performance improvements, a single cache cannot provide availability or scalability when the order volume is high. For this purpose, quantity data can be replicated in multiple caches across the distributed cache. However, because quantity information is shared and concurrently updated, the consistency of quantity values needs to be maintained across the distributed cache.

Maintaining consistency in distributed caches requires data replication, transactions, data change notifications, and invalidations. However, support for high performance and scale requires relaxed notions of consistency and transactions. For example, the order application may over-sell the item (above the available quantity) in order to allow concurrent updates to quantity for increased scale and performance. Typically, such conflicts are resolved by vendor intervention.

Scenarios

In this section, we discuss a couple of real-world scenarios, the categories of data that they deal with, and their caching requirements.

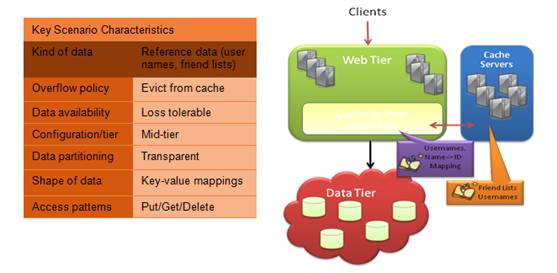

Social Networking Site

Consider a social networking site that people use for keeping up with friends. The site lets users upload an unlimited number of photos, share links and videos, and learn more about the people they meet. Such a site can accelerate performance by caching users and friend lists. User names and friend lists fall under reference data, making them very attractive for caching.

Figure 2: Use of cache in a social networking site

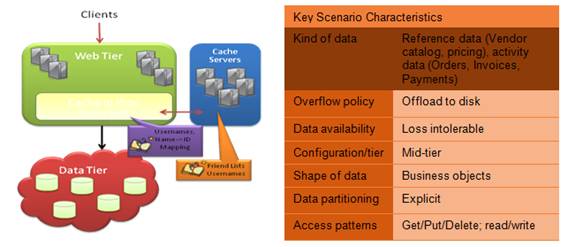

Enterprise LOB

Consider the use of a distributed cache in a large enterprise for both scalability and availability of business data. Take a company that provides a Web-based invoice (payment, vendor analysis) and expense management system for large enterprises, with a focus on litigation expense management. Vendor analysis is an infrequent and periodic operation that enterprises perform. Once the desired vendor catalogs are created, they can be cached as reference data so that purchase and payment processes can gain performance benefits. The vendor catalogs include information such as vendor names, offered services, and prices. The purchase process includes activities like order submission, invoicing, and payment. The data in these activities forms activity data, where the data is primarily accessed and manipulated by a specific business transaction. Again, such data can be cached for performance, scale, and availability.

Vendor sourcing and analysis involves aggregating data from multiple vendor sources; the aggregated data is cached, and order, invoice, and payment data is also cached while these business operations are active.

Figure 3: Use of cache in an enterprise scenario

AppFabric Caching Concepts

In this section we look at caching concepts that will be useful when programming against AppFabric.

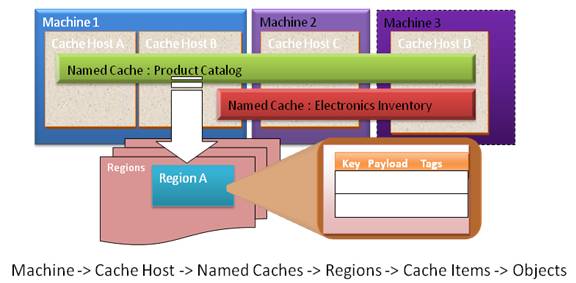

Logical Hierarchy

Named caches and regions are the two basic building blocks of caching in AppFabric. A named cache is used for storing a logical grouping of data together. Regions are logical groupings of objects within named caches.

A user can run multiple processes that host a cache instance, called cache hosts. The cache hosts can access named caches that can be stored across all the cluster nodes. The named cache consists of regions and regions store cache items. Figure 4 shows the logical hierarchy.

Figure 4: Logical hierarchy

Named Cache

You can think of a named cache as equivalent to a database. A named cache is used for storing a logical grouping of data. Also, all physical configurations and cache policies, such as failover, expiration, eviction, and so forth, are specified at this level. All applications that need to communicate with the same named cache must instantiate it using the DataCacheFactory.

A cluster or node can contain one or more named caches. An application may use one or more named caches based on the policies for the various caches. For example, activity data may be stored in a named cache that is partitioned, while reference data (a catalog, for example) may be stored in a named cache that is replicated.

Region

Regions are logical groupings of objects within a named cache. You can think of regions as equivalent to tables, although regions can also store arbitrary sets of key-value pairs. Items within a region are guaranteed to reside on a single node, and regions are the logical units for replication and node placement. A named cache consists of one or more regions.

An application is not required to use regions and can use the put/get/remove APIs using only the key to the object. In fact, the application will scale better when not using regions because the keys can be distributed across the named cache. If no region is specified, the system automatically partitions the keys into multiple implicitly created regions.

Cache Item

A cache item is the lowest level of the hierarchy. It contains the cached object (the payload) along with its key, tags, time to live (TTL), created timestamp, version, and other internal bookkeeping information. A region contains one or more cache items.

The following code example shows how to obtain a named cache and create a region.

// DataCacheFactory class provides methods to return cache objects

// Create instance of DataCacheFactory (reads app.config)

DataCacheFactory fac = new DataCacheFactory();

// Get a named cache from the factory

DataCache catalog = fac.GetCache("catalogcache");

//-------------------------------------------------------

// Simple Get/Put

catalog.Put("toy-101", new Toy("thomas", .,.));

// From the same or a different client

Toy toyObj = (Toy)catalog.Get("toy-101");

// ------------------------------------------------------

// Region based Get/Put

catalog.CreateRegion("toyRegion", true);

// Both toy and toyparts are put in the same region

catalog.Put("toy-101", new Toy( .,.), "toyRegion");

catalog.Put("toypart-100", new ToyParts(…), "toyRegion");

Toy regionToy = (Toy)catalog.Get("toy-101", "toyRegion");

Cache Concepts

We will be referring to primary and secondary nodes often when we talk about caching in AppFabric.

Primary Node

The node where a region is located is the primary node for that region. All access to this region will be routed to the primary node for that region.

Secondary Nodes

If the named cache is configured to have a “backup” for high availability, then another node is chosen to contain a copy of this data. This node is called the secondary node for that region. All changes made to the primary node are also reflected on the secondary node. Thus if the primary node for a region fails, the secondary node can be used to retrieve the data without having to have logs written to disk.

Cache Types

AppFabric supports two common cache types – partitioned cache and local cache. Depending on the type of data, applications can choose the appropriate type of cache.

Partitioned Cache

In partitioned cache, regions are partitioned among all of the available nodes on which the named cache is defined. The combined memory of all the computers across the cluster can be used to cache data, thus increasing the amount of memory available to the cache. All reads and writes to a region are directed to the node that contains the primary copy of the region.

Scale

Partitioned cache can be used to achieve a desired scale.

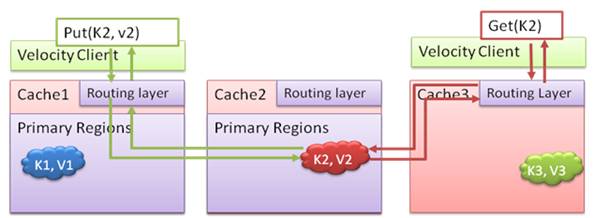

Figure 5 shows how Put and Get operations work in the partitioned cache. The client performs a Put operation that assigns the value “v2” to key “K2”. The routing layer component in Cache1 determines that the key “K2” belongs to Cache2 and routes the request to that cache host. Similarly, Get requests for the same key are routed to Cache2. AppFabric also supports a configuration in which the routing layer is part of the client.

Figure 5: Routing in a partitioned cache
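The routing step can be illustrated with a small model. This is only a sketch under stated assumptions: the paper does not describe how AppFabric maps keys to regions or regions to nodes, so the FNV-1a hash, the round-robin region placement, and the `PartitionRouter` class are all hypothetical choices made for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model of key-based routing; not the AppFabric routing layer.
public class PartitionRouter
{
    private readonly string[] regionOwners;   // regionOwners[i] = primary node for region i

    public PartitionRouter(int regionCount, IList<string> nodes)
    {
        regionOwners = new string[regionCount];
        for (int i = 0; i < regionCount; i++)
        {
            regionOwners[i] = nodes[i % nodes.Count];   // spread regions round-robin
        }
    }

    // FNV-1a yields the same hash in every process, unlike string.GetHashCode.
    public static uint StableHash(string key)
    {
        uint hash = 2166136261u;
        foreach (char c in key)
        {
            hash = unchecked((hash ^ (uint)c) * 16777619u);
        }
        return hash;
    }

    public int RegionFor(string key)
    {
        return (int)(StableHash(key) % (uint)regionOwners.Length);
    }

    public string PrimaryFor(string key)
    {
        return regionOwners[RegionFor(key)];
    }
}

public static class Program
{
    public static void Main()
    {
        var nodes = new[] { "Cache1", "Cache2", "Cache3" };
        var routerOnCache1 = new PartitionRouter(8, nodes);
        var routerOnClient = new PartitionRouter(8, nodes);

        // Every router with the same table resolves a key to the same primary,
        // so a Put and a later Get for "K2" reach the same node.
        Console.WriteLine(routerOnCache1.PrimaryFor("K2") == routerOnClient.PrimaryFor("K2")); // True
    }
}
```

Because the mapping depends only on the key and the shared table, the same logic can run in a cache host or inside the client, which corresponds to the two routing configurations mentioned above.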

Applications can increase scale in this partitioned cache model by adding more computers. New computers can be added to achieve two goals:

- Higher throughput. When new computers are added to the cluster, automatic load balancing occurs and some partitions on existing nodes are migrated to the new computers. Keys are then distributed across more computers, so access requests are routed to more computers and throughput increases.

- More capacity. When new computers are added, there is more memory to store more data. Applications can scale by increasing the amount of data being cached.

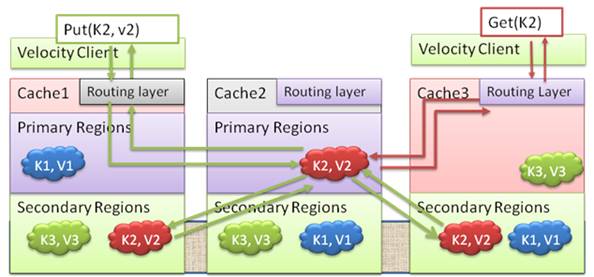

Availability

When using a partitioned cache, it is possible to specify a number of nodes as secondary caches. This allows the same data to be stored on multiple computers. When a primary node fails, one of the secondary nodes becomes the primary node, enabling applications to continue accessing the data that was stored on the failed computer.

The following example shows how adding an object and retrieving it will work for a partitioned cache with two secondary caches configured. Take the case where the client is sending a request to put the value “v2” with key “K2” to Cache1. The routing layer in Cache1 determines that the key “K2” belongs to Cache2 and routes it appropriately. Cache2 performs the operation locally and, in addition, sends it to two other secondary nodes. It waits for an acknowledgement from the secondary nodes that they have received the message and then acknowledges the success of the operation back to Cache1. Cache1 then relays the success back to the client.

A Get operation behaves the same way as it does in a partitioned cache without secondary caches; the operation gets routed to the appropriate primary node.

Figure 6: Routing in a partitioned cache with secondary caches
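The write path with secondary caches can be modeled in a few lines. This is a simplified, in-process sketch: `CacheNode` and `ReplicatedRegion` are hypothetical classes, method calls stand in for network messages, and the boolean return value stands in for the acknowledgement.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-process model of a cache host; not AppFabric code.
public class CacheNode
{
    public string Name { get; private set; }
    public Dictionary<string, string> Store { get; private set; }

    public CacheNode(string name)
    {
        Name = name;
        Store = new Dictionary<string, string>();
    }

    // Returns true to model the acknowledgement sent back to the primary.
    public bool ApplyReplica(string key, string value)
    {
        Store[key] = value;
        return true;
    }
}

public class ReplicatedRegion
{
    public CacheNode Primary { get; private set; }
    private readonly List<CacheNode> secondaries;

    public ReplicatedRegion(CacheNode primary, IEnumerable<CacheNode> secondaries)
    {
        Primary = primary;
        this.secondaries = new List<CacheNode>(secondaries);
    }

    // The Put reports success only after every secondary has acknowledged the write.
    public bool Put(string key, string value)
    {
        Primary.Store[key] = value;
        foreach (CacheNode secondary in secondaries)
        {
            if (!secondary.ApplyReplica(key, value))
            {
                return false;
            }
        }
        return true;
    }

    // Reads are always served by the primary.
    public string Get(string key)
    {
        string value;
        return Primary.Store.TryGetValue(key, out value) ? value : null;
    }

    // On primary failure, promote a secondary; it already holds the data.
    public void FailOver()
    {
        Primary = secondaries[0];
        secondaries.RemoveAt(0);
    }
}

public static class Program
{
    public static void Main()
    {
        var region = new ReplicatedRegion(
            new CacheNode("Cache2"),
            new[] { new CacheNode("Cache3"), new CacheNode("Cache4") });

        Console.WriteLine(region.Put("K2", "v2"));   // True
        region.FailOver();                           // simulate loss of Cache2
        Console.WriteLine(region.Primary.Name);      // Cache3
        Console.WriteLine(region.Get("K2"));         // v2
    }
}
```

Because the acknowledgement is awaited before the Put returns, a promoted secondary is guaranteed to hold every write that a client was told had succeeded.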

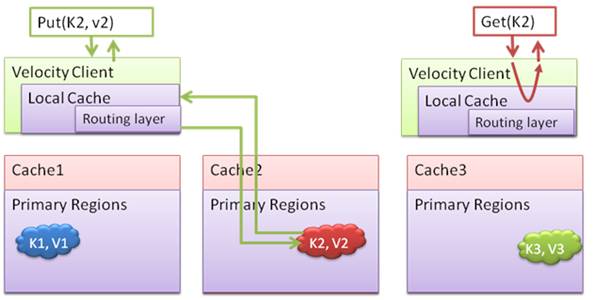

Local Cache

Applications can also maintain a local cache in the application process space for frequently accessed items. In the local cache, payload is kept in the object form. This helps applications save the deserialization cost as well as the network hop to the primary computer, resulting in increased performance.

Figure 7: Routing when using a local cache

Cache Clients

There are two types of cache clients: simple and routing.

The simple client has no routing capabilities and does not track where each cached object is stored. A simple client is configured to contact only one cache host in the cluster. If the simple client requests an object that is not in the memory of that cache host, the cache host retrieves the object from the cluster and then returns it to the simple client.

The routing client contains a routing table to keep track of cached object placement across all cache hosts in the cluster. Because the routing client keeps track of where each cached object is, it can make requests directly to the cache host that stores the object in memory.

The diagram below shows a routing client used with a partitioned cache.

Figure 8: Routing client used with a partitioned cache

Expiration and Eviction

AppFabric ensures the validity of cache items by supporting expiration and eviction. When adding objects to the cache, it is possible to optionally specify a time to live (TTL). Expired objects are removed from the cache.

Applications can specify a “high watermark” that determines when eviction kicks in; items are then evicted according to a least-recently-used algorithm.
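The interaction of TTL expiration and least-recently-used eviction can be sketched as below. This is a hypothetical model, not the AppFabric implementation: `ExpiringLruCache` is an illustrative class, the high watermark is assumed to be a simple item count, expired items are removed lazily on access, and the current time is passed in explicitly so that the behavior is deterministic.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model of TTL expiration and LRU eviction; not the AppFabric implementation.
public class ExpiringLruCache
{
    private class Entry
    {
        public string Key;
        public object Value;
        public DateTime ExpiresAt;
    }

    private readonly int highWatermark;   // assumed here to be a maximum item count
    private readonly Dictionary<string, LinkedListNode<Entry>> index =
        new Dictionary<string, LinkedListNode<Entry>>();
    private readonly LinkedList<Entry> recency = new LinkedList<Entry>();   // front = most recent

    public ExpiringLruCache(int highWatermark)
    {
        this.highWatermark = highWatermark;
    }

    public int Count { get { return index.Count; } }

    public void Put(string key, object value, TimeSpan timeToLive, DateTime now)
    {
        Remove(key);
        var entry = new Entry { Key = key, Value = value, ExpiresAt = now + timeToLive };
        index[key] = recency.AddFirst(entry);
        while (index.Count > highWatermark)
        {
            Remove(recency.Last.Value.Key);   // evict the least recently used item
        }
    }

    public object Get(string key, DateTime now)
    {
        LinkedListNode<Entry> node;
        if (!index.TryGetValue(key, out node))
        {
            return null;
        }
        if (node.Value.ExpiresAt <= now)
        {
            Remove(key);                      // expired items are removed on access
            return null;
        }
        recency.Remove(node);                 // mark as most recently used
        recency.AddFirst(node);
        return node.Value.Value;
    }

    private void Remove(string key)
    {
        LinkedListNode<Entry> node;
        if (index.TryGetValue(key, out node))
        {
            recency.Remove(node);
            index.Remove(key);
        }
    }
}

public static class Program
{
    public static void Main()
    {
        DateTime t0 = new DateTime(2010, 1, 1);
        TimeSpan ttl = TimeSpan.FromMinutes(10);
        var cache = new ExpiringLruCache(2);

        cache.Put("a", 1, ttl, t0);
        cache.Put("b", 2, ttl, t0);
        cache.Get("a", t0);                                          // "a" is now more recent than "b"
        cache.Put("c", 3, ttl, t0);                                  // exceeds the watermark: "b" is evicted

        Console.WriteLine(cache.Get("b", t0) == null);               // True
        Console.WriteLine(cache.Get("a", t0.AddMinutes(11)) == null); // True: TTL has passed
    }
}
```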

Consistency Models

AppFabric supports a strong consistency model of access for the different cache types. This means that the cache operations (e.g. Get, Put) are performed synchronously before returning to the client.

Concurrency Model

AppFabric supports both optimistic version-based updates and pessimistic locking.

Optimistic Version-based Updates

This model is based on the cache associating a version with each cache item. Any update to the object increments the version internally. When an application retrieves the cache item, its version is also returned. An update of the cache item succeeds only if the version of the passed-in cache item is the same as the one stored in the cache.

Version-based Update

| Time | Client1 | Client2 (Different Thread or process) |
|---|---|---|
| T0 | DataCacheItem item = catalog.GetCacheItem("Zune", "PlayerRegion"); | DataCacheItem item = catalog.GetCacheItem("Zune", "PlayerRegion"); |
| T1 | ((ZuneObject)item.Object).inventory--; | ((ZuneObject)item.Object).inventory--; |
| T2 | catalog.Put("Zune", item.Object, item.Version, "PlayerRegion"); // Operation succeeds, item version incremented in the cache | |
| T3 | | catalog.Put("Zune", item.Object, item.Version, "PlayerRegion"); // Version mismatch; client must retry |
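The version check behind this timeline can be modeled without a cache cluster. The sketch below is a hypothetical in-process class, `VersionedCache`, not the AppFabric implementation; in particular, it reports a version mismatch through a `bool` return value, which is an assumption made to keep the example short.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-process model of version-checked updates; not the AppFabric implementation.
public class VersionedCache
{
    private class Slot
    {
        public object Value;
        public long Version;
    }

    private readonly Dictionary<string, Slot> slots = new Dictionary<string, Slot>();
    private readonly object gate = new object();

    // Unconditional write: stores the value and increments the version.
    public long Put(string key, object value)
    {
        lock (gate)
        {
            Slot slot;
            if (!slots.TryGetValue(key, out slot))
            {
                slot = new Slot();
                slots[key] = slot;
            }
            slot.Value = value;
            slot.Version++;
            return slot.Version;
        }
    }

    // Returns the value together with the version the caller must present on update.
    public object Get(string key, out long version)
    {
        lock (gate)
        {
            Slot slot = slots[key];
            version = slot.Version;
            return slot.Value;
        }
    }

    // Conditional write: succeeds only if nobody has updated the item since it was read.
    public bool TryPut(string key, object value, long expectedVersion)
    {
        lock (gate)
        {
            Slot slot;
            if (!slots.TryGetValue(key, out slot) || slot.Version != expectedVersion)
            {
                return false;
            }
            slot.Value = value;
            slot.Version++;
            return true;
        }
    }
}

public static class Program
{
    public static void Main()
    {
        var cache = new VersionedCache();
        cache.Put("Zune", 10);                                           // inventory starts at 10

        long v1, v2;
        int seenByClient1 = (int)cache.Get("Zune", out v1);              // T0: both clients read
        int seenByClient2 = (int)cache.Get("Zune", out v2);

        Console.WriteLine(cache.TryPut("Zune", seenByClient1 - 1, v1));  // T2: True
        Console.WriteLine(cache.TryPut("Zune", seenByClient2 - 1, v2));  // T3: False

        seenByClient2 = (int)cache.Get("Zune", out v2);                  // retry: re-read, then update
        Console.WriteLine(cache.TryPut("Zune", seenByClient2 - 1, v2));  // True
        Console.WriteLine(cache.Get("Zune", out v2));                    // 8
    }
}
```

The retry re-reads the item before decrementing, so both decrements are applied and neither update is lost.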

Pessimistic Locking

An application can take a lock when retrieving an object from the cache. When using the GetAndLock method, a lock handle is returned along with the object. Any further calls to GetAndLock for that object will fail while the lock is held. However, regular Gets will succeed.

Using PutAndUnlock, an object is updated in the cache and the lock is released. Regular Puts remove the lock and overwrite the object. Also, the Unlock method explicitly unlocks the object.
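These locking rules can be captured in a small model. The class below, `LockingCache`, is a hypothetical in-process sketch rather than the AppFabric API: it uses a `Guid` as the lock handle, reports failures through `bool` return values, and omits lock timeouts.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-process model of the locking semantics; lock timeouts are omitted.
public class LockingCache
{
    private class Slot
    {
        public object Value;
        public Guid? LockHandle;   // null when the item is unlocked
    }

    private readonly Dictionary<string, Slot> slots = new Dictionary<string, Slot>();

    // Regular Put: overwrites the value and discards any existing lock.
    public void Put(string key, object value)
    {
        slots[key] = new Slot { Value = value, LockHandle = null };
    }

    // Regular Get: succeeds whether or not the item is locked.
    public object Get(string key)
    {
        return slots[key].Value;
    }

    // Fails if another caller already holds the lock.
    public bool TryGetAndLock(string key, out object value, out Guid handle)
    {
        Slot slot = slots[key];
        if (slot.LockHandle.HasValue)
        {
            value = null;
            handle = Guid.Empty;
            return false;
        }
        handle = Guid.NewGuid();
        slot.LockHandle = handle;
        value = slot.Value;
        return true;
    }

    // Updates the item and releases the lock, provided the handle matches.
    public bool PutAndUnlock(string key, object value, Guid handle)
    {
        Slot slot = slots[key];
        if (slot.LockHandle != handle)
        {
            return false;
        }
        slot.Value = value;
        slot.LockHandle = null;
        return true;
    }

    // Releases the lock without changing the value.
    public bool Unlock(string key, Guid handle)
    {
        Slot slot = slots[key];
        if (slot.LockHandle != handle)
        {
            return false;
        }
        slot.LockHandle = null;
        return true;
    }
}

public static class Program
{
    public static void Main()
    {
        var cache = new LockingCache();
        cache.Put("Zune", 10);

        object value;
        Guid first, second;
        Console.WriteLine(cache.TryGetAndLock("Zune", out value, out first));   // True
        Console.WriteLine(cache.TryGetAndLock("Zune", out value, out second));  // False: already locked
        Console.WriteLine(cache.Get("Zune"));                                   // 10: a plain Get ignores the lock
        Console.WriteLine(cache.PutAndUnlock("Zune", 9, first));                // True: lock released
        Console.WriteLine(cache.TryGetAndLock("Zune", out value, out second));  // True: item can be locked again
    }
}
```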

Notifications

In a distributed architecture, because many clients work with the same data, it may be useful for a client to know when a cache item is changed by another client. Notifications can be set on a region or a cache item level. When set, a notification event is generated for any change (add, put, remove) to the region or cache items.
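The difference between region-level and item-level notifications can be sketched as follows. This is a hypothetical model, not the AppFabric notification API: `NotifyingRegion` and `CacheOperation` are illustrative names, and callbacks are invoked synchronously in-process, whereas a networked cache would have to deliver them to remote clients.

```csharp
using System;
using System.Collections.Generic;

public enum CacheOperation { Add, Put, Remove }

// Hypothetical model: callbacks run synchronously in-process, unlike a networked cache.
public class NotifyingRegion
{
    private readonly Dictionary<string, object> items = new Dictionary<string, object>();
    private readonly List<Action<CacheOperation, string>> regionCallbacks =
        new List<Action<CacheOperation, string>>();
    private readonly Dictionary<string, List<Action<CacheOperation, string>>> itemCallbacks =
        new Dictionary<string, List<Action<CacheOperation, string>>>();

    // Region-level subscription: fires for a change to any item in the region.
    public void OnRegionChange(Action<CacheOperation, string> callback)
    {
        regionCallbacks.Add(callback);
    }

    // Item-level subscription: fires only for changes to the given key.
    public void OnItemChange(string key, Action<CacheOperation, string> callback)
    {
        List<Action<CacheOperation, string>> list;
        if (!itemCallbacks.TryGetValue(key, out list))
        {
            list = new List<Action<CacheOperation, string>>();
            itemCallbacks[key] = list;
        }
        list.Add(callback);
    }

    public void Put(string key, object value)
    {
        CacheOperation operation = items.ContainsKey(key) ? CacheOperation.Put : CacheOperation.Add;
        items[key] = value;
        Raise(operation, key);
    }

    public void Remove(string key)
    {
        if (items.Remove(key))
        {
            Raise(CacheOperation.Remove, key);
        }
    }

    private void Raise(CacheOperation operation, string key)
    {
        foreach (var callback in regionCallbacks)
        {
            callback(operation, key);
        }
        List<Action<CacheOperation, string>> list;
        if (itemCallbacks.TryGetValue(key, out list))
        {
            foreach (var callback in list)
            {
                callback(operation, key);
            }
        }
    }
}

public static class Program
{
    public static void Main()
    {
        var region = new NotifyingRegion();
        var log = new List<string>();
        region.OnRegionChange((op, key) => log.Add("region:" + op + ":" + key));
        region.OnItemChange("toy-101", (op, key) => log.Add("item:" + op + ":" + key));

        region.Put("toy-101", "thomas");   // Add: region and item callbacks fire
        region.Put("toy-101", "percy");    // Put: region and item callbacks fire
        region.Put("toy-102", "gordon");   // Add: only the region callback fires
        region.Remove("toy-101");          // Remove: region and item callbacks fire

        Console.WriteLine(log.Count);      // 7
    }
}
```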

Deployment Topology

The AppFabric cache runs as a service that can be accessed from client applications running on the same computer or on other client computers. Applications can access the cache through the .NET client APIs. The client API uses TCP/IP to communicate with the service.

Figure 10: Deployment Topology

ASP.NET Integration

AppFabric provides a SessionStoreProvider class that plugs into the ASP.NET session storage provider model and stores session state in a partitioned cache. This enables non-sticky routing and ensures that session information is available across the cluster. ASP.NET applications using AppFabric can scale out by adding more nodes, and can increase availability by configuring secondary caches for the session data.

Performance Testing

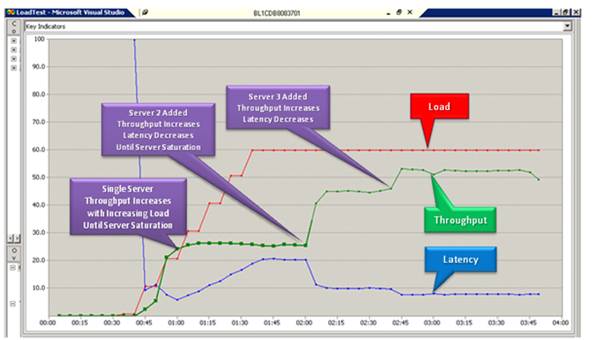

We created a test application to see how well caching in AppFabric scales. The application simulated a large number of users on many distributed client computers. Each user thread performed operations such as Get, Put, and Remove on a 20K payload. We plotted the throughput as the load gradually increased and as new servers were added.

Figure 11 shows the benefits of caching very clearly. The throughput increases as the load increases until it reaches saturation and stabilizes. At this point, if we add more servers, load balancing kicks in and the throughput increases again and the response time goes down.

Figure 11: Performance Testing Results

Conclusion

AppFabric can help scale your .NET applications easily and cheaply by allowing you to bring the memory capacity of multiple computers into a single unified cache. In addition, it also helps in dynamically scaling your application as your application needs grow by allowing the dynamic addition of computers to the cluster. AppFabric can store all your application data—reference, activity or resource data—while giving you the performance, scale, and availability that you need.