Create a Data Factory, Blob source, SQL sink and Pipeline

Before deploying the template, do the following:

- Complete the prerequisites described in the Overview and prerequisites article.

- Update the values of the following parameters in the azuredeploy.parameters.json file:

  - storageAccountName
  - storageAccountKey
  - sqlServerName
  - databaseName
  - sqlServerUserName
  - sqlServerPassword
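Before deploying, it can help to confirm that the parameters file actually defines all six values. The following is a minimal bash sketch and is not one of the repository's scripts; the function name `check_parameters_file` is hypothetical, and it assumes the standard ARM parameters layout, where each parameter name appears as a quoted JSON key. It only checks that the keys are present, not that their values are valid.

```bash
# Hypothetical helper (not part of the sample): report which of the six
# required parameters are missing from an ARM parameters file.
# Returns 0 if all are present, 1 if any are missing, 2 if the file is absent.
check_parameters_file() {
  local file="${1:-azuredeploy.parameters.json}"
  local missing=0 name

  if [ ! -f "$file" ]; then
    echo "File not found: $file" >&2
    return 2
  fi

  for name in storageAccountName storageAccountKey sqlServerName \
              databaseName sqlServerUserName sqlServerPassword; do
    # Match the name as a quoted JSON key, e.g. "sqlServerName":
    if ! grep -q "\"$name\"[[:space:]]*:" "$file"; then
      echo "Missing parameter: $name"
      missing=1
    fi
  done

  return "$missing"
}
```

For example, `check_parameters_file 101-data-factory-blob-to-sql-copy/azuredeploy.parameters.json` prints one line per missing parameter. Because this is plain text matching rather than JSON parsing, it will not catch malformed JSON.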

When you deploy this Azure Resource Manager template, it creates a data factory with the following entities:

- Azure Storage linked service

- Azure SQL Database linked service

- Azure Blob dataset

- Azure SQL dataset

- Pipeline with a copy activity
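To see how these entities are declared, you can list the distinct `type` values in the template file. This is a rough text-matching sketch rather than a JSON parser; `list_template_types` is a hypothetical name, and it assumes the template sits in the sample folder as `azuredeploy.json`. On this template, the output would be expected to overlap with the Tags line at the end of this article (for example `linkedservices`, `datasets`, `AzureBlob`, `Copy`).

```bash
# Hypothetical helper: print the distinct "type" values declared in a
# template file, one per line, sorted in byte order.
list_template_types() {
  local file="${1:-azuredeploy.json}"
  # Extract every "type": "<value>" pair (several per line are allowed),
  # keep only <value>, then de-duplicate.
  grep -o '"type"[[:space:]]*:[[:space:]]*"[^"]*"' "$file" \
    | sed 's/.*"\([^"]*\)"$/\1/' \
    | LC_ALL=C sort -u
}
```

For example, `list_template_types 101-data-factory-blob-to-sql-copy/azuredeploy.json`. The output also includes parameter types such as `string`, since those use the same `"type"` key.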

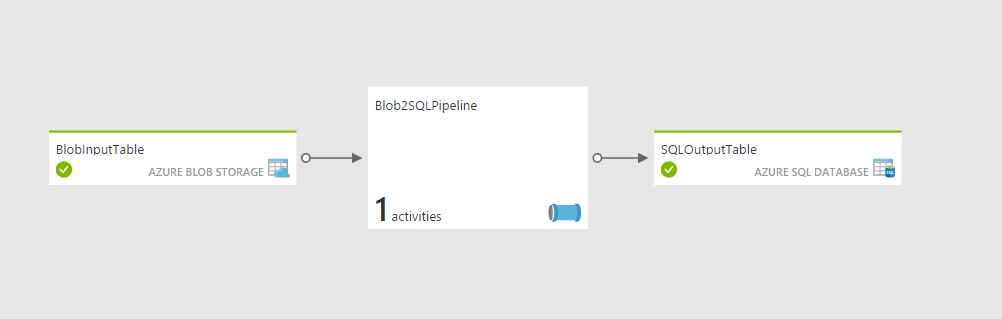

The copy activity in the pipeline copies data from an Azure blob to an Azure SQL database. You should see a diagram similar to the following one in the diagram view of the data factory.

Deploying the sample

You can deploy this sample directly through the Azure Portal or by using the scripts supplied in the root of the repository.

To deploy a sample using the Azure Portal, click the Deploy to Azure button at the top of the article.

To deploy the sample from the command line, use the script that matches your tooling: Deploy-AzureResourceGroup.ps1 for Azure PowerShell or azure-group-deploy.sh for the Azure CLI.

Run the script and pass in the folder name of the sample. For example:

Azure PowerShell:

```powershell
.\Deploy-AzureResourceGroup.ps1 -ResourceGroupLocation 'eastus' -ArtifactStagingDirectory 101-data-factory-blob-to-sql-copy
```

Azure CLI:

```bash
azure-group-deploy.sh -a 101-data-factory-blob-to-sql-copy -l eastus
```
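If you prefer to call the Azure CLI directly instead of the repository script, the deployment roughly comes down to creating a resource group and deploying the template with its parameters file. The following is a minimal bash sketch, assuming Azure CLI 2.x (`az`) and that you have already signed in with `az login`; the function name `deploy_sample`, the `DRY_RUN` variable, and the resource group name you pass are all hypothetical. The repository script may handle details this sketch skips.

```bash
# Hypothetical alternative to azure-group-deploy.sh: deploy a sample folder
# directly with the Azure CLI. Set DRY_RUN=1 to print the az commands
# instead of running them.
deploy_sample() {
  local folder="$1" location="$2" group="$3"
  local f run=""

  if [ -z "$folder" ] || [ -z "$location" ] || [ -z "$group" ]; then
    echo "usage: deploy_sample <sample-folder> <location> <resource-group>" >&2
    return 2
  fi

  # The sample folder is expected to hold the template and its parameters.
  for f in azuredeploy.json azuredeploy.parameters.json; do
    if [ ! -f "$folder/$f" ]; then
      echo "Missing $folder/$f" >&2
      return 1
    fi
  done

  # In dry-run mode, prefix each command with echo so nothing is created.
  if [ "${DRY_RUN:-0}" = "1" ]; then
    run="echo"
  fi

  $run az group create --name "$group" --location "$location" &&
  $run az deployment group create \
    --resource-group "$group" \
    --template-file "$folder/azuredeploy.json" \
    --parameters "@$folder/azuredeploy.parameters.json"
}
```

For example, `DRY_RUN=1 deploy_sample 101-data-factory-blob-to-sql-copy eastus my-adf-rg` prints the two commands; without `DRY_RUN`, it creates real Azure resources.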

`Tags: Microsoft.DataFactory/datafactories, linkedservices, AzureStorage, AzureSqlDatabase, datasets, AzureBlob, string, TextFormat, AzureSqlTable, datapipelines, Copy, BlobSource, SqlSink, TabularTranslator`