Events

Mar 31, 11 PM - Apr 2, 11 PM

The biggest SQL, Fabric and Power BI learning event. March 31 – April 2. Use code FABINSIDER to save $400.

Register today

Applies to:

SQL Server

SSIS Integration Runtime in Azure Data Factory

The Hadoop Connection Manager enables a SQL Server Integration Services (SSIS) package to connect to a Hadoop cluster by using the values you specify for its properties.

In the Add SSIS Connection Manager dialog box, select Hadoop > Add. The Hadoop Connection Manager Editor dialog box opens.

To configure related Hadoop cluster information, choose the WebHCat or WebHDFS tab in the left pane.

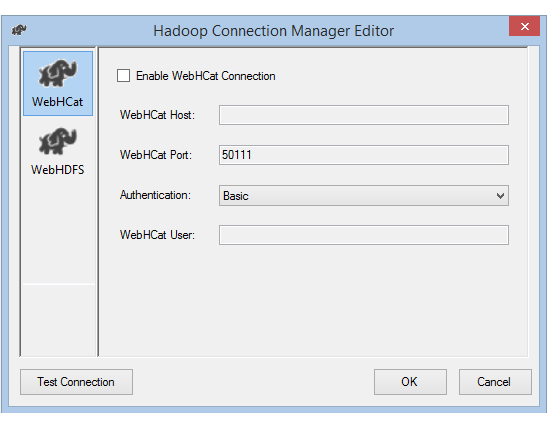

If you enable the WebHCat option to invoke a Hive or Pig job on Hadoop, do the following:

For WebHCat Host, enter the server that hosts the WebHCat service.

For WebHCat Port, enter the port of the WebHCat service, which by default is 50111.

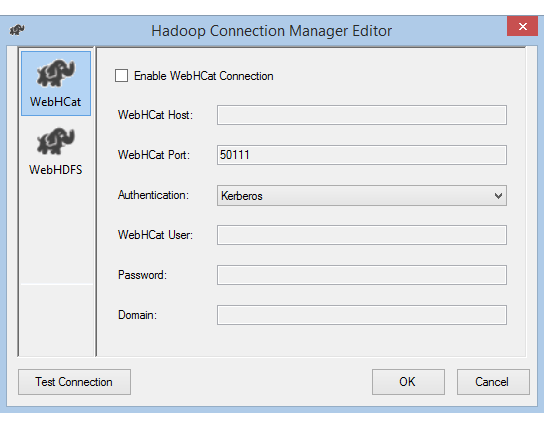

Select the Authentication method for accessing the WebHCat service. The available values are Basic and Kerberos.

For WebHCat User, enter the user authorized to access WebHCat.

If you select Kerberos authentication, enter the user's Password and Domain.
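To see what the WebHCat Host and WebHCat Port values identify, the following Python sketch builds the URL of the WebHCat (Templeton) REST status resource and, optionally, requests it. It is a standalone check, not what the connection manager does internally. The helper names are hypothetical, and the probe assumes the service accepts an unauthenticated HTTP request, so it does not cover Kerberos.

```python
import json
import urllib.request


def webhcat_status_url(host: str, port: int = 50111) -> str:
    """Return the URL of the WebHCat (Templeton) status resource.

    50111 mirrors the default WebHCat port described above.
    """
    return f"http://{host}:{port}/templeton/v1/status"


def probe_webhcat(host: str, port: int = 50111, timeout: float = 5.0) -> dict:
    """GET the status resource and decode its JSON body.

    Assumes plain HTTP without Kerberos (SPNEGO) negotiation; a
    Kerberos-secured service would typically reject this request.
    """
    url = webhcat_status_url(host, port)
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Placeholder host name; replace it with your WebHCat host.
    print(webhcat_status_url("hadoop-head.example.com"))
```

A healthy WebHCat server typically answers the status request with a small JSON document whose status field is "ok".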

If you enable the WebHDFS option to copy data from or to HDFS, do the following:

For WebHDFS Host, enter the server that hosts the WebHDFS service.

For WebHDFS Port, enter the port of the WebHDFS service, which by default is 50070.

Select the Authentication method for accessing the WebHDFS service. The available values are Basic and Kerberos.

For WebHDFS User, enter the user authorized to access HDFS.

If you select Kerberos authentication, enter the user's Password and Domain.
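Similarly, the WebHDFS Host and WebHDFS Port values form the base of WebHDFS REST URLs. This Python sketch builds one. The helper name is hypothetical, and passing the user through the user.name query parameter is an assumption that only fits clusters using simple (non-Kerberos) authentication.

```python
import urllib.parse
from typing import Optional


def webhdfs_url(host: str, path: str, op: str, port: int = 50070,
                user: Optional[str] = None) -> str:
    """Return a WebHDFS REST URL such as .../webhdfs/v1/<path>?op=LISTSTATUS.

    The optional user.name parameter identifies the caller under simple
    (non-Kerberos) authentication.
    """
    if not path.startswith("/"):
        raise ValueError("HDFS path must be absolute, for example /user/alice")
    params = {"op": op}
    if user:
        params["user.name"] = user
    return (f"http://{host}:{port}/webhdfs/v1{urllib.parse.quote(path)}"
            f"?{urllib.parse.urlencode(params)}")


if __name__ == "__main__":
    # Placeholder values; substitute your WebHDFS host, path, and user.
    print(webhdfs_url("namenode.example.com", "/user/alice", "LISTSTATUS", user="alice"))
```

Port 50070 is the NameNode HTTP default on Hadoop 2.x; Hadoop 3.x clusters typically use 9870, so pass port= to match your cluster.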

Select Test Connection. (Only the connection that you enabled is tested.)

Select OK to close the dialog box.

There are two options to set up the on-premises environment so you can use Kerberos authentication with the Hadoop Connection Manager. You can choose the option that better fits your circumstances.

Option 1: Join the SSIS computer to the Kerberos realm

On the SSIS computer:

Run the Ksetup utility to configure the Kerberos Key Distribution Center (KDC) server and realm.

The computer must be configured as a member of a workgroup, because a Kerberos realm is different from a Windows domain. Set the Kerberos realm and add a KDC server, as shown in the following example. Replace REALM.COM with your own respective realm, as needed.

C:> Ksetup /setdomain REALM.COM

C:> Ksetup /addkdc REALM.COM <your_kdc_server_address>

After running these commands, restart the computer.

Verify the configuration by running the Ksetup command. The output should look like the following sample:

C:> Ksetup

default realm = REALM.COM (external)

REALM.com:

kdc = <your_kdc_server_address>
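If you want to check the Ksetup output from a script instead of by eye, the following Python sketch parses text laid out like the sample above. The function name and the placeholder KDC host kdc01.realm.com are hypothetical, and real Ksetup output may contain additional lines, which this sketch ignores.

```python
import re
from typing import Dict, List, Optional, Tuple


def parse_ksetup_output(text: str) -> Tuple[Optional[str], Dict[str, List[str]]]:
    """Extract the default realm and each realm's KDC list from ksetup output.

    Assumes the layout of the sample above. Realm names are upper-cased so
    that "REALM.com:" and "REALM.COM" compare equal (a simplifying choice).
    """
    default_realm: Optional[str] = None
    kdcs: Dict[str, List[str]] = {}
    current: Optional[str] = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        match = re.match(r"default realm\s*=\s*(\S+)", line, re.IGNORECASE)
        if match:
            default_realm = match.group(1).upper()
            continue
        if line.endswith(":"):
            # A line such as "REALM.com:" opens the section for one realm.
            current = line[:-1].upper()
            kdcs.setdefault(current, [])
            continue
        match = re.match(r"kdc\s*=\s*(\S+)", line, re.IGNORECASE)
        if match and current is not None:
            kdcs[current].append(match.group(1))
    return default_realm, kdcs


# Sample shaped like the output above; the KDC host is a placeholder.
SAMPLE = """default realm = REALM.COM (external)
REALM.com:
        kdc = kdc01.realm.com
"""

if __name__ == "__main__":
    print(parse_ksetup_output(SAMPLE))
```

On the SSIS computer, you could pass it the captured output of subprocess.run(["ksetup"], capture_output=True, text=True).stdout and compare the result with the realm and KDC you configured.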

Option 2: Enable mutual trust between the Windows domain and the Kerberos realm

Note

Replace REALM.COM and AD.COM in the following steps with your own Kerberos realm and Windows domain, as needed.

On the KDC server:

Edit the KDC configuration in the krb5.conf file (by default, /etc/krb5.conf) so that the KDC trusts the Windows domain. Use the following configuration template as a reference.

[logging]

default = FILE:/var/log/krb5libs.log

kdc = FILE:/var/log/krb5kdc.log

admin_server = FILE:/var/log/kadmind.log

[libdefaults]

default_realm = REALM.COM

dns_lookup_realm = false

dns_lookup_kdc = false

ticket_lifetime = 24h

renew_lifetime = 7d

forwardable = true

[realms]

REALM.COM = {

kdc = node.REALM.COM

admin_server = node.REALM.COM

}

AD.COM = {

kdc = windc.ad.com

admin_server = windc.ad.com

}

[domain_realm]

.REALM.COM = REALM.COM

REALM.COM = REALM.COM

.ad.com = AD.COM

ad.com = AD.COM

[capaths]

AD.COM = {

REALM.COM = .

}
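The [domain_realm] section decides which realm a host name belongs to, which in turn determines which KDC issues tickets for it. The following Python sketch approximates that lookup for the entries in the template: a key without a leading period matches only that exact host, and a key with a leading period matches hosts inside that domain. It is a simplified model for illustration, not the Kerberos library's implementation, and it ignores [capaths] and DNS-based lookup. The function name is hypothetical.

```python
from typing import Dict, Optional

# Entries copied from the [domain_realm] section of the template above.
DOMAIN_REALM: Dict[str, str] = {
    ".REALM.COM": "REALM.COM",
    "REALM.COM": "REALM.COM",
    ".ad.com": "AD.COM",
    "ad.com": "AD.COM",
}


def realm_for_host(host: str, mapping: Dict[str, str] = DOMAIN_REALM) -> Optional[str]:
    """Simplified [domain_realm] lookup.

    A key without a leading period matches that exact host; a key with a
    leading period matches hosts inside that domain, and the longest such
    suffix wins. Keys and host are compared in lower case.
    """
    table = {key.lower(): realm for key, realm in mapping.items()}
    host = host.lower().rstrip(".")
    if host in table:
        return table[host]
    labels = host.split(".")
    # Try ".b.c" before ".c" for host "a.b.c", so the most specific domain wins.
    for i in range(1, len(labels)):
        suffix = "." + ".".join(labels[i:])
        if suffix in table:
            return table[suffix]
    return None
```

For example, node.REALM.COM resolves to REALM.COM through the .REALM.COM entry, while windc.ad.com resolves to AD.COM.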

Restart the KDC service after configuration.

Create a principal named krbtgt/REALM.COM@AD.COM on the KDC server by using the following command:

Kadmin> addprinc krbtgt/REALM.COM@AD.COM

In the HDFS service configuration, add RULE:[1:$1@$0](.*@AD.COM)s/@.*// to the hadoop.security.auth_to_local property (usually set in core-site.xml).
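The auth_to_local rule above turns a principal from the Windows domain into a local Hadoop user name: [1:$1@$0] rebuilds a single-component principal as name@REALM, (.*@AD.COM) keeps only AD.COM principals, and s/@.*// strips the realm. The following Python sketch evaluates that one rule so you can check which principals it maps. It is a simplified emulation of Hadoop's rule syntax (flags such as /g and /L are not modeled), and the function names are hypothetical.

```python
import re
from typing import List, Optional, Tuple


def parse_principal(principal: str) -> Tuple[List[str], str]:
    """Split "primary/instance@REALM" into (["primary", "instance"], "REALM")."""
    name, sep, realm = principal.rpartition("@")
    if not sep or not name or not realm:
        raise ValueError(f"expected name@REALM, got {principal!r}")
    return name.split("/"), realm


def apply_rule(principal: str, num_components: int, fmt: str,
               match_regex: str, sed_pattern: str, sed_replacement: str) -> Optional[str]:
    """Evaluate one RULE:[n:fmt](regex)s/pattern/replacement/ entry.

    Returns the local user name, or None when the rule does not apply.
    """
    components, realm = parse_principal(principal)
    if len(components) != num_components:
        return None
    # $0 is the realm; $1..$n are the principal's name components.
    base = re.sub(
        r"\$(\d)",
        lambda m: realm if m.group(1) == "0" else components[int(m.group(1)) - 1],
        fmt,
    )
    # The whole rebuilt name must match the filter regex.
    if not re.fullmatch(match_regex, base):
        return None
    # Like sed without /g, replace only the first match.
    return re.sub(sed_pattern, sed_replacement, base, count=1)


# The rule from the step above: RULE:[1:$1@$0](.*@AD.COM)s/@.*//
AD_RULE = (1, "$1@$0", r".*@AD.COM", r"@.*", "")

if __name__ == "__main__":
    print(apply_rule("alice@AD.COM", *AD_RULE))
```

With this rule, alice@AD.COM maps to the local user alice, while principals from other realms, or with more than one name component, are left for other rules to handle.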

On the domain controller:

Run the following Ksetup commands to add a realm entry:

C:> Ksetup /addkdc REALM.COM <your_kdc_server_address>

C:> ksetup /addhosttorealmmap HDFS-service-FQDN REALM.COM

Establish trust from the Windows domain to the Kerberos realm. In the following example, [password] is the password for the principal krbtgt/REALM.COM@AD.COM.

C:> netdom trust REALM.COM /Domain:AD.COM /add /realm /password:[password]

Select an encryption algorithm to use with Kerberos.

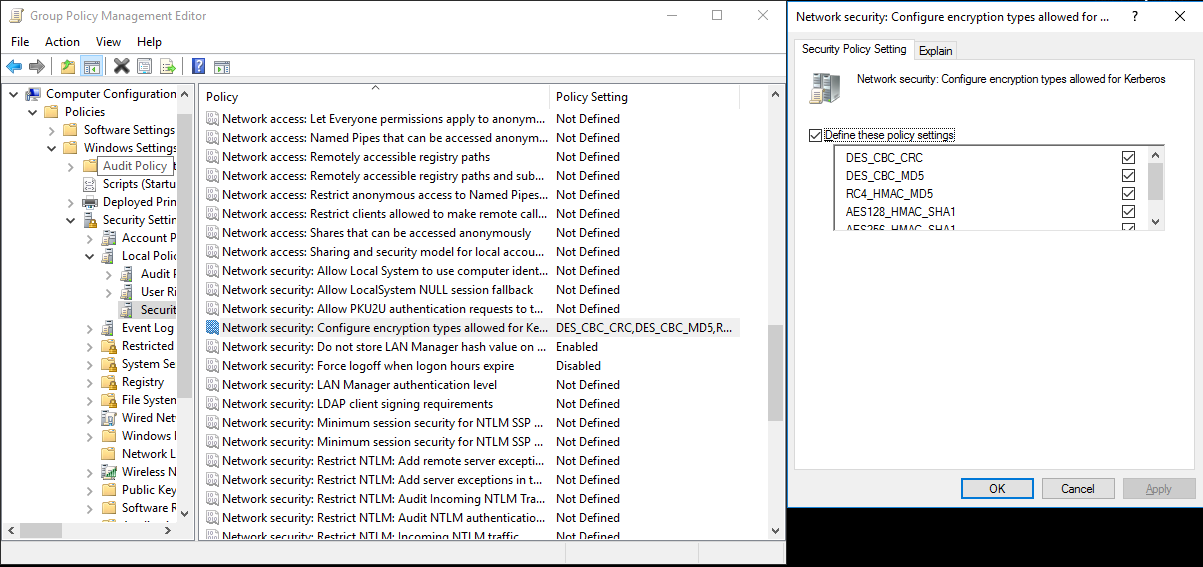

Go to Server Manager > Group Policy Management > Domain. From there, go to Group Policy Objects > Default or Active Domain Policy > Edit.

In the Group Policy Management Editor window, go to Computer Configuration > Policies > Windows Settings. From there, go to Security Settings > Local Policies > Security Options, and configure the Network security: Configure encryption types allowed for Kerberos policy.

Select the encryption algorithm you want to use to connect to the KDC. Typically you can select any of the options.

Use the Ksetup command to specify the encryption algorithms to use for the realm.

C:> ksetup /SetEncTypeAttr REALM.COM DES-CBC-CRC DES-CBC-MD5 RC4-HMAC-MD5 AES128-CTS-HMAC-SHA1-96 AES256-CTS-HMAC-SHA1-96
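If you script this step, the following Python sketch builds the argument list for the command above. The helper name is hypothetical, and the check against policy_allowed reflects an assumption: that the realm's list should stay within the encryption types you enabled in the Group Policy setting. The sketch only builds the arguments; on the Windows computer you could run them with subprocess.run(args, check=True).

```python
from typing import Iterable, List

# The encryption type names used in the ksetup command above.
KNOWN_ENC_TYPES = (
    "DES-CBC-CRC",
    "DES-CBC-MD5",
    "RC4-HMAC-MD5",
    "AES128-CTS-HMAC-SHA1-96",
    "AES256-CTS-HMAC-SHA1-96",
)


def set_enc_type_attr_args(realm: str, enc_types: Iterable[str],
                           policy_allowed: Iterable[str] = KNOWN_ENC_TYPES) -> List[str]:
    """Build the argument list for `ksetup /SetEncTypeAttr`.

    Rejects names outside KNOWN_ENC_TYPES and types missing from
    policy_allowed (the types enabled in the Group Policy setting).
    """
    chosen = list(enc_types)
    if not chosen:
        raise ValueError("select at least one encryption type")
    unknown = [t for t in chosen if t not in KNOWN_ENC_TYPES]
    if unknown:
        raise ValueError(f"unknown encryption types: {unknown}")
    allowed = set(policy_allowed)
    blocked = [t for t in chosen if t not in allowed]
    if blocked:
        raise ValueError(f"not allowed by the Kerberos encryption policy: {blocked}")
    return ["ksetup", "/SetEncTypeAttr", realm, *chosen]
```

Building a list rather than a single command string avoids quoting problems when the arguments are passed to subprocess.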

To use the Kerberos principal in the Windows domain, create the mapping between the domain account and Kerberos principal.

Go to Administrative Tools > Active Directory Users and Computers.

Configure advanced features by selecting View > Advanced Features.

Locate the account for which you want to create mappings, right-click it and select Name Mappings, and then select the Kerberos Names tab.

Add a principal from the realm.
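As an alternative to the dialog, the mapping can also be expressed as an LDIF change record and imported with a tool such as ldifde. The following Python sketch generates one. It assumes, as is typical, that the Kerberos Names tab stores each mapping in the account's altSecurityIdentities attribute as Kerberos:<principal>. The function name, account DN, and principal are hypothetical, so compare the result with a mapping created in the dialog before importing it.

```python
def kerberos_mapping_ldif(account_dn: str, principal: str) -> str:
    """Return an LDIF modify record that adds a Kerberos name mapping.

    Assumes the mapping lives in altSecurityIdentities as "Kerberos:<principal>".
    """
    name, sep, realm = principal.rpartition("@")
    if not (name and sep and realm):
        raise ValueError(f"expected name@REALM, got {principal!r}")
    return "\n".join([
        f"dn: {account_dn}",
        "changetype: modify",
        "add: altSecurityIdentities",
        f"altSecurityIdentities: Kerberos:{principal}",
        "-",
        "",
    ])


if __name__ == "__main__":
    # Hypothetical account DN and principal; substitute your own.
    print(kerberos_mapping_ldif("CN=Alice,CN=Users,DC=ad,DC=com", "alice@REALM.COM"))
```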

On the gateway computer, run the following Ksetup commands to add a realm entry.

C:> Ksetup /addkdc REALM.COM <your_kdc_server_address>

C:> ksetup /addhosttorealmmap HDFS-service-FQDN REALM.COM
