Note

Access to this page requires authorization. You can try signing in or changing directories.

Access to this page requires authorization. You can try changing directories.

Applies to:

Azure Synapse Analytics

Create a SQL Server Integration Services (SSIS) package to load data into a dedicated SQL pool in Azure Synapse Analytics. You can optionally restructure, transform, and cleanse the data as it passes through the SSIS data flow.

This article shows you how to do the following things:

- Create a new Integration Services project in Visual Studio.

- Design an SSIS package that loads data from the source into the destination.

- Run the SSIS package to load the data.

Basic concepts

The package is the basic unit of work in SSIS. Related packages are grouped in projects. You create projects and design packages in Visual Studio with SQL Server Data Tools. The design process is a visual process in which you drag and drop components from the Toolbox to the design surface, connect them, and set their properties. After you finish your package, you can run it, and you can optionally deploy it to SQL Server or SQL Database for comprehensive management, monitoring, and security.

A detailed introduction to SSIS is beyond the scope of this article. To learn more, see the following articles:

Options for loading data into Azure Synapse Analytics with SSIS

SQL Server Integration Services (SSIS) is a flexible set of tools that provides various options for connecting to, and loading data into, Azure Synapse Analytics.

The preferred method, which provides the best performance, is to create a package that uses the Azure SQL DW Upload Task to load the data. This task encapsulates both source and destination information. It assumes that your source data is stored locally in delimited text files.

Alternately, you can create a package that uses a Data Flow task that contains a source and a destination. This approach supports a wide range of data sources, including SQL Server and Azure Synapse Analytics.

Prerequisites

To step through this tutorial, you need the following things:

SQL Server Integration Services (SSIS). SSIS is a component of SQL Server and requires a licensed version, or the developer or evaluation version, of SQL Server. To get an evaluation version of SQL Server, see Evaluate SQL Server.

Visual Studio (optional). To get the free Visual Studio Community Edition, see Visual Studio Community. If you don't want to install Visual Studio, you can install SQL Server Data Tools (SSDT) only. SSDT installs a version of Visual Studio with limited functionality.

SQL Server Data Tools for Visual Studio (SSDT). To get SQL Server Data Tools for Visual Studio, see Download SQL Server Data Tools (SSDT).

An Azure Synapse Analytics database and permissions. This tutorial connects to a dedicated SQL pool in Azure Synapse Analytics instance and loads data into it. You have to have permission to connect, to create a table, and to load data.

Create a new Integration Services project

Launch Visual Studio.

On the File menu, select New | Project.

Navigate to the Installed | Templates | Business Intelligence | Integration Services project types.

Select Integration Services Project. Provide values for Name and Location, and then select OK.

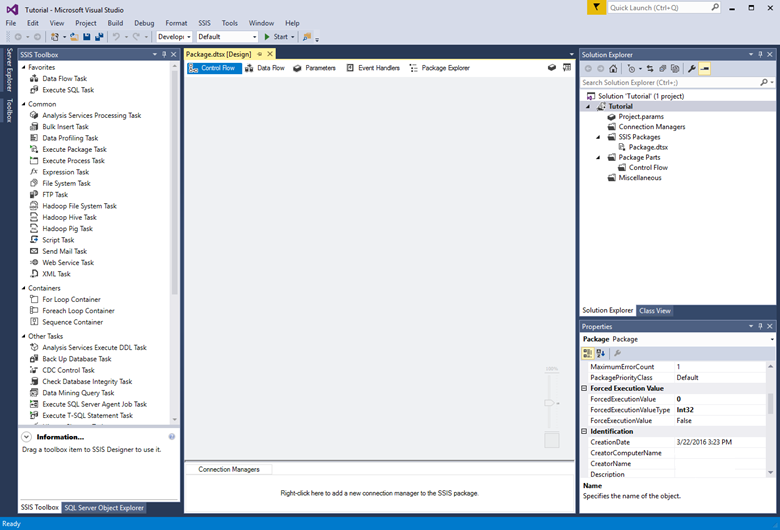

Visual Studio opens and creates a new Integration Services (SSIS) project. Then Visual Studio opens the designer for the single new SSIS package (Package.dtsx) in the project. You see the following screen areas:

On the left, the Toolbox of SSIS components.

In the middle, the design surface, with multiple tabs. You typically use at least the Control Flow and the Data Flow tabs.

On the right, the Solution Explorer and the Properties panes.

Option 1 - Use the SQL DW Upload task

The first approach is a package that uses the SQL DW Upload task. This task encapsulates both source and destination information. It assumes that your source data is stored in delimited text files, either locally or in Azure Blob Storage.

Prerequisites for Option 1

To continue the tutorial with this option, you need the following things:

The Microsoft SQL Server Integration Services Feature Pack for Azure. The SQL DW Upload task is a component of the Feature Pack.

An Azure Blob Storage account. The SQL DW Upload task loads data from Azure Blob Storage into Azure Synapse Analytics. You can load files that are already in Blob Storage, or you can load files from your computer. If you select files on your computer, the SQL DW Upload task uploads them to Blob Storage first for staging, and then loads them into your dedicated SQL pool.

Add and configure the SQL DW Upload Task

Drag a SQL DW Upload Task from the Toolbox to the center of the design surface (on the Control Flow tab).

Double-click the task to open the SQL DW Upload Task Editor.

Configure the task with the help of the guidance in the article Azure SQL DW Upload Task. Since this task encapsulates both source and destination information, and the mappings between source and destination tables, the task editor has several pages of settings to configure.

Create a similar solution manually

For more control, you can manually create a package that emulates the work done by the SQL DW Upload task.

Use the Azure Blob Upload Task to stage the data in Azure Blob Storage. To get the Azure Blob Upload task, download the Microsoft SQL Server Integration Services Feature Pack for Azure.

Then use the SSIS Execute SQL task to launch a PolyBase script that loads the data into your dedicated SQL pool. For an example that loads data from Azure Blob Storage into dedicated SQL pool (but not with SSIS), see Tutorial: Load data to Azure Synapse Analytics.

Option 2 - Use a source and destination

The second approach is a typical package which uses a Data Flow task that contains a source and a destination. This approach supports a wide range of data sources, including SQL Server and Azure Synapse Analytics.

This tutorial uses SQL Server as the data source. SQL Server runs on premises or on an Azure virtual machine.

To connect to SQL Server and to a dedicated SQL pool, you can use an ADO.NET connection manager and source and destination, or an OLE DB connection manager and source and destination. This tutorial uses ADO.NET because it has the fewest configuration options. OLE DB might provide slightly better performance than ADO.NET.

As a shortcut, you can use the SQL Server Import and Export Wizard to create the basic package. Then, save the package, and open it in Visual Studio or SSDT to view and customize it. For more info, see Import and Export Data with the SQL Server Import and Export Wizard.

Prerequisites for Option 2

To continue the tutorial with this option, you need the following things:

Sample data. This tutorial uses sample data stored in SQL Server in the AdventureWorks sample database as the source data to be loaded into a dedicated SQL pool. To get the AdventureWorks sample database, see AdventureWorks Sample Databases.

A firewall rule. You have to create a firewall rule on your dedicated SQL pool with the IP address of your local computer before you can upload data to the dedicated SQL pool.

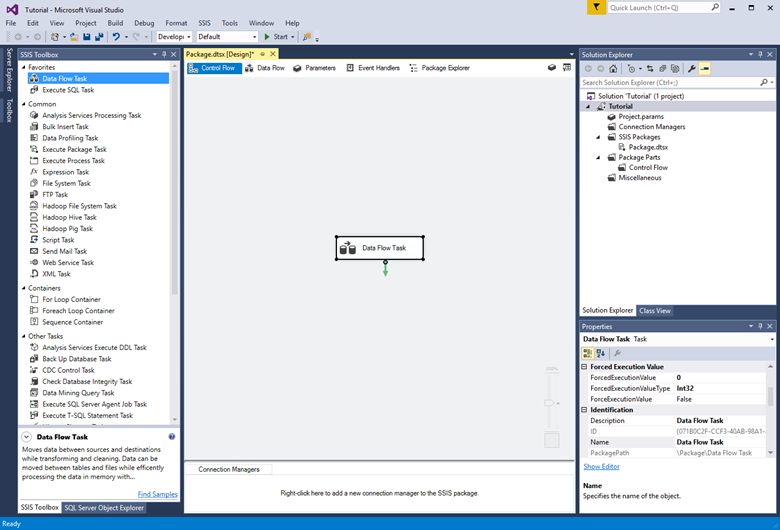

Create the basic data flow

Drag a Data Flow Task from the Toolbox to the center of the design surface (on the Control Flow tab).

Double-click the Data Flow Task to switch to the Data Flow tab.

From the Other Sources list in the Toolbox, drag an ADO.NET Source to the design surface. With the source adapter still selected, change its name to SQL Server source in the Properties pane.

From the Other Destinations list in the Toolbox, drag an ADO.NET Destination to the design surface under the ADO.NET Source. With the destination adapter still selected, change its name to SQL DW destination in the Properties pane.

Configure the source adapter

Double-click the source adapter to open the ADO.NET Source Editor.

On the Connection Manager tab of the ADO.NET Source Editor, select the New button next to the ADO.NET connection manager list to open the Configure ADO.NET Connection Manager dialog box and create connection settings for the SQL Server database from which this tutorial loads data.

In the Configure ADO.NET Connection Manager dialog box, select the New button to open the Connection Manager dialog box and create a new data connection.

In the Connection Manager dialog box, do the following things.

For Provider, select the SqlClient Data Provider.

For Server name, enter the SQL Server name.

In the Log on to the server section, select or enter authentication information.

In the Connect to a database section, select the AdventureWorks sample database.

Select Test Connection.

In the dialog box that reports the results of the connection test, select OK to return to the Connection Manager dialog box.

In the Connection Manager dialog box, select OK to return to the Configure ADO.NET Connection Manager dialog box.

In the Configure ADO.NET Connection Manager dialog box, select OK to return to the ADO.NET Source Editor.

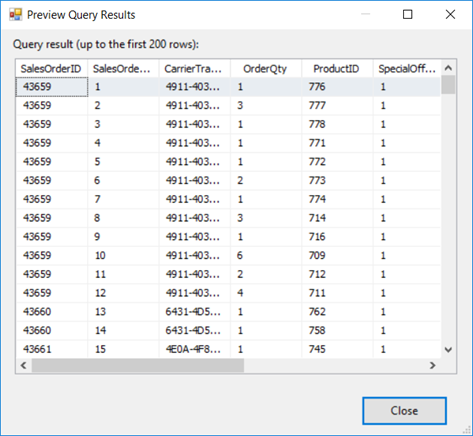

In the ADO.NET Source Editor, in the Name of the table or the view list, select the Sales.SalesOrderDetail table.

Select Preview to see the first 200 rows of data in the source table in the Preview Query Results dialog box.

In the Preview Query Results dialog box, select Close to return to the ADO.NET Source Editor.

In the ADO.NET Source Editor, select OK to finish configuring the data source.

Connect the source adapter to the destination adapter

Select the source adapter on the design surface.

Select the blue arrow that extends from the source adapter and drag it to the destination editor until it snaps into place.

In a typical SSIS package, you use several other components from the SSIS Toolbox in between the source and the destination to restructure, transform, and cleanse your data as it passes through the SSIS data flow. To keep this example as simple as possible, we're connecting the source directly to the destination.

Configure the destination adapter

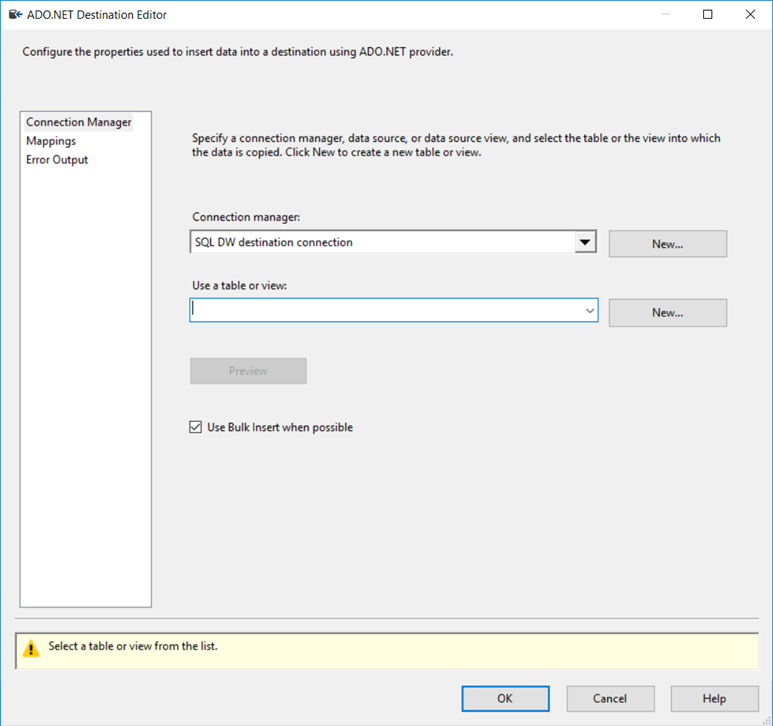

Double-click the destination adapter to open the ADO.NET Destination Editor.

On the Connection Manager tab of the ADO.NET Destination Editor, select the New button next to the Connection manager list to open the Configure ADO.NET Connection Manager dialog box and create connection settings for the Azure Synapse Analytics database into which this tutorial loads data.

In the Configure ADO.NET Connection Manager dialog box, select the New button to open the Connection Manager dialog box and create a new data connection.

In the Connection Manager dialog box, do the following things.

For Provider, select the SqlClient Data Provider.

For Server name, enter the dedicated SQL pool name.

In the Log on to the server section, select Use SQL Server authentication and enter authentication information.

In the Connect to a database section, select an existing dedicated SQL pool database.

Select Test Connection.

In the dialog box that reports the results of the connection test, select OK to return to the Connection Manager dialog box.

In the Connection Manager dialog box, select OK to return to the Configure ADO.NET Connection Manager dialog box.

In the Configure ADO.NET Connection Manager dialog box, select OK to return to the ADO.NET Destination Editor.

In the ADO.NET Destination Editor, select New next to the Use a table or view list to open the Create Table dialog box to create a new destination table with a column list that matches the source table.

In the Create Table dialog box, do the following things.

Change the name of the destination table to SalesOrderDetail.

Remove the rowguid column. The uniqueidentifier data type isn't supported in dedicated SQL pool.

Change the data type of the LineTotal column to money. The decimal data type isn't supported in dedicated SQL pool. For info about supported data types, see CREATE TABLE (Azure Synapse Analytics, Parallel Data Warehouse).

Select OK to create the table and return to the ADO.NET Destination Editor.

In the ADO.NET Destination Editor, select the Mappings tab to see how columns in the source are mapped to columns in the destination.

Select OK to finish configuring the destination.

Run the package to load the data

Run the package by selecting the Start button on the toolbar or by selecting one of the Run options on the Debug menu.

The following paragraphs describe what you see if you created the package with the second option described in this article, that is, with a data flow containing a source and destination.

As the package begins to run, you see yellow spinning wheels to indicate activity and the number of rows processed so far.

When the package finishes running, you see green check marks to indicate success and the total number of rows of data loaded from the source to the destination.

Congratulations, you've successfully used SQL Server Integration Services to load data into Azure Synapse Analytics.