Events

Mar 31, 11 PM - Apr 2, 11 PM

The biggest SQL, Fabric and Power BI learning event. March 31 – April 2. Use code FABINSIDER to save $400.

Register todayThis browser is no longer supported.

Upgrade to Microsoft Edge to take advantage of the latest features, security updates, and technical support.

Applies to:

SQL Server

The article explains how to use PolyBase on a SQL Server instance to query external data in MongoDB.

If you haven't installed PolyBase, see PolyBase installation.

Before you create a database scoped credential, the database must have a master key to protect the credential. For more information, see CREATE MASTER KEY.

To query the data from a MongoDB data source, you must create external tables to reference the external data. This section provides sample code to create these external tables.

The following Transact-SQL commands are used in this section:

Create a database scoped credential for accessing the MongoDB source.

The following script creates a database scoped credential. Before you run the script update it for your environment:

<credential_name> with a name for the credential.<username> with the user name for the external source.<password> with the appropriate password.CREATE DATABASE SCOPED CREDENTIAL [<credential_name>] WITH IDENTITY = '<username>', Secret = '<password>';

Important

The MongoDB ODBC Connector for PolyBase supports only basic authentication, not Kerberos authentication.

Create an external data source.

The following script creates the external data source. For reference, see CREATE EXTERNAL DATA SOURCE. Before you run the script update it for your environment:

<server> and <port> for your environment.<credential_name> with the name of the credential you created in the previous step.PUSHDOWN = ON or PUSHDOWN = OFF if you want to specify pushdown computation to the external source.CREATE EXTERNAL DATA SOURCE external_data_source_name

WITH (LOCATION = '<mongodb://<server>[:<port>]>'

[ [ , ] CREDENTIAL = <credential_name> ]

[ [ , ] CONNECTION_OPTIONS = '<key_value_pairs>'[,...]]

[ [ , ] PUSHDOWN = { ON | OFF } ])

[ ; ]

Query the external schema in MongoDB.

You can use the Data Virtualization extension for Azure Data Studio to connect to and generate a CREATE EXTERNAL TABLE statement based on the schema detected by the PolyBase ODBC Driver for MongoDB driver. You can also manually customize a script based on the output of the system stored procedure sp_data_source_objects (Transact-SQL). The Data Virtualization extension for Azure Data Studio and sp_data_source_table_columns use the same internal stored procedures to query the external schema schema.

To create external tables to MongoDB collections that contain arrays, the recommendation is to use Data Virtualization extension for Azure Data Studio. The flattening actions are performed automatically by the driver. The sp_data_source_table_columns stored procedure also automatically performs the flattening via the PolyBase ODBC Driver for MongoDB driver.

Create an external table.

If you use the Data Virtualization extension for Azure Data Studio, you can skip this step, as the CREATE EXTERNAL TABLE statement is generated for you. To provide the schema manually, consider the following sample script to create an external table. For reference, see CREATE EXTERNAL TABLE.

Before you run the script, update it for your environment:

friends is a custom data type.system.profile table. Also you can't specify a view because it can't obtain the metadata from it.CREATE EXTERNAL TABLE [MongoDbRandomData](

[_id] NVARCHAR(24) COLLATE SQL_Latin1_General_CP1_CI_AS NOT NULL,

[RandomData_friends_id] INT,

[RandomData_tags] NVARCHAR(MAX) COLLATE SQL_Latin1_General_CP1_CI_AS)

WITH (

LOCATION='MyDb.RandomData',

DATA_SOURCE=[MongoDb])

Optional: Create statistics on an external table.

We recommend creating statistics on external table columns, especially the ones used for joins, filters and aggregates, for optimal query performance.

CREATE STATISTICS statistics_name ON customer (C_CUSTKEY) WITH FULLSCAN;

Important

Once you have created an external data source, you can use the CREATE EXTERNAL TABLE command to create a query-able table over that source.

For an example, see Create an external table for MongoDB.

For information about MongoDB connection options, see MongoDB documentation: Connection String URI Format.

Flattening is enabled for nested and repeated data from MongoDB document collections. User is required to enable create an external table and explicitly specify a relational schema over MongoDB document collections that may have nested and/or repeated data.

JSON nested/repeated data types will be flattened as follows

Object: unordered key/value collection enclosed in curly braces (nested)

SQL Server creates a table column for each object key

Array: ordered values, separated by commas, enclosed in square brackets (repeated)

SQL Server adds a new table row for each array item

SQL Server creates a column per array to store the array item index

Column Name: arrayname_index

Data Type: bigint

There are several potential issues with this technique, two of them being:

An empty repeated field will effectively mask the data contained in the flat fields of the same record

The presence of multiple repeated fields can result in an explosion of the number of produced rows

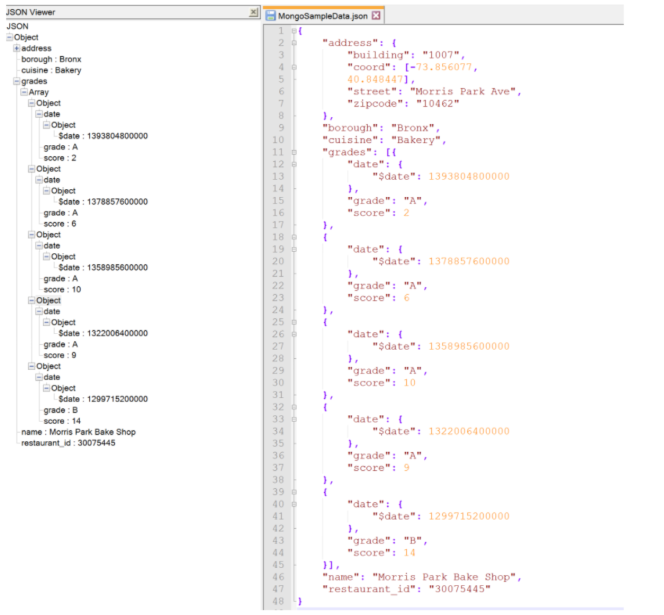

As an example, SQL Server evaluates the MongoDB sample dataset restaurant collection stored in non-relational JSON format. Each restaurant has a nested address field and an array of grades it was assigned on different days. The figure below illustrates a typical restaurant with nested address and nested-repeated grades.

Object address will be flattened as below:

restaurant.address.building becomes restaurant.address_buildingrestaurant.address.coord becomes restaurant.address_coordrestaurant.address.street becomes restaurant.address_streetrestaurant.address.zipcode becomes restaurant.address_zipcodeArray grades will be flattened as below:

| grades_date | grades_grade | games_score |

|---|---|---|

| 1393804800000 | A | 2 |

| 1378857600000 | A | 6 |

| 135898560000 | A | 10 |

| 1322006400000 | A | 9 |

| 1299715200000 | B | 14 |

Using the Cosmos DB Mongo API and the Mongo DB PolyBase connector you can create an external table of a Cosmos DB instance. This accomplished by following the same steps listed above. Make sure the Database scoped credential, Server address, port, and location string reflect that of the Cosmos DB server.

The following example creates an external data source with the following parameters:

| Parameter | Value |

|---|---|

| Name | external_data_source_name |

| Service | mongodb0.example.com |

| Instance | 27017 |

| Replica set | myRepl |

| TLS | true |

| Pushdown computation | On |

CREATE EXTERNAL DATA SOURCE external_data_source_name

WITH (LOCATION = 'mongodb://mongodb0.example.com:27017',

CONNECTION_OPTIONS = 'replicaSet=myRepl; tls=true',

PUSHDOWN = ON ,

CREDENTIAL = credential_name);

For more tutorials on creating external data sources and external tables to a variety of data sources, see PolyBase Transact-SQL reference.

To learn more about PolyBase, see Overview of SQL Server PolyBase.

Events

Mar 31, 11 PM - Apr 2, 11 PM

The biggest SQL, Fabric and Power BI learning event. March 31 – April 2. Use code FABINSIDER to save $400.

Register todayTraining

Module

Get started with the MongoDB API in Azure Cosmos DB - Training

Learn Azure Cosmos DB for MongoDB basics.

Certification

Microsoft Certified: Azure Cosmos DB Developer Specialty - Certifications

Write efficient queries, create indexing policies, manage, and provision resources in the SQL API and SDK with Microsoft Azure Cosmos DB.

Documentation

Access external data: SQL Server - PolyBase - SQL Server

Learn how to use PolyBase on a SQL Server instance to query external data in another SQL Server instance. Create external tables to reference external data.

Performance considerations in PolyBase for SQL Server - SQL Server

Performance considerations for PolyBase in your SQL Server instance.

Access external data: Hadoop - PolyBase - SQL Server

The article uses PolyBase on a SQL Server instance with Hadoop. PolyBase is suited for ad hoc queries of external tables and data import/export.