What is Azure Batch?

Few organizations have the resources to support permanent deployments of high-performance compute platforms that are only occasionally used to capacity. More typically, you need a flexible, scalable compute solution, such as Azure Batch, that provides computational power on demand.

To perform optical character recognition (OCR) on the uploaded pictures of water-purification meters with Azure Batch, you need to understand the main components of an Azure Batch workflow.

Here, you look at what Azure Batch is designed to do, and how Batch components are used in a typical workflow.

Compute-intensive tasks and parallel workloads

Some workloads require massive computational power. Examples include financial risk modeling, 3D image rendering, media transcoding, and genetic sequence analysis. In some cases, these workloads can be broken down into separate subtasks that run in parallel, so the work finishes in much less time. In the ideal case, where the subtasks are independent and overhead is negligible, three virtual machines (VMs) can complete the work of one VM in about a third of the time.

As part of the OCR project for the nonprofit, you can use Azure Batch to partition the work of performing the OCR, allowing the processing to be done in parallel so that it takes much less time to complete.
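To make the idea of partitioning concrete, here is a minimal sketch in plain Python. It doesn't call Azure Batch; the function names `partition` and `ideal_wall_time`, the file names, and the per-image timing are all assumptions chosen for illustration. The estimate also ignores node startup, file transfer, and scheduling overhead, so real runs take longer.

```python
from math import ceil


def partition(items, node_count):
    """Split items into at most node_count contiguous chunks of near-equal size."""
    if node_count < 1:
        raise ValueError("node_count must be at least 1")
    if not items:
        return []
    chunk_size = ceil(len(items) / node_count)
    return [items[i:i + chunk_size] for i in range(0, len(items), chunk_size)]


def ideal_wall_time(item_count, seconds_per_item, node_count):
    """Wall-clock time when every node works through its chunk in parallel.

    Idealized: ignores node startup, file transfer, and scheduling overhead.
    """
    return ceil(item_count / node_count) * seconds_per_item


# Hypothetical file names standing in for the uploaded meter pictures.
images = [f"meter-{n:03d}.png" for n in range(1, 10)]

# One chunk per node; each chunk could become the input of one unit of work.
chunks = partition(images, node_count=3)

# Assumed cost of 2 seconds per image, on one node versus three.
one_node = ideal_wall_time(len(images), seconds_per_item=2, node_count=1)
three_nodes = ideal_wall_time(len(images), seconds_per_item=2, node_count=3)
```

With nine images and three nodes, each node receives three images, and the idealized estimate for three nodes is a third of the single-node estimate.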

The components of Azure Batch

You can use Azure Batch for these large-scale parallel and computationally intensive tasks on Azure. At a high level, Azure Batch involves many components working together. Everything needs to take place within the context of an Azure Batch account, which acts as a container for all Batch resources.

For some use cases, you might associate this account with an Azure Storage account, which holds the input files and applications that tasks download during execution and stores the results they produce. Within your Azure Batch account, you can create pools of virtual compute nodes, running either Windows or Linux. You specify the number and size of the nodes (the size determines each node's CPU and memory), and the Azure Batch service manages and schedules them for you. A Batch account can contain many Batch pools.

Once you create one or more pools, you create individual jobs. A job acts as a logical container for the tasks you schedule, and the tasks in a job can share common properties. As with pools, a Batch account can contain many jobs.

Finally, you create tasks, which describe how the work actually gets done. A task can invoke a command line directly, or it can run an application that you upload to Azure Storage. You'll see all the main components of Azure Batch working together in the next exercise.
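The relationship between these components can be sketched as a small data model. This is an illustration only: the classes below are hypothetical and are not the Azure Batch SDK, and the identifiers, VM size, and `ocr` command line are made-up values.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    task_id: str
    command_line: str  # what a node runs for this task


@dataclass
class Job:
    job_id: str
    pool_id: str  # the pool whose nodes run this job's tasks
    tasks: list = field(default_factory=list)


@dataclass
class Pool:
    pool_id: str
    os: str          # "windows" or "linux"
    node_count: int
    vm_size: str     # determines CPU and memory per node


@dataclass
class BatchAccount:
    """Container for all pools and jobs, mirroring the role of a Batch account."""
    name: str
    pools: dict = field(default_factory=dict)
    jobs: dict = field(default_factory=dict)

    def add_pool(self, pool):
        self.pools[pool.pool_id] = pool

    def add_job(self, job):
        # A job must target a pool that already exists in the account.
        if job.pool_id not in self.pools:
            raise KeyError(f"unknown pool: {job.pool_id}")
        self.jobs[job.job_id] = job


# Illustrative values only.
account = BatchAccount("ocr-account")
account.add_pool(Pool("ocr-pool", os="linux", node_count=3, vm_size="Standard_D2s_v3"))

job = Job("ocr-job", pool_id="ocr-pool")
for n in range(1, 4):
    # Hypothetical command line; each task processes one picture.
    job.tasks.append(Task(f"task-{n}", f"ocr --input meter-{n:03d}.png"))
account.add_job(job)
```

The nesting reflects the order in which you create things: the account first, then pools, then jobs that target a pool, then the tasks inside each job.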

Typical Azure Batch workflow

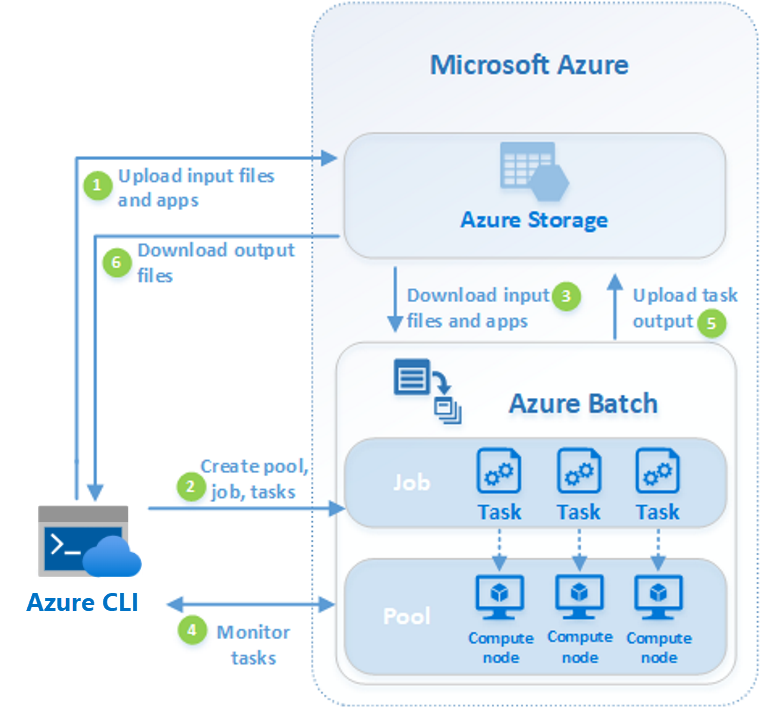

So what does a typical Azure Batch workflow actually look like? As the preceding graphic shows, a typical real-world Azure Batch scenario requires data and application files. The Batch workflow begins by uploading these data and application files to an Azure Storage account. You then create a Batch pool with as many Windows or Linux virtual compute nodes as needed. You can also configure the pool to autoscale if the demands of your workloads vary over time.

The Batch service then brings the nodes online and schedules tasks for execution on them. Before a task begins execution, it can download any data and application files it needs from storage. While the tasks are executing, you can query the status of the nodes and the progress of the tasks. Once the tasks complete, you can examine their output or push it to Azure Storage.
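The scheduling and progress-query steps can be pictured with a toy simulation. This is a sketch under simplifying assumptions, not how the Batch service is implemented: every task takes exactly one round, each node runs one task at a time, and the function name `run_workflow` and the state labels are chosen for the illustration.

```python
from collections import Counter, deque


def run_workflow(task_ids, node_count):
    """Simulate scheduling tasks onto nodes, recording progress each round."""
    if node_count < 1:
        raise ValueError("node_count must be at least 1")

    state = {task_id: "active" for task_id in task_ids}  # queued, not yet started
    queue = deque(task_ids)
    history = []

    while queue or "running" in state.values():
        # Tasks that ran during the previous round finish now.
        for task_id, current in state.items():
            if current == "running":
                state[task_id] = "completed"
        # Each free node picks up the next queued task.
        for _ in range(min(node_count, len(queue))):
            state[queue.popleft()] = "running"
        # Record a progress snapshot, as a client polling the job might see it.
        history.append(dict(Counter(state.values())))

    return state, history


final_state, history = run_workflow([f"task-{n}" for n in range(1, 6)], node_count=2)
for round_number, snapshot in enumerate(history, start=1):
    print(round_number, snapshot)
```

Each snapshot in `history` counts how many tasks are queued, running, or completed at that point, which is the kind of information you query while a real job runs.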