GestureRecognizer Class

Definition

Important

Some information relates to prerelease product that may be substantially modified before it’s released. Microsoft makes no warranties, express or implied, with respect to the information provided here.

Provides gesture and manipulation recognition, event listeners, and settings.

public ref class GestureRecognizer sealed

/// [Windows.Foundation.Metadata.Activatable(65536, Windows.Foundation.UniversalApiContract)]

/// [Windows.Foundation.Metadata.ContractVersion(Windows.Foundation.UniversalApiContract, 65536)]

/// [Windows.Foundation.Metadata.MarshalingBehavior(Windows.Foundation.Metadata.MarshalingType.None)]

class GestureRecognizer final

/// [Windows.Foundation.Metadata.ContractVersion(Windows.Foundation.UniversalApiContract, 65536)]

/// [Windows.Foundation.Metadata.MarshalingBehavior(Windows.Foundation.Metadata.MarshalingType.None)]

/// [Windows.Foundation.Metadata.Activatable(65536, "Windows.Foundation.UniversalApiContract")]

class GestureRecognizer final

/// [Windows.Foundation.Metadata.ContractVersion(Windows.Foundation.UniversalApiContract, 65536)]

/// [Windows.Foundation.Metadata.MarshalingBehavior(Windows.Foundation.Metadata.MarshalingType.None)]

/// [Windows.Foundation.Metadata.Activatable(65536, "Windows.Foundation.UniversalApiContract")]

/// [Windows.Foundation.Metadata.Threading(Windows.Foundation.Metadata.ThreadingModel.Both)]

class GestureRecognizer final

[Windows.Foundation.Metadata.Activatable(65536, typeof(Windows.Foundation.UniversalApiContract))]

[Windows.Foundation.Metadata.ContractVersion(typeof(Windows.Foundation.UniversalApiContract), 65536)]

[Windows.Foundation.Metadata.MarshalingBehavior(Windows.Foundation.Metadata.MarshalingType.None)]

public sealed class GestureRecognizer

[Windows.Foundation.Metadata.ContractVersion(typeof(Windows.Foundation.UniversalApiContract), 65536)]

[Windows.Foundation.Metadata.MarshalingBehavior(Windows.Foundation.Metadata.MarshalingType.None)]

[Windows.Foundation.Metadata.Activatable(65536, "Windows.Foundation.UniversalApiContract")]

public sealed class GestureRecognizer

[Windows.Foundation.Metadata.ContractVersion(typeof(Windows.Foundation.UniversalApiContract), 65536)]

[Windows.Foundation.Metadata.MarshalingBehavior(Windows.Foundation.Metadata.MarshalingType.None)]

[Windows.Foundation.Metadata.Activatable(65536, "Windows.Foundation.UniversalApiContract")]

[Windows.Foundation.Metadata.Threading(Windows.Foundation.Metadata.ThreadingModel.Both)]

public sealed class GestureRecognizer

function GestureRecognizer()

Public NotInheritable Class GestureRecognizer

- Inheritance

- Attributes

Windows requirements

| Requirement | Value |
|---|---|
| Device family | Windows 10 (introduced in 10.0.10240.0) |
| API contract | Windows.Foundation.UniversalApiContract (introduced in v1.0) |

Examples

Here we set up a GestureRecognizer object with a collection of input event handlers for processing both pointer and gesture input. For more information on how to listen to and handle Windows Runtime events, see Events and routed events overview. See the Basic input sample for the full implementation.

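using Windows.Foundation;

using Windows.UI.Input;

using Windows.UI.Xaml;

using Windows.UI.Xaml.Controls;

using Windows.UI.Xaml.Input;

using Windows.UI.Xaml.Media;

class ManipulationInputProcessor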

{

GestureRecognizer recognizer;

UIElement element;

UIElement reference;

TransformGroup cumulativeTransform;

MatrixTransform previousTransform;

CompositeTransform deltaTransform;

public ManipulationInputProcessor(GestureRecognizer gestureRecognizer, UIElement target, UIElement referenceFrame)

{

recognizer = gestureRecognizer;

element = target;

reference = referenceFrame;

// Initialize the transforms that will be used to manipulate the shape

InitializeTransforms();

// The GestureSettings property dictates what manipulation events the

// Gesture Recognizer will listen to. This will set it to a limited

// subset of these events.

recognizer.GestureSettings = GenerateDefaultSettings();

// Set up pointer event handlers. These receive input events that are used by the gesture recognizer.

element.PointerPressed += OnPointerPressed;

element.PointerMoved += OnPointerMoved;

element.PointerReleased += OnPointerReleased;

element.PointerCanceled += OnPointerCanceled;

// Set up event handlers to respond to gesture recognizer output

recognizer.ManipulationStarted += OnManipulationStarted;

recognizer.ManipulationUpdated += OnManipulationUpdated;

recognizer.ManipulationCompleted += OnManipulationCompleted;

recognizer.ManipulationInertiaStarting += OnManipulationInertiaStarting;

}

public void InitializeTransforms()

{

cumulativeTransform = new TransformGroup();

deltaTransform = new CompositeTransform();

previousTransform = new MatrixTransform() { Matrix = Matrix.Identity };

cumulativeTransform.Children.Add(previousTransform);

cumulativeTransform.Children.Add(deltaTransform);

element.RenderTransform = cumulativeTransform;

}

// Return the default GestureSettings for this sample

GestureSettings GenerateDefaultSettings()

{

return GestureSettings.ManipulationTranslateX |

GestureSettings.ManipulationTranslateY |

GestureSettings.ManipulationRotate |

GestureSettings.ManipulationTranslateInertia |

GestureSettings.ManipulationRotateInertia;

}

// Route the pointer pressed event to the gesture recognizer.

// The points are in the reference frame of the canvas that contains the rectangle element.

void OnPointerPressed(object sender, PointerRoutedEventArgs args)

{

// Set the pointer capture to the element being interacted with so that only it

// will fire pointer-related events

element.CapturePointer(args.Pointer);

// Feed the current point into the gesture recognizer as a down event

recognizer.ProcessDownEvent(args.GetCurrentPoint(reference));

}

// Route the pointer moved event to the gesture recognizer.

// The points are in the reference frame of the canvas that contains the rectangle element.

void OnPointerMoved(object sender, PointerRoutedEventArgs args)

{

// Feed the set of points into the gesture recognizer as a move event

recognizer.ProcessMoveEvents(args.GetIntermediatePoints(reference));

}

// Route the pointer released event to the gesture recognizer.

// The points are in the reference frame of the canvas that contains the rectangle element.

void OnPointerReleased(object sender, PointerRoutedEventArgs args)

{

// Feed the current point into the gesture recognizer as an up event

recognizer.ProcessUpEvent(args.GetCurrentPoint(reference));

// Release the pointer

element.ReleasePointerCapture(args.Pointer);

}

// Route the pointer canceled event to the gesture recognizer.

// The points are in the reference frame of the canvas that contains the rectangle element.

void OnPointerCanceled(object sender, PointerRoutedEventArgs args)

{

recognizer.CompleteGesture();

element.ReleasePointerCapture(args.Pointer);

}

// When a manipulation begins, change the color of the object to reflect

// that a manipulation is in progress

void OnManipulationStarted(object sender, ManipulationStartedEventArgs e)

{

Border b = element as Border;

b.Background = new SolidColorBrush(Windows.UI.Colors.DeepSkyBlue);

}

// Process the change resulting from a manipulation

void OnManipulationUpdated(object sender, ManipulationUpdatedEventArgs e)

{

previousTransform.Matrix = cumulativeTransform.Value;

// Get the center point of the manipulation for rotation

Point center = new Point(e.Position.X, e.Position.Y);

deltaTransform.CenterX = center.X;

deltaTransform.CenterY = center.Y;

// Look at the Delta property of the ManipulationUpdatedEventArgs to retrieve

// the rotation, X, and Y changes

deltaTransform.Rotation = e.Delta.Rotation;

deltaTransform.TranslateX = e.Delta.Translation.X;

deltaTransform.TranslateY = e.Delta.Translation.Y;

}

// When a manipulation that's a result of inertia begins, change the color of

// the object to reflect that inertia has taken over

void OnManipulationInertiaStarting(object sender, ManipulationInertiaStartingEventArgs e)

{

Border b = element as Border;

b.Background = new SolidColorBrush(Windows.UI.Colors.RoyalBlue);

}

// When a manipulation has finished, reset the color of the object

void OnManipulationCompleted(object sender, ManipulationCompletedEventArgs e)

{

Border b = element as Border;

b.Background = new SolidColorBrush(Windows.UI.Colors.LightGray);

}

// Modify the GestureSettings property to only allow movement on the X axis

public void LockToXAxis()

{

recognizer.CompleteGesture();

recognizer.GestureSettings |= GestureSettings.ManipulationTranslateY | GestureSettings.ManipulationTranslateX;

recognizer.GestureSettings ^= GestureSettings.ManipulationTranslateY;

}

// Modify the GestureSettings property to only allow movement on the Y axis

public void LockToYAxis()

{

recognizer.CompleteGesture();

recognizer.GestureSettings |= GestureSettings.ManipulationTranslateY | GestureSettings.ManipulationTranslateX;

recognizer.GestureSettings ^= GestureSettings.ManipulationTranslateX;

}

// Modify the GestureSettings property to allow movement on both the X and Y axes

public void MoveOnXAndYAxes()

{

recognizer.CompleteGesture();

recognizer.GestureSettings |= GestureSettings.ManipulationTranslateX | GestureSettings.ManipulationTranslateY;

}

// Modify the GestureSettings property to enable or disable inertia based on the passed-in value

public void UseInertia(bool inertia)

{

if (!inertia)

{

recognizer.CompleteGesture();

// Clear (rather than toggle) the inertia flags so that repeated calls cannot re-enable inertia

recognizer.GestureSettings &= ~(GestureSettings.ManipulationTranslateInertia | GestureSettings.ManipulationRotateInertia);

}

else

{

recognizer.GestureSettings |= GestureSettings.ManipulationTranslateInertia | GestureSettings.ManipulationRotateInertia;

}

}

public void Reset()

{

element.RenderTransform = null;

recognizer.CompleteGesture();

InitializeTransforms();

recognizer.GestureSettings = GenerateDefaultSettings();

}

}
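The sample above changes GestureSettings with bitwise operators. As a minimal standalone sketch of the difference between toggling flags with `^` and clearing them with `& ~`, the following uses a hypothetical stand-in enum, `DemoSettings`, so that it runs without the Windows Runtime. The enum, its member values, and the `FlagDemo` helper are assumptions made for illustration and are not part of the API.

```csharp
using System;

// Hypothetical stand-in for Windows.UI.Input.GestureSettings so the sketch runs
// anywhere. The member values are illustrative, not the real enum values.
[Flags]
enum DemoSettings
{
    None = 0,
    TranslateX = 1,
    TranslateY = 2,
    TranslateInertia = 4,
    RotateInertia = 8
}

static class FlagDemo
{
    // Clears the given flags regardless of their current state.
    public static DemoSettings Clear(DemoSettings current, DemoSettings flags) => current & ~flags;

    // Toggles the given flags: set bits are cleared, clear bits are set.
    public static DemoSettings Toggle(DemoSettings current, DemoSettings flags) => current ^ flags;

    public static void Main()
    {
        var inertia = DemoSettings.TranslateInertia | DemoSettings.RotateInertia;
        var settings = DemoSettings.TranslateX | inertia;

        // Clearing twice has the same effect as clearing once.
        var cleared = Clear(Clear(settings, inertia), inertia);
        Console.WriteLine(cleared);               // prints "TranslateX"

        // Toggling twice restores the original flags.
        var toggled = Toggle(Toggle(settings, inertia), inertia);
        Console.WriteLine(toggled == settings);   // prints "True"
    }
}
```

Toggling is only safe when the current state of the flag is known, which is why the axis-locking methods in the sample set both translation flags before toggling one of them off.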

Samples

- User interaction mode sample

- Focus visuals sample

- Basic input sample

- Direct3D game sample

- DirectX and XAML game sample

Archived samples

- Input: Manipulations and gestures sample

- Input: XAML user input events sample

- XAML scrolling, panning, and zooming sample

- DirectX touch input sample

Remarks

You can create a gesture object for each appropriate element when your app starts. However, this approach might not scale well depending on the number of gesture objects you need to create (for example, a jigsaw puzzle with hundreds of pieces).

In this case, you can create gesture objects dynamically on a pointerdown event and destroy them on an MSGestureEnd event. This approach scales well but incurs some overhead from creating and releasing these objects.

Alternatively, you can statically allocate and dynamically manage a pool of reusable gesture objects.
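The pooling approach can be sketched as follows. This is a minimal illustration, not a prescribed implementation: the `RecognizerPool<T>` class and its members are hypothetical names, and the pool is kept generic so that the sketch has no Windows Runtime dependency. It is not thread-safe, which fits use from a single UI thread.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical reusable-object pool. In an app, T would be GestureRecognizer and
// the factory would be () => new GestureRecognizer().
sealed class RecognizerPool<T> where T : class
{
    private readonly Stack<T> idle = new Stack<T>();
    private readonly Func<T> factory;
    private readonly Action<T> reset;

    public RecognizerPool(int initialSize, Func<T> factory, Action<T> reset = null)
    {
        this.factory = factory ?? throw new ArgumentNullException(nameof(factory));
        this.reset = reset;

        // Allocate the initial set of instances up front.
        for (int i = 0; i < initialSize; i++)
        {
            idle.Push(factory());
        }
    }

    // Number of instances currently available for reuse.
    public int IdleCount => idle.Count;

    // Hands out an idle instance, or creates a new one if the pool is empty.
    public T Rent() => idle.Count > 0 ? idle.Pop() : factory();

    // Runs the optional reset callback and makes the instance available again.
    public void Return(T item)
    {
        if (item == null) throw new ArgumentNullException(nameof(item));
        reset?.Invoke(item);
        idle.Push(item);
    }
}
```

With a GestureRecognizer, one plausible setup is `new RecognizerPool<GestureRecognizer>(8, () => new GestureRecognizer(), r => r.CompleteGesture())`, renting an instance when a pointer is pressed on an element and returning it once the interaction has completed. Event handlers attached to a rented recognizer would also need to be detached before it is returned.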

Note

This class is not agile, which means that you need to consider its threading model and marshaling behavior. For more info, see Threading and Marshaling (C++/CX) and Using Windows Runtime objects in a multithreaded environment (.NET).

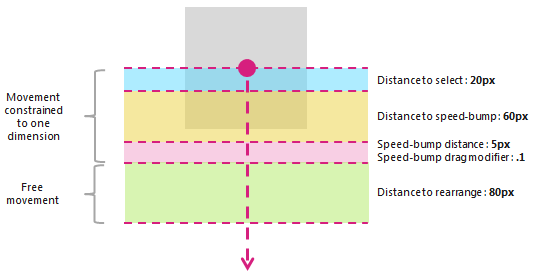

For more detail on how to use cross-slide functionality, see Guidelines for cross-slide. The threshold distances used by the cross-slide interaction are shown in the following diagram.

The PivotRadius and PivotCenter properties are used only when single pointer input is detected. They have no effect on multiple pointer input. The value for these properties should be updated regularly during the interaction.

Rotation is supported by a GestureRecognizer only when the ManipulationRotate flag is set through the GestureSettings property.

Rotation is not supported for single pointer input if the value of PivotRadius is set to 0.
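Because PivotRadius describes the distance from PivotCenter to the pointer input, one way to keep it current is to recompute that distance as the pointer moves. The helper below is a small sketch of that calculation. `PivotMath` and `Radius` are hypothetical names, and the helper works on plain coordinates so that it can run without the Windows Runtime.

```csharp
using System;

static class PivotMath
{
    // Distance from the pivot center to the pointer. Both points must be
    // expressed in the same coordinate space. The result is narrowed to
    // float to match a single-precision radius value.
    public static float Radius(double centerX, double centerY, double pointerX, double pointerY)
    {
        double dx = pointerX - centerX;
        double dy = pointerY - centerY;
        return (float)Math.Sqrt(dx * dx + dy * dy);
    }
}
```

In a pointer-moved handler, assuming `center` and `position` are points in the same reference frame that is used for ProcessMoveEvents, the result could be assigned with `recognizer.PivotCenter = center;` followed by `recognizer.PivotRadius = PivotMath.Radius(center.X, center.Y, position.X, position.Y);`. A pointer located exactly at the pivot center yields a radius of 0, which disables single-pointer rotation as described above.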

Version history

| Windows version | SDK version | Value added |
|---|---|---|
| 2004 | 19041 | HoldMaxContactCount |
| 2004 | 19041 | HoldMinContactCount |
| 2004 | 19041 | HoldRadius |
| 2004 | 19041 | HoldStartDelay |
| 2004 | 19041 | TapMaxContactCount |
| 2004 | 19041 | TapMinContactCount |
| 2004 | 19041 | TranslationMaxContactCount |
| 2004 | 19041 | TranslationMinContactCount |

Constructors

| Constructor | Description |
|---|---|
| GestureRecognizer() | Initializes a new instance of a GestureRecognizer object. |

Properties

| Property | Description |
|---|---|
| AutoProcessInertia | Gets or sets a value that indicates whether manipulations during inertia are generated automatically. |
| CrossSlideExact | Gets or sets a value that indicates whether the exact distance from initial contact to end of the cross-slide interaction is reported. By default, a small distance threshold is subtracted from the first position reported by the system for cross-slide interactions. If this flag is set, the distance threshold is not subtracted from the initial position. Note: This distance threshold is intended to account for any slight movement of the contact after initial detection. It helps the system differentiate between cross-sliding and panning, and helps ensure that a tap gesture is not interpreted as either. |
| CrossSlideHorizontally | Gets or sets a value that indicates whether the cross-slide axis is horizontal. |
| CrossSlideThresholds | Gets or sets values that indicate the distance thresholds for a CrossSliding interaction. |
| GestureSettings | Gets or sets a value that indicates the gesture and manipulation settings supported by an application. |
| HoldMaxContactCount | Gets or sets the maximum number of contact points needed to recognize a Windows.UI.Input.GestureRecognizer.Holding event. |
| HoldMinContactCount | Gets or sets the minimum number of contact points needed to recognize a Windows.UI.Input.GestureRecognizer.Holding event. |
| HoldRadius | Gets or sets the radius of the contact points recognized for the Windows.UI.Input.GestureRecognizer.Holding event. |
| HoldStartDelay | Gets or sets the time threshold at which the contacts are recognized for the Windows.UI.Input.GestureRecognizer.Holding event. |
| InertiaExpansion | Gets or sets a value that indicates the relative change in size of an object from the start of inertia to the end of inertia (when resizing, or scaling, is complete). |
| InertiaExpansionDeceleration | Gets or sets a value that indicates the rate of deceleration from the start of inertia to the end of inertia (when the resizing, or expansion, manipulation is complete). |
| InertiaRotationAngle | Gets or sets a value that indicates the final angle of rotation of an object at the end of inertia (when the rotation manipulation is complete). |
| InertiaRotationDeceleration | Gets or sets a value that indicates the rate of deceleration from the start of inertia to the end of inertia (when the rotation manipulation is complete). |
| InertiaTranslationDeceleration | Gets or sets a value that indicates the rate of deceleration from the start of inertia to the end of inertia (when the translation manipulation is complete). |
| InertiaTranslationDisplacement | Gets or sets a value that indicates the relative change in the screen location of an object from the start of inertia to the end of inertia (when the translation manipulation is complete). |
| IsActive | Gets a value that indicates whether an interaction is being processed. |
| IsInertial | Gets a value that indicates whether a manipulation is still being processed during inertia (no input points are active). |
| ManipulationExact | Gets or sets a value that indicates whether the exact distance from initial contact to end of the interaction is reported. By default, a small distance threshold is subtracted from the first delta reported by the system. This distance threshold is intended to account for slight movements of the contact when processing a tap gesture. If this flag is set, the distance threshold is not subtracted from the first delta. |
| MouseWheelParameters | Gets a set of properties that are associated with the wheel button of a mouse device. |
| PivotCenter | Gets or sets the center point for a rotation interaction when single pointer input is detected. |
| PivotRadius | Gets or sets the radius, from the PivotCenter to the pointer input, for a rotation interaction when single pointer input is detected. |
| ShowGestureFeedback | Gets or sets a value that indicates whether visual feedback is displayed during an interaction. |
| TapMaxContactCount | Gets or sets the maximum number of contact points needed to recognize a Windows.UI.Input.GestureRecognizer.Tapped event. |
| TapMinContactCount | Gets or sets the minimum number of contact points needed to recognize a Windows.UI.Input.GestureRecognizer.Tapped event. |
| TranslationMaxContactCount | Gets or sets the maximum number of contact points needed to recognize a translation (or panning) event. |
| TranslationMinContactCount | Gets or sets the minimum number of contact points needed to recognize a translation (or panning) event. |

Methods

| Method | Description |
|---|---|
| CanBeDoubleTap(PointerPoint) | Identifies whether a tap can still be interpreted as the second tap of a double tap gesture. |
| CompleteGesture() | Causes the gesture recognizer to finalize an interaction. |
| ProcessDownEvent(PointerPoint) | Processes pointer input and raises the GestureRecognizer events appropriate to a pointer down action for the gestures and manipulations specified by the GestureSettings property. |
| ProcessInertia() | Performs inertia calculations and raises the various inertia events. |
| ProcessMouseWheelEvent(PointerPoint, Boolean, Boolean) | Processes pointer input and raises the GestureRecognizer events appropriate to a mouse wheel action for the gestures and manipulations specified by the GestureSettings property. |
| ProcessMoveEvents(IVector<PointerPoint>) | Processes pointer input and raises the GestureRecognizer events appropriate to a pointer move action for the gestures and manipulations specified by the GestureSettings property. |
| ProcessUpEvent(PointerPoint) | Processes pointer input and raises the GestureRecognizer events appropriate to a pointer up action for the gestures and manipulations specified by the GestureSettings property. |
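The example earlier on this page routes down, move, and up input but not mouse wheel input. The following sketch shows one way ProcessMouseWheelEvent might be wired to a XAML element's PointerWheelChanged event, passing the Shift and Control key states as the two Boolean arguments. The `WheelInputRouter` class is a hypothetical name, and the code assumes a UWP XAML app.

```csharp
using Windows.System;
using Windows.UI.Input;
using Windows.UI.Xaml;
using Windows.UI.Xaml.Input;

// Hypothetical helper that forwards mouse wheel input to a gesture recognizer.
sealed class WheelInputRouter
{
    private readonly GestureRecognizer recognizer;
    private readonly UIElement reference;

    public WheelInputRouter(GestureRecognizer recognizer, UIElement element, UIElement reference)
    {
        this.recognizer = recognizer;
        this.reference = reference;
        element.PointerWheelChanged += OnPointerWheelChanged;
    }

    void OnPointerWheelChanged(object sender, PointerRoutedEventArgs args)
    {
        // Read the modifier key state that accompanied the wheel input.
        bool shiftDown = (args.KeyModifiers & VirtualKeyModifiers.Shift) != 0;
        bool controlDown = (args.KeyModifiers & VirtualKeyModifiers.Control) != 0;

        // The point is expressed in the coordinate space of the reference element,
        // matching the other Process* calls made on the same recognizer.
        recognizer.ProcessMouseWheelEvent(args.GetCurrentPoint(reference), shiftDown, controlDown);
    }
}
```

How the wheel input is interpreted depends on the manipulations enabled through GestureSettings and on the values exposed by MouseWheelParameters.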

Events

| CrossSliding |

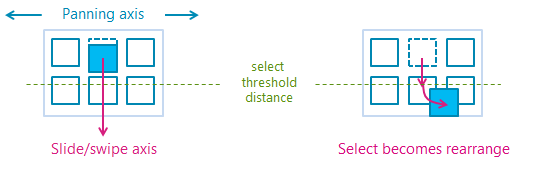

Occurs when a user performs a slide or swipe gesture (through a single touch contact) within a content area that supports panning along a single axis only. The gesture must occur in a direction that is perpendicular to this panning axis. Note A swipe is a short sliding gesture that results in a selection action while the longer slide gesture crosses a distance threshold and results in a rearrange action. The swipe and slide gestures are demonstrated in the following diagram.

|

| Dragging |

Occurs when a user performs a slide or swipe gesture with a mouse or pen/stylus (single contact). |

| Holding |

Occurs when a user performs a press and hold gesture (with a single touch, mouse, or pen/stylus contact). |

| ManipulationCompleted |

Occurs when the input points are lifted and all subsequent motion (translation, expansion, or rotation) through inertia has ended. |

| ManipulationInertiaStarting |

Occurs when all contact points are lifted during a manipulation and the velocity of the manipulation is significant enough to initiate inertia behavior (translation, expansion, or rotation continue after the input pointers are lifted). |

| ManipulationStarted |

Occurs when one or more input points have been initiated and subsequent motion (translation, expansion, or rotation) has begun. |

| ManipulationUpdated |

Occurs after one or more input points have been initiated and subsequent motion (translation, expansion, or rotation) is under way. |

| RightTapped |

Occurs when the pointer input is interpreted as a right-tap gesture, regardless of input device.

|

| Tapped |

Occurs when the pointer input is interpreted as a tap gesture. |
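The example earlier on this page subscribes only to the manipulation events. As a brief sketch of the discrete gesture events, the following enables tap, right-tap, and hold recognition and logs each event. The `TapAndHoldSetup` class is a hypothetical name, and the logging is for illustration only.

```csharp
using System.Diagnostics;
using Windows.UI.Input;

// Hypothetical factory that creates a recognizer configured for discrete gestures.
static class TapAndHoldSetup
{
    public static GestureRecognizer Create()
    {
        var recognizer = new GestureRecognizer
        {
            // Only the gestures enabled here are recognized and raised as events.
            GestureSettings = GestureSettings.Tap | GestureSettings.RightTap | GestureSettings.Hold
        };

        recognizer.Tapped += (sender, e) =>
            Debug.WriteLine($"Tapped at {e.Position}, tap count {e.TapCount}");

        recognizer.RightTapped += (sender, e) =>
            Debug.WriteLine($"Right-tapped at {e.Position} with {e.PointerDeviceType}");

        recognizer.Holding += (sender, e) =>
            Debug.WriteLine($"Holding {e.HoldingState} at {e.Position}");

        return recognizer;
    }
}
```

The recognizer raises these events only while it is being fed pointer input through ProcessDownEvent, ProcessMoveEvents, and ProcessUpEvent, as shown in the Examples section.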