Note

Access to this page requires authorization. You can try signing in or changing directories.

Access to this page requires authorization. You can try changing directories.

This topic describes how to use the Source Reader to process media data.

To use the Source Reader, follow these basic steps:

- Create an instance of the Source Reader.

- Enumerate the possible output formats.

- Set the actual output format for each stream.

- Process data from the source.

The remainder of this topic describes these steps in detail.

- Creating the Source Reader

- Enumerating Output Formats

- Setting Output Formats

- Processing Media Data

- Draining the Data Pipeline

- Getting the File Duration

- Seeking

- Playback Rate

- Hardware Acceleration

- Related topics

Creating the Source Reader

To create an instance of the Source Reader, call one of the following functions:

| Function | Description |

|---|---|

| MFCreateSourceReaderFromURL |

Takes a URL as input. This function uses the Source Resolver to create a media source from the URL. |

| MFCreateSourceReaderFromByteStream |

Takes a pointer to a byte stream. This function also uses the Source Resolver to create the media source. |

| MFCreateSourceReaderFromMediaSource |

Takes a pointer to a media source that has already been created. This function is useful for media sources that the Source Resolver cannot create, such capture devices or custom media sources. |

Typically, for media files, use MFCreateSourceReaderFromURL. For devices, such as webcams, use MFCreateSourceReaderFromMediaSource. (For more information about capture devices in Microsoft Media Foundation, see Audio/Video Capture.)

Each of these functions takes an optional IMFAttributes pointer, which is used to set various options on the Source Reader, as described in the reference topics for these functions. To get the default behavior, set this parameter to NULL. Each function returns an IMFSourceReader pointer as an output parameter. You must call CoInitialize(Ex) and MFStartup function before calling any of these functions.

The following code creates the Source Reader from a URL.

int __cdecl wmain(int argc, __in_ecount(argc) PCWSTR* argv)

{

if (argc < 2)

{

return 1;

}

const WCHAR *pszURL = argv[1];

// Initialize the COM runtime.

HRESULT hr = CoInitializeEx(0, COINIT_MULTITHREADED);

if (SUCCEEDED(hr))

{

// Initialize the Media Foundation platform.

hr = MFStartup(MF_VERSION);

if (SUCCEEDED(hr))

{

// Create the source reader.

IMFSourceReader *pReader;

hr = MFCreateSourceReaderFromURL(pszURL, NULL, &pReader);

if (SUCCEEDED(hr))

{

ReadMediaFile(pReader);

pReader->Release();

}

// Shut down Media Foundation.

MFShutdown();

}

CoUninitialize();

}

}

Enumerating Output Formats

Every media source has at least one stream. For example, a video file might contain a video stream and an audio stream. The format of each stream is described using a media type, represented by the IMFMediaType interface. For more information about media types, see Media Types. You must examine the media type to understand the format of the data that you get from the Source Reader.

Initially, every stream has a default format, which you can find by calling the IMFSourceReader::GetCurrentMediaType method:

For each stream, the media source offers a list of possible media types for that stream. The number of types depends on the source. If the source represents a media file, there is typically only one type per stream. A webcam, on the other hand, might be able to stream video in several different formats. In that case, the application can select which format to use from the list of media types.

To get one of the media types for a stream, call the IMFSourceReader::GetNativeMediaType method. This method takes two index parameters: The index of the stream, and an index into the list of media types for the stream. To enumerate all the types for a stream, increment the list index while keeping the stream index constant. When the list index goes out of bounds, GetNativeMediaType returns MF_E_NO_MORE_TYPES.

HRESULT EnumerateTypesForStream(IMFSourceReader *pReader, DWORD dwStreamIndex)

{

HRESULT hr = S_OK;

DWORD dwMediaTypeIndex = 0;

while (SUCCEEDED(hr))

{

IMFMediaType *pType = NULL;

hr = pReader->GetNativeMediaType(dwStreamIndex, dwMediaTypeIndex, &pType);

if (hr == MF_E_NO_MORE_TYPES)

{

hr = S_OK;

break;

}

else if (SUCCEEDED(hr))

{

// Examine the media type. (Not shown.)

pType->Release();

}

++dwMediaTypeIndex;

}

return hr;

}

To enumerate the media types for every stream, increment the stream index. When the stream index goes out of bounds, GetNativeMediaType returns MF_E_INVALIDSTREAMNUMBER.

HRESULT EnumerateMediaTypes(IMFSourceReader *pReader)

{

HRESULT hr = S_OK;

DWORD dwStreamIndex = 0;

while (SUCCEEDED(hr))

{

hr = EnumerateTypesForStream(pReader, dwStreamIndex);

if (hr == MF_E_INVALIDSTREAMNUMBER)

{

hr = S_OK;

break;

}

++dwStreamIndex;

}

return hr;

}

Setting Output Formats

To change the output format, call the IMFSourceReader::SetCurrentMediaType method. This method takes the stream index and a media type:

hr = pReader->SetCurrentMediaType(dwStreamIndex, pMediaType);

For the media type, it depends on whether you want to insert a decoder.

- To get data directly from the source without decoding it, use one of the types returned by GetNativeMediaType.

- To decode the stream, create a new media type that describes the desired uncompressed format.

In the case of the decoder, create the media type as follows:

- Call MFCreateMediaType to create a new media type.

- Set the MF_MT_MAJOR_TYPE attribute to specify audio or video.

- Set the MF_MT_SUBTYPE attribute to specify the subtype of the decoding format. (See Audio Subtype GUIDs and Video Subtype GUIDs.)

- Call IMFSourceReader::SetCurrentMediaType.

The Source Reader will automatically load the decoder. To get the complete details of the decoded format, call IMFSourceReader::GetCurrentMediaType after the call to SetCurrentMediaType

The following code configures the video stream for RGB-32 and the audio stream for PCM audio.

HRESULT ConfigureDecoder(IMFSourceReader *pReader, DWORD dwStreamIndex)

{

IMFMediaType *pNativeType = NULL;

IMFMediaType *pType = NULL;

// Find the native format of the stream.

HRESULT hr = pReader->GetNativeMediaType(dwStreamIndex, 0, &pNativeType);

if (FAILED(hr))

{

return hr;

}

GUID majorType, subtype;

// Find the major type.

hr = pNativeType->GetGUID(MF_MT_MAJOR_TYPE, &majorType);

if (FAILED(hr))

{

goto done;

}

// Define the output type.

hr = MFCreateMediaType(&pType);

if (FAILED(hr))

{

goto done;

}

hr = pType->SetGUID(MF_MT_MAJOR_TYPE, majorType);

if (FAILED(hr))

{

goto done;

}

// Select a subtype.

if (majorType == MFMediaType_Video)

{

subtype= MFVideoFormat_RGB32;

}

else if (majorType == MFMediaType_Audio)

{

subtype = MFAudioFormat_PCM;

}

else

{

// Unrecognized type. Skip.

goto done;

}

hr = pType->SetGUID(MF_MT_SUBTYPE, subtype);

if (FAILED(hr))

{

goto done;

}

// Set the uncompressed format.

hr = pReader->SetCurrentMediaType(dwStreamIndex, NULL, pType);

if (FAILED(hr))

{

goto done;

}

done:

SafeRelease(&pNativeType);

SafeRelease(&pType);

return hr;

}

Processing Media Data

To get media data from the source, call the IMFSourceReader::ReadSample method, as shown in the following code.

DWORD streamIndex, flags;

LONGLONG llTimeStamp;

hr = pReader->ReadSample(

MF_SOURCE_READER_ANY_STREAM, // Stream index.

0, // Flags.

&streamIndex, // Receives the actual stream index.

&flags, // Receives status flags.

&llTimeStamp, // Receives the time stamp.

&pSample // Receives the sample or NULL.

);

The first parameter is the index of the stream for which you want to get data. You can also specify MF_SOURCE_READER_ANY_STREAM to get the next available data from any stream. The second parameter contains optional flags; see MF_SOURCE_READER_CONTROL_FLAG for a list of these. The third parameter receives the index of the stream that actually produces the data. You will need this information if you set the first parameter to MF_SOURCE_READER_ANY_STREAM. The fourth parameter receives status flags, indicating various events that can occur while reading the data, such as format changes in the stream. For a list of status flags, see MF_SOURCE_READER_FLAG.

If the media source is able to produce data for the requested stream, the last parameter of ReadSample receives a pointer to the IMFSample interface of a media sample object. Use the media sample to:

- Get a pointer to the media data.

- Get the presentation time and sample duration.

- Get attributes that describe interlacing, field dominance, and other aspects of the sample.

The contents of the media data depend on the format of the stream. For an uncompressed video stream, each media sample contains a single video frame. For an uncompressed audio stream, each media sample contains a sequence of audio frames.

The ReadSample method can return S_OK and yet not return a media sample in the pSample parameter. For example, when you reach the end of the file, ReadSample sets the MF_SOURCE_READERF_ENDOFSTREAM flag in dwFlags and sets pSample to NULL. In this case, the ReadSample method returns S_OK because no error has occurred, even though the pSample parameter is set to NULL. Therefore, always check the value of pSample before you dereference it.

The following code shows how to call ReadSample in a loop and check the information returned by the method, until the end of the media file is reached.

HRESULT ProcessSamples(IMFSourceReader *pReader)

{

HRESULT hr = S_OK;

IMFSample *pSample = NULL;

size_t cSamples = 0;

bool quit = false;

while (!quit)

{

DWORD streamIndex, flags;

LONGLONG llTimeStamp;

hr = pReader->ReadSample(

MF_SOURCE_READER_ANY_STREAM, // Stream index.

0, // Flags.

&streamIndex, // Receives the actual stream index.

&flags, // Receives status flags.

&llTimeStamp, // Receives the time stamp.

&pSample // Receives the sample or NULL.

);

if (FAILED(hr))

{

break;

}

wprintf(L"Stream %d (%I64d)\n", streamIndex, llTimeStamp);

if (flags & MF_SOURCE_READERF_ENDOFSTREAM)

{

wprintf(L"\tEnd of stream\n");

quit = true;

}

if (flags & MF_SOURCE_READERF_NEWSTREAM)

{

wprintf(L"\tNew stream\n");

}

if (flags & MF_SOURCE_READERF_NATIVEMEDIATYPECHANGED)

{

wprintf(L"\tNative type changed\n");

}

if (flags & MF_SOURCE_READERF_CURRENTMEDIATYPECHANGED)

{

wprintf(L"\tCurrent type changed\n");

}

if (flags & MF_SOURCE_READERF_STREAMTICK)

{

wprintf(L"\tStream tick\n");

}

if (flags & MF_SOURCE_READERF_NATIVEMEDIATYPECHANGED)

{

// The format changed. Reconfigure the decoder.

hr = ConfigureDecoder(pReader, streamIndex);

if (FAILED(hr))

{

break;

}

}

if (pSample)

{

++cSamples;

}

SafeRelease(&pSample);

}

if (FAILED(hr))

{

wprintf(L"ProcessSamples FAILED, hr = 0x%x\n", hr);

}

else

{

wprintf(L"Processed %d samples\n", cSamples);

}

SafeRelease(&pSample);

return hr;

}

Draining the Data Pipeline

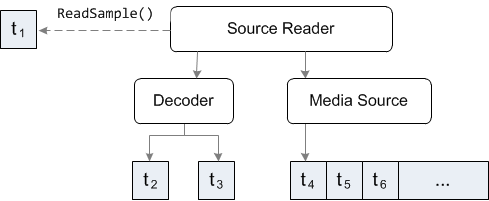

During data processing, a decoder or other transform might buffer input samples. In the following diagram, the application calls ReadSample and receives a sample with a presentation time equal to t1. The decoder is holding samples for t2 and t3.

On the next call to ReadSample, the Source Reader might give t4 to the decoder and return t2 to the application.

If you want to decode all of the samples that are currently buffered in the decoder, without passing any new samples to the decoder, set the MF_SOURCE_READER_CONTROLF_DRAIN flag in the dwControlFlags parameter of ReadSample. Continue to do this in a loop until ReadSample returns a NULL sample pointer. Depending on how the decoder buffers samples, that might happen immediately or after several calls to ReadSample.

Getting the File Duration

To get the duration of a media file, call the IMFSourceReader::GetPresentationAttribute method and request the MF_PD_DURATION attribute, as shown in the following code.

HRESULT GetDuration(IMFSourceReader *pReader, LONGLONG *phnsDuration)

{

PROPVARIANT var;

HRESULT hr = pReader->GetPresentationAttribute(MF_SOURCE_READER_MEDIASOURCE,

MF_PD_DURATION, &var);

if (SUCCEEDED(hr))

{

hr = PropVariantToInt64(var, phnsDuration);

PropVariantClear(&var);

}

return hr;

}

The function shown here gets the duration in 100-nanosecond units. Divide by 10,000,000 to get the duration in seconds.

Seeking

A media source that gets data from a local file can usually seek to arbitrary positions in the file. Capture devices such as webcams generally cannot seek, because the data is live. A source that streams data over a network might be able to seek, depending on the network streaming protocol.

To find out whether a media source can seek, call IMFSourceReader::GetPresentationAttribute and request the MF_SOURCE_READER_MEDIASOURCE_CHARACTERISTICS attribute, as shown in the following code:

HRESULT GetSourceFlags(IMFSourceReader *pReader, ULONG *pulFlags)

{

ULONG flags = 0;

PROPVARIANT var;

PropVariantInit(&var);

HRESULT hr = pReader->GetPresentationAttribute(

MF_SOURCE_READER_MEDIASOURCE,

MF_SOURCE_READER_MEDIASOURCE_CHARACTERISTICS,

&var);

if (SUCCEEDED(hr))

{

hr = PropVariantToUInt32(var, &flags);

}

if (SUCCEEDED(hr))

{

*pulFlags = flags;

}

PropVariantClear(&var);

return hr;

}

This function gets a set of capabilities flags from the source. These flags are defined in the MFMEDIASOURCE_CHARACTERISTICS enumeration. Two flags relate to seeking:

| Flag | Description |

|---|---|

| MFMEDIASOURCE_CAN_SEEK |

The source can seek. |

| MFMEDIASOURCE_HAS_SLOW_SEEK |

Seeking might take a long time to complete. For example, the source might need to download the entire file before it can seek. (There are no strict criteria for a source to return this flag.) |

The following code tests for the MFMEDIASOURCE_CAN_SEEK flag.

BOOL SourceCanSeek(IMFSourceReader *pReader)

{

BOOL bCanSeek = FALSE;

ULONG flags;

if (SUCCEEDED(GetSourceFlags(pReader, &flags)))

{

bCanSeek = ((flags & MFMEDIASOURCE_CAN_SEEK) == MFMEDIASOURCE_CAN_SEEK);

}

return bCanSeek;

}

To seek, call the IMFSourceReader::SetCurrentPosition method, as shown in the following code.

HRESULT SetPosition(IMFSourceReader *pReader, const LONGLONG& hnsPosition)

{

PROPVARIANT var;

HRESULT hr = InitPropVariantFromInt64(hnsPosition, &var);

if (SUCCEEDED(hr))

{

hr = pReader->SetCurrentPosition(GUID_NULL, var);

PropVariantClear(&var);

}

return hr;

}

The first parameter gives the time format that you are using to specify the seek position. All media sources in Media Foundation are required to support 100-nanosecond units, indicated by the value GUID_NULL. The second parameter is a PROPVARIANT that contains the seek position. For 100-nanosecond time units, the data type is LONGLONG.

Be aware that not every media source provides frame-accurate seeking. The accuracy of seeking depends on several factors, such as the key frame interval, whether the media file contains an index, and whether the data has a constant or variable bit rate. Therefore, after you seek to a position in a file, there is no guarantee that the time stamp on the next sample will exactly match the requested position. Generally, the actual position will not be later than the requested position, so you can discard samples until you reach the desired point in the stream.

Playback Rate

Although you can set the playback rate using the Source Reader, doing is typically not very useful, for the following reasons:

- The Source Reader does not support reverse playback, even if the media source does.

- The application controls the presentation times, so the application can implement fast or slow play without setting the rate on the source.

- Some media sources support thinning mode, where the source delivers fewer samples—typically just the key frames. However, if you want to drop non-key frames, you can check each sample for the MFSampleExtension_CleanPoint attribute.

To set the playback rate using the Source Reader, call the IMFSourceReader::GetServiceForStream method to get the IMFRateSupport and IMFRateControl interfaces from the media source.

Hardware Acceleration

The Source Reader is compatible with Microsoft DirectX Video Acceleration (DXVA) 2.0 for hardware accelerated video decoding. To use DXVA with the Source Reader, perform the following steps.

- Create a Microsoft Direct3D device.

- Call the DXVA2CreateDirect3DDeviceManager9 function to create the Direct3D device manager. This function gets a pointer to the IDirect3DDeviceManager9 interface.

- Call the IDirect3DDeviceManager9::ResetDevice method with a pointer to the Direct3D device.

- Create an attribute store by calling the MFCreateAttributes function.

- Create the Source Reader. Pass the attribute store in the pAttributes parameter of the creation function.

When you provide a Direct3D device, the Source Reader allocates video samples that are compatible with the DXVA video processor API. You can use DXVA video processing to perform hardware deinterlacing or video mixing. For more information, see DXVA Video Processing. Also, if the decoder supports DXVA 2.0, it will use the Direct3D device to perform hardware-accelerated decoding.

Important

Beginning in Windows 8, IMFDXGIDeviceManager can be used instead of the IDirect3DDeviceManager9. For Windows Store apps, you must use IMFDXGIDeviceManager. For more info, see the Direct3D 11 Video APIs.

Related topics