Hi all,

I have a problem upgrading domain controllers (DCs) from Windows Server 2016 to 2019.

Background:

One forest, 7 DCs in 3 sites (let's say sites A/B/C; all DCs are VMware VMs). All DCs in sites A and B were upgraded in place from 2016 to 2019 and are now running smoothly.

In site C, something went wrong.

Site C has 3 DCs (let's say DC 1/2/3). They are hosted on the same ESXi host and have the same software installed (antivirus, etc.).

DC 1 was upgraded in place to 2019 successfully and has been running smoothly ever since.

DC 2/3 went through the same upgrade as all the others.

(I took a VM snapshot before each upgrade, and after each successful upgrade I backed up the entire VM to a NAS.)

The upgrade succeeded at the time, but after a few days (maybe 2 to 3), all services on DC 2/3 stopped working.

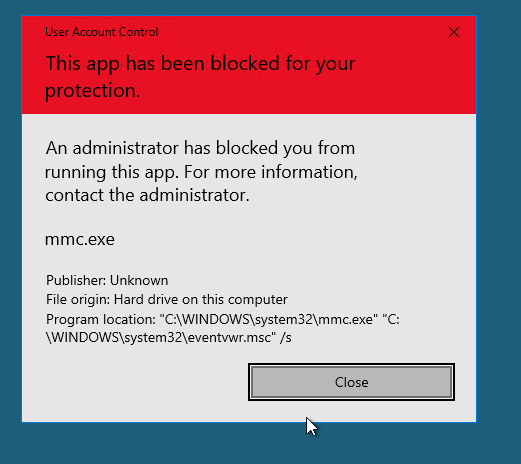

Even starting [Event Viewer] or [Control Panel] shows the following:

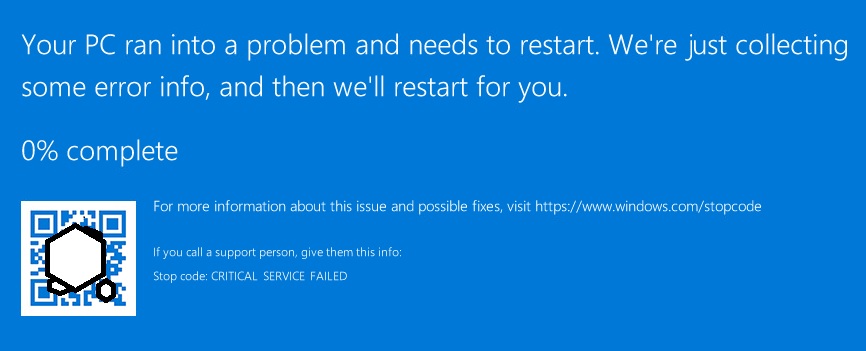

Then I rebooted the DC, and Windows was corrupted, as below.

I had no way to recover the DC (tried Safe Mode, Last Known Good...). Finally, I restored the VM snapshot back to 2016, and everything went back to normal.

A few days later, I tried the same upgrade again, with the same result: corrupted after a few days.

Then I started investigating what had happened.

I am quite sure DC 1/2/3 all have the same custom tasks in Task Scheduler.

So I looked for differences in Task Scheduler between DC 1/2/3.

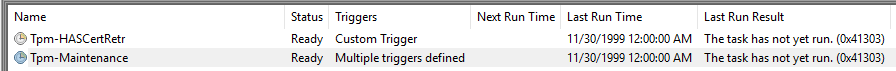

I found a Windows task called [TPM-Maintenance] on DC 1/2/3.

On DC 1, the task has never run. (It has also never run on any of the other DCs in sites A and B.)

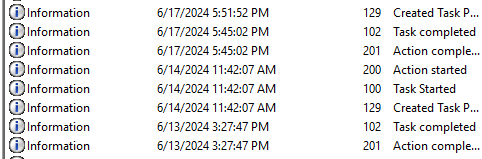

On DC 2/3, the task has run at various times, not on a fixed schedule. I don't know what triggered it or how.

Then I restored the DC 2/3 VMs from the NAS backup (the backups taken after the successful 2019 upgrade), without connecting them to the network, to test what was going wrong.

I found that if I run the [TPM-Maintenance] task manually, Windows is corrupted on the next reboot, exactly as happened before.

I checked, and none of the VMs have TPM enabled.

I would like to know what the hell [TPM-Maintenance] is and why it corrupts Windows.

Has anyone had the same experience?

Thanks and have a nice day.