The native execution engine is a groundbreaking enhancement for Apache Spark job executions in Microsoft Fabric. This vectorized engine optimizes the performance and efficiency of your Spark queries by running them directly on your lakehouse infrastructure. The engine's seamless integration means it requires no code modifications and avoids vendor lock-in. It supports Apache Spark APIs, is compatible with Runtime 1.3 (Apache Spark 3.5), and works with both Parquet and Delta formats. Wherever your data resides within OneLake, and even if you access it via shortcuts, the native execution engine maximizes efficiency and performance.

The native execution engine significantly elevates query performance while minimizing operational costs. It delivers a remarkable speed enhancement, achieving up to four times faster performance compared to traditional OSS (open source software) Spark, as validated by the TPC-DS 1-TB benchmark. The engine is adept at managing a wide array of data processing scenarios, ranging from routine data ingestion, batch jobs, and ETL (extract, transform, load) tasks, to complex data science analytics and responsive interactive queries. Users benefit from accelerated processing times, heightened throughput, and optimized resource utilization.

The Native Execution Engine is based on two key OSS components: Velox, a C++ database acceleration library introduced by Meta, and Apache Gluten (incubating), a middle layer introduced by Intel that offloads the execution of JVM-based SQL engines to native engines.

When to use the native execution engine

The native execution engine offers a solution for running queries on large-scale data sets; it optimizes performance by using the native capabilities of underlying data sources and minimizing the overhead typically associated with data movement and serialization in traditional Spark environments. The engine supports various operators and data types, including rollup hash aggregate, broadcast nested loop join (BNLJ), and precise timestamp formats. However, to fully benefit from the engine's capabilities, you should consider its optimal use cases:

- The engine is effective when working with data in Parquet and Delta formats, which it can process natively and efficiently.

- Queries that involve intricate transformations and aggregations benefit significantly from the columnar processing and vectorization capabilities of the engine.

- Performance enhancement is most notable when queries avoid unsupported features or expressions and therefore don't trigger the fallback mechanism.

- The engine is well-suited for queries that are computationally intensive, rather than simple or I/O-bound.

For information on the operators and functions supported by the native execution engine, see Apache Gluten documentation.

Enable the native execution engine

To use the full capabilities of the native execution engine during the preview phase, specific configurations are necessary. The following procedures show how to activate this feature for notebooks, Spark job definitions, and entire environments.

Important

The native execution engine supports the latest GA runtime version, which is Runtime 1.3 (Apache Spark 3.5, Delta Lake 3.2). With the release of the native execution engine in Runtime 1.3, support for the previous version—Runtime 1.2 (Apache Spark 3.4, Delta Lake 2.4)—is discontinued. We encourage all customers to upgrade to the latest Runtime 1.3. If you're using the Native Execution Engine on Runtime 1.2, native acceleration will be disabled.

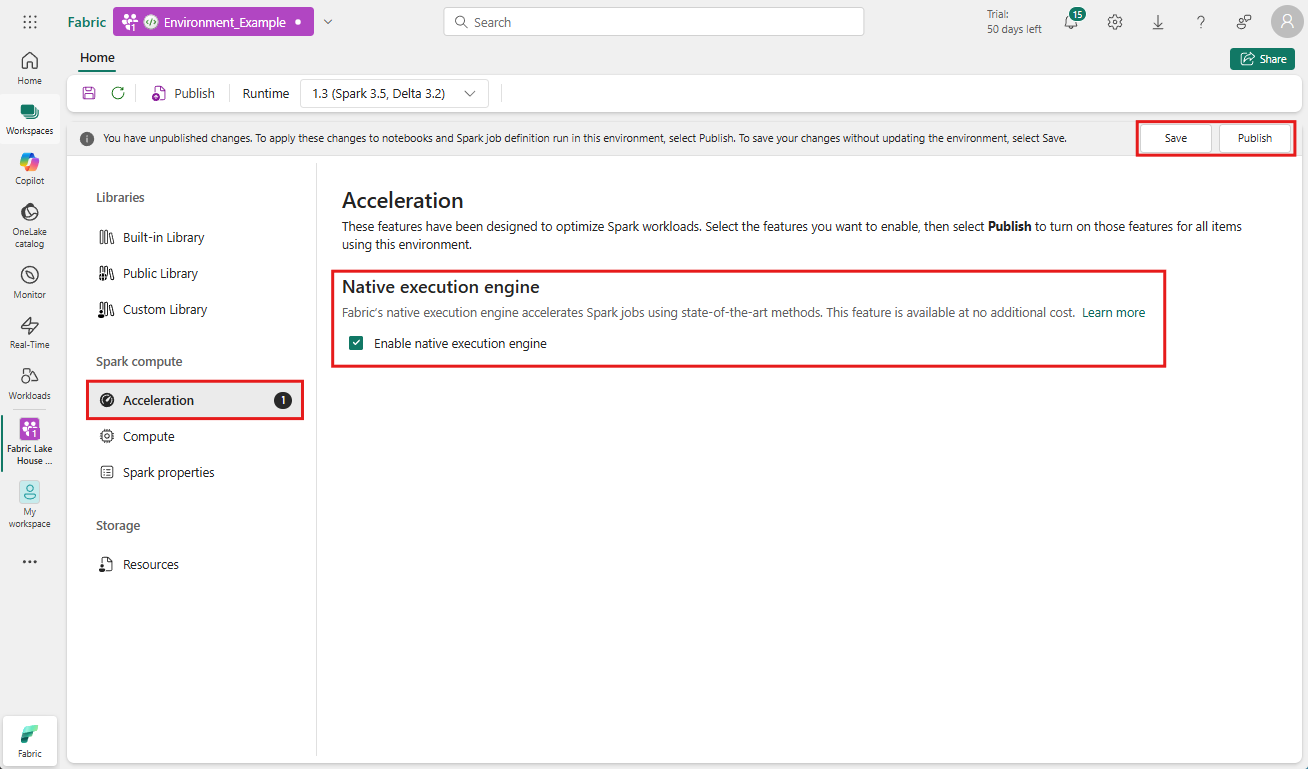

Enable at the environment level

To ensure uniform performance enhancement, enable the native execution engine across all jobs and notebooks associated with your environment:

1. Navigate to the workspace containing your environment and select the environment. If you don't have an environment created, see Create, configure, and use an environment in Fabric.

2. Under Spark compute, select Acceleration.

3. Check the box labeled Enable native execution engine.

4. Save and Publish the changes.

When enabled at the environment level, all subsequent jobs and notebooks inherit the setting. This inheritance ensures that any new sessions or resources created in the environment automatically benefit from the enhanced execution capabilities.

Important

Previously, the native execution engine was enabled through Spark settings within the environment configuration. The native execution engine can now be enabled more easily using a toggle in the Acceleration tab of the environment settings. To continue using it, go to the Acceleration tab and turn on the toggle. You can also enable it via Spark properties if preferred.

Enable for a notebook or Spark job definition

You can also enable the native execution engine for a single notebook or Spark job definition. To do so, add the necessary configuration at the beginning of your execution script:

%%configure
{
    "conf": {
        "spark.native.enabled": "true"
    }
}

For notebooks, insert the required configuration commands in the first cell. For Spark job definitions, include the configurations at the beginning of your Spark job definition. The Native Execution Engine is integrated with live pools, so once you enable the feature, it takes effect immediately without requiring you to initiate a new session.
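Because the %%configure body has to be valid JSON, it can help to generate it rather than type it by hand. The following is a minimal sketch in Python; the function name configure_cell is hypothetical, and the only setting it emits is the spark.native.enabled key described above.

```python
import json


def configure_cell(enabled: bool = True) -> str:
    """Build the text of a %%configure cell that toggles the native execution engine."""
    # The value is written as a string, matching the "true" used in the example above.
    body = {"conf": {"spark.native.enabled": "true" if enabled else "false"}}
    # json.dumps never emits trailing commas, so the body is always valid JSON.
    return "%%configure\n" + json.dumps(body, indent=4)


if __name__ == "__main__":
    print(configure_cell())
```

Paste the printed text into the first cell of the notebook, or adapt the dictionary if you need further settings under "conf".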

Control on the query level

The mechanisms to enable the Native Execution Engine at the tenant, workspace, and environment levels, seamlessly integrated with the UI, are under active development. In the meantime, you can disable the native execution engine for specific queries, particularly if they involve operators that aren't currently supported (see limitations). To disable, set the Spark configuration spark.native.enabled to false for the specific cell containing your query.

%%sql
SET spark.native.enabled=FALSE;

After executing the query in which the native execution engine is disabled, you must re-enable it for subsequent cells by setting spark.native.enabled to true. This step is necessary because Spark executes code cells sequentially.

%%sql
SET spark.native.enabled=TRUE;
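In a Python cell, the disable-then-re-enable pattern above can be wrapped so that the setting is restored even if the query fails. The following is a minimal sketch, not an official API: the helper name native_engine_disabled is hypothetical, and it assumes that setting spark.native.enabled through spark.conf on the session has the same effect as the SQL SET statements shown above.

```python
from contextlib import contextmanager

NATIVE_KEY = "spark.native.enabled"  # configuration key named in the text above


@contextmanager
def native_engine_disabled(spark):
    """Temporarily set spark.native.enabled to false, then restore the prior state.

    `spark` is expected to be a SparkSession (anything exposing conf.get,
    conf.set, and conf.unset works).
    """
    previous = spark.conf.get(NATIVE_KEY, None)  # None means "not set in this session"
    spark.conf.set(NATIVE_KEY, "false")
    try:
        yield spark
    finally:
        # Restore the earlier value, or remove the key if it was never set.
        if previous is None:
            spark.conf.unset(NATIVE_KEY)
        else:
            spark.conf.set(NATIVE_KEY, previous)
```

A typical use would be `with native_engine_disabled(spark): spark.sql("SELECT ...").show()`, where the query is a placeholder for the statement that relies on an unsupported operator.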

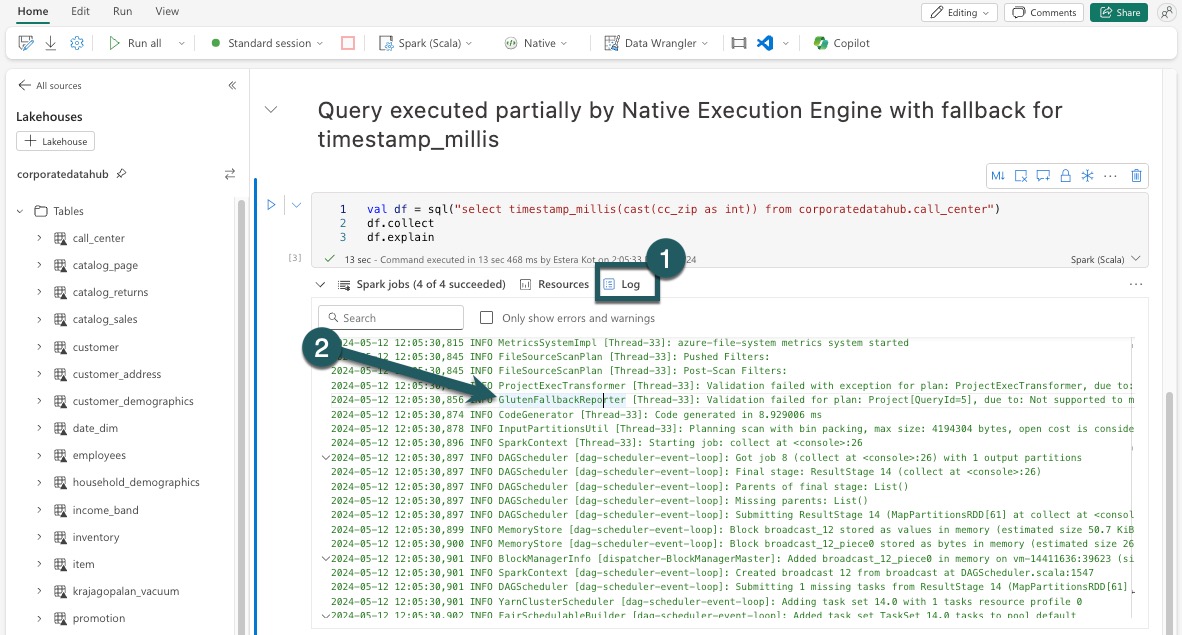

Identify operations executed by the engine

There are several methods to determine if an operator in your Apache Spark job was processed using the native execution engine.

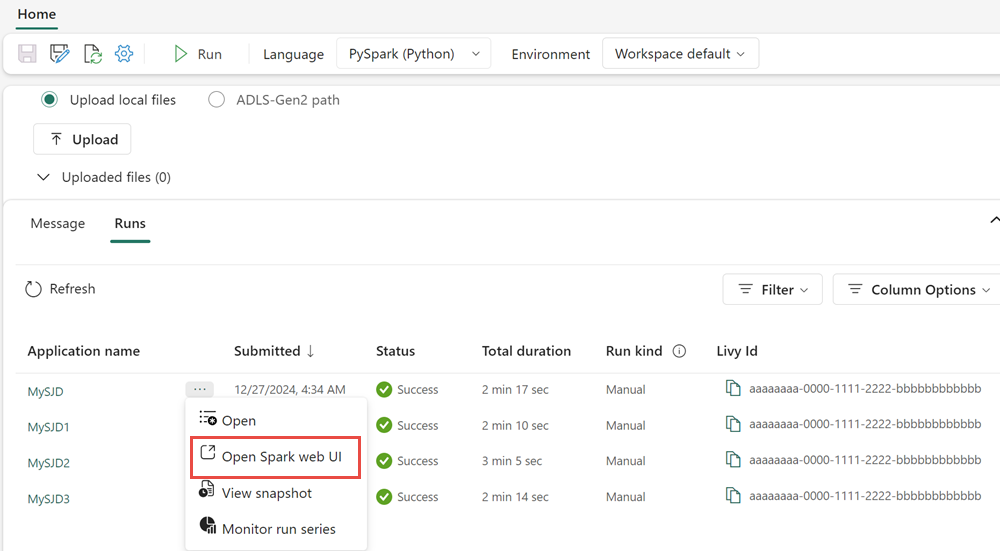

Spark UI and Spark history server

Access the Spark UI or Spark history server to locate the query you need to inspect. To access the Spark web UI, navigate to your Spark Job Definition and run it. From the Runs tab, select the ... next to the Application name and select Open Spark web UI. You can also access the Spark UI from the Monitor tab in the workspace. Select the notebook or pipeline; the monitoring page provides a direct link to the Spark UI for active jobs.

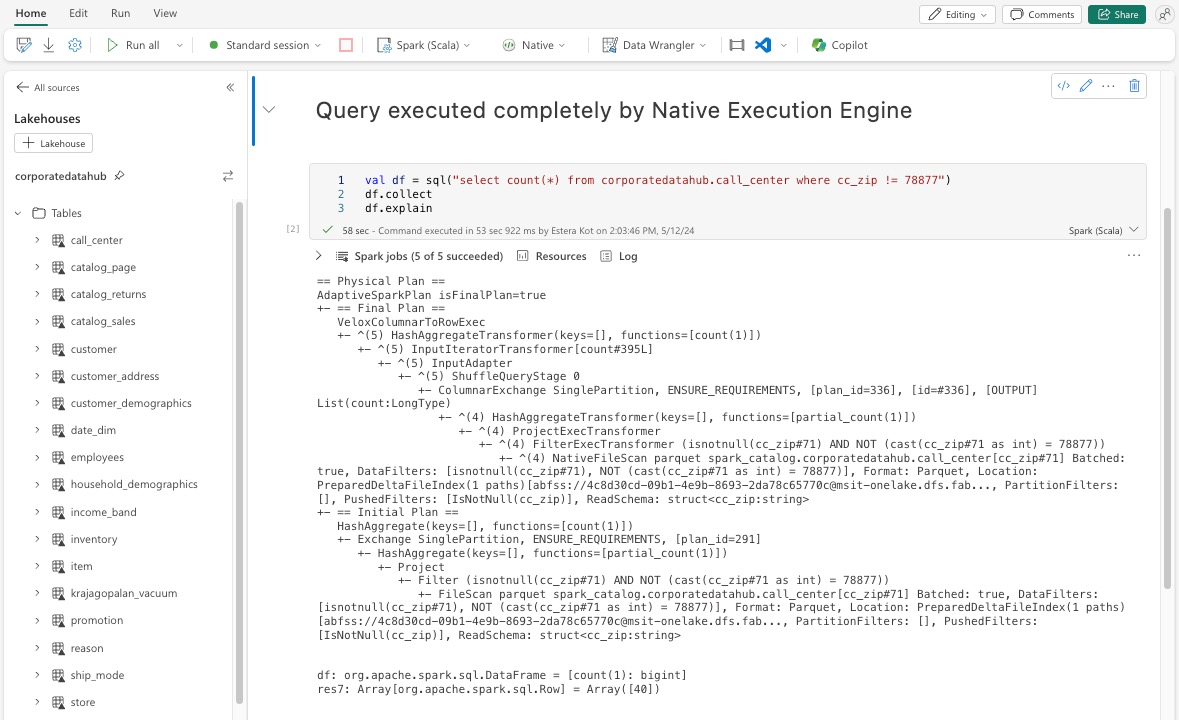

In the query plan displayed within the Spark UI interface, look for any node names that end with the suffix Transformer, *NativeFileScan, or VeloxColumnarToRowExec. The suffix indicates that the native execution engine executed the operation. For instance, nodes might be labeled as RollUpHashAggregateTransformer, ProjectExecTransformer, BroadcastHashJoinExecTransformer, ShuffledHashJoinExecTransformer, or BroadcastNestedLoopJoinExecTransformer.

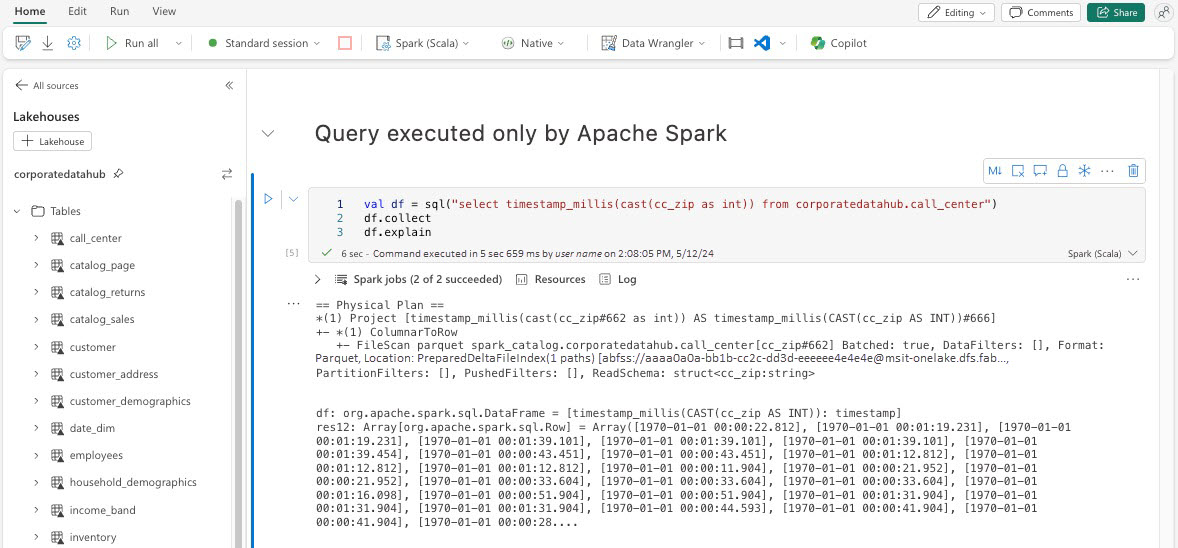

DataFrame explain

Alternatively, you can execute the df.explain() command in your notebook to view the execution plan. Within the output, look for the same Transformer, *NativeFileScan, or VeloxColumnarToRowExec suffixes. This method provides a quick way to confirm whether specific operations are being handled by the native execution engine.
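For longer plans, scanning the text by eye gets tedious. The following is a minimal sketch that searches plan text for node names ending in the suffixes listed above. The function name native_nodes and the sample plan are hypothetical; the sample is only shaped like explain() output and was not captured from a real run.

```python
import re
from typing import List

# Node-name suffixes that the text above associates with native execution.
NATIVE_SUFFIXES = ("Transformer", "NativeFileScan", "VeloxColumnarToRowExec")

# Matches identifier-like tokens such as operator names in a plan.
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def native_nodes(plan_text: str) -> List[str]:
    """Return the identifiers in plan_text that end with a native-engine suffix."""
    return [
        name
        for name in _IDENTIFIER.findall(plan_text)
        if name.endswith(NATIVE_SUFFIXES)
    ]


# Hypothetical plan text for illustration only.
sample_plan = """
VeloxColumnarToRowExec
+- ProjectExecTransformer [id#0L]
   +- FilterExecTransformer (id#0L > 5)
      +- Exchange hashpartitioning(id#0L, 200)
"""

if __name__ == "__main__":
    for node in native_nodes(sample_plan):
        print(node)
```

An empty result for a plan suggests that none of its operators carry the native suffixes, which is worth checking against the fallback behavior described in the next section.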

Fallback mechanism

In some instances, the native execution engine might not be able to execute a query due to reasons such as unsupported features. In these cases, the operation falls back to the traditional Spark engine. This automatic fallback mechanism ensures that there's no interruption to your workflow.

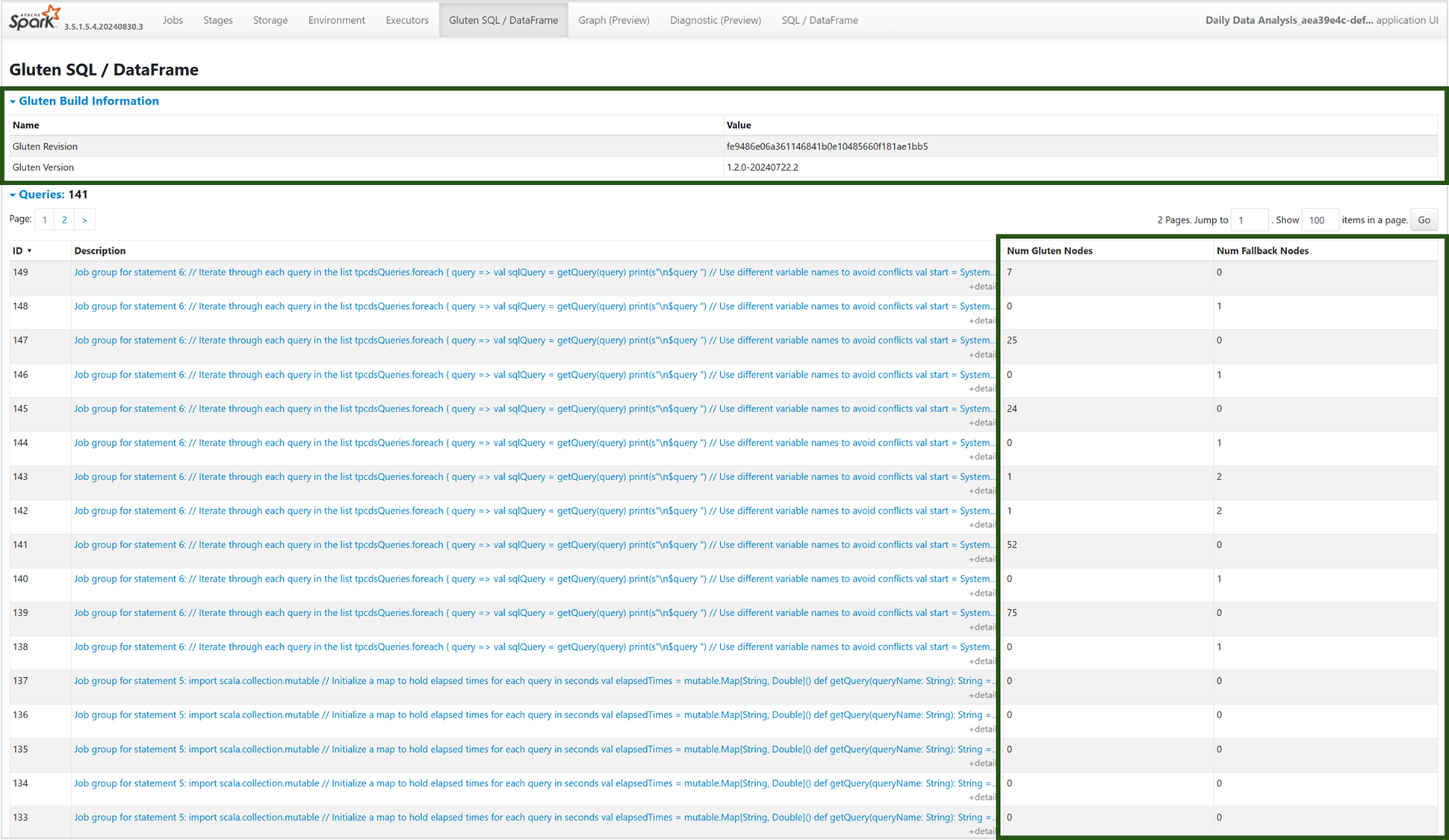

Monitor Queries and DataFrames executed by the engine

To better understand how the Native Execution engine is applied to SQL queries and DataFrame operations, and to drill down to the stage and operator levels, you can refer to the Spark UI and Spark History Server for more detailed information about the native engine execution.

Native Execution Engine Tab

You can navigate to the new 'Gluten SQL / DataFrame' tab to view the Gluten build information and query execution details. The Queries table provides insights into the number of nodes running on the Native engine and those falling back to the JVM for each query.

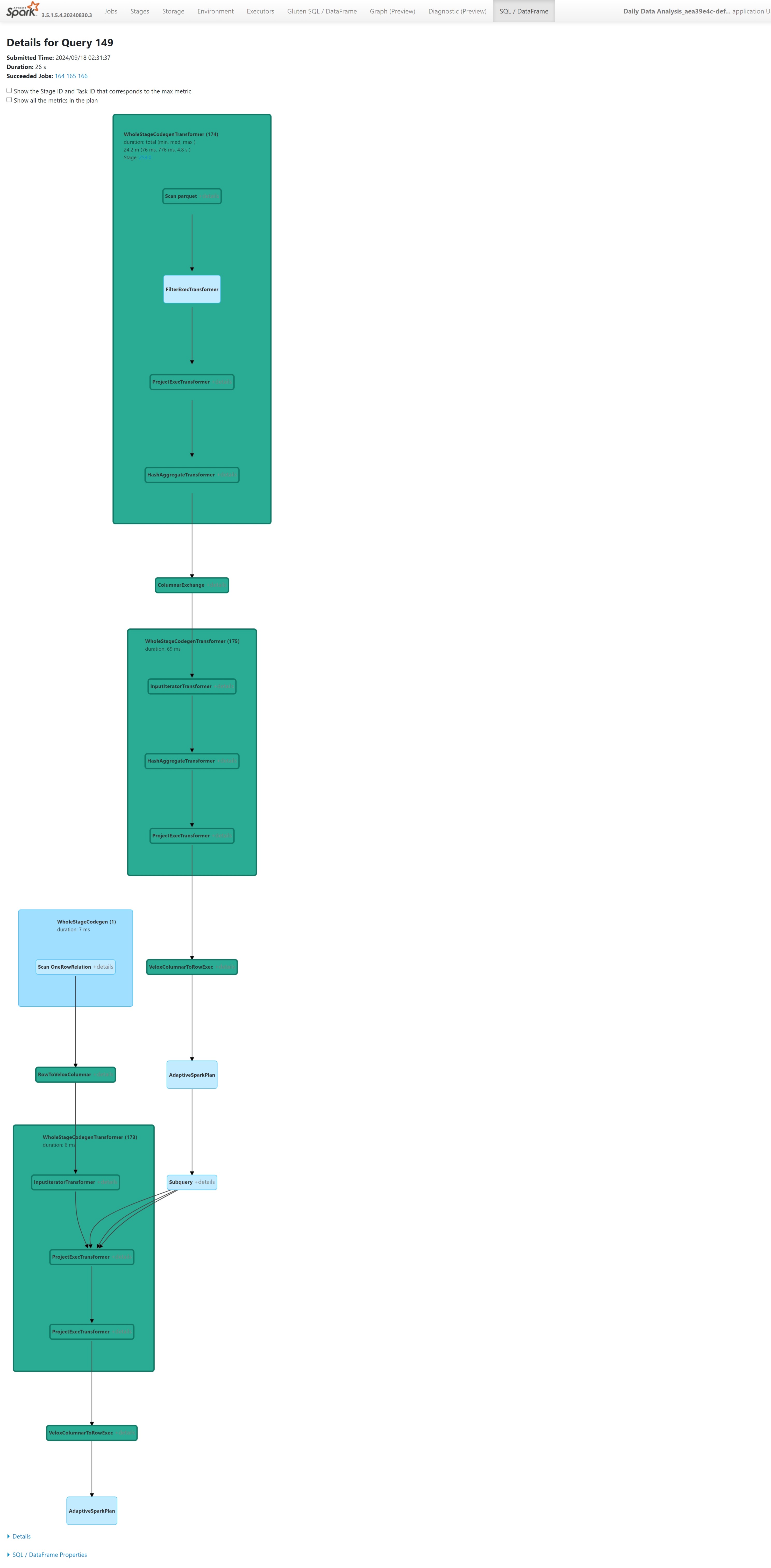

Query Execution Graph

You can also select the query description to view the Apache Spark query execution plan visualization. The execution graph provides native execution details across stages and their respective operations. Background colors differentiate the execution engines: green represents the Native Execution Engine, while light blue indicates that the operation is running on the default JVM Engine.

Limitations

While the Native Execution Engine (NEE) in Microsoft Fabric significantly boosts performance for Apache Spark jobs, it currently has the following limitations:

Existing limitations

- Incompatible Spark features: Native execution engine doesn't currently support user-defined functions (UDFs), the array_contains function, or structured streaming. If these functions or unsupported features are used either directly or through imported libraries, Spark will revert to its default engine.

- Unsupported file formats: Queries against JSON, XML, and CSV formats aren't accelerated by native execution engine. These default back to the regular Spark JVM engine for execution.

- ANSI mode not supported: Native execution engine doesn't support ANSI SQL mode. If enabled, execution falls back to the vanilla Spark engine.

- Date filter type mismatches: To benefit from native execution engine's acceleration, ensure that both sides of a date comparison match in data type. For example, instead of comparing a DATETIME column with a string literal, cast it explicitly as shown: CAST(order_date AS DATE) = '2024-05-20'

Other considerations and limitations

- Decimal to Float casting mismatch: When casting from DECIMAL to FLOAT, Spark preserves precision by converting to a string and parsing it. NEE (via Velox) performs a direct cast from the internal int128_t representation, which can result in rounding discrepancies.

- Timezone configuration errors: Setting an unrecognized timezone in Spark causes the job to fail under NEE, whereas Spark JVM handles it gracefully. For example: "spark.sql.session.timeZone": "-08:00" // May cause failure under NEE

- Inconsistent rounding behavior: The round() function behaves differently in NEE due to reliance on std::round, which doesn't replicate Spark’s rounding logic. This can lead to numeric inconsistencies in rounding results.

- Missing duplicate key check in map() function: When spark.sql.mapKeyDedupPolicy is set to EXCEPTION, Spark throws an error for duplicate keys. NEE currently skips this check and allows the query to succeed incorrectly. Example: SELECT map(1, 'a', 1, 'b'); -- Should fail, but returns {1: 'b'}

- Order variance in collect_list() with sorting: When using DISTRIBUTE BY and SORT BY, Spark preserves the element order in collect_list(). NEE might return values in a different order due to shuffle differences, which can result in mismatched expectations for ordering-sensitive logic.

- Intermediate type mismatch for collect_list() / collect_set(): Spark uses BINARY as the intermediate type for these aggregations, whereas NEE uses ARRAY. This mismatch might lead to compatibility issues during query planning or execution.

- Managed private endpoints required for storage access: When Native Execution Engine (NEE) is enabled and Spark jobs access a storage account through a managed private endpoint, users must configure separate managed private endpoints for both the Blob (blob.core.windows.net) and DFS / File System (dfs.core.windows.net) endpoints, even if they point to the same storage account. A single endpoint cannot be reused for both. This is a current limitation and may require additional network configuration when enabling native execution engine in a workspace that has managed private endpoints to storage accounts.
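To make the rounding limitation above concrete, the following plain-Python sketch contrasts two rounding strategies on the same input. It is an illustration only, not the engine's code: the function names are hypothetical, and the second function is an assumption about how a std::round-style implementation could behave (scale, round half away from zero, scale back), which the text above does not confirm.

```python
import math
from decimal import Decimal, ROUND_HALF_UP


def round_half_up_decimal(x: float, digits: int) -> float:
    """Round using the decimal string form of x with HALF_UP."""
    quantum = Decimal(1).scaleb(-digits)  # digits=2 gives Decimal("0.01")
    return float(Decimal(repr(x)).quantize(quantum, rounding=ROUND_HALF_UP))


def round_scaled_binary(x: float, digits: int) -> float:
    """Scale in binary floating point, round half away from zero, scale back.

    Assumed stand-in for a std::round-based approach; hypothetical.
    """
    scaled = x * 10 ** digits
    rounded = math.copysign(math.floor(abs(scaled) + 0.5), scaled)
    return rounded / 10 ** digits


if __name__ == "__main__":
    # 1.005 * 100 is slightly below 100.5 in binary floating point,
    # so the two strategies disagree on this input.
    print(round_half_up_decimal(1.005, 2))  # 1.01
    print(round_scaled_binary(1.005, 2))    # 1.0
```

If a pipeline depends on exact rounding at such boundaries, comparing results with the native execution engine enabled and disabled on a sample of the data is a reasonable check.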