Reflections on troubleshooting

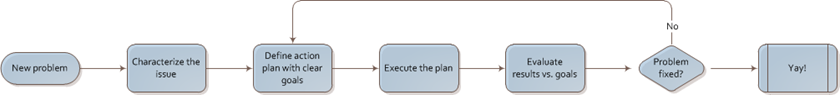

I spend most of my working time troubleshooting technical issues onsite, or managing the troubleshooting process, together with the customer teams and Microsoft’s support people. Doing this so often, I’ve found myself wondering what knowledge I can carry from one problem to the next, and how that knowledge can be organized and used to make my approach more efficient.

Don’t get me wrong, I’m not talking only about technical knowledge. My goal for this post is to reflect on how the troubleshooting process is usually done, the different approaches with the pros and cons of each, and the steps involved in each approach.

I’m not following any particular methodology, nor have I read any literature on this topic; I’m just venting and sharing some of the experiences I’ve had in the past.

“Houston, we have a problem.”

Something similar to this is frequently heard at 9am or 5pm. I’m not sure if there is a statistical correlation between time of the day and problem occurrence, but I do know that the person carrying the message will most likely:

a) not have any information about the problem, except for the fact that he/she has one;

b) not tell you the correct information about the problem;

c) include several problems in one;

d) be very, very nervous or under pressure.

Points a), b) and c) all relate to the way the problem is characterized and scoped, which is covered in the next section. Point d) calls for some management skills to keep the information flowing and to keep everyone involved from panicking. Let’s look at each of these topics in detail.

Detailing the issue

Gathering and organizing the information is one of the most critical steps in the whole process. Your very first step is to find out what the issue actually is. Some crucial questions arise here, such as:

- What is the impact of the issue?

- Does it affect every user?

- What symptoms are you seeing?

- Is it happening all the time?

- Is it happening now?

- Has it happened before? How did we fix it back then? (a good knowledge base is helpful here)

- Was there some change to the system lately? (a good change management process is helpful here)

- What actions have you already taken?

- Is your goal a root-cause analysis or just restoring the service? (setting the expectations with this question is important and may save you from some political problems later)
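The question about recent changes is easier to answer when the change log can be filtered against the time the problem started. Below is a minimal sketch, assuming changes can be exported as simple (timestamp, system, description) records; the records, the two-day lookback and the function name are all hypothetical, not the format of any particular change management tool.

```python
from datetime import datetime, timedelta

# Hypothetical change records: (timestamp, system, description).
# A real change management tool would have its own export format.
CHANGES = [
    (datetime(2024, 3, 4, 22, 0), "web01", "Applied OS patches"),
    (datetime(2024, 3, 5, 7, 30), "sql01", "Changed max memory setting"),
    (datetime(2024, 2, 20, 18, 0), "web02", "Deployed new build"),
]

def recent_changes(changes, incident_start, lookback=timedelta(days=2)):
    """Return the changes made within `lookback` before the incident started,
    most recent first, since those are usually the first suspects."""
    window_start = incident_start - lookback
    suspects = [c for c in changes if window_start <= c[0] <= incident_start]
    return sorted(suspects, key=lambda c: c[0], reverse=True)

if __name__ == "__main__":
    started = datetime(2024, 3, 5, 9, 0)  # when users first reported the issue
    for when, system, what in recent_changes(CHANGES, started):
        print(f"{when:%Y-%m-%d %H:%M}  {system:6}  {what}")
```

A change inside the window is only a suspect, not proof; it simply tells you where to look first.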

I often find myself faced with systems I don’t know anything about, so identifying the pieces of the architecture and how the problematic feature interacts with each of them is important. This gives you an idea of the machines, products, technologies and dependencies involved, and may even hint at the cause of the problem. You don’t need to go into the details of every machine, but typical information such as the OS, the patch level and the way information flows between systems (identifying the client and the server and the protocols involved) should be included.

If the problem affects only some of the system’s components, breaking the system down and mapping the dependencies will help you choose which area to check first.
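One way to use that dependency breakdown is to look for what all the affected components have in common. The sketch below assumes the architecture can be written down as a map from each component to its direct dependencies (the component names are hypothetical). Shared dependencies that no healthy component relies on go to the top of the list; the ones healthy components also use are kept for later rather than ruled out, since a healthy component may exercise a different feature of them.

```python
# Hypothetical architecture: each component maps to its direct dependencies.
DEPENDS_ON = {
    "web-portal": ["app-server"],
    "mobile-api": ["app-server"],
    "reporting": ["sql-server"],
    "app-server": ["sql-server", "auth-service"],
    "auth-service": ["directory"],
    "sql-server": [],
    "directory": [],
}

def closure(component, graph):
    """Return the component plus everything it depends on, directly or indirectly."""
    seen, stack = set(), [component]
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(graph.get(node, []))
    return seen

def suspects(affected, healthy, graph):
    """Split the dependencies shared by every affected component into
    (check first, check later); `affected` must not be empty."""
    shared = set.intersection(*(closure(c, graph) for c in affected))
    used_by_healthy = set().union(*(closure(c, graph) for c in healthy))
    return sorted(shared - used_by_healthy), sorted(shared & used_by_healthy)

if __name__ == "__main__":
    first, later = suspects(["web-portal", "mobile-api"], ["reporting"], DEPENDS_ON)
    print("Check first:", first)
    print("Check later:", later)
```

With the web portal and the mobile API failing while reporting works, the application server, the authentication service and the directory come first, and the SQL server, which reporting also uses, comes later.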

If you’re handling a really critical situation, point d) above comes into play and you should worry about communication. Try to keep everyone aligned, for example by drawing the system on a board and listing the symptoms next to it. This is probably not the best time to stop and write an e-mail, unless you have someone who can help you with that.

Defining an action plan

Assuming that you’ve gathered the information above and you trust it to be accurate, you should now have a clearer picture of what the users are experiencing that deviates from the system’s normal behavior. Your next step is to set up an action plan to start attacking the problem.

The action plan must start with a clear goal, i.e., what you are trying to gather, prove or fix, and then list the steps that will be taken to achieve that goal. Remember the communication concerns mentioned before? If you keep everyone up to date and aligned on the actions that will be performed and the goals you want to achieve, it will probably reduce the tension and help everyone work together. Note that there may be more than one possible action plan, and your first goal will not necessarily be “solve the issue”. After all, if you knew the cause of the problem, it would be easy to fix, right?
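If it helps to make the structure explicit, an action plan boils down to one goal plus a list of steps with owners. This is a minimal sketch under that assumption; the class names, the example goal and the people are hypothetical, and a whiteboard works just as well. The `status` line is the kind of summary worth repeating to everyone involved.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Step:
    description: str
    owner: str            # hypothetical: who performs the step
    done: bool = False

@dataclass
class ActionPlan:
    goal: str             # what we are trying to gather, prove or fix
    steps: List[Step] = field(default_factory=list)

    def status(self) -> str:
        """One-line summary to share with everyone involved."""
        finished = sum(1 for step in self.steps if step.done)
        return f"Goal: {self.goal} ({finished}/{len(self.steps)} steps done)"

    def next_step(self) -> Optional[Step]:
        """First step not done yet, or None when the plan is complete."""
        return next((step for step in self.steps if not step.done), None)

if __name__ == "__main__":
    plan = ActionPlan(
        goal="Prove whether slow logins come from the directory lookup",
        steps=[
            Step("Capture a network trace during a slow login", "Ana", done=True),
            Step("Enable verbose logging on the authentication service", "Rui"),
        ],
    )
    print(plan.status())
    print("Next:", plan.next_step().description)
```

Note that the goal here is to prove something, not to fix it, which matches the point above that the first plan is often about gathering evidence.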

If your plan has the goal of solving the issue, for example through a configuration change, how can you measure whether the issue was solved? What are your metrics? Hard, factual data is important here, because it is what proves the issue is gone.
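As an example of a hard metric, you could compare the error rate of the affected operation before and after the change, over comparable time windows (same time of day, similar load). The sketch below assumes you can count failed and total requests from logs or monitoring; the 1% threshold is a hypothetical value that should be agreed with the customer beforehand.

```python
def error_rate(failed, total):
    """Fraction of failed requests; 0.0 when there was no traffic at all."""
    return failed / total if total else 0.0

def issue_resolved(before, after, max_rate=0.01):
    """`before` and `after` are (failed, total) counts from comparable windows.
    The fix only counts as proven if the error rate dropped AND is now at or
    below the agreed threshold (hypothetical default: 1%)."""
    rate_before = error_rate(*before)
    rate_after = error_rate(*after)
    return rate_after < rate_before and rate_after <= max_rate

if __name__ == "__main__":
    print(issue_resolved(before=(120, 2000), after=(8, 2100)))   # 6% -> ~0.4%
    print(issue_resolved(before=(120, 2000), after=(40, 2000)))  # 6% -> 2%
```

The second case shows why both conditions matter: the situation improved, but not enough to claim the issue is gone.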

There are different approaches to investigating a problem. If you’re really comfortable with the technology or system and you suspect some part of it to be the root cause, you could take a bottom-up approach and start by going really deep on one or a few components. If your gut feeling is right, this will be a fast path to success, but if it’s wrong it could turn out to be a waste of time. Doing this well takes some experience with the technology and a good set of similar problems under your belt.

The opposite approach is, naturally, the top-down approach. It starts by looking at indicators and monitoring data from the systems at a very high level and digging deeper whenever you find something strange. If you don’t know where to start, this can be a good way to organize the work, but it may take some time to get to the core of things and find really interesting information.
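The first pass of a top-down investigation can be as simple as comparing high-level indicators against their usual values and digging into the biggest deviations first. This is a minimal sketch, assuming you have a baseline for each indicator; the indicator names, values and the 50% tolerance are hypothetical.

```python
# Hypothetical high-level indicators: usual values vs. what we see now.
BASELINE = {"cpu_pct": 35.0, "disk_queue": 2.0,
            "requests_per_s": 400.0, "avg_response_ms": 180.0}
OBSERVED = {"cpu_pct": 38.0, "disk_queue": 9.0,
            "requests_per_s": 390.0, "avg_response_ms": 950.0}

def anomalies(observed, baseline, tolerance=0.5):
    """Return (indicator, relative deviation) pairs that differ from the
    baseline by more than `tolerance`, largest deviation first."""
    flagged = []
    for name, usual in baseline.items():
        if name in observed and usual:  # skip missing or zero baselines
            deviation = abs(observed[name] - usual) / usual
            if deviation > tolerance:
                flagged.append((name, round(deviation, 2)))
    return sorted(flagged, key=lambda item: item[1], reverse=True)

if __name__ == "__main__":
    for name, deviation in anomalies(OBSERVED, BASELINE):
        print(f"dig into {name}: {deviation:.0%} away from baseline")
```

Here response time and disk queue length stand out while CPU and traffic look normal, which suggests digging into storage before anything else.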

Another way to plan your work is something I find quite similar to binary search. As in the algorithm, try to divide the system in half (or at least into large enough parts) and work top-down on each one. If you find that one part of the system is working correctly, you can rule it out completely and focus on the other part. Once you have isolated a few components, it’s easier to choose the ones you want to dig into. I try to use this pattern when I’m troubleshooting because I feel it’s usually more effective. It requires a good knowledge of the way the different components interface and communicate, especially the transport and data protocols, and of which monitoring and logging data is associated with each interface.
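To make the analogy concrete, picture a request passing through a chain of components, with a check available at each interface (a log entry, a network trace, a monitoring counter). The sketch below assumes the fault propagates, meaning every checkpoint before the faulty one looks healthy and every checkpoint from it onwards does not; with intermittent faults that assumption breaks and the search can mislead. The checkpoint names and the `looks_healthy` check are hypothetical stand-ins for whatever inspection you would actually perform.

```python
from typing import Callable, Optional, Sequence

def first_failing(checkpoints: Sequence[str],
                  looks_healthy: Callable[[str], bool]) -> Optional[str]:
    """Binary search for the first checkpoint where the data looks wrong.
    Returns None if every checkpoint looks healthy."""
    lo, hi = 0, len(checkpoints)
    while lo < hi:
        mid = (lo + hi) // 2
        if looks_healthy(checkpoints[mid]):
            lo = mid + 1   # everything up to mid works: rule that half out
        else:
            hi = mid       # already broken here: the fault is at mid or earlier
    return checkpoints[lo] if lo < len(checkpoints) else None

if __name__ == "__main__":
    pipeline = ["client", "load-balancer", "web-server", "app-server", "database"]
    broken = {"app-server", "database"}  # hypothetical: bad data from app-server on
    checked = []

    def probe(checkpoint):
        checked.append(checkpoint)       # record which interfaces we inspected
        return checkpoint not in broken

    print("First failing:", first_failing(pipeline, probe))
    print("Checked:", checked)
```

Only three of the five interfaces need to be inspected to find the application server; the savings grow with the number of components between client and back end.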

Regardless of the approach you choose, follow your action plan, gather the information you need and proceed to the next step.

Evaluate your last move

Once you’ve carried out the action plan, it’s time to evaluate what was done and the information that was collected. Recall the goals you established for the plan: were they accomplished?

If your plan was to gather more information, what does that information show? Does it point to a suspect, or does everything look normal? If you were planning to change a setting to fix the problem, did it work?

Start over or celebrate!

Troubleshooting, much like the scientific method, is an iterative task, and you may need to go through the last two steps several times before you understand what’s going on.

If your action plan did not work, or if it only showed the need for further actions, plan them the same way you did before and set the wheels in motion. If the problem is gone, your work is done.

At this point, it may be a good idea to debrief and discuss what happened and to work proactively on how the problem can be prevented or at least predicted. This way, you’ll learn something from the situation that can potentially save time, money and the day later on.

These were my thoughts on managing troubleshooting processes based on my personal experience. Feel free to comment and share yours.