Windows Azure Storage Abstractions and their Scalability Targets

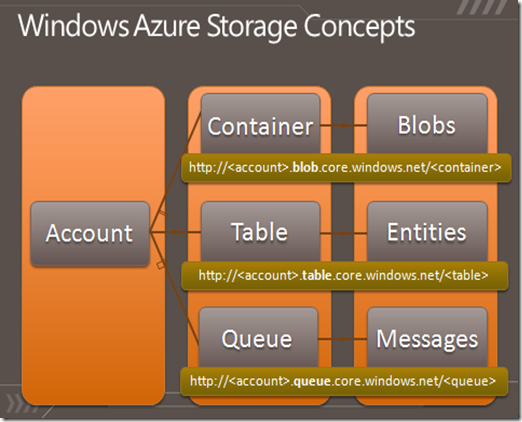

The four object abstractions Windows Azure Storage provides for application developers are:

- Blobs – Provides a simple interface for storing named files along with metadata for the file.

- Tables – Provides massively scalable structured storage. A Table is a set of entities, which contain a set of properties. An application can manipulate the entities and query over any of the properties stored in a Table.

- Queues – Provide reliable storage and delivery of messages for an application to build loosely coupled and scalable workflow between the different parts (roles) of your application.

- Drives – Provides durable NTFS volumes for Windows Azure applications to use. This allows applications to use existing NTFS APIs to access a network attached durable drive. Each drive is a network attached Page Blob formatted as a single volume NTFS VHD. In this post, we do not focus on drives, since their scalability is that of a single blob.

The following shows the Windows Azure Storage abstractions and the URIs used for Blobs, Tables and Queues. In this post we will (a) go through each of these concepts, (b) describe how they are partitioned, and (c) talk about the scalability targets for these storage abstractions.

Storage Accounts and Picking their Locations

In order to access any of the storage abstractions you first need to create a storage account by going to the Windows Azure Developer Portal. When creating the storage account you can specify what location to place your storage account in. The six locations we currently offer are:

- US North Central

- US South Central

- Europe North

- Europe West

- Asia East

- Asia Southeast

As a best practice, you should choose the same location for your storage account and your hosted services, which you can also do in the Developer Portal. This allows the computation to have high bandwidth and low latency to storage, and the bandwidth is free between computation and storage in the same location.

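Also shown in the above slide is the URI used to access each data object:

- Blobs – https://<account>.blob.core.windows.net/<container>/<blobname>

- Tables – https://<account>.table.core.windows.net/<TableName>

- Queues – https://<account>.queue.core.windows.net/<QueueName>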

The first thing to notice is that the storage account name you registered in the Developer Portal is the first part of the hostname. DNS uses it to direct your request to the location that holds all of the storage data for that storage account. Therefore, all of the requests to that storage account (inserts, updates, deletes, and gets) go to that location to access your data. Finally, notice the keywords “blob”, “table” and “queue” in the hostnames. They direct your request to the appropriate Blob, Table or Queue service in that location. Note that since Blob, Table and Queue are separate services, they each have their own namespace under the storage account. This means that in the same storage account you can have a Blob Container, a Table and a Queue each called “music”.

Now that you have a storage account, you can store all of your blobs, entities and messages in that storage account. A storage account can hold up to 100TBs of data in it. There is no other storage capacity limit for a storage account. In particular, there is no limit on the number of Blob Containers, Blobs, Tables, Entities, Queues or Messages that can be stored in the account, other than they must all add up to be under 100TBs.

Windows Azure Blobs

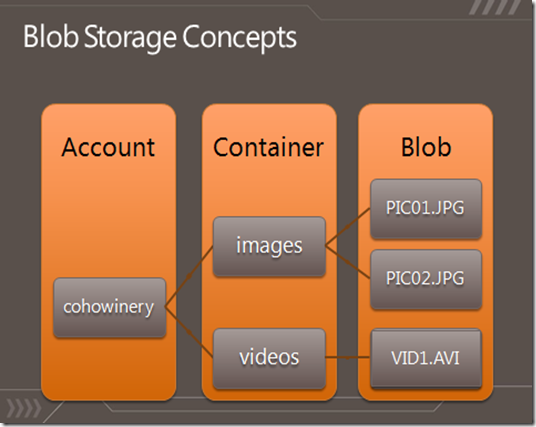

The figure below depicts the storage concepts of Windows Azure Blob, where we have a storage account called “cohowinery” and inside of this account we created a Blob Container called “images” and put two pictures in that blob container called “pic01.jpg” and “pic02.jpg”. We also created a second blob container called “videos” and stored a blob called “vid1.avi” there.

- Storage Account – All access to Windows Azure Storage is done through a storage account.

- This is the highest level of the namespace for accessing blobs

- An account can have many Blob Containers

- Blob Container – A container provides a grouping of a set of blobs. The container name is scoped by the account.

- Sharing policies are set at the container level, where a container can be set to private or to be publicly accessible. When a container is set to Public, all its contents can be read by anyone without requiring authentication. When a container is Private, authentication is required to access the blobs in that container.

- Containers can also have metadata associated with them. Metadata is in the form of <name, value> pairs, and they are up to 8KB in size per container.

- The ability to list all of the blobs within the container is also provided.

- Blob – Blobs are stored in and scoped by Blob Containers. Blobs can have metadata associated with them, which are <name, value> pairs, and they are up to 8KB in size per blob. The blob metadata can be set and retrieved separately from the blob data bits.

The above namespace is used to perform all access to Windows Azure Blobs. The URI for a specific blob is structured as follows:

https://<account>.blob.core.windows.net/<container>/<blobname>

The storage account name is specified as the first part of the hostname followed by the keyword “blob”. This sends the request to the part of Windows Azure Storage that handles blob requests. The host name is followed by the container name, followed by “/”, and then the blob name. Accounts and containers have naming restrictions, for example, the container name cannot contain a “/”.
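As a rough illustration of the URI structure described above, here is a minimal Python sketch. The helper name `blob_uri` is hypothetical (it is not part of any Azure SDK), and the only naming restriction it checks is the one mentioned in this post, that a container name cannot contain a “/”.

```python
from urllib.parse import quote


def blob_uri(account: str, container: str, blob_name: str) -> str:
    """Build the URI of a blob from its account, container, and blob name.

    Hypothetical helper for illustration only; real accounts and containers
    have further naming restrictions that are not checked here.
    """
    if "/" in container:
        raise ValueError("a container name cannot contain '/'")
    # Percent-encode the blob name but leave "/" alone, since everything
    # after the container segment is treated as the blob name.
    return (
        f"https://{account}.blob.core.windows.net/"
        f"{container}/{quote(blob_name, safe='/')}"
    )


print(blob_uri("cohowinery", "images", "pic01.jpg"))
```

For the example account in this post, the call above yields the URI of “pic01.jpg” in the “images” container.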

There are two types of blobs supported:

- Block Blobs – targeted at streaming workloads.

- Each blob consists of a sequence/list of blocks.

- Max block blob size is 200GB

- Commit-based Update Semantics – Modifying a block blob is a two-phase update process. First, blocks are uploaded as uncommitted blocks for the blob. Then, after they are all uploaded, the blocks to add/change/remove are committed via a PutBlockList to create the updated blob. In other words, you upload all changes and then commit them atomically.

- Range reads can be from any byte offset in the blob.

- Page Blobs – targeted at random write workloads.

- Each blob consists of an array/index of pages.

- Max page blob size is 1TB

- Immediate Update Semantics – As soon as a write request for a sequential set of pages succeeds in the blob service, the write has committed, and success is returned back to the client. The update is immediate, so there is no commit step as there is for block blobs.

- Range reads can be done from any byte offset in the blob.
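To make the difference between the two update semantics concrete, here is a small in-memory Python model. It is only a sketch of the behavior described above, not the service's implementation: the class and method names are hypothetical, the commit step always prefers the most recently uploaded version of a block, and the 512-byte page size is an assumption of this sketch (the post does not state a page size).

```python
PAGE_SIZE = 512  # assumption for this sketch; not stated in the post


class BlockBlobModel:
    """Commit-based semantics: uploaded blocks are invisible until committed."""

    def __init__(self):
        self.uncommitted = {}  # block id -> bytes, staged but not readable
        self.committed = {}    # block id -> bytes, part of the readable blob
        self.block_list = []   # ordered block ids making up the blob

    def put_block(self, block_id, data):
        self.uncommitted[block_id] = data

    def put_block_list(self, block_ids):
        # Resolve every id first so a bad id leaves the blob unchanged.
        resolved = {}
        for block_id in block_ids:
            if block_id in self.uncommitted:
                resolved[block_id] = self.uncommitted[block_id]
            elif block_id in self.committed:
                resolved[block_id] = self.committed[block_id]
            else:
                raise KeyError(f"unknown block id: {block_id}")
        self.committed = resolved
        self.block_list = list(block_ids)
        self.uncommitted.clear()

    def read(self, offset=0, length=None):
        data = b"".join(self.committed[b] for b in self.block_list)
        end = len(data) if length is None else offset + length
        return data[offset:end]


class PageBlobModel:
    """Immediate semantics: a successful page write is visible right away."""

    def __init__(self, size):
        if size % PAGE_SIZE:
            raise ValueError("size must be a multiple of the page size")
        self.data = bytearray(size)

    def write_pages(self, offset, data):
        if offset % PAGE_SIZE or len(data) % PAGE_SIZE:
            raise ValueError("writes must be page aligned")
        if offset + len(data) > len(self.data):
            raise ValueError("write exceeds blob size")
        self.data[offset:offset + len(data)] = data

    def read(self, offset, length):
        return bytes(self.data[offset:offset + length])
```

In this model, a reader of a `BlockBlobModel` keeps seeing the old contents until `put_block_list` succeeds, while a reader of a `PageBlobModel` sees each write as soon as `write_pages` returns.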

Windows Azure Tables

The figure below depicts the storage concepts for Windows Azure Tables, where we have a storage account called “cohowinery” and inside of this account we created a Table called “customers” and put entities representing customers into that table, where the entities have properties like their “name”, “email”, etc. We also created a table called “winephotos” and the entities stored in that table contain properties of “PhotoID”, “Date”, etc.

The following summarizes the data model for Windows Azure Table:

- Storage Account – All access to Windows Azure Storage is done through a storage account.

- This is the highest level of the namespace for accessing tables

- An account can have many Tables

- Table – contains a set of entities. Table names are scoped by the account. An application may create many tables within a storage account.

- Entity (Row) – Entities (an entity is analogous to a "row") are the basic data items stored in a table. An entity contains a set of properties. Each entity has two key properties, “PartitionKey” and “RowKey”, which together form the unique key for the entity.

- An entity can hold up to 255 properties

- Combined size of all of the properties in an entity cannot exceed 1MB. This size includes the size of the property names as well as the size of the property values or their types.

- Property (Column) – This represents a single value in an entity. Property names are case sensitive.

- PartitionKey – The first key property of every table. The system uses this key to automatically distribute and load balance the table’s entities over many servers.

- RowKey – A second key property for the table. This is the unique ID of the entity within the partition it belongs to. The PartitionKey combined with the RowKey uniquely identifies an entity in a table.

- Timestamp – Every entity has a version maintained by the system. This is used for optimistic concurrency.

- Sort Order – There is a single index for Windows Azure Tables, where all entities in a table are sorted by PartitionKey and then RowKey. This means that queries specifying these keys will be more efficient, and all results are returned sorted by PartitionKey and then by RowKey.

- Types – PartitionKey and RowKey must be of type string and the rest of the properties can be any of the following types: Binary, Bool, DateTime, Double, GUID, Int, Int64, String.

- No Fixed Schema – No schema is stored by Windows Azure Table, so all of the properties are stored as <name, typed value> pairs. This means that two entities in the same table can have very different properties. A table can even have two entities with the same property name, but different types for the property value. However, property names must be unique within a single entity.

The above namespace is used to perform all access to Windows Azure Table. The URI for a specific table access is structured as follows:

https://<account>.table.core.windows.net/<TableName>

The storage account name is specified as the first part of the hostname followed by the keyword “table”. This sends the request to the part of Windows Azure Storage that handles table requests. The host name is followed by the table name, and then the rest of the Uri will specify what entity is being operated on or the query string to be looked up.
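The data model above (string keys, no fixed schema, a single sort order) can be sketched with a small in-memory Python class. `TableModel` and its methods are hypothetical names used for illustration; this is not the Table service API, and it ignores the property-count and size limits.

```python
class TableModel:
    """In-memory sketch of a table keyed by (PartitionKey, RowKey)."""

    def __init__(self):
        self._entities = {}  # (PartitionKey, RowKey) -> dict of properties

    def insert(self, entity):
        key = (entity["PartitionKey"], entity["RowKey"])
        if not all(isinstance(part, str) for part in key):
            raise TypeError("PartitionKey and RowKey must be strings")
        if key in self._entities:
            raise KeyError(f"entity already exists: {key}")
        # No schema is enforced: any other properties are stored as given.
        self._entities[key] = dict(entity)

    def query(self, partition_key=None):
        # Results always come back sorted by PartitionKey, then RowKey.
        keys = sorted(
            key for key in self._entities
            if partition_key is None or key[0] == partition_key
        )
        return [self._entities[key] for key in keys]


customers = TableModel()
customers.insert({"PartitionKey": "WA", "RowKey": "002", "name": "Bob"})
customers.insert({"PartitionKey": "CA", "RowKey": "001", "name": "Al",
                  "email": "al@example.com"})
# Same property name, different type: allowed because there is no schema.
customers.insert({"PartitionKey": "WA", "RowKey": "001", "name": 42})
```

Querying `customers` returns the “CA” entity first, followed by the two “WA” entities in RowKey order, regardless of insertion order.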

Windows Azure Queues

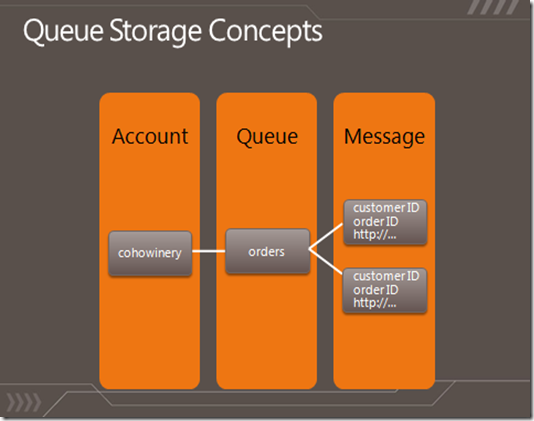

The figure below depicts the storage concepts of Windows Azure Queues, where we have a storage account called “cohowinery” and inside of this account we created a Queue called “orders”, which stores the orders in messages that are waiting to be processed. The messages in the example contain the customer ID, order ID, a link to order details, etc.

- Storage Account – All access to Windows Azure Storage is done through a storage account.

- This is the highest level of the namespace for accessing queues

- An account can have many Queues

- Queue – A queue contains many messages. The queue name is scoped by the account.

- There is no limit on the number of messages stored in a queue (the only limit is the 100TB size limit on the storage account).

- A message is stored for at most a week. The system will garbage collect the messages that are more than a week old.

- Queues can have metadata associated with them. Metadata is in the form of <name, value> pairs, and they are up to 8KB in size per queue.

- Messages – Messages are stored in queues. Each message can be up to 8KB in size. To store larger data, one can store the data in Azure Blob store or Azure Table store, and then store the blob/entity name in the message. Note that when you put a message into the store, the message data can be binary. But when you get the messages back from the store, the response is in XML format, and the message data is returned as base64 encoded.

The URI for a specific queue is structured as follows:

https://<account>.queue.core.windows.net/<QueueName>

The storage account name is specified as the first part of the hostname followed by the keyword “queue”. This sends the request to the part of Windows Azure Storage that handles queue requests. The host name is followed by the queue name.
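The advice above about large payloads (store the data in a blob and put only its name in the message) can be sketched as follows. Everything here is illustrative: the function names, the one-byte “I”/“R” marker, and the dict standing in for the blob store are all assumptions of this sketch, and it treats the 8KB limit as applying to the raw message bytes, which the post does not spell out.

```python
import base64

MAX_MESSAGE_BYTES = 8 * 1024  # per-message limit quoted in this post


def build_message(payload: bytes, blob_store: dict, blob_name: str) -> bytes:
    """Return the message body: the payload inline, or a reference to a blob."""
    if len(payload) + 1 <= MAX_MESSAGE_BYTES:
        return b"I" + payload                # "I" marks an inline payload
    blob_store[blob_name] = payload          # stand-in for uploading a blob
    return b"R" + blob_name.encode("utf-8")  # "R" marks a blob reference


def resolve_message(message: bytes, blob_store: dict) -> bytes:
    """Recover the original payload from a message built by build_message."""
    kind, body = message[:1], message[1:]
    if kind == b"I":
        return body
    if kind == b"R":
        return blob_store[body.decode("utf-8")]
    raise ValueError("unknown message kind")


def decode_received(message_text: str) -> bytes:
    """Message data comes back base64 encoded, so decode it on receipt."""
    return base64.b64decode(message_text)
```

A worker would call `decode_received` on the text taken from the XML response and then `resolve_message` to get the actual work item.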

Partitions

A very important concept to understand about the storage abstractions is their partitioning. Every data object (Blobs, Table Entities and Queue Messages) has a partition key. This is how we locate the objects in our service when accessing them, and how we load balance and partition the objects across our servers to meet the traffic needs of those objects. The following is the partition key used for our three storage abstractions:

- Blobs – ContainerName + BlobName

- Entities – TableName + PartitionKey

- Messages - QueueName

Our system automatically load balances these objects across our servers based upon these partitions. All objects with the same partition key value are grouped into the same partition and are accessed from the same partition server (see the upcoming post on Windows Azure Storage Architecture Overview). Grouping objects into partitions allows us to (a) easily perform atomic operations across objects in the same partition since their access goes to the same server, and (b) have caching locality of objects within the same partition to benefit from data access locality.

So what does this mean for Blobs, Entities and Messages with the above partition keys?

- Blobs – Since the partition key is down to the blob name, we can load balance access to different blobs across as many servers as needed in order to scale out access to them. This allows the containers to grow as large as you need them to (within the storage account space limit). The tradeoff is that we don’t provide the ability to do atomic transactions across multiple blobs.

- Entities – Every Table has an application defined PartitionKey used by each entity. This means we can load balance the entities within a table across different servers at PartitionKey boundaries (meaning entities with different PartitionKey values). Then for the entities within the same partition (they have the same PartitionKey value) we allow applications to perform atomic batch transactions over them, since they are served from the same server.

- Messages – Since the partition key is the queue name, all messages to a given queue are processed by a single partition server, but different queues can be processed by different servers to spread out the load to the different queues a storage account may have.
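The partition keys listed above can be written down directly. The following Python sketch uses hypothetical function names and represents each partition key as a tuple (with a leading service tag, since the three services have separate namespaces); how the service actually encodes these keys is not described in this post.

```python
from collections import defaultdict


def blob_partition(container, blob_name):
    return ("blob", container, blob_name)


def entity_partition(table, partition_key):
    return ("table", table, partition_key)


def message_partition(queue):
    return ("queue", queue)


def group_by_partition(keyed_objects):
    """Group (partition key, object) pairs by their partition key."""
    groups = defaultdict(list)
    for key, obj in keyed_objects:
        groups[key].append(obj)
    return dict(groups)


groups = group_by_partition([
    (entity_partition("customers", "WA"), "customer 1"),
    (entity_partition("customers", "WA"), "customer 2"),
    (entity_partition("customers", "CA"), "customer 3"),
    (blob_partition("images", "pic01.jpg"), "pic01"),
    (blob_partition("images", "pic02.jpg"), "pic02"),
    (message_partition("orders"), "message 1"),
    (message_partition("orders"), "message 2"),
])
```

Here the two “WA” customers land in one group (so they could take part in the same atomic batch), each blob is a partition of its own, and both messages share the single “orders” queue partition.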

Scalability and Performance Targets

Now that we have given a high level description of storage accounts, storage abstractions and how they are grouped into partitions, we want to talk about the scalability targets for storage accounts, objects and their partitions.

The following are the scalability targets for a single storage account:

- Capacity – Up to 100 TBs

- Transactions – Up to 5,000 entities/messages/blobs per second

- Bandwidth – Up to 3 gigabits per second

The 100TB is a strict limit for a storage account, whereas the transactions and bandwidth are the current targets we’ve built the system to for a single storage account. Note, the actual transaction and bandwidth achieved by your storage account will very much depend upon the size of objects, access patterns, and the type of workload your application exhibits. To go above these targets, a service should be built to use multiple storage accounts, and partition the blob containers, tables and queues and objects across those storage accounts. By default, a subscription gets 5 storage accounts, and you can contact customer support to get more storage accounts if you need to store more than that (e.g., petabytes) of data.

A hosted service is expected to need only as many storage accounts as it takes to meet its performance targets given the above, which is typically a handful of storage accounts, and up to 10s of storage accounts to store PBs of data. The point here is that a hosted service should not plan on creating a separate storage account for each of its customers. Instead, the hosted service should either represent a customer within a storage account (e.g., each customer could have its own Blob Container), or map/hash the customer’s data across the hosted service’s storage accounts.
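One simple way to map/hash customers across a fixed set of storage accounts is sketched below in Python. The function name and account names are hypothetical, and plain modulo hashing is just one possible choice: with this approach, changing the number of accounts remaps most customers, so a real service would need a plan for moving data or a more stable mapping.

```python
import hashlib
from typing import Sequence


def account_for_customer(customer_id: str, accounts: Sequence[str]) -> str:
    """Pick a storage account for a customer using a stable hash."""
    if not accounts:
        raise ValueError("at least one storage account is required")
    # Use a cryptographic hash rather than Python's built-in hash(), which
    # is randomized per process for strings and so is not stable across runs.
    digest = hashlib.sha256(customer_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(accounts)
    return accounts[index]


ACCOUNTS = ["cohowinery01", "cohowinery02", "cohowinery03"]  # hypothetical
chosen = account_for_customer("customer-1234", ACCOUNTS)
```

The same customer ID always maps to the same account, so the service can locate a customer’s containers, tables and queues without keeping a lookup table.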

Within a storage account, all of the objects are grouped into partitions as described above. Therefore, it is important to understand the performance targets of a single partition for our storage abstractions, which are:

- Single Queue – all of the messages in a queue are accessed via a single queue partition. A single queue is targeted to be able to process:

- Up to 500 messages per second

- Single Table Partition – a table partition is all of the entities in a table with the same partition key value, and most tables have many partitions. The throughput target for a single partition is:

- Up to 500 entities per second

- Note, this is for a single partition, and not a single table. Therefore, a table with good partitioning can process up to a few thousand requests per second (up to the storage account target).

- Single Blob – the partition key for blobs is the “container name + blob name”, so we can partition blobs down to a single blob to spread out blob access across our servers. The target throughput of a single blob is:

- Up to 60 MBytes/sec

The above throughputs are the high end targets for the current system. What can be achieved by your application very much depends upon the size of the objects being accessed, the operations (workload) and the access patterns. We encourage all services to test the performance at the partition level for their workload.
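As a back-of-the-envelope aid, the targets above can be turned into a quick capacity estimate. The Python sketch below uses hypothetical function names and simply divides by the published “up to” figures, so the results are lower bounds on the number of partitions or queues; actual throughput depends on object size and workload, as noted above.

```python
import math

ACCOUNT_TARGET_PER_SEC = 5000    # transactions per second per storage account
PARTITION_TARGET_PER_SEC = 500   # entities per second per table partition
QUEUE_TARGET_PER_SEC = 500       # messages per second per queue


def table_partitions_needed(entities_per_sec):
    """Minimum number of table partitions for a target entity rate."""
    if entities_per_sec > ACCOUNT_TARGET_PER_SEC:
        raise ValueError("above the single-account target; "
                         "spread the load over multiple storage accounts")
    return max(1, math.ceil(entities_per_sec / PARTITION_TARGET_PER_SEC))


def queues_needed(work_items_per_sec, items_per_message=1):
    """Minimum number of queues when batching work items into messages."""
    messages_per_sec = math.ceil(work_items_per_sec / items_per_message)
    return max(1, math.ceil(messages_per_sec / QUEUE_TARGET_PER_SEC))


print(table_partitions_needed(5000))               # 10 partitions
print(queues_needed(2000))                         # 4 queues
print(queues_needed(2000, items_per_message=4))    # 1 queue
```

For example, reaching the full account target of 5,000 entities per second requires the load to be spread over at least 10 table partitions, and batching four work items per message lets a single queue carry 2,000 work items per second.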

When your application reaches the limit of what a partition can handle for your workload, it will start to get back “503 server busy” responses. When this occurs, the application should use exponential backoff for retries. The exponential backoff allows the load on the partition to decrease, and smooths out spikes in traffic to the partition. If this is a regular occurrence, then the application should try to improve its data partitioning and throughput as follows for the different storage abstractions:

- Blobs – consider using Windows Azure Content Delivery Network for delivering anonymous access to hot blobs to achieve higher throughput for commonly accessed blobs.

- Tables – use a more finely grained PartitionKey for the table in order to allow us to automatically spread the table partitions across more servers.

- Queues – batch together multiple work items into a single message to improve queue throughput or use multiple queues.
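The exponential backoff recommended above for “503 server busy” responses can be sketched in Python as follows. The `ServerBusy` exception, the function name, and all of the default values are assumptions made for illustration (a real client would map the HTTP 503 status from its storage library onto something like this), and the added random jitter is a common refinement rather than something this post prescribes.

```python
import random
import time


class ServerBusy(Exception):
    """Stand-in for a '503 server busy' response from the storage service."""


def with_backoff(operation, max_attempts=5, base_delay=0.1, max_delay=10.0,
                 sleep=time.sleep):
    """Call operation(), retrying with exponential backoff on ServerBusy."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except ServerBusy:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller see the failure
            # The delay doubles on each retry, capped at max_delay.
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Random jitter keeps many clients from retrying in lockstep.
            sleep(delay + random.uniform(0, delay))
```

A caller wraps each storage request, for example `with_backoff(lambda: send_request())`, where `send_request` is whatever function issues the request and raises `ServerBusy` on a 503.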

See the next set of upcoming posts on best practices for scaling the performance of Blobs, Tables and Queues.

Finally, one question we get is what the expected latency is for accessing small objects:

- The latency is typically around 100ms when accessing small objects (less than 10s of KB) from Windows Azure hosted services that are located in the same location (sub-region) as the storage account.

- Once in a while the latency can increase to a few seconds during heavy spikes while automatic load balancing kicks in (see the upcoming post on Windows Azure Storage Architecture and Availability).

Brad Calder

Comments

Anonymous

May 10, 2010

500 transactions per second for the queue is really really low. Classical non-cloud systems are doing much better without even distributing the load (just a mirror for continuous backup). I would expect at least 100x better scalability. Basically, Twitter should be able to run their short messages on Azure Queues. The situation is even more frustrating because increasing queue throughput through queue sharding is not that hard actually (and could be done on the cloud side actually).

Anonymous

May 14, 2010

@Joannes Yes, that is a good point. What we recommend is that customers represent multiple work items per message or to use multiple queues to get higher throughput out of queues. Note, we have architected the system to support higher throughputs for queues over time, and we need to see where exposing that rates in terms of customer priorities. Please rate the importance here relative to the other ideas: http://www.mygreatwindowsazureidea.com/forums/34192-windows-azure-feature-voting

Thanks again for the feedback.

Brad

Anonymous

August 10, 2010

For the transaction and bandwidth account scalability targets, as well as the latency during load balancing, can you quantify "a few"? Some people define it as 2-3, others say 3-5, etc.

Anonymous

August 26, 2010

Great post, very informative. One comment. The article talks a lot about upper limits on performance--up to 500 transactions per second, up to 60MB/sec, etc. I'd be much more interested in lower limits--that's what has a business impact on my application. I understand there are edge cases where delays can kick in, but these could easily be avoided e.g. by using percentiles. I also understand that these figures might not form part of a binding SLA (but it would be great if they did). Even so, would it be possible to publish some target figures, or measured statistics?

Anonymous

November 03, 2010

For Table Storage, you say the throughput target for a single partition is up to 500 transactions per second. How do entity group transactions fit into that? I know for billing purposes an entity group transaction only counts once, but is that true for throughput as well, or will 100 entities in a single entity group transaction contribute 100 transactions towards the throughput target?

Anonymous

November 04, 2010

@Joe

- For transactions it is around 5,000 transactions per second for a single storage account.

- For bandwidth it is around 3 Gigabits per second for a single storage account.

- In terms of load balancing a partition, if I understood your question correctly, it is on the order of 10-15 seconds.

@Jonathan It really depends upon the application, its access patterns, size of transactions, mix of transactions, etc. Our goal is to provide the scalability targets listed for as many workloads as possible, but applications need to benchmark their performance to understand what it is. In general, applications should be able to achieve these scalability targets and consistently see those targets if they follow the partitioning best practices we’ve gone over in the PDC talks, this blog, and technical papers.

@Zach Sorry, I should have explained this better. The 500 transactions per second equates to:

- 500 entities per second for a single Table partition. So if you are doing batch requests with 100 entities per batch request, then that equates to being able to do 5 batch requests per second with 100 entities in each batch request to a single Table partition.

- 500 messages per second for a single Queue

Thanks, Brad

Anonymous

May 10, 2011

Check some code here: partitioncloudqueue.codeplex.com for how to scale past 500 messages per second for a single Azure Queue

Anonymous

June 11, 2013

If the post on this page is of interest, this more recent post with more current information for newer storage accounts may also be of interest: blogs.msdn.com/.../windows-azure-s-flat-network-storage-and-2012-scalability-targets.aspx

Anonymous

February 26, 2015

Taking a hypothetical scenario, if I have 2 tables completely in a single partition, then if I understand correctly each table gets 500 entities per second scalability (which includes ADD/GET/UPDATE of entities)?

Anonymous

March 16, 2015

Aditya, you can't have two tables in a single partition. Each entity's partition key is the Table Name + Partition Key.