Language models

Overview

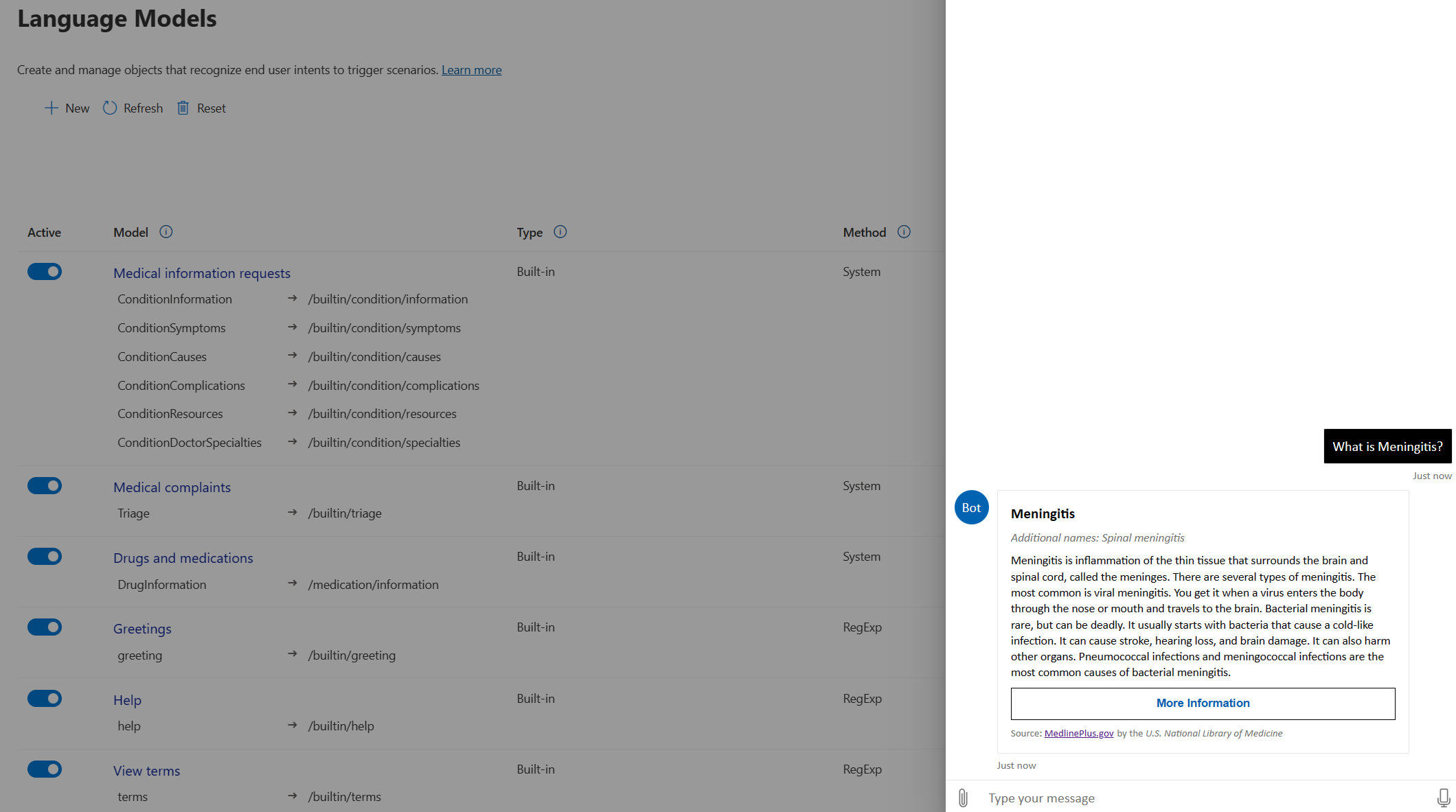

Language models are fundamental components for configuring your healthcare agent service experience. Language models interpret end user utterances and trigger the relevant scenario logic in response. For example, you can use a language model to trigger scheduling logic when an end user types “How do I schedule an appointment?”

Language models take unstructured text utterances from end users and return a structured response. The response includes the end user’s intent together with a confidence score that reflects the likelihood that the extracted intent is accurate.

Multiple models can be used in parallel. When more than one possible intent is identified, the confidence scores of the intents are compared, and the intent with the highest score invokes its mapped scenario. Each intent is unique and mapped to a single built-in or custom scenario.
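The selection rule described above can be sketched in a few lines of Python. This is only an illustration under assumptions: the `Recognition` type, the `INTENT_TO_SCENARIO` table, and the model, intent, and scenario names are hypothetical and are not part of the healthcare agent service API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Recognition:
    """One model's result for an utterance (hypothetical shape)."""
    model: str
    intent: str
    score: float  # confidence between 0 and 1


# Hypothetical mapping: each intent points to at most one scenario.
INTENT_TO_SCENARIO = {
    "schedule_appointment": "scheduling_scenario",
    "need_help": "help_scenario",
}


def pick_scenario(results: List[Recognition]) -> Optional[str]:
    """Return the scenario mapped to the highest-scoring intent, if any."""
    if not results:
        return None
    best = max(results, key=lambda r: r.score)
    # An intent may be left unmapped, in which case nothing is triggered.
    return INTENT_TO_SCENARIO.get(best.intent)


# Illustrative results from two models that ran in parallel.
results = [
    Recognition(model="regex_help", intent="need_help", score=0.36),
    Recognition(model="clu_scheduling", intent="schedule_appointment", score=0.91),
]
print(pick_scenario(results))  # scheduling_scenario
```

Keeping the intent-to-scenario mapping separate from the models mirrors the idea that a model only produces intents, while the configuration decides which scenario each intent invokes.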

How to guide: learn how to create your first language model.

Language model types

There are different types of language models. Some language models are built in to your bot and come out of the box. By default, the built-in models trigger the built-in scenarios; however, you can repurpose built-in models and map them to your own custom scenarios by changing their configuration. You can also build your own custom models for tailored language understanding.

Language model methods

Models can use different language recognition methods. Each method has its own advantages and disadvantages. When planning your implementation, use the combination of recognition methods best suited to the scenarios and capabilities you need. The next few sections explain each recognition method in more detail.

Regular Expression (RegEx) Models

RegEx models are great for optimizing performance when you need to understand simple and predictable commands from end users. RegEx models can extract a single intent from an utterance by matching the utterance to a RegEx pattern. For example, the RegEx pattern `/.*help.*/i` would match the utterance “I need help”. Learn about Regular Expressions

The confidence score for the matched intent is calculated based on the number of characters in the matched part and the full length of the utterance. The longer the match, the higher the confidence score from the RegEx model.
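The exact formula isn't given here, so the following is a minimal sketch that assumes the score is simply the matched length divided by the utterance length. The function name `regex_confidence` and that ratio are assumptions for illustration, not the service's documented calculation.

```python
import re


def regex_confidence(pattern: str, utterance: str) -> float:
    """Assumed score: matched characters / total characters (0.0 if no match)."""
    if not utterance:
        return 0.0
    match = re.search(pattern, utterance, flags=re.IGNORECASE)
    if match is None:
        return 0.0
    matched_length = match.end() - match.start()
    return matched_length / len(utterance)


# A narrow pattern covers only part of the utterance, so the score is lower.
print(regex_confidence(r"help", "I need help"))      # 4 of 11 characters matched
# A pattern that spans the whole utterance gets the maximum score.
print(regex_confidence(r".*help.*", "I need help"))  # 11 of 11 characters matched
```

Under this assumption, how greedy a pattern is directly affects how it competes with other models, which is worth keeping in mind when several models run in parallel.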

LUIS models

Important

LUIS will be retired on October 1, 2025, and starting April 1, 2023, you will not be able to create new LUIS resources. We recommend migrating your LUIS applications to conversational language understanding to benefit from continued product support and multilingual capabilities.

LUIS models are great for natural language understanding. LUIS models understand broader intentions and improve recognition, but they also require an HTTPS call to an external service. LUIS models return a confidence score based on mathematical models used to extract the intent.

When creating a LUIS model, you'll need an account with the LUIS.ai service and the connection information for your LUIS application. LUIS is deeply integrated into the healthcare agent service and supports multiple LUIS features such as:

- Bing speller: Provide your Bing Speller subscription key in the endpoint URL to pass all utterances through a spell check before processing by the LUIS application.

- Staging: Use the staging version of your LUIS application to prevent testing from affecting the learning of your production LUIS application.

- Verbose responses: Typically, the response from your LUIS application includes only the highest scoring intent for the model. To include all intents and their respective scores in the response you should use the verbose setting.
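To make the verbose setting more concrete, here is a hedged sketch of reading intents from a response. The sample payload is invented for illustration and is modeled on the shape of a LUIS v2 prediction response (`topScoringIntent`, plus an `intents` list when verbose is enabled). Treat those field names, the intent names, and the scores as assumptions and verify them against the response from your own LUIS endpoint.

```python
import json
from typing import List, Tuple

# Invented sample payload; the field names are assumed from the LUIS v2 response shape.
SAMPLE_VERBOSE_RESPONSE = """
{
  "query": "I need to see a doctor",
  "topScoringIntent": {"intent": "schedule_appointment", "score": 0.92},
  "intents": [
    {"intent": "need_help", "score": 0.05},
    {"intent": "schedule_appointment", "score": 0.92},
    {"intent": "None", "score": 0.03}
  ],
  "entities": []
}
"""


def ranked_intents(raw: str) -> List[Tuple[str, float]]:
    """Return (intent, score) pairs sorted from highest to lowest score."""
    payload = json.loads(raw)
    intents = payload.get("intents")
    if intents is None:
        # Without verbose, assume only the top-scoring intent is present.
        top = payload.get("topScoringIntent")
        intents = [top] if top else []
    pairs = [(item["intent"], item["score"]) for item in intents]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


for intent, score in ranked_intents(SAMPLE_VERBOSE_RESPONSE):
    print(intent, score)
```

Having every intent and score available is mainly useful when you want to inspect how close the runner-up intents were, for example while tuning a model.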

Nuance Mix NLU

The Nuance NLU (Natural Language Understanding) service turns text into meaning, extracting the underlying meaning of what your users say or write, in a form that an application can understand. NLU as a Service provides a semantic interpretation of the user’s input. To use Mix NLU, complete the following prerequisites:

- Create a Mix project

- Create, train and build a model in the project.

- Create and deploy an application configuration for the project.

- Generate a client ID and a client secret for your Mix project. Later, you'll use these credentials and the configuration context tag to define the Mix language model on the Language Models page.

Question Answering

Question answering provides cloud-based Natural Language Processing (NLP) that allows you to create a natural conversational layer over your data. It's used to find the most appropriate answer for any input from your custom knowledge base (KB) of information.

Question answering is commonly used to build conversational client applications, which include social media applications, chat bots, and speech-enabled desktop applications.

When creating a Question Answering model, you'll need a Cognitive Service for Language account. For more info on how to connect your Question Answering resource to the healthcare agent service, you can read more here

Conversational Language Understanding

Conversational language understanding is the next generation of Language Understanding (LUIS). It comes with state-of-the-art language models that understand the utterance's meaning and capture word variations, synonyms, and misspellings while being multilingual. It also automatically orchestrates bots powered by conversational language understanding, question answering, and classic LUIS.

When creating a Conversational Language Understanding model, you'll need a Cognitive Service for Language account and the connection information for your Conversational Language Understanding endpoint. For more info on how to connect your Conversational Language Understanding endpoint to the healthcare agent service, you can read more here

System models

System models use proprietary recognition methods. System models aren't open for editing; however, you can override the default intent mapping. For example, you can use the medical complaint recognizer to trigger your own symptom checking scenarios.

The built-in medical models provide language understanding that is tuned for medical concepts and clinical terminology. All medical language models use system recognition methods.

- Medical complaints: This model understands when an end user is making a medical complaint. The model extracts key information such as the medical concept, the age and gender, and additional information required to diagnose a complaint. This model triggers the built-in triage protocols.

- Medical Information: This model understands when a patient is asking for information about a medical concept. For example, the user can ask about symptoms or complications of a given condition. This model triggers a scenario that displays relevant information from our medical database.

- Drugs and medication: This model understands when an end user is asking for information about a type or brand of drug. The model invokes a scenario that presents information about the drug in question.

Intents

Intents are predefined keywords that are produced by your language model. An intent is a structured reference to the end user intention encoded in your language models.

Intents are mapped to scenarios and must be unique across all models to prevent conflicts. You won't be able to create your model if it includes a conflict with an existing intent.

Each intent can be mapped to a single scenario, and it's possible to map several intents to the same scenario or to leave an intent unmapped.
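The uniqueness and mapping rules above can be illustrated with a small check. This is a sketch only: the function `find_intent_conflicts` and the model, intent, and scenario names are hypothetical, and the service performs its own validation when you create a model.

```python
from typing import Dict, List, Optional


def find_intent_conflicts(models: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Return intents declared by more than one model, with the models that declare them."""
    owners: Dict[str, List[str]] = {}
    for model_name, intents in models.items():
        for intent in intents:
            owners.setdefault(intent, []).append(model_name)
    return {intent: names for intent, names in owners.items() if len(names) > 1}


# Hypothetical models and the intents each one produces.
models = {
    "regex_help": ["need_help"],
    "clu_scheduling": ["schedule_appointment", "cancel_appointment"],
    "luis_legacy": ["need_help"],  # conflicts with regex_help
}
print(find_intent_conflicts(models))  # {'need_help': ['regex_help', 'luis_legacy']}

# Several intents may share one scenario, and an intent may be left unmapped (None).
intent_to_scenario: Dict[str, Optional[str]] = {
    "schedule_appointment": "appointments_scenario",
    "cancel_appointment": "appointments_scenario",
    "need_help": None,
}
```

The mapping is many-to-one from intents to scenarios, which is why a plain dictionary keyed by intent is enough to represent it.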

Confidence score

The confidence score is a value between 0 and 1 that reflects the likelihood that a model has correctly matched an intent with an utterance. A score of 1 shows a high certainty that the identified intent is accurate.

Next steps

To get started with your language models, you can