SIMD/ARM-NEON support in Windows Phone Mango

This is an announcement-only post. Do subscribe to this blog's feed or follow https://twitter.com/abhinaba, as I'll be making more detailed posts on these topics as we get closer to handing these bits over to our developer customers.

ARM processors support SIMD (Single Instruction, Multiple Data) instructions through the ARM® NEON™ technology available in the ARMv7 ISA. SIMD allows some operations to be parallelized in hardware, which yields performance gains. Since the Windows Phone 7 chassis specification requires ARMv7-A, NEON is available by default on all WP7 devices. However, the CLR on Windows Phone 7 (NETCF) did not use this hardware functionality, so it was not available to managed application developers. We just announced at MIX11 that in the next Windows Phone release the NETCF runtime JIT will use the SIMD capabilities of the phones.

What it means for developers

Certain operations on some XNA types will be accelerated using the NEON/SIMD extensions available on the phone. Examples include operations on Vector2, Vector3, Vector4 and Matrix from the Microsoft.Xna.Framework namespace. NOTE: At this point the exact types, and the exact operations on them, are not finalized and are subject to change. Note also that user types will not get this acceleration: if you have rolled your own vector type and use, say, dot products on it, the CLR will not accelerate them. This is a targeted acceleration for some XNA types, not a vectorizing JIT compiler feature.
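To make the distinction concrete, here is a minimal sketch of the kind of user type the note is about. `MyVector3` is a hypothetical name, not part of XNA; the counterpart that falls under the targeted acceleration would be XNA's `Vector3` with its `Vector3.Dot` method, although, as noted, the exact list of accelerated operations is not final.

```csharp
// MyVector3 is a hypothetical, hand-rolled type used only for illustration.
// According to the post, the Mango JIT accelerates selected XNA types
// (Vector2, Vector3, Vector4, Matrix), not user-defined types like this one.
public struct MyVector3
{
    public float X;
    public float Y;
    public float Z;

    public MyVector3(float x, float y, float z)
    {
        X = x;
        Y = y;
        Z = z;
    }

    // Plain scalar arithmetic (three multiplies, two adds); because this is
    // a user type, the JIT will not apply the NEON acceleration to it.
    public static float Dot(MyVector3 a, MyVector3 b)
    {
        return a.X * b.X + a.Y * b.Y + a.Z * b.Z;
    }
}
```

If your math code looks like this, switching it over to the XNA types is what makes it eligible for the speedup.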

Apps that make heavy use of these XNA types (our research shows many games do) will see good performance gains. For example, we took a fluid simulation sample from a team (note that it was not written specifically for our demo) and saw large gains, because it heavily uses Matrix and Vector operations to simulate fluid particles and the forces between them. Frame rates went up from 18 fps to 29 fps on the very same device, an improvement of roughly 60%.

The gains you see will vary with how heavily your app uses these types and operations. Either way, this feature should be a good motivation to move to these XNA types.

How does SIMD work

SIMD, as the name suggests, performs the same operation on multiple data items in parallel.

Consider the following Vector2 addition:

```csharp
public static Vector2 Add(Vector2 value1, Vector2 value2)
{
    Vector2 vector;
    vector.X = value1.X + value2.X;
    vector.Y = value1.Y + value2.Y;
    return vector;
}
```

The two assignment lines perform the same addition on two sets of data and put the results in two other locations. Today this is performed sequentially: the JIT emits processor instructions to load the X values into registers, add them and store the result, and then does the same thing again for the Y values. However, this work is inherently parallelizable, and ARM NEON provides an easy way to load such value pairs with single instructions (vpop, vldr) and to add them in parallel with a single NEON VADD instruction.

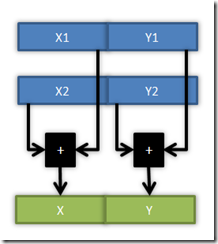

One way to visualize this: a single instruction loads both X1 and Y1, another loads both X2 and Y2, and a third adds the two pairs together in parallel.
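The three-step sequence can be modeled in plain C# as a conceptual sketch. `Float32x2` and `SimdModel` are hypothetical names, and the code only emulates, in ordinary scalar managed code, what each NEON instruction computes: a 64-bit NEON register holding two 32-bit float lanes, one load per pair, and one lane-wise add. It says nothing about how the NETCF JIT is actually implemented.

```csharp
// Conceptual model only: a 64-bit NEON register viewed as two 32-bit float
// lanes. These managed methods emulate what one NEON instruction computes;
// they run as ordinary scalar code and are not how the JIT works internally.
public struct Float32x2
{
    public float Lane0; // holds X
    public float Lane1; // holds Y

    // Models one load instruction that fills both lanes at once.
    public static Float32x2 Load(float x, float y)
    {
        Float32x2 r;
        r.Lane0 = x;
        r.Lane1 = y;
        return r;
    }

    // Models a single VADD.F32: both lanes are added by one instruction.
    public static Float32x2 Add(Float32x2 a, Float32x2 b)
    {
        Float32x2 r;
        r.Lane0 = a.Lane0 + b.Lane0;
        r.Lane1 = a.Lane1 + b.Lane1;
        return r;
    }
}

public static class SimdModel
{
    // The Vector2 addition from the post, expressed as three modeled
    // instructions: load (X1, Y1), load (X2, Y2), one lane-wise add.
    public static void AddVector2(float x1, float y1, float x2, float y2,
                                  out float x, out float y)
    {
        Float32x2 v1 = Float32x2.Load(x1, y1);
        Float32x2 v2 = Float32x2.Load(x2, y2);
        Float32x2 sum = Float32x2.Add(v1, v2);
        x = sum.Lane0;
        y = sum.Lane1;
    }
}
```

Calling `SimdModel.AddVector2(1f, 2f, 3f, 4f, out x, out y)` gives x = 4 and y = 6, the same result as the scalar `Vector2.Add` above; on real NEON hardware the difference lies only in how many instructions do the work.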

Comments

Anonymous

May 27, 2011

SIMD support is also eagerly awaited on the Xbox and Windows CLR. It would be great to have a general solution that works with any kind of math API.

Anonymous

June 23, 2011

It would be even better if we were allowed to use SIMD assembly directly! I would love to push performance to its limits, and SIMD is a fantastic way to accomplish that for specific operations. Especially on a phone, where we don't have full SM 2.0, this would be a great way to bypass that limitation by rolling our own additional content rasterizer/processor.

Anonymous

June 25, 2011

VladR, regarding "allowed to use the SIMD assembly directly": this is harder than it seems on the surface. When you say expose SIMD assembly directly, the natural question is: how? If you mean inline assembly (__asm) as in C++, the most important thing to remember is that .NET is a managed platform, and at almost every point the runtime needs to know which objects are in use and how (for safe GC). When assembly code directly modifies or accesses objects, that is not possible. Also, the whole system runs inside a security sandbox, so everything needs to be verifiable and secure, and again assembly would not allow that. So exposing such low-level facilities directly does not work. This doesn't mean that SIMD-style hardware functionality cannot be exposed to users. However, for that, .NET, which is a cross-platform, standardized framework, needs to come up with changes to its specification and have them standardized via ECMA as well. There is a reason why .NET has been so successful, and a cross-platform, hardware-agnostic standard has been key to that. Arbitrary and hacky ways of exposing hardware features to code would fragment the sources and drive it into the same pitfalls other platforms face. All that said, exposing hardware features like SIMD to users is a requirement for things like games and physics simulations. This is even more important on sandboxed systems like WP7, because developers cannot push this work out to native modules and use them over interop. How these features can be exposed in a secure, extensible, standardized way is actively being debated, and I hope that at some point in the future this will be added.

Anonymous

June 26, 2011

This is great news. Will it also do more for Silverlight, or just XNA? Or is this the same thing that already accelerates transforms in Silverlight?

Anonymous

June 30, 2011

Hi, I've just gotten Mango and am starting to look at the Beta Developer Tools. What is the best syntax to take advantage of SIMD with XNA: `x += y;`, `x = x + y;`, or `Vector.Add` with refs? Will SIMD be used for all these different ways of writing the same operation?

Anonymous

June 30, 2011

During my time developing for WP7 I've also noticed that operations on double are just as fast as, or even faster than, operations on float, so I have been using the double type for everything. So I'm kind of confused about why the Vector types use floats for all their elements. Are floats more optimized in this release, so they can be faster than doubles?

Anonymous

July 06, 2011

@Grant Kot: Doubles are faster for computation, but the GPU still works with floats. This is why the Vector and Matrix classes use float.

Anonymous

August 02, 2011

It would also be pretty interesting if some of the functionality of Microsoft's C++ AMP technology could be brought into a future version of the CLR. For example, extensions to the TPL and perhaps a new C# restrict keyword, similar to the one in C++ AMP, would allow the same kind of multi-core, GPGPU-optimized code that is currently only possible in native code. I'm not sure whether first-generation WP7 hardware is capable of DirectCompute, though, since it doesn't support shaders. Of course, if future hardware does support it, an AMP for .NET CF could fall back to the CPU when the app runs on legacy WP7 phones.

Anonymous

August 02, 2011

[Sorry for the double post if my other attempt goes through; my browser was being flaky at the time. Plus, I took a second look at it and revised my original post.] Good work on SIMD! This is something that has been needed for a long time! I second @lx's comment about needing support for Windows and Xbox (and Silverlight in the browser on Windows and Mac, for that matter). I remember doing some performance analysis on the Farseer Physics Engine when you guys were first seeking feedback from the community on how to make the .NET CF runtime better, so I'm glad that this was the result so soon!

Regarding VladR's comment about the need for SIMD intrinsics of some kind for custom computations, I also think that this is important. While SIMD optimization for the built-in XNA types is wonderful, not every algorithm that has potential for SIMD optimization can be expressed using those types, and even when it can, a custom set of SIMD instructions can often do the operation more efficiently. I think SIMD intrinsics of some kind over custom types and operations (or even just the built-in types int/long/float/double) are required for general-case performance parity with native SIMD code. That said, I don't mean we should allow the programmer to specify actual ARM NEON instructions in the code. However, different SIMD processors often have very similar sets of instructions, or near enough that you could have static functions for each instruction (with overloads for the supported argument types) and emulate the instructions that aren't in the hardware.

So, I do have a couple of suggestions for intrinsics. The first would be the easiest to implement, since it would work on arrays of plain data and could be implemented using P/Invoke into native code without help from the JIT. With large enough arrays, the native/managed boundary overhead becomes minimal if the loop itself is in native code.
First, you could support all of the common SIMD operations over arrays of plain data. For example:

```csharp
float[] arg1 = ...;
float[] arg2 = ...;
float[] result = ...;
// Add each element of arg1 to the corresponding element of arg2
// and place the sum in the corresponding element of result.
// The number of elements processed is the length of the smallest of
// the three arrays. Overloads should be available to process sub-arrays too.
Simd.Add(arg1, arg2, result);
```

Although this library would be awkward for some use cases (like physics engines, which by and large already use the XNA types that are now SIMD-optimized, so this is less of an issue), it would open up some compute scenarios that the XNA types don't cover yet (like processing ints, longs, or doubles). The drawback is that it often requires algorithms to be redesigned so that the data can be lined up in arrays. Another drawback is that small-scale optimizations, like the ones you added to the XNA types, are not possible: the P/Invoke overhead is too great to make a difference with small numbers of elements. So, the solution is to add support for these new library functions in the JIT, just as you did with the XNA types. Instead of P/Invoking into native code, the JIT would replace the call to Simd.Add with the appropriate SIMD instructions for the target CPU architecture (or emulate them on non-SIMD hardware). This would allow them to be used on a smaller scale with no performance penalty. For convenience and extra performance in these small-scale scenarios, you could also add some overloads that take single arguments instead of arrays:

```csharp
/// Custom long-vector type
struct Vector2L
{
    public long X;
    public long Y;

    // Would be nice if this method itself were inlined into the calling code during JIT.
    public void PlusEquals(Vector2L rhs)
    {
        // Sort of a Simd.+= operation for longs: a short-cut for a longer
        // Simd.Add(T x1, T x2, T y1, T y2, out T o1, out T o2) function,
        // for T in {int, long, float, double} (not generic, just overloads).
        Simd.Add(ref X, ref Y, rhs.X, rhs.Y);
    }
}
```

Side note: the performance of the PlusEquals method would depend in part on the VM/JIT's ability to inline it into the calling code. If this couldn't be done, highly optimized code would have to inline PlusEquals by hand, which is bad:

```csharp
Vector2L lhs = ...;
Vector2L rhs = ...;

// BAD: shouldn't have to do this to get the fast path on custom types (this isn't Java!)
Simd.Add(ref lhs.X, ref lhs.Y, rhs.X, rhs.Y);

// GOOD: this should have the exact performance of the above code
lhs.PlusEquals(rhs);
```
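The `Simd` class in this proposal is hypothetical; nothing like it ships in NETCF. As a sketch of the semantics the comment describes for the array overload, a portable managed fallback could look like the following, processing as many elements as the shortest of the three arrays. Per the comment's suggestion, a SIMD-aware JIT would be free to replace the loop with vector instructions.

```csharp
using System;

// Hypothetical API from the proposal above; not part of NETCF or XNA.
// This is only the portable scalar fallback ("emulate the instructions that
// aren't in the hardware"); it contains no SIMD instructions itself.
public static class Simd
{
    // result[i] = arg1[i] + arg2[i] for every index present in all three
    // arrays, i.e. up to the length of the shortest array.
    public static void Add(float[] arg1, float[] arg2, float[] result)
    {
        if (arg1 == null) throw new ArgumentNullException("arg1");
        if (arg2 == null) throw new ArgumentNullException("arg2");
        if (result == null) throw new ArgumentNullException("result");

        int count = Math.Min(result.Length, Math.Min(arg1.Length, arg2.Length));
        for (int i = 0; i < count; i++)
        {
            result[i] = arg1[i] + arg2[i];
        }
    }
}
```

Elements of `result` beyond the shortest input are left untouched, which matches the "length of the smallest of the three arrays" rule stated in the comment.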