Classification – under the hood (part 1)

As promised, I will add more technical information about File Classification Infrastructure (FCI) in a series of technical posts devoted to FCI architecture and internals.

We started the classification project with an ambitious vision: to provide a simple, open and comprehensive way to organize data at the enterprise level, primarily for files stored on shares. The classification process has to be simple so that any administrator under the pressure of managing millions, maybe billions, of files can understand it intuitively. It needs to be open (i.e. extensible), since we have to deal with a huge matrix of file formats, classification methods and types of management policies built on top of classification. Finally, it needs to be comprehensive: after installing the latest version of Windows, administrators should get enough value “out of the box” to start organizing their information and applying policies right away.

Overview

Here is a quick introduction to classification, in case you are new to it. (By the way, a detailed overview of the problem space is presented here, and a functional overview is presented here. There is also a Microsoft TechNet step-by-step guide for enabling classification and file management tasks here.)

In essence, classification allows you to assign metadata to every file on a file server. Specifically, classification deals with actionable file metadata: the kind of metadata that can drive file management policies (such as encryption, protection, retention, expiration, etc.). This metadata is simply a set of classification properties (in the form Name=value) attached to files in one form or another, through either explicit or automatic classification. Note that you (the user) are responsible for designing the classification schema for your solution. Windows does not provide (or enforce) a predefined set of classification properties.

Classification information can be “produced” in several different ways:

- Through automatic classification, where you define classification rules that indicate which property value will be assigned to each affected file. A classification rule has a scope (a set of affected namespaces) and can also have a “filtering condition” (think of it as a WHERE clause) on the files being classified. Finally, a rule specifies the property to be assigned (so each rule is associated with exactly one property).

- Classification properties may already reside in the file, either supplied by other property storage systems or left there by previous runs of automatic classification. For example, an Office file downloaded from a SharePoint document library already carries embedded metadata that can be viewed as classification properties.

- You can also explicitly set classification properties on a file through an API, from scripts or line-of-business (LOB) applications (using IFsrmClassificationManager::SetFileProperty). Since this mechanism is not rule-based, it is subject to certain applicability limitations, depending on the scenario. (BTW, you can think of calling the “Set” API on a file as equivalent to running a temporary classification rule that is created once, run once against the file, and then deleted.)
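To make the producing side concrete, here is a minimal Python sketch of a rule (scope, optional WHERE-like condition, exactly one property) and of the “Set API as a temporary rule” analogy. The `Rule` class, `set_property`, and the use of forward-slash path prefixes as scopes are hypothetical illustrations, not FCI types or the real IFsrmClassificationManager interface.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# Illustrative property bag: Name=value pairs, including the file path.
PropertyBag = Dict[str, str]


@dataclass
class Rule:
    """Hypothetical model of a rule: a scope, an optional WHERE-like filter, one property."""
    scope: List[str]          # folder prefixes (namespaces) the rule covers
    property_name: str        # each rule assigns exactly one property
    value: str
    condition: Optional[Callable[[PropertyBag], bool]] = None

    def applies_to(self, bag: PropertyBag) -> bool:
        in_scope = any(bag["FilePath"].startswith(prefix) for prefix in self.scope)
        return in_scope and (self.condition is None or self.condition(bag))

    def classify(self, bag: PropertyBag) -> None:
        if self.applies_to(bag):
            bag[self.property_name] = self.value


def set_property(bag: PropertyBag, name: str, value: str) -> None:
    """Model of an explicit 'Set': a temporary rule scoped to this one file."""
    temporary = Rule(scope=[bag["FilePath"]], property_name=name, value=value)
    temporary.classify(bag)   # run once; the rule object is then discarded
```

For example, `Rule(scope=["D:/Shares/HR/"], property_name="Confidentiality", value="High")` would tag every file under that folder, while `set_property` touches only the single file whose bag it receives.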

Classification can be consumed in several ways:

- Classification properties can be used directly (from scripts or applications) through “Get” APIs (like IFsrmClassificationManager::EnumFileProperties or IFsrmClassificationManager::GetFileProperty). Such APIs will provide the correct set of properties depending on the set of rules defined ahead of time and/or depending on existing metadata that is already attached to the file.

- Classification properties can be used indirectly, as a filtering criterion, to selectively execute file management tasks, which perform a certain action against a desired set of files. For example, you can use a file management task to encrypt any file that has been classified as “Confidentiality=High”.

- Classification properties are also used when generating “Files By Property” reports, such as reporting all files on a server grouped by their Confidentiality information.
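On the consuming side, the essential idea of a file management task is “select by classification, then act”. The sketch below assumes property bags are already available as dictionaries; `run_file_management_task` and the `action` callback are hypothetical and stand in for whatever the task actually does (encryption, in the example above).

```python
from typing import Callable, Dict, Iterable

PropertyBag = Dict[str, str]


def run_file_management_task(bags: Iterable[PropertyBag], name: str, value: str,
                             action: Callable[[str], None]) -> int:
    """Apply `action` to every file whose classification matches name=value.

    Returns the number of files the action was applied to.
    """
    matched = 0
    for bag in bags:
        if bag.get(name) == value:      # the classification property acts as the filter
            action(bag["FilePath"])
            matched += 1
    return matched
```

Files that were never classified simply lack the property, so `bag.get` returns `None` and they are skipped rather than raising an error.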

Of course, producing and consuming classification happen in parallel, which makes classification look a little complex at first sight.

The classification pipeline

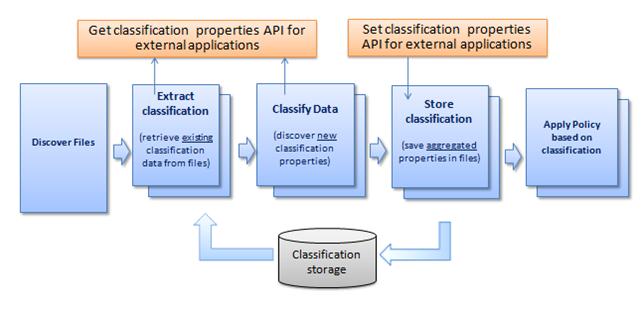

The whole process is simple to understand if you use the classification pipeline as a simple underlying concept. The pipeline defines how the classification process is executed in each combination of the scenarios above that produce/consume classification:

As you can see, classification works in five stages on a file-by-file basis. Let’s drill down into each of them.
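As a rough mental model before the drill-down, the five stages can be chained as lazy Python generators. This is a simplified sketch, not FCI’s implementation: a dictionary stands in for all storage modules, classifiers are plain functions, and aggregation is omitted (a later classifier simply overwrites an earlier value). All function names are hypothetical.

```python
from typing import Callable, Dict, Iterable, Iterator, List

Bag = Dict[str, str]


def discover(paths: Iterable[str]) -> Iterator[Bag]:
    # 1) Discover files: emit one property bag per file.
    for path in paths:
        yield {"FilePath": path}


def extract(bags: Iterable[Bag], stored: Dict[str, Bag]) -> Iterator[Bag]:
    # 2) Extract classification: merge in properties stored earlier for the file.
    for bag in bags:
        bag.update(stored.get(bag["FilePath"], {}))
        yield bag


def classify(bags: Iterable[Bag], classifiers: List[Callable[[Bag], None]]) -> Iterator[Bag]:
    # 3) Classify files: each classifier may add or overwrite properties
    #    (real aggregation of conflicting values is left out here).
    for bag in bags:
        for classifier in classifiers:
            classifier(bag)
        yield bag


def store(bags: Iterable[Bag], stored: Dict[str, Bag]) -> Iterator[Bag]:
    # 4) Store classification properties so a later run can extract them.
    for bag in bags:
        stored[bag["FilePath"]] = dict(bag)
        yield bag


def run_pipeline(paths: Iterable[str], stored: Dict[str, Bag],
                 classifiers: List[Callable[[Bag], None]],
                 policy: Callable[[Bag], None]) -> None:
    # 5) Apply policy. Generators are lazy, so each file travels through all
    #    five stages before the next file is discovered.
    for bag in store(classify(extract(discover(paths), stored), classifiers), stored):
        policy(bag)
```

The lazy chaining mirrors the file-by-file behavior: only one property bag needs to be in flight at a time, no matter how many files the scan discovers.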

1) Discover Files

In this phase we “assemble” a list of files that will be run through the pipeline.

- In the case of automatic classification, we will create a snapshot of the volume and scan the snapshot for any files that might have been changed or added since the last classification (FCI offers a set of heuristics for incremental classification, so we won’t need to rescan the whole world every time we are running an automatic classification job).

- In the case of the EnumFileProperties()/GetFileProperty()/SetFileProperty() APIs, the pipeline processes only the file in question.
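FCI’s actual incremental-classification heuristics are not described in this post. As a stand-in, the sketch below assumes the simplest possible heuristic: only rescan files whose last-write time is newer than the previous run. It walks a live directory rather than a volume snapshot, and `discover_changed` is a hypothetical helper.

```python
import os
from typing import Iterator


def discover_changed(root: str, last_run: float) -> Iterator[str]:
    """Yield files under `root` modified after `last_run` (seconds since the epoch)."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.getmtime(path) > last_run:   # assumed heuristic: last-write time only
                yield path
```

A timestamp-only check can miss files that are copied in with their original last-write time preserved, which is one reason a production implementation needs more than this single test.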

After this step, we generate a stream of “property bag” objects (one per file) that is passed through the pipeline. You can think of a property bag as an in-memory object holding the set of “Name=value” properties for a given file. NTFS metadata (file name, attributes, timestamps, etc.) is also extracted at this stage and inserted into the property bag.
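A minimal sketch of seeding a property bag with file-system metadata, using Python’s `os.stat`. The property names (`FilePath`, `FileName`, `Size`, `LastModified`) are hypothetical, and NTFS attributes are omitted because `st_file_attributes` is only available on Windows.

```python
import os
from datetime import datetime, timezone
from typing import Dict


def make_property_bag(path: str) -> Dict[str, object]:
    """Create an in-memory property bag seeded with file-system metadata."""
    st = os.stat(path)
    return {
        "FilePath": os.path.abspath(path),
        "FileName": os.path.basename(path),
        "Size": st.st_size,                                   # bytes
        "LastModified": datetime.fromtimestamp(st.st_mtime, tz=timezone.utc),
    }
```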

2) Extract classification (retrieve existing classification from files)

In this phase, we try to extract existing properties that were previously stored in the files, for example by FCI-unaware applications such as Microsoft Word or SharePoint. Some of these mechanisms parse the file content (as in the case of Office files), while others use different techniques (such as retrieving classification information that has been “cached” by a previous classification run).

One important thing to note is that existing classification data might be extracted from different sources, and therefore we have a precise process of reconciliation of potentially conflicting metadata. This process is called aggregation – and it is one of the central concepts in the pipeline architecture.
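The exact aggregation rules are not spelled out here. The following sketch assumes one plausible policy per property type: for a Yes/No property “Yes” wins, for an ordered property the highest value wins, and for a multiple-choice property the selections are merged. The function names and property types are illustrative assumptions.

```python
from typing import Iterable, List, Set


def aggregate_yes_no(values: Iterable[bool]) -> bool:
    # Any source saying "Yes" wins.
    return any(values)


def aggregate_ordered(values: Iterable[str], order: List[str]) -> str:
    # Highest value according to the declared order wins (e.g. Low < Medium < High).
    return max(values, key=order.index)


def aggregate_multi_choice(values: Iterable[Set[str]]) -> Set[str]:
    # Union of all selections reported by the different sources.
    merged: Set[str] = set()
    for selection in values:
        merged |= selection
    return merged
```

Resolving toward the most restrictive value means a conflict can only make a file more protected, never less, which is usually the safer failure mode for policies such as encryption or retention.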

Extracting and storing property values is done by dedicated software components called storage modules. Each storage module implements a standard COM interface that essentially receives a “stream” of property bags (from the discovery/scanning process) and enriches them along the way with extracted property values.
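The real storage-module contract is a COM interface; the Python abstract base class below is only a hypothetical stand-in showing the stream-in, stream-out shape. `StorageModule`, `supports`, `read`, `load`, and the in-memory `DictStorage` are invented for illustration.

```python
from abc import ABC, abstractmethod
from typing import Dict, Iterable, Iterator, Tuple

Bag = Dict[str, str]


class StorageModule(ABC):
    """Hypothetical storage-module shape: enrich a stream of property bags."""

    @abstractmethod
    def supports(self, path: str) -> bool:
        """Whether this module knows how to read/write this kind of file."""

    @abstractmethod
    def read(self, path: str) -> Bag:
        """Return the properties this module has stored for the file."""

    def load(self, bags: Iterable[Bag]) -> Iterator[Bag]:
        for bag in bags:
            if self.supports(bag["FilePath"]):
                for name, value in self.read(bag["FilePath"]).items():
                    # Keep values already in the bag; conflicts would go to aggregation.
                    bag.setdefault(name, value)
            yield bag


class DictStorage(StorageModule):
    """Toy module backed by a dict and limited to certain file extensions."""

    def __init__(self, data: Dict[str, Bag], extensions: Tuple[str, ...]) -> None:
        self.data = data
        self.extensions = extensions

    def supports(self, path: str) -> bool:
        return path.lower().endswith(self.extensions)

    def read(self, path: str) -> Bag:
        return self.data.get(path, {})
```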

In Windows Server 2008 R2 we ship several storage modules by default:

- An Office 2007 storage module that persists properties within Office 2007 file formats (DOCX, XLSX, PPTX, etc.) in a format compatible with SharePoint.

- Similarly, we ship an Office XP through 2003 storage module for files in the DOC, XLS, PPT, etc. formats.

- The System Storage module, which persists properties in an alternate data stream attached to the file. This storage module has a dual role. First, it serves as a generic storage module for files that do not support embedded metadata (such as plain TXT files, or files with an unknown extension). Second, it acts as a cache for previous classification runs on the same file. This second role is particularly important, as it guarantees a way to cache properties for files that have not changed in the meantime (assuming the rules act only on file metadata or content). We will address the caching concept in detail later.
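The caching role of the System Storage module can be sketched as follows, under two stated assumptions: an in-memory dictionary stands in for the alternate data stream, and “the file did not change” is approximated by comparing last-write times. `PropertyCache` is hypothetical.

```python
from typing import Dict, Optional, Tuple

Bag = Dict[str, str]


class PropertyCache:
    """Sketch of the caching role; a dict stands in for the alternate data stream."""

    def __init__(self) -> None:
        self._entries: Dict[str, Tuple[float, Bag]] = {}

    def save(self, path: str, mtime: float, props: Bag) -> None:
        # Remember the properties together with the file version they describe.
        self._entries[path] = (mtime, dict(props))

    def lookup(self, path: str, mtime: float) -> Optional[Bag]:
        entry = self._entries.get(path)
        if entry is None or entry[0] != mtime:
            return None   # never classified, or changed since: must reclassify
        return dict(entry[1])
```

As the paragraph above notes, reusing a cached result is only valid if the rules depend solely on the file’s own metadata or content; a rule that consults an external source could change its answer even when the file has not changed.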

One more thing to note – you can also define your own storage modules that can store metadata either in the file content, or in an external database. It is recommended, though, that any storage module that parses file content should live in its own process with tightened privileges for security reasons (as parsing file content could be subject to buffer overruns).

3) Classify files

At this stage, we identify which rules affect the current file being processed, and then run each rule to produce new classification property values. Again, we use aggregation to reconcile potentially conflicting values coming from different rules (and potentially from the previous stage as well).

Rules are implemented with the help of a different type of pipeline module called a classifier. A classifier implements an interface similar to that of a storage module: it accepts a stream of incoming property bags and outputs a stream of “enhanced” property bags containing the extra properties discovered during classification.
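A minimal sketch of a classification stage as a stream transformer, under the assumption that each classifier is a plain function proposing Name=value pairs. Proposals from all classifiers, plus any value already extracted in stage 2, are reconciled by a pluggable `aggregate` function. `classify_stream` and the `Classifier` alias are hypothetical names.

```python
from typing import Callable, Dict, Iterable, Iterator, List

Bag = Dict[str, str]
Classifier = Callable[[Bag], Dict[str, str]]   # returns proposed Name=value pairs


def classify_stream(bags: Iterable[Bag], classifiers: List[Classifier],
                    aggregate: Callable[[List[str]], str]) -> Iterator[Bag]:
    for bag in bags:
        # Collect every proposal first, so all classifiers see the same input bag.
        proposals: Dict[str, List[str]] = {}
        for classifier in classifiers:
            for name, value in classifier(bag).items():
                proposals.setdefault(name, []).append(value)
        for name, values in proposals.items():
            if name in bag:
                values.append(bag[name])   # value extracted in the previous stage
            bag[name] = aggregate(values)
        yield bag
```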

A classifier can look at the file content, the file system metadata, external storage, or all of them to determine the new property values to be assigned. There are many “flavors” of classifiers which we will detail later.

A pipeline could contain one or several different classifiers. There are two classifiers shipped by default with Windows:

- The Folder classifier – which simply assigns properties to files depending on their location on the disk, and

- The Content classifier – which assigns properties to certain files depending on whether certain strings or regular expressions are successfully matched against the file content.
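Minimal sketches of the two built-in classifier ideas follow. The function signatures are hypothetical; the folder check assumes case-insensitive, Windows-style paths, and the content classifier takes text that has already been extracted (for example through an IFilter for Office files, which is not shown).

```python
import re
from typing import Dict


def folder_classifier(path: str, folder: str, name: str, value: str) -> Dict[str, str]:
    # Normalize separators and case, and make the folder end with "/" so that
    # "D:/Shares/HR" does not also match "D:/Shares/HRArchive".
    path = path.replace("\\", "/").lower()
    folder = folder.replace("\\", "/").lower().rstrip("/") + "/"
    return {name: value} if path.startswith(folder) else {}


def content_classifier(text: str, pattern: str, name: str, value: str) -> Dict[str, str]:
    # Assign the property when the regular expression matches anywhere in the text.
    return {name: value} if re.search(pattern, text) else {}
```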

The content classifier can also operate on non-text files (such as Office documents) through the IFilter technology, which essentially offers a way to “convert” a file such as an Office document into simplified plain text. One more exciting thing to note: Windows Server 2008 R2 ships with an IFilter-based OCR engine, so the content classifier also works against text contained in scanned TIFF images! (If you have seen the TechEd keynote demo, you know what I mean :-)

4) Store classification properties

This is the natural counterpart of step 2 and is implemented, not surprisingly, by the same storage modules. The basic philosophy in this stage is to store properties in as many locations as possible, so that we can reconstruct them later.

Of course, we store the aggregated property values as mentioned before.
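The “store in as many locations as possible” philosophy can be sketched as writing the aggregated properties through every storage module that supports the file. `SimpleStore` and `store_everywhere` are hypothetical; here an empty extension list models a catch-all module in the spirit of the System Storage module.

```python
from typing import Dict, List, Tuple

Bag = Dict[str, str]


class SimpleStore:
    """Hypothetical in-memory storage module limited to certain extensions."""

    def __init__(self, name: str, extensions: Tuple[str, ...]) -> None:
        self.name = name
        self.extensions = extensions   # empty tuple means "accepts any file"
        self.saved: Dict[str, Bag] = {}

    def supports(self, path: str) -> bool:
        return not self.extensions or path.lower().endswith(self.extensions)

    def save(self, path: str, props: Bag) -> None:
        self.saved[path] = dict(props)


def store_everywhere(path: str, props: Bag, modules: List[SimpleStore]) -> List[str]:
    """Write the aggregated properties to every module that supports the file."""
    written = []
    for module in modules:
        if module.supports(path):
            module.save(path, props)
            written.append(module.name)
    return written
```

Redundant copies mean that if one location is lost (for example, an application rewrites the document and drops its embedded metadata), the properties can still be recovered from another.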

5) Apply policy based on classification

This is the most interesting of the pipeline steps, and the final stage in the classification pipeline. After all, the whole point of classification is to run some policies against the classification data. Classification in itself is not interesting – what’s truly interesting is the fact that you can run declarative policies on top of classification data.

Policies come in several flavors:

- Expiration File Management Tasks – which have the role of selectively moving files, depending on their age and/or classification information

- Custom File Management Tasks – which can execute a custom script against a certain category of files. Examples include migrating certain documents to SharePoint, custom expiration, custom retention policies, encrypting/protecting sensitive data, HSM policies, deduplication policies, etc. The sky is the limit for what can be implemented in a custom file management task.

- Automatic Classification – of course, running an automatic classification job can be viewed as a task of (1) saving classification properties for newly classified files and (2) generating a report summarizing the job results.

- The “Files By Property” report – this is a simple report containing a basic statistical distribution of files classified against a certain property.
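The statistical core of a “Files By Property” report is a simple group-and-count. The sketch below assumes classified files are available as property-bag dictionaries; `files_by_property` and the `"(not set)"` label are hypothetical, and the real report includes more detail than a count per value.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

Bag = Dict[str, str]


def files_by_property(bags: Iterable[Bag], name: str,
                      unset_label: str = "(not set)") -> List[Tuple[str, int]]:
    """Count files per value of one property, most common value first."""
    counts = Counter(bag.get(name, unset_label) for bag in bags)
    return counts.most_common()   # ties keep the order in which values were first seen
```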

That’s it for now. In the next posts we will drill down further into each of these phases.

(update – small typos)
