You may get a .dstore-related file system error when processing the TFS cube

Recently we have seen a number of cases where TFS cube processing failed with a file system error. The error always references the decode store (.dstore) of the primary key attribute (FileSK) of the "File" dimension.

The error message typically looks similar to this:

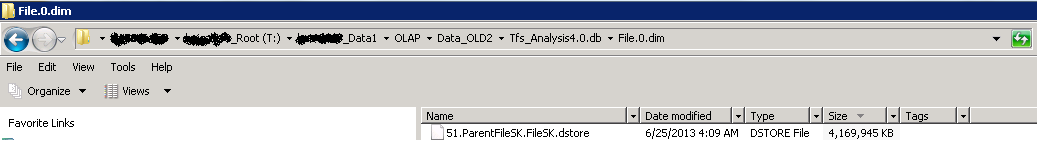

Exception Message: File system error: The following file is corrupted: Physical file: \\?\T:\Data1\OLAP\Data\Tfs_Analysis4.0.db\File.0.dim\52.ParentFileSK.FileSK.dstore. Logical file .

If you look at the referenced .dstore file (from the last successful processing run) in the data folder of the SSAS instance, you will likely find that its size is approaching 4 GB.
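
If you would rather not browse the data folder by hand, the check can be scripted. The following is a minimal Python sketch, not an official tool: the function name `find_large_dstores` and the 90% threshold are assumptions made for illustration, and the script only reads file sizes.

```python
import os

FOUR_GIB = 2**32  # 4 GiB expressed in bytes


def find_large_dstores(data_dir, threshold=0.9 * FOUR_GIB):
    """Return (path, size_in_bytes) for each .dstore file at or above
    the threshold, largest first.

    The 90% default threshold is an arbitrary choice for this sketch.
    """
    hits = []
    for root, _dirs, files in os.walk(data_dir):
        for name in files:
            if not name.lower().endswith(".dstore"):
                continue
            path = os.path.join(root, name)
            try:
                size = os.path.getsize(path)
            except OSError:
                # File disappeared or is not accessible; skip it.
                continue
            if size >= threshold:
                hits.append((path, size))
    return sorted(hits, key=lambda item: item[1], reverse=True)
```

On the SSAS server you could call it as `find_large_dstores(r"T:\Data1\OLAP\Data")`, using the data folder from the error message above as an example path. Substitute the data folder of your own instance.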

The File dimension in the TFS database is set up as a parent/child dimension, and it contains the file names from all projects managed by TFS.

In all problem cases I have seen so far, the decode store reaches 4 GB when the File dimension has about 48 million members. If the File dimension source table "DimFile" in your TFS data warehouse has more than 48 million records, you can hit the error.

You can quickly check the row count in the "DimFile" table of the TFS data warehouse by executing the following T-SQL query in SQL Server Management Studio (SSMS):

SELECT COUNT(*) As 'RowCount' FROM [TFS_Warehouse4].[dbo].[DimFile]

The observation that we get a processing failure whenever we approach a file size of 4 GB seems to indicate a file size limit, similar to the one we observe for SSAS string stores. However, that's not the case!

In fact, no SSAS file store has a file size limit. The only limit is on the number of records: every file store can hold up to 2^32 (about 4 billion) records. For string stores, each character in a string is treated as a separate record, which is why a string store reaches the record limit at a file size of 4 GB.

Decode stores (.dstore) make it possible to quickly identify all ancestors of a given attribute member. A decode store record contains the DataID values of all ancestors of a given member. A record is therefore much larger than one byte, and we are nowhere near the 2^32 record limit when the .dstore file size reaches 4 GB.
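
A quick back-of-the-envelope check makes this concrete. The sketch below uses only the figures quoted in this post (a 4 GB file at roughly 48 million members) and assumes one decode store record per member. The resulting average record size is an estimate, not a documented format detail.

```python
RECORD_LIMIT = 2**32         # maximum number of records per SSAS file store
FILE_SIZE_BYTES = 4 * 2**30  # .dstore size at which the failures were observed
MEMBERS = 48_000_000         # approximate File dimension member count at failure

# Assumption: the decode store holds one record per dimension member.
avg_record_bytes = FILE_SIZE_BYTES / MEMBERS
limit_used = MEMBERS / RECORD_LIMIT

print(f"average record size: {avg_record_bytes:.1f} bytes")
print(f"share of record limit used: {limit_used:.2%}")
```

Under these assumptions an average record is close to 90 bytes, and 48 million records use only about 1% of the 2^32 record limit.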

Further research showed that the problem is caused by a code defect that happens to be triggered when the file size reaches 4 GB. It was previously observed for partition data files and is described in KB article 2652738:

FIX: "File system error" error when you process a large dimension https://support.microsoft.com/kb/2652738/EN-US

The fix shipped in cumulative updates for SQL Server 2008 R2 SP1 and SQL Server 2012.

Our recommendation is therefore to install the latest service pack: SQL Server 2008 R2 SP2 or SQL Server 2012 SP1. Both service packs include the fix.

Unfortunately, we do not have a fix for SQL Server 2008. If you are using SQL Server 2008 and upgrading is not an option, the only way to get TFS database processing working again is to reduce the number of records in the DimFile table to a value well below 48 million.