Federation Repartitioning Operations: Database SPLIT in Action

Repartitioning operations are a large part of the value of federations. The ability to repartition databases enables elasticity in the database tier. With federations' online repartitioning operations, database tiers can be made even stretchier. No scheduled downtime required! Apps can expand and contract to engage more or fewer nodes at any time for the database workload, just like the middle tier.

One of the repartitioning operations we ship in federation v1 is the SPLIT command. SPLIT is initiated through an ALTER FEDERATION command and specifies the federation distribution key value to use for the operation.

ALTER FEDERATION Orders_FS SPLIT AT(tenant_id=1000)

SPLIT is executed in two phases. The first phase is executed synchronously and focuses on setting the operation in motion. The second phase happens asynchronously and is typically where the bulk of the time is spent.

- Phase I:

In the initial phase, SQL Azure sets up the new destination databases that will receive the filtered copies of data. The destination databases are marked with a SPLITTING state in sys.databases.

The first phase also initiates the filtered copy operations and sets up the metadata that will report on the progress of the operation in the sys.dm_federation_operation* views. The new destination databases are not yet members of the federation, so they do not show up in the sys.federation* system views yet.

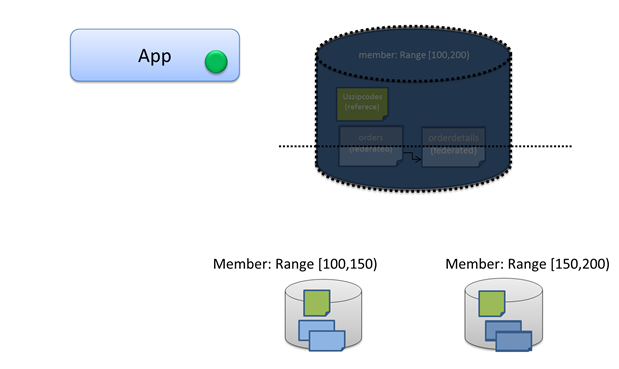

At the end of phase I, control returns back from the ALTER FEDERATION statement much like the database copy statement. The app continues to stay online, thus the green dot in the app box. App can continue to execute DML or DDL. Here is what the setup looks like;

- Phase II:

In this phase, the longer transaction of moving schema and data to the destination databases is executed. The schema of the source database being split and all reference tables are cloned to the destination databases. Schema includes all artifacts, from user accounts and permissions to triggers, functions, stored procedures and more. Data in the federated tables goes through a filtered copy operation at the SPLIT point specified by the command. The copy streams also build the secondary copies required for all SQL Azure databases as part of the operation. The source database continues to receive changes in the meantime. The changes may be DML statements, schema changes or changes to the properties of the source database.
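To make the filtered copy concrete, here is a minimal Python sketch of how rows of a federated table could be routed at the split point. It is an illustration only, not how SQL Azure implements the copy. The `filtered_copy` helper and the row layout are hypothetical, and the sketch assumes that a row whose key equals the split value lands in the upper member (low-inclusive, high-exclusive ranges).

```python
from typing import Dict, Iterable, List, Tuple

Row = Dict[str, int]


def filtered_copy(
    rows: Iterable[Row], key: str, split_at: int
) -> Tuple[List[Row], List[Row]]:
    """Route each row of a federated table to one of two destination members.

    Hypothetical helper for illustration; not part of any SQL Azure API.
    """
    low_member: List[Row] = []
    high_member: List[Row] = []
    for row in rows:
        # Assumption: member ranges are low-inclusive and high-exclusive,
        # so the split value itself belongs to the upper member.
        if row[key] < split_at:
            low_member.append(row)
        else:
            high_member.append(row)
    return low_member, high_member


# Usage: mirror ALTER FEDERATION Orders_FS SPLIT AT(tenant_id=1000)
orders = [
    {"order_id": 1, "tenant_id": 42},
    {"order_id": 2, "tenant_id": 1000},
    {"order_id": 3, "tenant_id": 2500},
]
low, high = filtered_copy(orders, key="tenant_id", split_at=1000)
print(len(low), "row(s) below the split point,", len(high), "at or above it")
```

Reference tables are not filtered this way; as described above, they are cloned in full to both destinations.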

Once all changes are copied to the destination databases, a switchover happens that cuts existing connections and transactions in the source federation member and takes it offline. At this moment the app receives errors for ongoing transactions, thus the red dot in the app box. This behavior is similar to failover behavior in SQL Azure due to hardware failures or load balancing actions. Apps with retry logic handle these errors and recover without bubbling them up to users of the system.
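For apps that do not have retry logic yet, the idea can be sketched in a few lines of Python. This is a generic pattern under stated assumptions, not an official SQL Azure client API. `TransientConnectionError` is a hypothetical stand-in for whatever error your driver raises when a connection is cut, `run_with_retry` is a made-up helper name, and the exponential backoff schedule is my choice rather than anything the service prescribes.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


class TransientConnectionError(Exception):
    """Hypothetical stand-in for a driver error raised when a connection is cut."""


def run_with_retry(
    operation: Callable[[], T],
    retryable: Tuple[Type[BaseException], ...] = (TransientConnectionError,),
    max_attempts: int = 5,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `operation`, retrying on transient errors with exponential backoff.

    `operation` should open a fresh connection and redo the whole transaction,
    since the switchover cancels both the connection and the in-flight work.
    """
    attempt = 1
    while True:
        try:
            return operation()
        except retryable:
            if attempt >= max_attempts:
                raise  # give up and surface the error to the caller
            # Wait 0.5s, 1s, 2s, ... (with the defaults) before trying again.
            sleep(base_delay * 2 ** (attempt - 1))
            attempt += 1
```

Because the switchover is quick, a handful of short retries is usually a reasonable starting point. Tune `max_attempts` and `base_delay` to your own workload.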

Immediately following that, the destination members are marked online and start accepting connections. Given that all participants are ready and the switchover is simply a metadata operation, all of this happens very quickly in the system.

At the switchover, the source member is taken out of the federation metadata in the sys.federation* views, and the destination databases take over and start showing up in the federation metadata. The source database is also dropped as part of the switchover. The federation operation views (sys.dm_federation_operation*) mark the operation complete and are cleaned up.

There may be conditions that cause errors in the process. For example, one of the nodes hosting a destination database may fail. SQL Azure continues to retry under these conditions until the operation succeeds. SPLIT can also be run when the source member is over its size quota. However, waiting until you reach the size quota is not a great strategy, given that a split may take some time to execute. Let's dive into that as well.

Performance Considerations for Split Operation

There are many factors to consider when evaluating the performance of complex operations such as split. The above picture simplifies the view of SQL Azure databases and hides the 2 additional replicas maintained by SQL Azure for high availability. The replicas exist for both the source and the destination federation members and are built and maintained through the split operation. When considering the latency and performance of split, it is important to keep in mind that the source member's primary copy pushes data to its own 2 replicas for high availability and filter-copies to 6 additional replicas as part of the split operation.
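The replica counts are easier to see when spelled out. The small Python breakdown below only restates the numbers from the paragraph above. The constant names are mine, and it assumes a split at a single point produces exactly two destination members.

```python
# Each SQL Azure database is kept as 1 primary + 2 replicas (per the text above).
COPIES_PER_DATABASE = 3
# Assumption: SPLIT at one key value yields two destination members.
DESTINATION_MEMBERS = 2

# Regular high-availability traffic from the source primary to its own replicas.
source_ha_targets = COPIES_PER_DATABASE - 1
# Filtered copy traffic: every copy of every destination member must be built.
split_copy_targets = DESTINATION_MEMBERS * COPIES_PER_DATABASE
total_targets = source_ha_targets + split_copy_targets

print("source HA replicas:", source_ha_targets)
print("destination copies built by the split:", split_copy_targets)
print("total copies fed by the source primary:", total_targets)
```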

The size of the data that needs to be moved also plays a role in the execution time of the split operation. The amount of data to move is also affected by the rate of change at the source database during the filtered copy.

Failures are another factor that may affect the duration of split. If one of the participating nodes fails over, this causes retries and slows down the split operation.

The SQL Azure cluster also governs the concurrent copy operations across the nodes and may queue a split operation until a channel is acquired for the copy operation.

As always, if you have questions, reach out through the blog.

-cihan