Cloud Numerics and Latent Semantic Indexing, Now with Distributed Sparse Data

You may recall our earlier blog post about Latent Semantic Indexing and Analysis, where we analyzed a few hundred documents. With new features of “Cloud Numerics” Wave 2 we can repeat the analysis: not just for few hundred but for many thousands of documents. The key new features we will use are distributed sparse matrices and singular value decomposition for dimensionality reductions of sparse data. We’ll then use DataParallel.Apply to compute the cosine similarities between documents.

The dataset we use is derived from SEC-10K filings of companies for the year of 2010. There are 8944 documents in total; the number of terms is over 100,000. We have computed the tfidf term-document matrix and stored it as triplets (term number, document number, tfidf frequency). This matrix was computed using Hadoop and Mahout from pre-parsed filings, and then saved into a regular CSV file. We will use the “Cloud Numerics” CSV reader to read in this data. For those interested, “Cloud Numerics” can also read the triplets from the HDFS (Hadoop Distributed File System) format directly. This is a topic for another blog post.

Step 1: Preparation

To run the example, we recommend you deploy a Windows Azure cluster with two compute nodes, which you can do easily with the “Cloud Numerics” Deployment Utility. Alternatively, if you have a local computer with enough memory –-more than 8 GB free-– you can download the files (see next step) and attempt the example on that local computer.

We’ll be creating the application --method by method-- in the steps below.

1. Open Visual Studio, create a new Microsoft “Cloud Numerics” Application, and call it SparseLSI.

2. In the Solution Explorer frame, find the MSCloudNumericsApp project and add a reference to Microsoft.WindowsAzure.StorageClient assembly. We’ll need this assembly to read and write data to and from Azure storage.

3. Next, copy and paste the following piece of code to the Program.cs file as the skeleton of the application.

using System;

using System.Collections.Generic;

using System.Linq;

using System.Text;

using Microsoft.WindowsAzure;

using Microsoft.WindowsAzure.StorageClient;

using Microsoft.Numerics;

using msnl = Microsoft.Numerics.Local;

using msnd = Microsoft.Numerics.Distributed;

using Microsoft.Numerics.Mathematics;

using Microsoft.Numerics.Statistics;

using Microsoft.Numerics.LinearAlgebra;

using Microsoft.Numerics.DataParallelOps;

namespace MSCloudNumericsApp

{

public class Program

{

}

}

Step 2: Copy Data to Your Own Storage Account

The input data for this application is stored at cloudnumericslab storage account:

https://cloudnumericslab.blob.core.windows.net/lsidata/SEC_10K_2010.csv

and:

https://cloudnumericslab.blob.core.windows.net/lsidata/Docmapping_2010.csv

The methods we will use to read in data to distributed arrays do not support public access to blobs, so you must copy the data to your own Windows Azure storage account.

To do this, let’s add following methods to the “Cloud Numerics” application:

This routine instantiates a CloudBlobClient instance, given an account name and key:

static CloudBlobClient GetBlobClient(string accountName, string accountKey)

{

var storageAccountCredential = new StorageCredentialsAccountAndKey(accountName, accountKey);

var storageAccount = new CloudStorageAccount(storageAccountCredential, true);

return storageAccount.CreateCloudBlobClient();

}

This method enables your application to download and upload blobs between storage accounts:

static void CopyDataToMyBlob(CloudBlobClient blobClient, string originUri, string destContainerName, string destBlobName)

{

var originBlob = new Microsoft.WindowsAzure.StorageClient.CloudBlob(originUri);

var destContainer = blobClient.GetContainerReference(destContainerName);

destContainer.CreateIfNotExist();

var destBlob = destContainer.GetBlobReference(destBlobName);

destBlob.UploadText(originBlob.DownloadText());

}

The third method copies two blobs: the CSV file that holds the term-document matrix, and a list of document names that maps term-document matrix columns to specific companies.

static void CopySecData(CloudBlobClient blobClient, string myContainerName, string secBlobName, string mapBlobName)

{

var secUri = @"https://cloudnumericslab.blob.core.windows.net/lsidata/SEC_10K_2010.csv";

var mapUri = @"https://cloudnumericslab.blob.core.windows.net/lsidata/Docmapping_2010.csv";

CopyDataToMyBlob(blobClient, secUri, myContainerName, secBlobName);

CopyDataToMyBlob(blobClient, mapUri, myContainerName, mapBlobName);

}

Step 3: Read Data from the CSV File into a Sparse Matrix

Create a method that reads data in “triplet” format (row, column, value), slices it into three vectors, and then assembles it into a sparse matrix. As an input argument, our reader will take a class that implements the IParallelReader interface, because this will give us flexibility to use the same method for data stored locally or on Azure (this point becomes more clear in Step 9). The triplet data is shaped n-by-3 where n is total number of unique words in each document of the corpus. The slicing operation yields first, second, and third column as separate vectors, where the first two correspond to indices of terms and documents, respectively. To convert them into row and column indices, we cast the first two into long integers and then call the distributed sparse matrix constructor. Add the following method to the skeleton program from previous step.

static msnd.SparseMatrix<double> ReadTermDocMatrix(msnd.IO.IParallelReader<double> reader)

{

var triplet = msnd.IO.Loader.LoadData<double>(reader);

var rowIndices = triplet.GetSlice(new Slice(), 0).ConvertTo<long>();

var columnIndices = triplet.GetSlice(new Slice(), 1).ConvertTo<long>();

var nonzeroValues = triplet.GetSlice(new Slice(), 2).ConvertTo<double>();

return new msnd.SparseMatrix<double>(rowIndices, columnIndices, nonzeroValues);

}

Step 4: Read List of Documents

From the sparse matrix alone we cannot tell how columns map to filings by specific companies. To provide this mapping, we read in an indexed list of documents. This list was originally produced during the pre-processing of documents.

The following method reads the data file from Windows Azure to a string:

static List<string> ReadDocumentList(CloudBlobClient blobClient, string inputContainerName, string inputBlobName)

{

var resultContainer = blobClient.GetContainerReference(inputContainerName);

var resultBlob = resultContainer.GetBlobReference(inputBlobName);

return GetColumnToDocumentMapping(resultBlob.DownloadText());

}

This method converts the string into list of strings, one for each document:

static List<string> GetColumnToDocumentMapping(string documentList)

{

var rows = documentList.Split('\n');

List<string> columnToDocumentMap = new List<string>();

foreach (var row in rows)

{

var namenum = row.Split(new char[] { ',' });

columnToDocumentMap.Add(namenum[1].Substring(0,namenum[1].Length-1));

}

return columnToDocumentMap;

}

Step 5: Compute Sparse Singular Value Decomposition

The dimensionality of the term-document matrix is huge. In a sense, each document can be considered as a vector in t-dimensional space, where t is the number of words --over 100,000-- in the corpus. Also, the term-document matrix is very sparse, each document uses only a small subset of all available words. We, therefore, can reduce the dimensionality of the problem by applying singular value decomposition.

This SVD operation has three major effects that facilitate subsequent analysis by:

- Reducing noise.

- Grouping together features that explain most of the variance between documents.

- Reducing the dimensionality of the feature space and raw memory footprint of the data.

static msnd.NumericDenseArray<double> ReduceTermDocumentMatrix(msnd.SparseMatrix<double> termDocumentMatrix, long svdComponents)

{

// Compute sparse SVD

var svd = Decompositions.Svd(termDocumentMatrix, svdComponents);

msnd.NumericDenseArray<double> sMatrix = new msnd.NumericDenseArray<double>(svd.S.Shape[0], svd.S.Shape[0]);

sMatrix.Fill(0);

for (long i = 0; i < svd.S.Shape[0]; i++)

{

sMatrix[i, i] = svd.S[i];

}

// Return (V*S). Note that after this step, rows correspond to documents

return Operations.MatrixMultiply(svd.V, sMatrix);

}

The number of SVD components determines the reduced dimensionality. In this example we’ll specify a value of 100; you can experiment with different values. The output is a V*S matrix in reduced-dimensional subspace, which we will use for further analysis.

Step 6: Compute Cosine Similarities Between Documents

At this stage, each document is represented as a row vector in V*S matrix. Now, we’d like to find which documents are most similar to a specific document of interest.

To compare the documents we’ll use a cosine similarity measure. Let’s first add a class with a method that computes cosine similarity between a pair of documents. Note that the first vector is supplied as an argument to the constructor. The reason for this design is that we can then pass the cosine similarity method as an argument to the DataParallel.Apply(), to compute the similarity between a single document of interest and all other documents in parallel. We also mark the class as serializable so it can be passed to DataParallel.Apply().

// Method for computing cosine similarities between documents

[Serializable]

public class SimilarityComparer

{

msnl.NumericDenseArray<double> _documentVector1;

public SimilarityComparer(msnl.NumericDenseArray<double> documentVector1)

{

_documentVector1 = documentVector1;

}

public double CompareSimilarity(msnl.NumericDenseArray<double> documentVector2)

{

double sqnorm1 = ArrayMath.Sum(_documentVector1*_documentVector1);

double sqnorm2 = ArrayMath.Sum(documentVector2 * documentVector2);

return Operations.DotProduct(_documentVector1,documentVector2) / BasicMath.Sqrt(sqnorm1 * sqnorm2);

}

}

Then, we’ll add a method that first looks up the row index of document of interest and gets the slice (row vector) corresponding to that document. It then instantiates SimilarityComparer using that vector, and calls DataParallel.Apply with SimilarityComparer.CompareSimilarity as the method to execute in parallel against rows of the V*S matrix. We sort the results by their similarity comparison score, select the top 10, and write the result to a string.

static string GetSimilarDocuments(List<string> columnToDocumentMap, msnd.NumericDenseArray<double> sv, string interestingCompany)

{

int interestingDocIndex = columnToDocumentMap.IndexOf(interestingCompany);

if (interestingDocIndex == -1) { throw new System.ArgumentException("Company not found!"); }

StringBuilder output = new StringBuilder();

// Compute cosine similarity

var documentVector1 = sv.GetSlice( interestingDocIndex,new Slice());

var similarityComparer = new SimilarityComparer(documentVector1.ToLocalArray());

var similarityResult = DataParallel.Apply(similarityComparer.CompareSimilarity, sv.DimensionSplit(0));

var sortedSimilarity = ArrayMath.ArgSort(similarityResult.Flatten(), false, 0);

long selectedDocuments = 10;

for (long i = 0; i < selectedDocuments; i++)

{

output.Append(columnToDocumentMap[(int)sortedSimilarity.Index[i]] + ", " + sortedSimilarity.Value[i] + "\n");

}

return output.ToString();

}

Step 7: Write out Result

We write the results to a Windows Azure blob as a text file. The blob is made public, so you can view the results using URI http:\\ <myaccount> .cloudapp.net\lsioutput\lisresult.

static void CreateBlobAndUploadText(string text, CloudBlobClient blobClient, string containerName, string blobName)

{

var resultContainer = blobClient.GetContainerReference(containerName);

resultContainer.CreateIfNotExist();

var resultBlob = resultContainer.GetBlobReference(blobName);

// Make result blob publicly readable so it can be accessed using URI

// https://<accountName>.blob.core.windows.net/lsiresult/lsiresult

var resultPermissions = new BlobContainerPermissions();

resultPermissions.PublicAccess = BlobContainerPublicAccessType.Blob;

resultContainer.SetPermissions(resultPermissions);

resultBlob.UploadText(text);

}

Step 8: Put it All Together

Finally, we put together the pieces in a Main method of the application: we specify the input locations, load in data, reduce the term-doc matrix, select Microsoft Corp. as a company of interest, and find similar documents. We also added a Boolean “useAzure” to provide for the optional scenario where local input and output of data is needed for cases where you want to run the application on your workstation, not on Windows Azure.

Note that the strings accountName, accountKey need to be changed to hold the name and key of your own Windows Azure storage account. In case you intend to run on local workstation, ensure that localPath points to the folder that holds the input files.

public static void Main()

{

NumericsRuntime.Initialize();

// Update these to correct Azure storage account information and

// file locations on your system

string accountName = "myAccountName";

string accountKey = "myAccountKey";

string inputContainerName = "lsiinput";

string outputContainerName = "lsioutput";

string outputBlobName = "lsiresult";

// Update this path if running locally

string localPath = @"C:\Users\Public\Documents\";

// Not necessary to update file names

string secFileName = "SEC_10K_2010.csv";

string mapFileName = "Docmapping_2010.csv";

CloudBlobClient blobClient;

// Change to false to run locally

bool useAzure = true;

msnd.SparseMatrix<double> termDoc;

List<string> columnToDocumentMap;

// Read inputs

if (useAzure)

{

// Set up CloudBlobClient

blobClient = GetBlobClient(accountName, accountKey);

// Copy data from Cloud Numerics Lab public blob to your local account

CopySecData(blobClient, inputContainerName, secFileName, mapFileName);

columnToDocumentMap = ReadDocumentList(blobClient, inputContainerName, mapFileName);

var azureCsvReader = new msnd.IO.CsvSingleFromBlobReader<double>(accountName, accountKey, inputContainerName, secFileName, false);

termDoc = ReadTermDocMatrix(azureCsvReader);

}

else

{

blobClient = null;

var dataPath = localPath + secFileName;

string documentList = System.IO.File.ReadAllText(localPath + mapFileName);

columnToDocumentMap = GetColumnToDocumentMapping(documentList);

var localCsvReader = new msnd.IO.CsvFromDiskReader<double>(dataPath, 0, 0, false);

termDoc = ReadTermDocMatrix(localCsvReader);

}

// Perform analysis

long svdComponents = 100;

var sv = ReduceTermDocumentMatrix(termDoc, svdComponents);

string interestingCompany = "MICROSOFT_CORP_2010";

string output = GetSimilarDocuments(columnToDocumentMap, sv, interestingCompany);

if (useAzure)

{

CreateBlobAndUploadText(output, blobClient, outputContainerName, outputBlobName);

}

else

{

System.IO.File.WriteAllText(localPath + "lsiresult.csv", output);

}

Console.WriteLine("Done!");

NumericsRuntime.Shutdown();

}

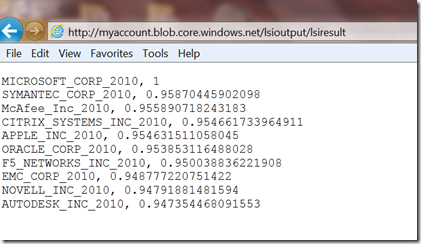

Once all pieces are together, you should be ready to build and deploy the application. In Visual Studio’s Solution Explorer, Set AppConfigure Project as the StartUp Project, build and run the application, and use the “Cloud Numerics” Deployment Utility to deploy to Windows Azure. After a successful run, the resulting cosine similarities should look like the following:

The algorithm has produced a list of technology companies as one would expect. As a sanity check, note that MICROSOFT_CORP_2010 has similarity score of 1 with itself.

This concludes the example; the full example code is available as a Visual Studio 2010 solution at Microsoft Codename “Cloud Numerics” download site.

Comments

- Anonymous

February 26, 2014

The comment has been removed